Gargya Gokhale

Control Policy Correction Framework for Reinforcement Learning-based Energy Arbitrage Strategies

Apr 30, 2024

Abstract: A continuous rise in the penetration of renewable energy sources, along with the use of single imbalance pricing, provides a new opportunity for balance responsible parties to reduce their costs through energy arbitrage in the imbalance settlement mechanism. Model-free reinforcement learning (RL) methods are an appropriate choice for solving the energy arbitrage problem due to their outstanding performance in solving complex stochastic sequential problems. However, RL is rarely deployed in real-world applications since its learned policy does not necessarily guarantee safety during the execution phase. In this paper, we propose a new RL-based control framework for batteries to obtain a safe energy arbitrage strategy in the imbalance settlement mechanism. In our proposed control framework, the agent initially aims to optimize the arbitrage revenue. Subsequently, in the post-processing step, we correct (constrain) the learned policy following a knowledge distillation process based on properties that follow human intuition. Our post-processing step is a generic method and is not restricted to the energy arbitrage domain. We use the Belgian imbalance prices of 2023 to evaluate the performance of our proposed framework. Furthermore, we deploy our proposed control framework on a real battery to show its capability in the real world.

Distill2Explain: Differentiable decision trees for explainable reinforcement learning in energy application controllers

Mar 18, 2024

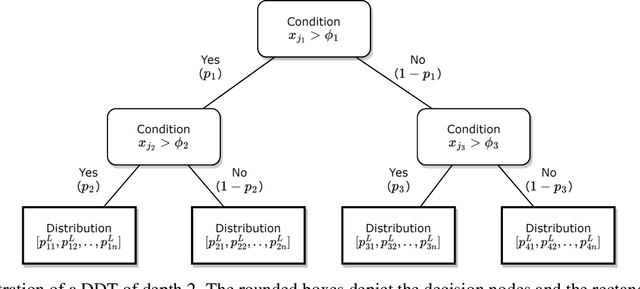

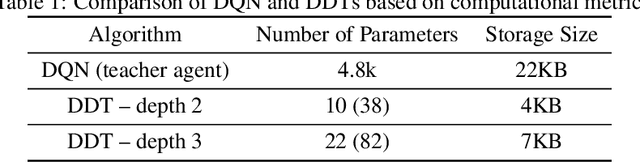

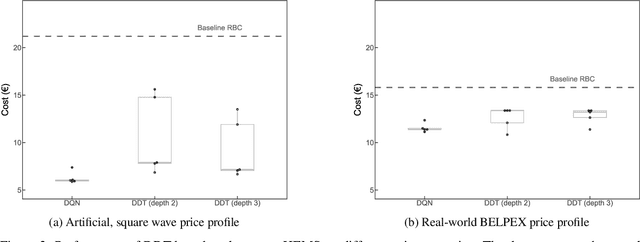

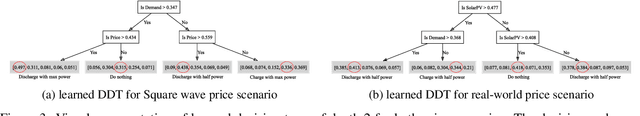

Abstract: Demand-side flexibility is gaining importance as a crucial element in the energy transition process. Accounting for about 25% of final energy consumption globally, the residential sector is an important (potential) source of energy flexibility. However, unlocking this flexibility requires developing a control framework that (1) easily scales across different houses, (2) is easy to maintain, and (3) is simple to understand for end-users. A potential control framework for such a task is data-driven control, specifically model-free reinforcement learning (RL). Such RL-based controllers learn a good control policy by interacting with their environment, learning purely based on data and with minimal human intervention. Yet, they lack explainability, which hampers user acceptance. Moreover, the limited hardware capabilities of residential assets form a hurdle (e.g., for using deep neural networks). To overcome both of these challenges, we propose a novel method to obtain explainable RL policies by using differentiable decision trees. Using a policy distillation approach, we train these differentiable decision trees to mimic standard RL-based controllers, leading to a decision tree-based control policy that is data-driven and easy to explain. As a proof-of-concept, we examine the performance and explainability of our proposed approach in a battery-based home energy management system to reduce energy costs. For this use case, we show that our proposed approach can outperform baseline rule-based policies by about 20-25%, while providing simple, explainable control policies. We further compare these explainable policies with standard RL policies and examine the performance trade-offs associated with this increased explainability.
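To make the idea of a differentiable decision tree more concrete, the following is a minimal sketch of the forward pass of a "soft" tree, in which each internal node routes the input left or right with a sigmoid probability instead of a hard threshold. It is an illustration under assumptions, not the paper's implementation: the function names, the breadth-first node layout, and the interpretation of the leaves as battery power setpoints are all hypothetical. Because the output is a smooth function of the node weights and leaf values, such a tree could in principle be fitted by gradient descent to imitate a teacher policy, which is not shown here.

```python
import math


def sigmoid(z):
    """Logistic function used as a soft (differentiable) split."""
    return 1.0 / (1.0 + math.exp(-z))


def soft_tree_output(x, nodes, leaves):
    """Forward pass of a complete binary soft decision tree.

    x      : list of input features (hypothetical, e.g. state of charge, price)
    nodes  : list of (weights, bias) per internal node, in breadth-first order
    leaves : list of leaf values (hypothetical, e.g. battery power setpoints);
             its length must be a power of two
    """
    probs = [1.0]  # probability of reaching each node of the current level
    idx = 0        # index of the next internal node to evaluate
    while len(probs) < len(leaves):
        next_probs = []
        for p in probs:
            w, b = nodes[idx]
            go_left = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            next_probs.extend([p * go_left, p * (1.0 - go_left)])
            idx += 1
        probs = next_probs
    # The output is the probability-weighted average of the leaf values.
    return sum(p * v for p, v in zip(probs, leaves))


# Depth-1 example: an undecided split averages the two leaves.
undecided = soft_tree_output([1.0], [([0.0], 0.0)], [2.0, 4.0])
# A steep split behaves almost like a hard decision rule.
sharp_left = soft_tree_output([1.0], [([100.0], 0.0)], [2.0, 4.0])
sharp_right = soft_tree_output([-1.0], [([100.0], 0.0)], [2.0, 4.0])
```

When the learned weights become steep, each node approaches a plain "if feature above threshold" rule, which is what makes the resulting policy easy to read.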

Explainable Reinforcement Learning-based Home Energy Management Systems using Differentiable Decision Trees

Mar 18, 2024

Abstract: With the ongoing energy transition, demand-side flexibility has become an important aspect of the modern power grid for providing grid support and allowing further integration of sustainable energy sources. Besides traditional sources, the residential sector is another major and largely untapped source of flexibility, driven by the increased adoption of solar PV, home batteries, and EVs. However, unlocking this residential flexibility is challenging as it requires a control framework that can effectively manage household energy consumption and maintain user comfort while being readily scalable across different, diverse houses. We aim to address this challenging problem and introduce a reinforcement learning-based approach using differentiable decision trees. This approach integrates the scalability of data-driven reinforcement learning with the explainability of (differentiable) decision trees. This leads to a controller that can be easily adapted across different houses and provides a simple control policy that can be explained to end-users, further improving user acceptance. As a proof-of-concept, we analyze our method using a home energy management problem, comparing its performance with a commercially available rule-based baseline and standard neural network-based RL controllers. Through this preliminary study, we show that the performance of our proposed method is comparable to standard RL-based controllers, outperforming baseline controllers by ~20% in terms of daily cost savings while being straightforward to explain.

Demand response for residential building heating: Effective Monte Carlo Tree Search control based on physics-informed neural networks

Dec 06, 2023

Abstract: Controlling energy consumption in buildings through demand response (DR) has become increasingly important to reduce global carbon emissions and limit climate change. In this paper, we specifically focus on controlling the heating system of a residential building to optimize its energy consumption while respecting the user's thermal comfort. Recent works in this area have mainly focused on either model-based control, e.g., model predictive control (MPC), or model-free reinforcement learning (RL) to implement practical DR algorithms. A specific RL method that recently has achieved impressive success in domains such as board games (Go, chess) is Monte Carlo Tree Search (MCTS). Yet, for building control it has remained largely unexplored. Thus, we study MCTS specifically for building demand response. Its natural structure allows a flexible optimization that implicitly integrates exogenous constraints (as opposed, for example, to conventional RL solutions), making MCTS a promising candidate for DR control problems. We demonstrate how to improve MCTS control performance by incorporating a Physics-informed Neural Network (PiNN) model for its underlying thermal state prediction, as opposed to traditional purely data-driven black-box approaches. Our MCTS implementation aligned with a PiNN model obtains a 3% increase in reward compared to a rule-based controller, leading to a 10% cost reduction and a 35% reduction in the temperature difference from the desired one when applied to an artificial price profile. We further implemented a deep learning layer into the Monte Carlo Tree Search technique using a neural network that leads the tree search through more optimal nodes. We then compared this addition with its vanilla version, showing the improvement in the computational cost required.

Real-World Implementation of Reinforcement Learning Based Energy Coordination for a Cluster of Households

Oct 29, 2023

Abstract: Given its substantial contribution of 40% to global power consumption, the built environment has received increasing attention to serve as a source of flexibility to assist the modern power grid. In that respect, previous research mainly focused on energy management of individual buildings. In contrast, in this paper, we focus on aggregated control of a set of residential buildings, to provide grid-supporting services, which eventually should include ancillary services. In particular, we present a real-life pilot study that examines the effectiveness of reinforcement learning (RL) in coordinating the power consumption of 8 residential buildings to jointly track a target power signal. Our RL approach relies solely on observed data from individual households and does not require any explicit building models or simulators, making it practical to implement and easy to scale. We show the feasibility of our proposed RL-based coordination strategy in a real-world setting. In a 4-week case study, we demonstrate a hierarchical control system, relying on an RL-based ranking system to select which households to activate flex assets from, and a real-time PI control-based power dispatch mechanism to control the selected assets. Our results demonstrate satisfactory power tracking, and the effectiveness of the RL-based ranks, which are learnt in a purely data-driven manner.
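The two-layer structure described in this abstract (a ranking that selects households, followed by PI-based dispatch) can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the pilot's actual controller: the scores dictionary stands in for the learned RL ranks, and the names `select_households` and `PIController`, the gains, and the household labels are all hypothetical.

```python
def select_households(scores, n_active):
    """Pick the n_active households with the highest score.

    scores : dict mapping household id to a score (a hypothetical
             stand-in for the ranks an RL agent would learn)
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:n_active]


class PIController:
    """Textbook discrete-time proportional-integral controller."""

    def __init__(self, kp, ki, dt):
        self.kp = kp          # proportional gain (assumed value)
        self.ki = ki          # integral gain (assumed value)
        self.dt = dt          # control time step
        self.integral = 0.0   # accumulated tracking error

    def step(self, target, measured):
        """Return the power correction for one control step."""
        error = target - measured
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


# Select two of three hypothetical households, then compute the
# aggregate correction needed to move from 6 kW towards a 10 kW target.
active = select_households({"a": 0.2, "b": 0.9, "c": 0.5}, n_active=2)
controller = PIController(kp=0.5, ki=0.1, dt=1.0)
correction = controller.step(target=10.0, measured=6.0)
# One simple (assumed) dispatch rule: share the correction equally.
per_household = correction / len(active)
```

Separating the slow decision (which households to use) from the fast one (how much power to request) keeps the learned component small while a well-understood controller handles real-time tracking.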

Transfer Learning in Transformer-Based Demand Forecasting For Home Energy Management System

Oct 29, 2023

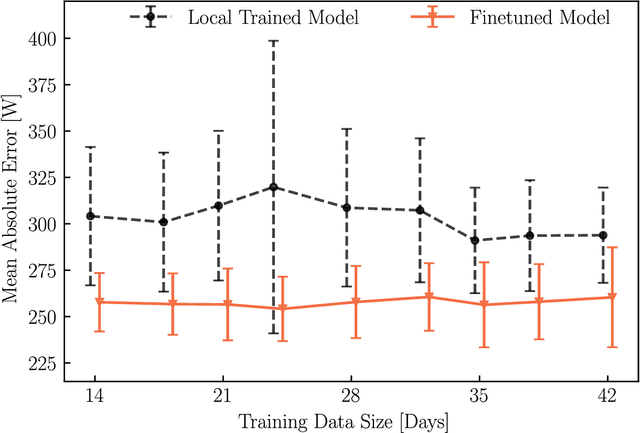

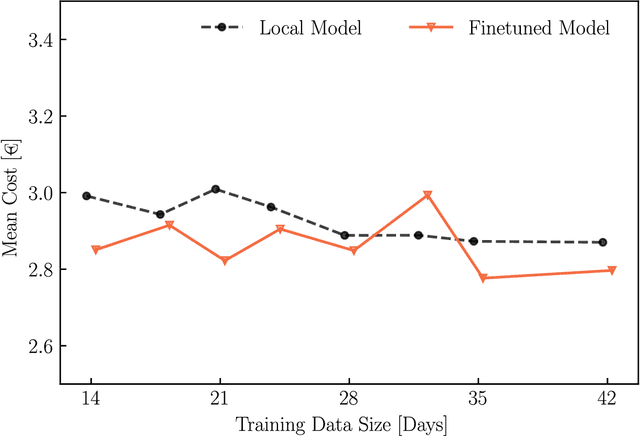

Abstract: Increasingly, homeowners opt for photovoltaic (PV) systems and/or battery storage to minimize their energy bills and maximize renewable energy usage. This has spurred the development of advanced control algorithms that maximally achieve those goals. However, a common challenge faced while developing such controllers is the unavailability of accurate forecasts of household power consumption, especially for shorter time resolutions (15 minutes) and in a data-efficient manner. In this paper, we analyze how transfer learning can help by exploiting data from multiple households to improve a single house's load forecasting. Specifically, we train an advanced forecasting model (a temporal fusion transformer) using data from multiple different households, and then finetune this global model on a new household with limited data (i.e., only a few days). The obtained models are used for forecasting power consumption of the household for the next 24 hours (day-ahead) at a time resolution of 15 minutes, with the intention of using these forecasts in advanced controllers such as Model Predictive Control. We show the benefit of this transfer learning setup versus solely using the individual new household's data, both in terms of (i) forecasting accuracy (~15% MAE reduction) and (ii) control performance (~2% energy cost reduction), using real-world household data.

Physics Informed Neural Networks for Control Oriented Thermal Modeling of Buildings

Nov 23, 2021

Abstract: This paper presents a data-driven modeling approach for developing control-oriented thermal models of buildings. These models are developed with the objective of reducing energy consumption costs while controlling the indoor temperature of the building within required comfort limits. To combine the interpretability of white/gray box physics models and the expressive power of neural networks, we propose a physics informed neural network approach for this modeling task. Along with measured data and building parameters, we encode the neural networks with the underlying physics that governs the thermal behavior of these buildings. The resulting physics-guided model aids in modeling the temporal evolution of room temperature and power consumption, as well as the hidden state, i.e., the temperature of the building thermal mass, for subsequent time steps. The main research contributions of this work are: (1) we propose two variants of physics informed neural network architectures for the task of control-oriented thermal modeling of buildings, (2) we show that training these architectures is data-efficient, requiring less training data compared to conventional, non-physics informed neural networks, and (3) we show that these architectures achieve more accurate predictions than conventional neural networks for longer prediction horizons. We test the prediction performance of the proposed architectures using simulated and real-world data to demonstrate (2) and (3) and show that the proposed physics informed neural network architectures can be used for this control-oriented modeling problem.
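One common way to "encode" physics into a learned model is to add a penalty that measures how far the predictions deviate from a simple physical law. The sketch below illustrates that general idea with a single-zone resistance-capacitance (RC) model; it is not the architecture proposed in the paper. The RC equation, the parameter names (`r`, `c`, `dt`, `lam`), and the example values are assumptions chosen for illustration, and the model predictions are passed in as plain lists rather than produced by a neural network.

```python
def rc_step(t_room, t_out, power, r, c, dt):
    """One explicit Euler step of an assumed single-zone RC model:
    C * dT/dt = (T_out - T_room) / R + P
    """
    return t_room + dt / c * ((t_out - t_room) / r + power)


def physics_informed_loss(pred_next, meas_next, t_room, t_out, power,
                          r, c, dt, lam=1.0):
    """Data-fit error plus a weighted physics-consistency penalty.

    pred_next : model predictions of the next room temperature
    meas_next : measured next room temperature
    t_room, t_out, power : current room temperature, outdoor
                           temperature, and heating power per sample
    lam : weight of the physics term (assumed hyperparameter)
    """
    n = len(pred_next)
    # Mean squared error against the measurements.
    data_term = sum((p - m) ** 2 for p, m in zip(pred_next, meas_next)) / n
    # Mean squared deviation from what the RC model would predict.
    physics_term = sum(
        (p - rc_step(t, to, pw, r, c, dt)) ** 2
        for p, t, to, pw in zip(pred_next, t_room, t_out, power)
    ) / n
    return data_term + lam * physics_term


# A room at 20 degrees with 10 degrees outside and no heating cools by 1 degree.
expected_next = rc_step(20.0, 10.0, 0.0, r=2.0, c=5.0, dt=1.0)
# A prediction matching both data and physics has zero loss.
zero_loss = physics_informed_loss([19.0], [19.0], [20.0], [10.0], [0.0],
                                  r=2.0, c=5.0, dt=1.0)
```

The physics term acts as a regularizer: when measurements are scarce, predictions that violate the assumed heat balance are still penalized, which is one intuition for why physics-guided training can need less data.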
