Distill2Explain: Differentiable decision trees for explainable reinforcement learning in energy application controllers

Paper and Code

Mar 18, 2024

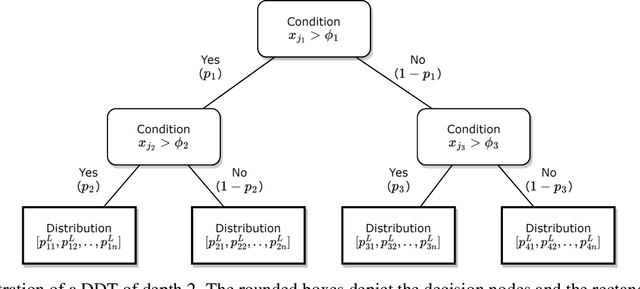

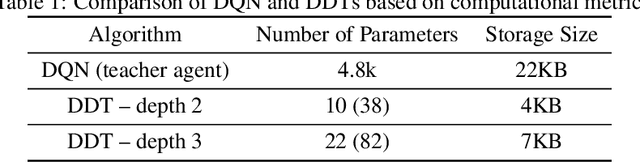

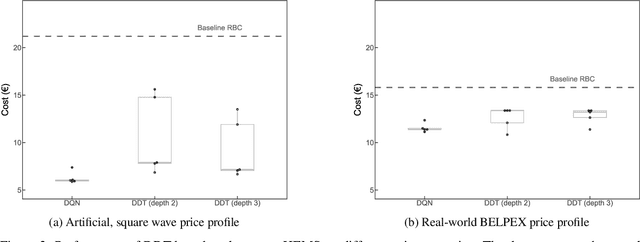

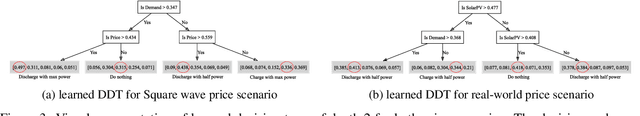

Demand-side flexibility is gaining importance as a crucial element in the energy transition process. Accounting for about 25% of final energy consumption globally, the residential sector is an important (potential) source of energy flexibility. However, unlocking this flexibility requires developing a control framework that (1) easily scales across different houses, (2) is easy to maintain, and (3) is simple to understand for end-users. A potential control framework for such a task is data-driven control, specifically model-free reinforcement learning (RL). Such RL-based controllers learn a good control policy by interacting with their environment, learning purely based on data and with minimal human intervention. Yet, they lack explainability, which hampers user acceptance. Moreover, limited hardware capabilities of residential assets forms a hurdle (e.g., using deep neural networks). To overcome both those challenges, we propose a novel method to obtain explainable RL policies by using differentiable decision trees. Using a policy distillation approach, we train these differentiable decision trees to mimic standard RL-based controllers, leading to a decision tree-based control policy that is data-driven and easy to explain. As a proof-of-concept, we examine the performance and explainability of our proposed approach in a battery-based home energy management system to reduce energy costs. For this use case, we show that our proposed approach can outperform baseline rule-based policies by about 20-25%, while providing simple, explainable control policies. We further compare these explainable policies with standard RL policies and examine the performance trade-offs associated with this increased explainability.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge