Gang Quan

MT-Spike: A Multilayer Time-based Spiking Neuromorphic Architecture with Temporal Error Backpropagation

Mar 14, 2018

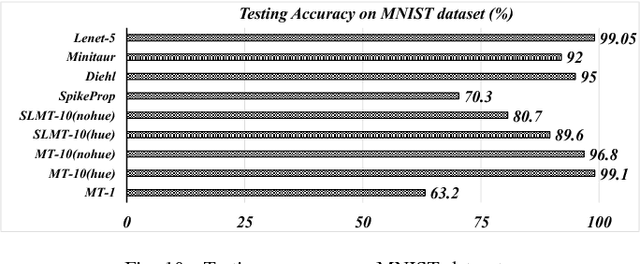

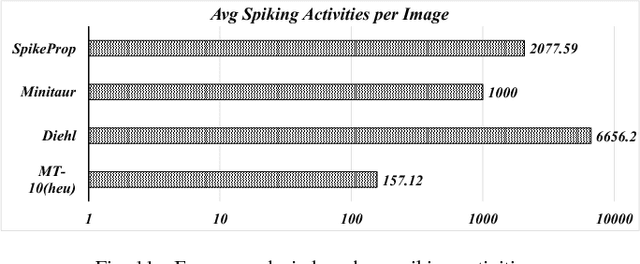

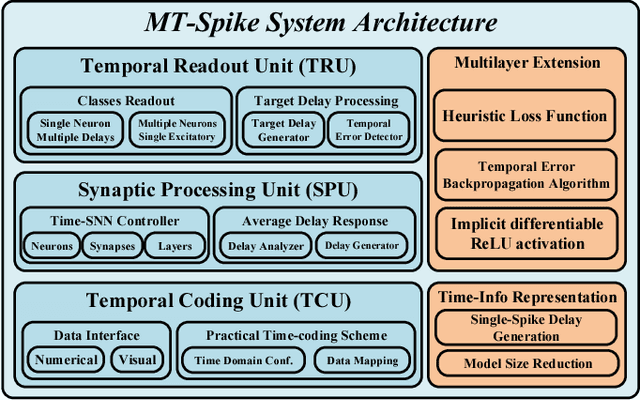

Abstract: Modern deep-learning-enabled artificial neural networks, such as the Deep Neural Network (DNN) and Convolutional Neural Network (CNN), have achieved a series of record-breaking results across a broad spectrum of recognition applications. However, the enormous computation and storage requirements of such deep and complex neural network models greatly challenge their implementation on resource-limited platforms. The time-based spiking neural network has recently emerged as a promising solution in neuromorphic computing system design for achieving remarkable computing and power efficiency within a single chip. However, the relevant research has concentrated narrowly on biological plausibility and theoretical learning approaches, resulting in inefficient neural processing and impracticable multilayer extension, and thus in significant limitations on speed and accuracy when handling realistic cognitive tasks. In this work, a practical multilayer time-based spiking neuromorphic architecture, namely "MT-Spike", is developed to fill this gap. With the proposed practical time-coding scheme, average delay response model, temporal error backpropagation algorithm, and heuristic loss function, "MT-Spike" achieves more efficient neural processing through flexible neural model size reduction while offering very competitive classification accuracy for realistic recognition tasks. Simulation results validate that the algorithmic power of deep multilayer learning can be seamlessly merged with the efficiency of a time-based spiking neuromorphic architecture, demonstrating the great potential of "MT-Spike" for resource- and power-constrained embedded platforms.

PT-Spike: A Precise-Time-Dependent Single Spike Neuromorphic Architecture with Efficient Supervised Learning

Mar 14, 2018

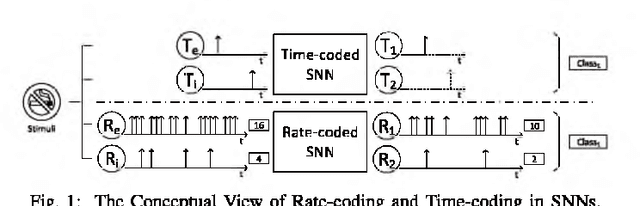

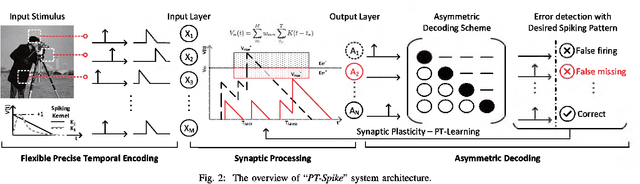

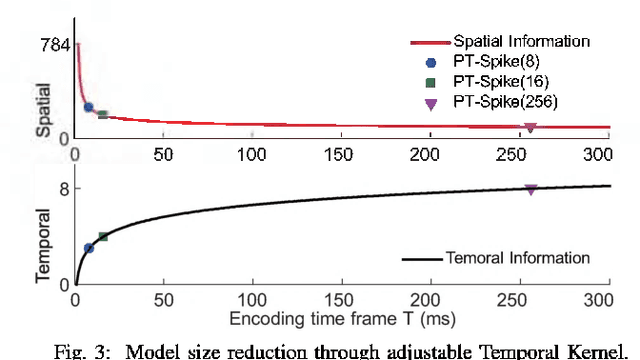

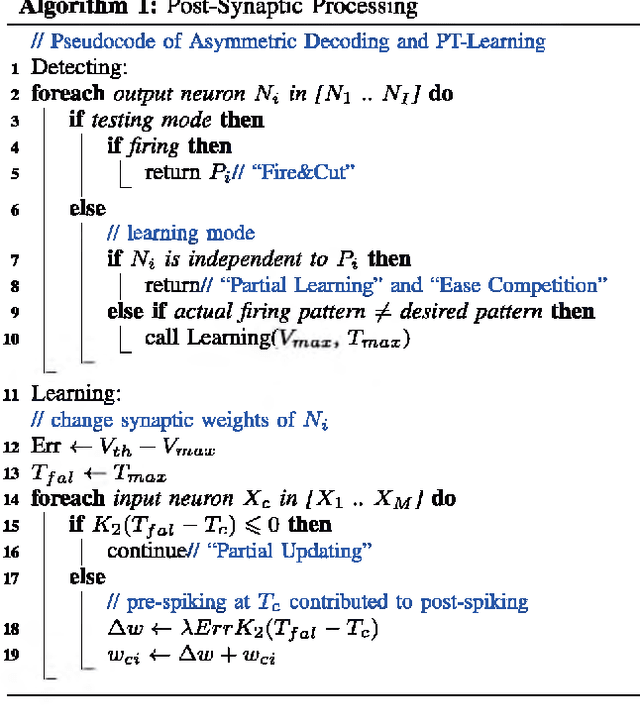

Abstract: One of the most exciting advancements in AI over the last decade is the wide adoption of ANNs, such as the DNN and CNN, in many real-world applications. However, the massive computation and storage requirements underlying them greatly challenge their applicability on resource-limited platforms such as drones, mobile phones, and IoT devices. The third generation of neural network models, the Spiking Neural Network (SNN), inspired by the working mechanism and efficiency of the human brain, has emerged as a promising solution for achieving more impressive computing and power efficiency within lightweight devices (e.g., a single chip). However, the relevant research has focused narrowly on conventional rate-based spiking system designs for practical cognitive tasks, underestimating the SNN's energy efficiency, throughput, and system flexibility. Although the time-based SNN can be more attractive conceptually, its potential has not been realized in realistic applications due to the lack of efficient coding and practical learning schemes. In this work, a Precise-Time-Dependent Single Spike Neuromorphic Architecture, namely "PT-Spike", is developed to bridge this gap. Three constituent hardware-favorable techniques (precise single-spike temporal encoding, efficient supervised temporal learning, and fast asymmetric decoding) are proposed to boost the energy efficiency and data processing capability of the time-based SNN at a more compact neural network model size when executing real cognitive tasks. Simulation results show that "PT-Spike" achieves significant improvements in network size, processing efficiency, and power consumption, with marginal degradation in classification accuracy, when compared with the rate-based SNN and ANN under a similar network configuration.

DeepN-JPEG: A Deep Neural Network Favorable JPEG-based Image Compression Framework

Mar 14, 2018

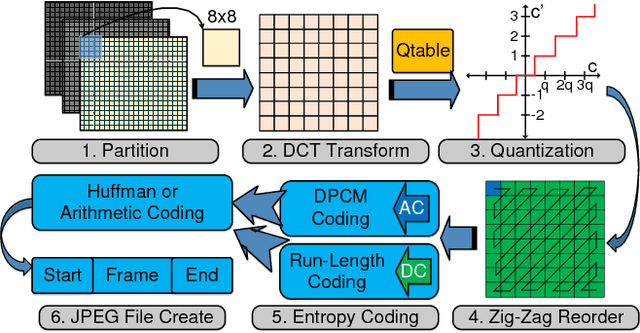

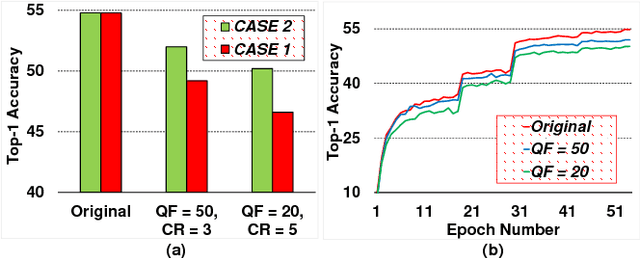

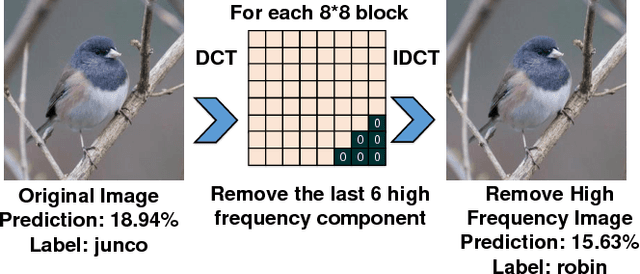

Abstract: As one of the most fascinating machine learning techniques, the deep neural network (DNN) has demonstrated excellent performance in various intelligent tasks such as image classification. The DNN achieves such performance, to a large extent, by performing expensive training over huge volumes of training data. To reduce the data storage and transfer overhead in smart, resource-limited Internet-of-Things (IoT) systems, effective data compression is a "must-have" feature before transferring data produced in real time for training or classification. While there are many well-known image compression approaches (such as JPEG), we find, for the first time, that a human-vision-based image compression approach such as JPEG is not an optimized solution for DNN systems, especially at high compression ratios. To this end, we develop an image compression framework tailored for DNN applications, named "DeepN-JPEG", to embrace the deep cascaded information processing mechanism of the DNN architecture. Extensive experiments, based on the "ImageNet" dataset with various state-of-the-art DNNs, show that "DeepN-JPEG" can achieve a ~3.5x higher compression rate than the popular JPEG solution while maintaining the same accuracy level for image recognition, demonstrating its great potential for storage and power efficiency in DNN-based smart IoT system design.
