Gan Ma

Robotic Perception with a Large Tactile-Vision-Language Model for Physical Property Inference

Jun 24, 2025

Abstract:Inferring physical properties can significantly enhance robotic manipulation by enabling robots to handle objects safely and efficiently through adaptive grasping strategies. Previous approaches have typically relied on either tactile or visual data, limiting their ability to fully capture properties. We introduce a novel cross-modal perception framework that integrates visual observations with tactile representations within a multimodal vision-language model. Our physical reasoning framework, which employs a hierarchical feature alignment mechanism and a refined prompting strategy, enables our model to make property-specific predictions that strongly correlate with ground-truth measurements. Evaluated on 35 diverse objects, our approach outperforms existing baselines and demonstrates strong zero-shot generalization.

Keywords: tactile perception, visual-tactile fusion, physical property inference, multimodal integration, robot perception

NeRF-Based Transparent Object Grasping Enhanced by Shape Priors

Apr 14, 2025

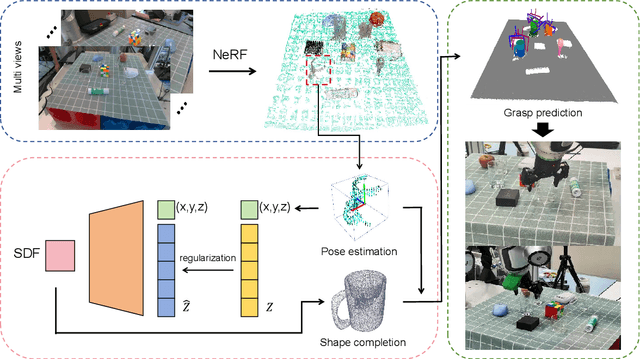

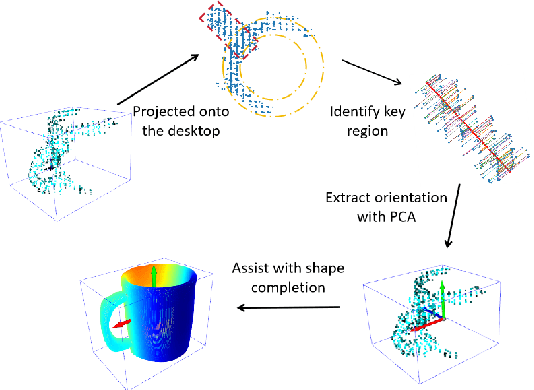

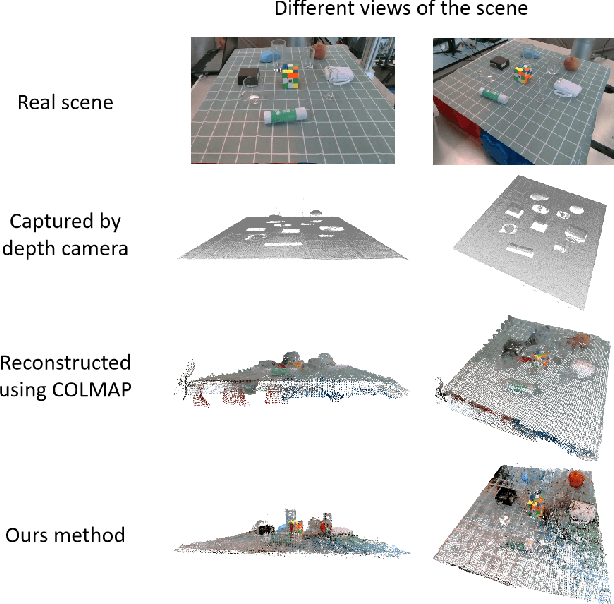

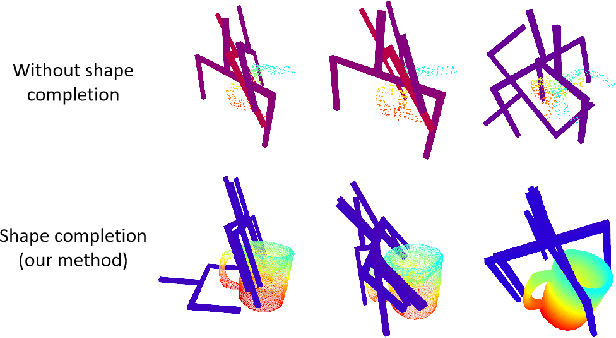

Abstract:Transparent object grasping remains a persistent challenge in robotics, largely due to the difficulty of acquiring precise 3D information. Conventional optical 3D sensors struggle to capture transparent objects, and machine learning methods are often hindered by their reliance on high-quality datasets. Leveraging NeRF's capability for continuous spatial opacity modeling, our proposed architecture integrates a NeRF-based approach for reconstructing the 3D information of transparent objects. Despite this, certain portions of the reconstructed 3D information may remain incomplete. To address these deficiencies, we introduce a shape-prior-driven completion mechanism, further refined by a geometric pose estimation method we have developed. This allows us to obtain complete and reliable 3D information of transparent objects. Utilizing this refined data, we perform scene-level grasp prediction and deploy the results in real-world robotic systems. Experimental validation demonstrates the efficacy of our architecture, showcasing its capability to reliably capture 3D information of various transparent objects in cluttered scenes and, correspondingly, to achieve high-quality, stable, and executable grasp predictions.

An Onboard Framework for Staircases Modeling Based on Point Clouds

May 03, 2024

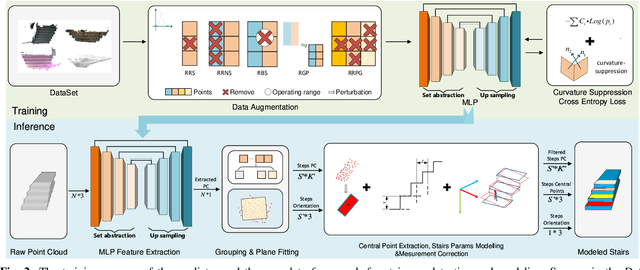

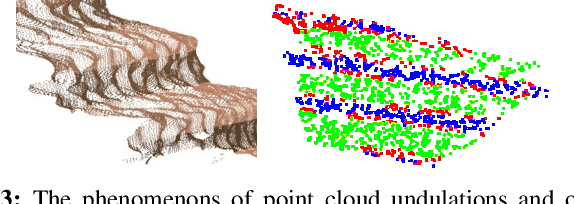

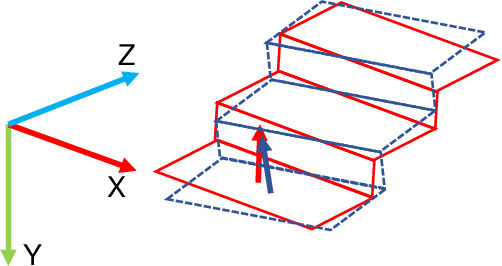

Abstract:The detection of traversable regions on staircases and their physical modeling are pivotal to the mobility of legged robots. This paper presents an onboard framework tailored to the detection of traversable regions and the modeling of physical attributes of staircases from point cloud data. To mitigate the influence of illumination variations and the overfitting due to the dataset diversity, a series of data augmentations is introduced to enhance the training of the fundamental network. A curvature suppression cross-entropy (CSCE) loss is proposed to reduce the ambiguity of prediction on the boundary between traversable and non-traversable regions. Moreover, a measurement correction based on the pose estimation of stairs is introduced to calibrate the output of raw modeling that is influenced by tilted perspectives. Lastly, we collect a dataset pertaining to staircases and introduce new evaluation criteria. Through a series of rigorous experiments conducted on this dataset, we substantiate the superior accuracy and generalization capabilities of our proposed method. Code, models, and datasets will be available at https://github.com/szturobotics/Stair-detection-and-modeling-project.
