Gabi Dreo Rodosek

Detection Avoidance Techniques for Large Language Models

Mar 10, 2025

Abstract: The increasing popularity of large language models has not only led to widespread use but has also brought various risks, including the potential for systematically spreading fake news. Consequently, the development of classification systems such as DetectGPT has become vital. These detectors are vulnerable to evasion techniques, as demonstrated in an experimental series: Systematic changes of the generative models' temperature showed shallow-learning detectors to be the least reliable. Fine-tuning the generative model via reinforcement learning circumvented BERT-based detectors. Finally, rephrasing led to a >90% evasion of zero-shot detectors like DetectGPT, although texts stayed highly similar to the original. A comparison with existing work highlights the better performance of the presented methods. Possible implications for society and further research are discussed.

How well can machine-generated texts be identified and can language models be trained to avoid identification?

Oct 25, 2023

Abstract: With the rise of generative pre-trained transformer models such as GPT-3, GPT-NeoX, or OPT, distinguishing human-generated texts from machine-generated ones has become important. We refined five separate language models to generate synthetic tweets, uncovering that shallow learning classification algorithms, like Naive Bayes, achieve detection accuracy between 0.6 and 0.8. Shallow learning classifiers differ from human-based detection, especially when using higher temperature values during text generation, resulting in a lower detection rate. Humans prioritize linguistic acceptability, which tends to be higher at lower temperature values. In contrast, transformer-based classifiers have an accuracy of 0.9 and above. We found that using a reinforcement learning approach to refine our generative models can successfully evade BERT-based classifiers, reducing their detection accuracy to 0.15 or less.

Universal Adversarial Perturbations for Malware

Feb 12, 2021

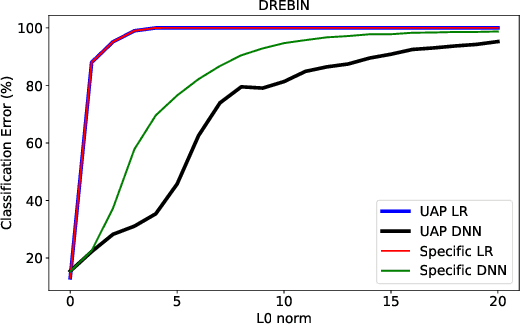

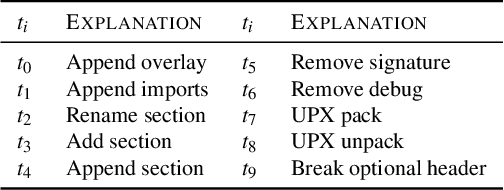

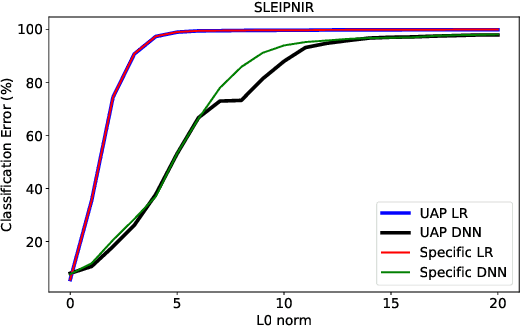

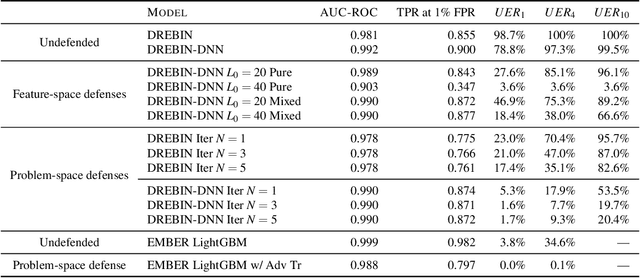

Abstract: Machine learning classification models are vulnerable to adversarial examples: effective input-specific perturbations that can manipulate the model's output. Universal Adversarial Perturbations (UAPs), which identify noisy patterns that generalize across the input space, allow the attacker to greatly scale up the generation of these adversarial examples. Although UAPs have been explored in application domains beyond computer vision, little is known about their properties and implications in the specific context of realizable attacks, such as malware, where attackers must reason about satisfying challenging problem-space constraints. In this paper, we explore the challenges and strengths of UAPs in the context of malware classification. We generate sequences of problem-space transformations that induce UAPs in the corresponding feature-space embedding and evaluate their effectiveness across threat models that consider a varying degree of realistic attacker knowledge. Additionally, we propose adversarial training-based mitigations using knowledge derived from the problem-space transformations, and compare against alternative feature-space defenses. Our experiments limit the effectiveness of a white-box Android evasion attack to ~20% at the cost of 3% TPR at 1% FPR. We additionally show how our method can be adapted to more restrictive application domains such as Windows malware. We observe that while adversarial training in the feature space must deal with large and often unconstrained regions, UAPs in the problem space identify specific vulnerabilities that allow us to harden a classifier more effectively, shifting the challenges and associated cost of identifying new universal adversarial transformations back to the attacker.

A Novel Multi-Agent System for Complex Scheduling Problems

Apr 20, 2020

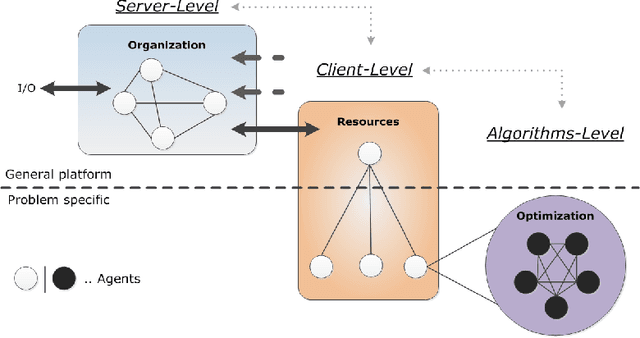

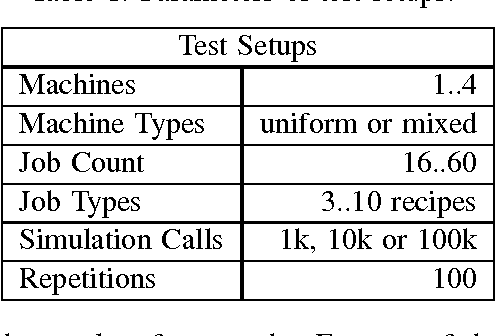

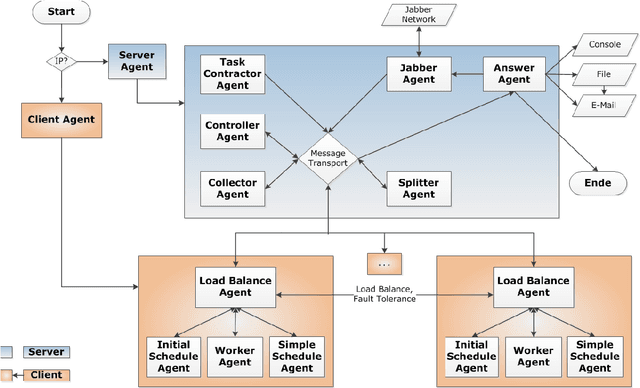

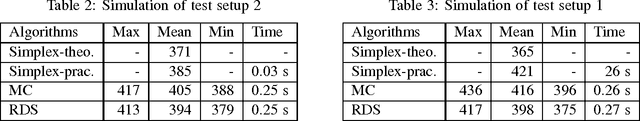

Abstract: Complex scheduling problems require a large amount of computational power and innovative solution methods. The objective of this paper is the conception and implementation of a multi-agent system that is applicable in various problem domains. Independent specialized agents handle small tasks to reach a superordinate target. Effective coordination is therefore required to achieve productive cooperation. Role models and distributed artificial intelligence are employed to tackle the resulting challenges. We simulate an NP-hard scheduling problem to demonstrate the validity of our approach. In addition to the general agent-based framework, we propose new simulation-based optimization heuristics for given scheduling problems. Two of the described optimization algorithms are implemented using agents. This paper highlights the advantages of the agent-based approach, such as the reduction in layout complexity, improved control of complicated systems, and extendability.
