Frederik Zuiderveen Borgesius

Mitigating Digital Discrimination in Dating Apps -- The Dutch Breeze case

Sep 24, 2024

Abstract: In September 2023, the Netherlands Institute for Human Rights, the Dutch non-discrimination authority, decided that Breeze, a Dutch dating app, was justified in suspecting that their algorithm discriminated against non-white users. Consequently, the Institute decided that Breeze must prevent this discrimination based on ethnicity. This paper explores two questions. (i) Is the discrimination based on ethnicity in Breeze's matching algorithm illegal? (ii) How can dating apps mitigate or stop discrimination in their matching algorithms? We illustrate the legal and technical difficulties dating apps face in tackling discrimination and illustrate promising solutions. We analyse the Breeze decision in-depth, combining insights from computer science and law. We discuss the implications of this judgment for scholarship and practice in the field of fair and non-discriminatory machine learning.

Fairness and Bias in Algorithmic Hiring

Sep 25, 2023

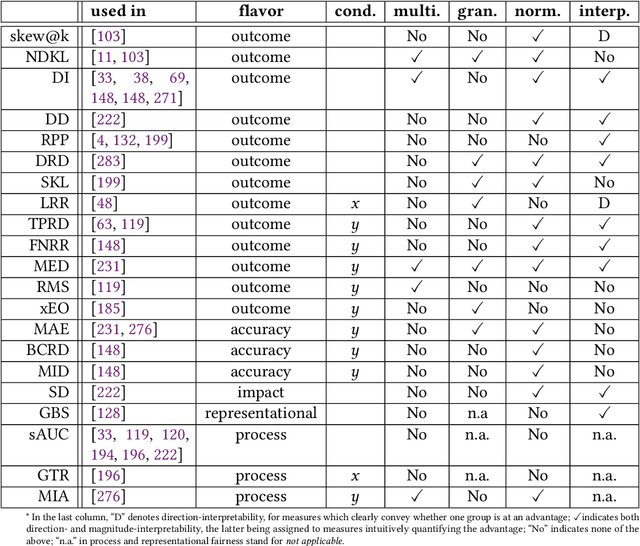

Abstract: Employers are adopting algorithmic hiring technology throughout the recruitment pipeline. Algorithmic fairness is especially applicable in this domain due to its high stakes and structural inequalities. Unfortunately, most work in this space provides partial treatment, often constrained by two competing narratives, optimistically focused on replacing biased recruiter decisions or pessimistically pointing to the automation of discrimination. Whether, and more importantly what types of, algorithmic hiring can be less biased and more beneficial to society than low-tech alternatives currently remains unanswered, to the detriment of trustworthiness. This multidisciplinary survey caters to practitioners and researchers with a balanced and integrated coverage of systems, biases, measures, mitigation strategies, datasets, and legal aspects of algorithmic hiring and fairness. Our work supports a contextualized understanding and governance of this technology by highlighting current opportunities and limitations, providing recommendations for future work to ensure shared benefits for all stakeholders.

Targeted and Troublesome: Tracking and Advertising on Children's Websites

Aug 09, 2023

Abstract: On the modern web, trackers and advertisers frequently construct and monetize users' detailed behavioral profiles without consent. Despite various studies on web tracking mechanisms and advertisements, there has been no rigorous study focusing on websites targeted at children. To address this gap, we present a measurement of tracking and (targeted) advertising on websites directed at children. Motivated by the lack of a comprehensive list of child-directed (i.e., targeted at children) websites, we first build a multilingual classifier based on web page titles and descriptions. Applying this classifier to over two million pages, we compile a list of two thousand child-directed websites. Crawling these sites from five vantage points, we measure the prevalence of trackers, fingerprinting scripts, and advertisements. Our crawler detects ads displayed on child-directed websites and determines if ad targeting is enabled by scraping ad disclosure pages whenever available. Our results show that around 90% of child-directed websites embed one or more trackers, and about 27% contain targeted advertisements--a practice that should require verifiable parental consent. Next, we identify improper ads on child-directed websites by developing an ML pipeline that processes both images and text extracted from ads. The pipeline allows us to run semantic similarity queries for arbitrary search terms, revealing ads that promote services related to dating, weight loss, and mental health, as well as ads for sex toys and flirting chat services. Some of these ads feature repulsive and sexually explicit imagery. In summary, our findings indicate a trend of non-compliance with privacy regulations and troubling ad safety practices among many advertisers and child-directed websites. To protect children and create a safer online environment, regulators and stakeholders must adopt and enforce more stringent measures.

Demystifying the Draft EU Artificial Intelligence Act

Jul 20, 2021

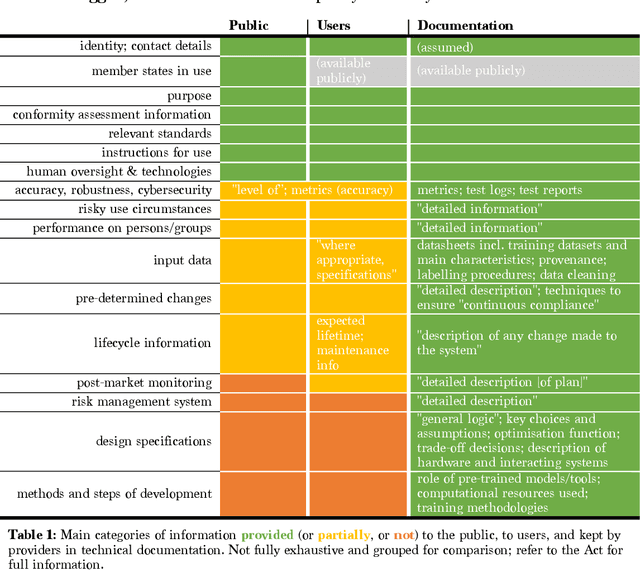

Abstract: In April 2021, the European Commission proposed a Regulation on Artificial Intelligence, known as the AI Act. We present an overview of the Act and analyse its implications, drawing on scholarship ranging from the study of contemporary AI practices to the structure of EU product safety regimes over the last four decades. Aspects of the AI Act, such as different rules for different risk-levels of AI, make sense. But we also find that some provisions of the Draft AI Act have surprising legal implications, whilst others may be largely ineffective at achieving their stated goals. Several overarching aspects, including the enforcement regime and the risks of maximum harmonisation pre-empting legitimate national AI policy, engender significant concern. These issues should be addressed as a priority in the legislative process.

* 16 pages, 1 table
