Francis wyffels

Instrumentation for Better Demonstrations: A Case Study

Apr 25, 2025

Abstract:Learning from demonstrations is a powerful paradigm for robot manipulation, but its effectiveness hinges on both the quantity and quality of the collected data. In this work, we present a case study of how instrumentation, i.e. integration of sensors, can improve the quality of demonstrations and automate data collection. We instrument a squeeze bottle with a pressure sensor to learn a liquid dispensing task, enabling automated data collection via a PI controller. Transformer-based policies trained on automated demonstrations outperform those trained on human data in 78% of cases. Our findings indicate that instrumentation not only facilitates scalable data collection but also leads to better-performing policies, highlighting its potential in the pursuit of generalist robotic agents.

Self-Mixing Laser Interferometry: In Search of an Ambient Noise-Resilient Alternative to Acoustic Sensing

Apr 18, 2025

Abstract:Self-mixing interferometry (SMI) has been lauded for its sensitivity in detecting microvibrations, while requiring no physical contact with its target. Microvibrations, i.e., sounds, have recently been used as a salient indicator of extrinsic contact in robotic manipulation. In previous work, we presented a robotic fingertip using SMI for extrinsic contact sensing as an ambient-noise-resilient alternative to acoustic sensing. Here, we extend the validation experiments to the frequency domain. We find that for broadband ambient noise, SMI still outperforms acoustic sensing, but the difference is less pronounced than in time-domain analyses. For targeted noise disturbances, analogous to multiple robots simultaneously collecting data for the same task, SMI is still the clear winner. Lastly, we show how motor noise affects SMI sensing more so than acoustic sensing, and that a higher SMI readout frequency is important for future work. Design and data files are available at https://github.com/RemkoPr/icra2025-SMI-tactile-sensing.

Self-Mixing Laser Interferometry for Robotic Tactile Sensing

Feb 21, 2025

Abstract:Self-mixing interferometry (SMI) has been lauded for its sensitivity in detecting microvibrations, while requiring no physical contact with its target. In robotics, microvibrations have traditionally been interpreted as a marker for object slip, and recently as a salient indicator of extrinsic contact. We present the first-ever robotic fingertip making use of SMI for slip and extrinsic contact sensing. The design is validated through measurement of controlled vibration sources, both before and after encasing the readout circuit in its fingertip package. Then, the SMI fingertip is compared to acoustic sensing through three experiments. The results are distilled into a technology decision map. SMI was found to be more sensitive to subtle slip events and significantly more robust against ambient noise. We conclude that the integration of SMI in robotic fingertips offers a new, promising branch of tactile sensing in robotics.

Evaluating Text-to-Image Diffusion Models for Texturing Synthetic Data

Nov 15, 2024

Abstract:Building generic robotic manipulation systems often requires large amounts of real-world data, which can be difficult to collect. Synthetic data generation offers a promising alternative, but limiting the sim-to-real gap requires significant engineering efforts. To reduce this engineering effort, we investigate the use of pretrained text-to-image diffusion models for texturing synthetic images and compare this approach with using random textures, a common domain randomization technique in synthetic data generation. We focus on generating object-centric representations, such as keypoints and segmentation masks, which are important for robotic manipulation and require precise annotations. We evaluate the efficacy of the texturing methods by training models on the synthetic data and measuring their performance on real-world datasets for three object categories: shoes, T-shirts, and mugs. Surprisingly, we find that texturing using a diffusion model performs on par with random textures, despite generating seemingly more realistic images. Our results suggest that, for now, using diffusion models for texturing does not benefit synthetic data generation for robotics. The code, data and trained models are available at https://github.com/tlpss/diffusing-synthetic-data.git.

Automatic Calibration for an Open-source Magnetic Tactile Sensor

May 28, 2024

Abstract:Tactile sensing can enable robots to perform complex, contact-rich tasks. Magnetic sensors offer accurate three-axis force measurements while using affordable materials. Calibrating such a sensor involves either manual data collection, or automated procedures with precise mounting of the sensor relative to an actuator. We present an open-source magnetic tactile sensor with an automatic, in situ, gripper-agnostic calibration method, after which the sensor is immediately ready for use. Our goal is to lower the barrier to entry for tactile sensing, fostering collaboration in robotics. Design files and readout code can be found at https://github.com/LowiekVDS/Open-source-Magnetic-Tactile-Sensor.

Learning Keypoints for Robotic Cloth Manipulation using Synthetic Data

Jan 03, 2024

Abstract:Assistive robots should be able to wash, fold or iron clothes. However, due to the variety, deformability and self-occlusions of clothes, creating general-purpose robot systems for cloth manipulation is challenging. Synthetic data is a promising direction to improve generalization, though its usability is often limited by the sim-to-real gap. To advance the use of synthetic data for cloth manipulation and to enable tasks such as robotic folding, we present a synthetic data pipeline to train keypoint detectors for almost flattened cloth items. To test its performance, we have also collected a real-world dataset. We train detectors for T-shirts, towels and shorts and obtain an average precision of 64.3%. Fine-tuning on real-world data improves performance to 74.2%. Additional insight is provided by discussing various failure modes of the keypoint detectors and by comparing different approaches to obtain cloth meshes and materials. We also quantify the remaining sim-to-real gap and argue that further improvements to the fidelity of cloth assets will be required to further reduce this gap. The code, dataset and trained models are available online.

Seamless Integration of Tactile Sensors for Cobots

Sep 11, 2023

Abstract:The development of tactile sensing is expected to enhance robotic systems in handling complex objects like deformables or reflective materials. However, readily available industrial grippers generally lack tactile feedback, which has led researchers to develop their own tactile sensors, resulting in a wide range of sensor hardware. Reading data from these sensors poses an integration challenge: either external wires must be routed along the robotic arm, or a wireless processing unit has to be fixed to the robot, increasing its size. We have developed a microcontroller-based sensor readout solution that seamlessly integrates with Robotiq grippers. Our Arduino compatible design takes away a major part of the integration complexity of tactile sensors and can serve as a valuable accelerator of research in the field. Design files and installation instructions can be found at https://github.com/RemkoPr/airo-halberd.

Augmenting Off-the-Shelf Grippers with Tactile Sensing

Jun 09, 2023

Abstract:The development of tactile sensing and its fusion with computer vision is expected to enhance robotic systems in handling complex tasks like deformable object manipulation. However, readily available industrial grippers typically lack tactile feedback, which has led researchers to develop and integrate their own tactile sensors. This has resulted in a wide range of sensor hardware, making it difficult to compare performance between different systems. We highlight the value of accessible open-source sensors and present a set of fingertips specifically designed for fine object manipulation, with readily interpretable data outputs. The fingertips are validated through two difficult tasks: cloth edge tracing and cable tracing. Videos of these demonstrations, as well as design files and readout code can be found at https://github.com/RemkoPr/icra-2023-workshop-tactile-fingertips.

Revisiting Proprioceptive Sensing for Articulated Object Manipulation

May 16, 2023

Abstract:Robots that assist humans will need to interact with articulated objects such as cabinets or microwaves. Early work on creating systems for doing so used proprioceptive sensing to estimate joint mechanisms during contact. However, nowadays, almost all systems use only vision and no longer consider proprioceptive information during contact. We believe that proprioceptive information during contact is a valuable source of information and did not find clear motivation for not using it in the literature. Therefore, in this paper, we create a system that, starting from a given grasp, uses proprioceptive sensing to open cabinets with a position-controlled robot and a parallel gripper. We perform a qualitative evaluation of this system, where we find that slip between the gripper and handle limits the performance. Nonetheless, we find that the system already performs quite well. This poses the question: should we make more use of proprioceptive information during contact in articulated object manipulation systems, or is it not worth the added complexity, and can we manage with vision alone? We do not have an answer to this question, but we hope to spark some discussion on the matter. The codebase and videos of the system are available at https://tlpss.github.io/revisiting-proprioception-for-articulated-manipulation/.

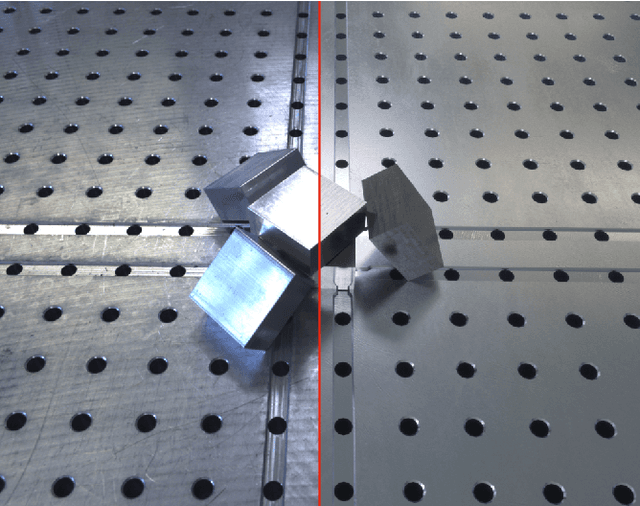

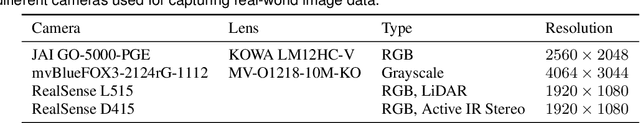

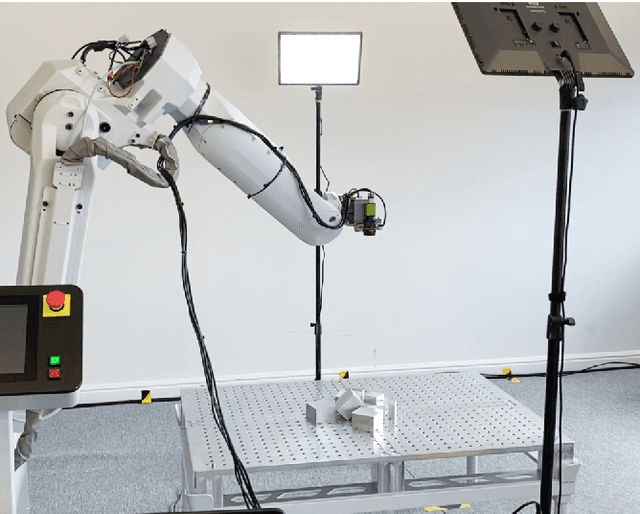

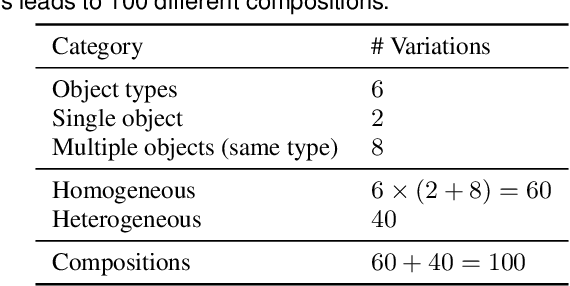

Dataset of Industrial Metal Objects

Aug 08, 2022

Abstract:We present a diverse dataset of industrial metal objects. These objects are symmetric, textureless and highly reflective, leading to challenging conditions not captured in existing datasets. Our dataset contains both real-world and synthetic multi-view RGB images with 6D object pose labels. Real-world data is obtained by recording multi-view images of scenes with varying object shapes, materials, carriers, compositions and lighting conditions. This results in over 30,000 images, accurately labelled using a new public tool. Synthetic data is obtained by carefully simulating real-world conditions and varying them in a controlled and realistic way. This leads to over 500,000 synthetic images. The close correspondence between synthetic and real-world data, and controlled variations, will facilitate sim-to-real research. Our dataset's size and challenging nature will facilitate research on various computer vision tasks involving reflective materials. The dataset and accompanying resources are made available on the project website at https://pderoovere.github.io/dimo.
