Victor-Louis De Gusseme

Learning Keypoints for Robotic Cloth Manipulation using Synthetic Data

Jan 03, 2024

Abstract: Assistive robots should be able to wash, fold or iron clothes. However, due to the variety, deformability and self-occlusions of clothes, creating general-purpose robot systems for cloth manipulation is challenging. Synthetic data is a promising direction to improve generalization, though its usability is often limited by the sim-to-real gap. To advance the use of synthetic data for cloth manipulation and to enable tasks such as robotic folding, we present a synthetic data pipeline to train keypoint detectors for almost flattened cloth items. To test its performance, we have also collected a real-world dataset. We train detectors for T-shirts, towels and shorts and obtain an average precision of 64.3%. Fine-tuning on real-world data improves performance to 74.2%. Additional insight is provided by discussing various failure modes of the keypoint detectors and by comparing different approaches to obtain cloth meshes and materials. We also quantify the remaining sim-to-real gap and argue that improvements to the fidelity of cloth assets will be required to reduce this gap further. The code, dataset and trained models are available online.

Learning Keypoints from Synthetic Data for Robotic Cloth Folding

May 13, 2022

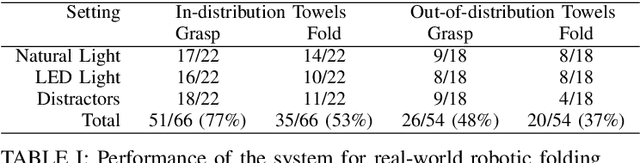

Abstract: Robotic cloth manipulation is challenging due to the deformability of cloth, which makes determining its full state infeasible. However, for cloth folding, it suffices to know the position of a few semantic keypoints. Convolutional neural networks (CNNs) can be used to detect these keypoints, but require large amounts of annotated data, which is expensive to collect. To overcome this, we propose to learn these keypoint detectors purely from synthetic data, enabling low-cost data collection. In this paper, we procedurally generate images of towels and use them to train a CNN. We evaluate the performance of this detector for folding towels on a unimanual robot setup and find that the grasp and fold success rates are 77% and 53%, respectively. We conclude that learning keypoint detectors from synthetic data for cloth folding and related tasks is a promising research direction, discuss some failures and relate them to future work. A video of the system, as well as the codebase and more details on the CNN architecture and the training setup, can be found at https://github.com/tlpss/workshop-icra-2022-cloth-keypoints.git.
