Francesco d'Amore

COATI

Polynomially Over-Parameterized Convolutional Neural Networks Contain Structured Strong Winning Lottery Tickets

Nov 16, 2023

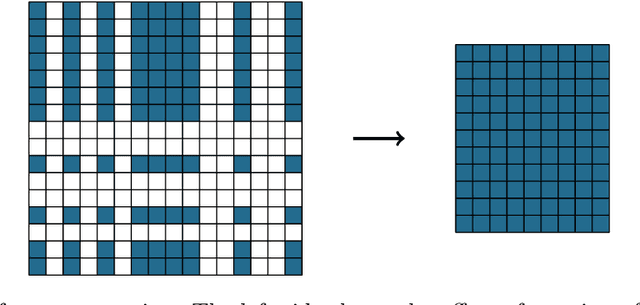

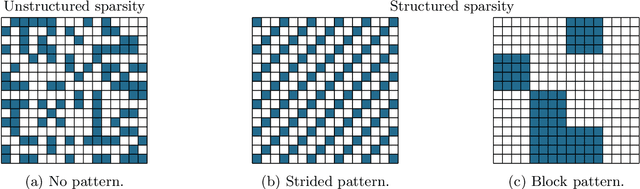

Abstract: The Strong Lottery Ticket Hypothesis (SLTH) states that randomly initialised neural networks likely contain subnetworks that perform well without any training. Although unstructured pruning has been extensively studied in this context, its structured counterpart, which can deliver significant computational and memory efficiency gains, has been largely unexplored. One of the main reasons for this gap is the limitations of the underlying mathematical tools used in formal analyses of the SLTH. In this paper, we overcome these limitations: we leverage recent advances in the multidimensional generalisation of the Random Subset-Sum Problem and obtain a variant that admits the stochastic dependencies that arise when addressing structured pruning in the SLTH. We apply this result to prove, for a wide class of random Convolutional Neural Networks, the existence of structured subnetworks that can approximate any sufficiently smaller network. This result provides the first sub-exponential bound around the SLTH for structured pruning, opening up new avenues for further research on the hypothesis and contributing to the understanding of the role of over-parameterization in deep learning.
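To make the distinction between unstructured and structured pruning concrete, here is a minimal sketch in plain Python. It assumes one common form of structured pruning, namely removing entire convolutional filters; whether this matches the paper's exact notion is an assumption, and all function names are hypothetical. The point it illustrates is that unstructured pruning leaves a sparse tensor of the original shape, while structured pruning yields a smaller dense tensor.

```python
import random


def random_conv_weights(num_filters, channels, k, rng):
    """Random weights indexed as [filter][channel][row][col] (illustrative layout)."""
    return [
        [[[rng.uniform(-1.0, 1.0) for _ in range(k)] for _ in range(k)]
         for _ in range(channels)]
        for _ in range(num_filters)
    ]


def shape(weights):
    """Shape of a non-empty 4-level nested list."""
    return (len(weights), len(weights[0]),
            len(weights[0][0]), len(weights[0][0][0]))


def prune_unstructured(weights, keep_prob, rng):
    """Zero out individual entries at random; the tensor shape is unchanged."""
    return [
        [[[w if rng.random() < keep_prob else 0.0 for w in row] for row in ch]
         for ch in f]
        for f in weights
    ]


def prune_structured(weights, keep_filters):
    """Keep only the listed filters (assumed form of structured pruning)."""
    keep = set(keep_filters)
    return [f for i, f in enumerate(weights) if i in keep]


rng = random.Random(0)
w = random_conv_weights(num_filters=4, channels=3, k=3, rng=rng)
sparse = prune_unstructured(w, keep_prob=0.5, rng=rng)
small = prune_structured(w, keep_filters=[0, 2])
print(shape(w), shape(sparse), shape(small))
```

The structured result can be stored and convolved as an ordinary smaller layer, which is where the efficiency gains mentioned in the abstract come from; the sparse result needs specialised kernels to realise any saving.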

On the Multidimensional Random Subset Sum Problem

Jul 28, 2022

Abstract: In the Random Subset Sum Problem, given $n$ i.i.d. random variables $X_1, ..., X_n$, we wish to approximate any point $z \in [-1,1]$ as the sum of a suitable subset $X_{i_1(z)}, ..., X_{i_s(z)}$ of them, up to error $\varepsilon$. Despite its simple statement, this problem is of fundamental interest to both theoretical computer science and statistical mechanics. More recently, it gained renewed attention for its implications in the theory of Artificial Neural Networks. An obvious multidimensional generalisation of the problem is to consider $n$ i.i.d. $d$-dimensional random vectors, with the objective of approximating every point $\mathbf{z} \in [-1,1]^d$. Rather surprisingly, after Lueker's 1998 proof that, in the one-dimensional setting, $n=O(\log \frac 1\varepsilon)$ samples guarantee the approximation property with high probability, little progress has been made on achieving the above generalisation. In this work, we prove that, in $d$ dimensions, $n = O(d^3\log \frac 1\varepsilon \cdot (\log \frac 1\varepsilon + \log d))$ samples suffice for the approximation property to hold with high probability. As an application highlighting the potential interest of this result, we prove that a recently proposed neural network model exhibits *universality*: with high probability, the model can approximate any neural network within a polynomial overhead in the number of parameters.
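The one-dimensional approximation property can be checked by brute force for small $n$. The sketch below is illustrative only: it assumes the samples are uniform on $[-1,1]$ (the abstract does not fix a distribution), the function names are hypothetical, and the enumeration takes time $2^n$, so it is a sanity check rather than anything resembling the proof technique.

```python
import random


def subset_sums(xs):
    """All 2**len(xs) subset sums of xs, including the empty sum 0."""
    sums = [0.0]
    for x in xs:
        sums += [s + x for s in sums]
    return sums


def covers_interval(xs, eps):
    """True iff every z in [-1, 1] lies within eps of some subset sum of xs.

    Sweeps the sorted subset sums and checks that the intervals
    [s - eps, s + eps] leave no gap inside [-1, 1].
    """
    reach = -1.0  # everything in [-1, reach] is covered so far
    for s in sorted(subset_sums(xs)):
        if s - eps > reach:
            return False  # points just above `reach` are uncovered
        reach = max(reach, s + eps)
        if reach >= 1.0:
            return True
    return reach >= 1.0


# Empirical frequency of the approximation property.
# Assumption: X_i uniform on [-1, 1]; n and eps are arbitrary demo values.
rng = random.Random(0)
n, eps, trials = 16, 0.01, 20
hits = sum(
    covers_interval([rng.uniform(-1.0, 1.0) for _ in range(n)], eps)
    for _ in range(trials)
)
print(f"n={n}, eps={eps}: property held in {hits}/{trials} trials")
```

Varying `n` for a fixed `eps` gives a rough feel for how many samples are needed before the property holds in most trials, which is the quantity the $O(\log \frac 1\varepsilon)$ bound controls.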

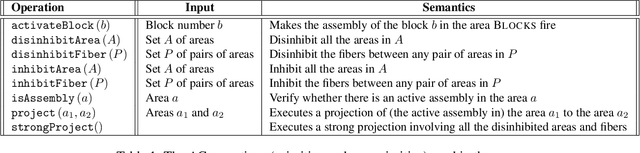

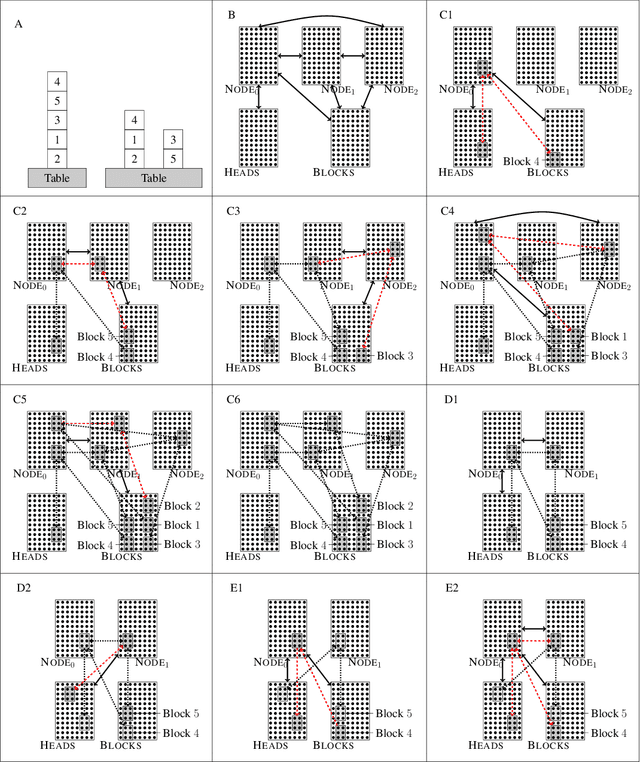

Planning with Biological Neurons and Synapses

Dec 16, 2021

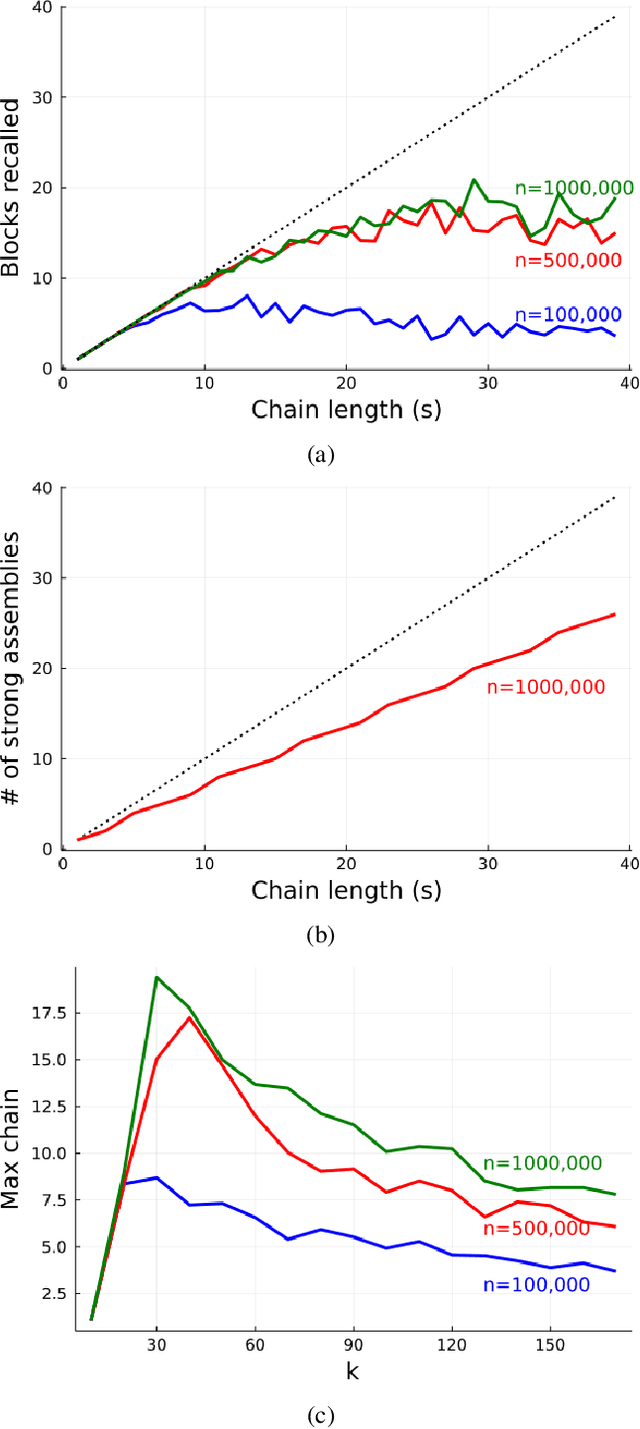

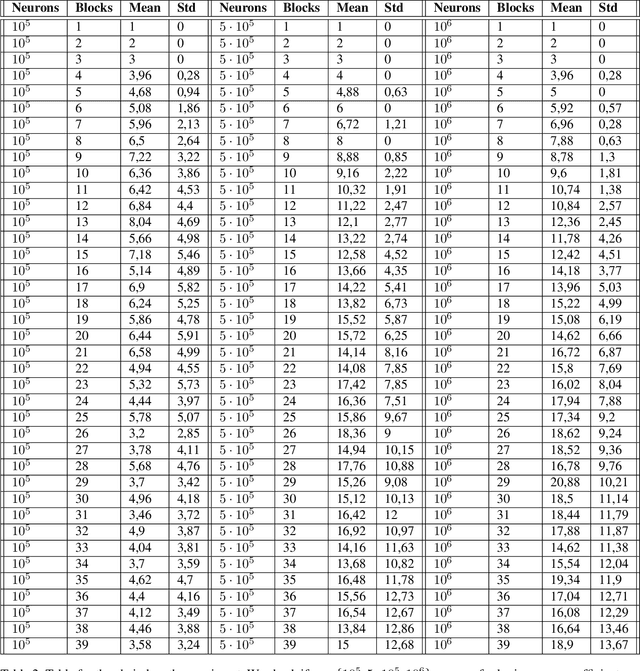

Abstract: We revisit the planning problem in the blocks world, and we implement a known heuristic for this task. Importantly, our implementation is biologically plausible, in the sense that it is carried out exclusively through the spiking of neurons. Even though much has been accomplished in the blocks world over the past five decades, we believe that this is the first algorithm of its kind. The input is a sequence of symbols encoding an initial set of block stacks as well as a target set, and the output is a sequence of motion commands such as "put the top block in stack 1 on the table". The program is written in the Assembly Calculus, a recently proposed computational framework meant to model computation in the brain by bridging the gap between neural activity and cognitive function. Its elementary objects are assemblies of neurons (stable sets of neurons whose simultaneous firing signifies that the subject is thinking of an object, concept, word, etc.), its commands include project and merge, and its execution model is based on widely accepted tenets of neuroscience. A program in this framework essentially sets up a dynamical system of neurons and synapses that eventually, with high probability, accomplishes the task. The purpose of this work is to establish empirically that reasonably large programs in the Assembly Calculus can execute correctly and reliably; and that rather realistic -- if idealized -- higher cognitive functions, such as planning in the blocks world, can be implemented successfully by such programs.
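The input and output described above can be illustrated with a conventional, non-neural sketch. This is not the Assembly Calculus program, and the strategy used here (move every block to the table, then build each target stack bottom-up) is an assumed baseline that may differ from the heuristic the paper implements. The stack encoding, the function name `plan`, and the wording of the second command type are hypothetical.

```python
def plan(initial, target):
    """Return motion commands that turn `initial` stacks into `target` stacks.

    Each stack is a list of block names ordered from bottom to top.
    Assumed strategy: unstack everything onto the table, then rebuild.
    """
    commands = []
    stacks = [list(s) for s in initial]

    # Phase 1: clear every stack down to its bottom block.
    for i, stack in enumerate(stacks, start=1):
        while len(stack) > 1:
            stack.pop()
            commands.append(f"put the top block in stack {i} on the table")

    # Phase 2: all blocks are now on the table; build each target bottom-up.
    for t in target:
        for below, above in zip(t, t[1:]):
            commands.append(f"put block {above} on block {below}")

    return commands


for step in plan(initial=[["A", "B"], ["C"]], target=[["C", "B", "A"]]):
    print(step)
```

This baseline always succeeds but can use more moves than necessary, since it unstacks blocks that are already in their final position.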
