Florian Cafiero

PSL

Diachronic Document Dataset for Semantic Layout Analysis

Nov 15, 2024

Abstract: We present a novel, open-access dataset designed for semantic layout analysis, built to support document recreation workflows through mapping with the Text Encoding Initiative (TEI) standard. This dataset includes 7,254 annotated pages spanning a large temporal range (1600-2024) of digitised and born-digital materials across diverse document types (magazines, papers from sciences and humanities, PhD theses, monographs, plays, administrative reports, etc.) sorted into modular subsets. By incorporating content from different periods and genres, it addresses varying layout complexities and historical changes in document structure. The modular design allows domain-specific configurations. We evaluate object detection models on this dataset, examining the impact of input size and subset-based training. Results show that a 1280-pixel input size for YOLO is optimal and that training on subsets generally benefits from incorporating them into a generic model rather than fine-tuning pre-trained weights.

Who could be behind QAnon? Authorship attribution with supervised machine-learning

Mar 03, 2023

Abstract: A series of social media posts signed under the pseudonym "Q" started a movement known as QAnon, which led some of its most radical supporters to violent and illegal actions. To identify the person(s) behind Q, we evaluate the coincidence between the linguistic properties of the texts written by Q and those written by a list of suspects provided by journalistic investigation. To identify the authors of these posts, serious challenges have to be addressed. The "Q drops" are very short texts, written in a way that constitutes a sort of literary genre in itself, with very peculiar features of style. These texts might have been written by different authors, whose other writings are often hard to find. After an online ethnology of the movement, necessary to collect enough material written by these thirteen potential authors, we use supervised machine learning to build stylistic profiles for each of them. We then perform a rolling analysis on Q's writings, to see if any of those linguistic profiles match the so-called "Q drops" in part or in their entirety. We conclude that two different individuals, Paul F. and Ron W., are the closest match to Q's linguistic signature, and that they could have successively written Q's texts. These potential authors are not high-ranking personalities from the U.S. administration, but rather social media activists.

No comments: Addressing commentary sections in websites' analyses

Apr 19, 2021

Abstract: Removing or extracting the commentary sections from a series of websites is a tedious task, as no standard way to code them is widely adopted. This operation is thus very rarely performed. In this paper, we show that these commentary sections can induce significant biases in the analyses, especially in the case of controversial

Highlights:

- Commentary sections can induce biases in the analysis of websites' contents.
- Analyzing these sections can be interesting per se.
- We illustrate these points using a corpus of anti-vaccine websites.
- We provide guidelines to remove or extract these sections.

Corpus and Models for Lemmatisation and POS-tagging of Classical French Theatre

May 15, 2020

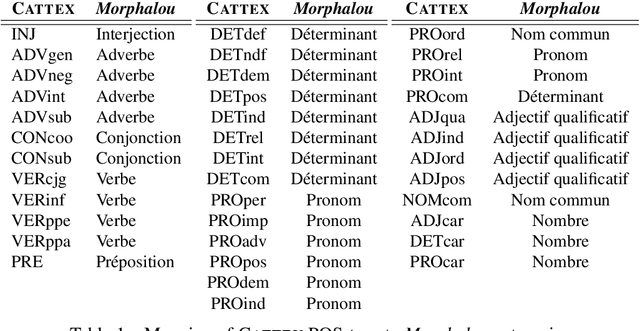

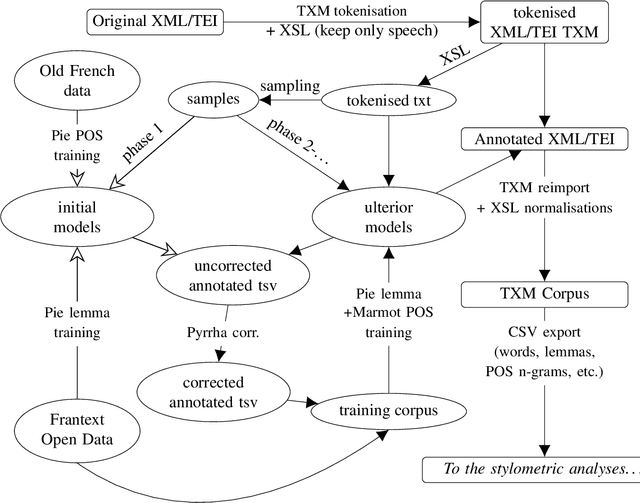

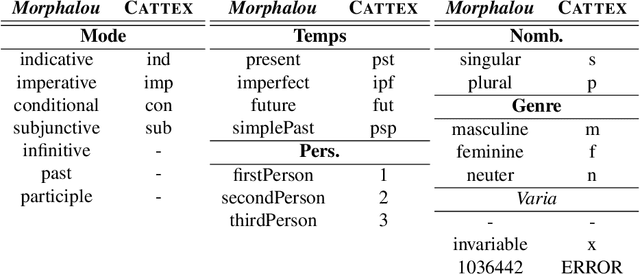

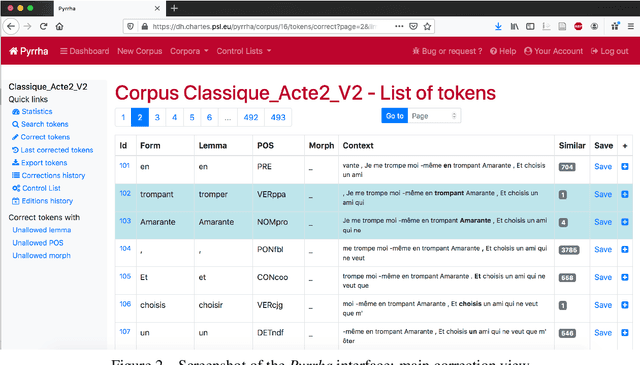

Abstract: This paper describes the process of building an annotated corpus and training models for classical French literature, with a focus on theatre, and particularly comedies in verse. It was originally developed as a preliminary step to the stylometric analyses presented in Cafiero and Camps [2019]. The use of a recent lemmatiser based on neural networks and a CRF tagger makes it possible to achieve accuracies beyond the current state of the art on the in-domain test, and proves to be robust during out-of-domain tests, i.e. up to 20th-century novels.

Why Molière most likely did write his plays

Jan 02, 2020

Abstract: As for Shakespeare, a hard-fought debate has emerged about Molière, a supposedly uneducated actor who, according to some, could not have written the masterpieces attributed to him. In the past decades, the century-old thesis according to which Pierre Corneille would be their actual author has become popular, mostly because of new works in computational linguistics. These results are reassessed here through state-of-the-art attribution methods. We study a corpus of comedies in verse by major authors of Molière and Corneille's time. Analyses of lexicon, rhymes, word forms, affixes, morphosyntactic sequences, and function words do not give any clue that another author among the major playwrights of the time would have written the plays signed under the name Molière.
