Flavia Sofia Acerbo

Imitation Learning from Observations: An Autoregressive Mixture of Experts Approach

Nov 12, 2024

Abstract: This paper presents a novel approach to imitation learning from observations, in which an autoregressive mixture-of-experts model is used to fit the underlying policy. The parameters of the model are learned via a two-stage framework. By leveraging existing knowledge of the dynamics, the first stage estimates the control input sequences and hence reduces the problem complexity. In the second stage, the policy is learned by solving a regularized maximum-likelihood estimation problem using the estimated control input sequences. We further extend the learning procedure by incorporating a Lyapunov stability constraint to ensure asymptotic stability of the identified model, for accurate multi-step predictions. The effectiveness of the proposed framework is validated using two autonomous driving datasets collected from human demonstrations, demonstrating its practical applicability in modelling complex nonlinear dynamics.
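The two-stage idea in this abstract can be illustrated with a toy sketch. It is not the paper's method: it assumes a scalar linear system `x[t+1] = a*x[t] + b*u[t]` with known `a` and `b`, and it replaces the autoregressive mixture of experts with a single linear policy `u = k*x` fitted by ridge-regularised least squares (the Gaussian maximum-likelihood estimate with an L2 penalty). All names and numbers below are hypothetical.

```python
def estimate_controls(states, a, b):
    """Stage 1: recover inputs from observed states by inverting the
    assumed known dynamics x[t+1] = a*x[t] + b*u[t]."""
    return [(x_next - a * x) / b for x, x_next in zip(states, states[1:])]


def fit_linear_policy(states, controls, reg=1e-3):
    """Stage 2: minimise sum (u - k*x)^2 + reg*k^2 over the scalar gain k.
    Closed form: k = sum(x*u) / (sum(x*x) + reg)."""
    num = sum(x * u for x, u in zip(states, controls))
    den = sum(x * x for x in states) + reg
    return num / den


# Synthetic "demonstration": only the states are observed, not the inputs.
a, b, k_true = 0.9, 0.5, -0.8
states = [1.0]
for _ in range(20):
    x = states[-1]
    states.append(a * x + b * (k_true * x))

controls = estimate_controls(states, a, b)          # stage 1
k_hat = fit_linear_policy(states[:-1], controls, reg=1e-9)  # stage 2
print(k_hat)
```

Because the inputs are reconstructed first, the second stage reduces to an ordinary supervised fit; a mixture-of-experts policy would replace the single gain with several gated experts.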

Learning from Visual Demonstrations through Differentiable Nonlinear MPC for Personalized Autonomous Driving

Mar 22, 2024

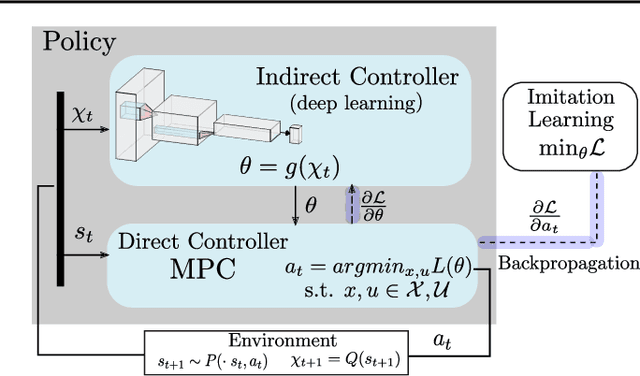

Abstract: Human-like autonomous driving controllers have the potential to enhance passenger perception of autonomous vehicles. This paper proposes DriViDOC: a model for Driving from Vision through Differentiable Optimal Control, and its application to learn personalized autonomous driving controllers from human demonstrations. DriViDOC combines the automatic inference of relevant features from camera frames with the properties of nonlinear model predictive control (NMPC), such as constraint satisfaction. Our approach leverages the differentiability of parametric NMPC, allowing for end-to-end learning of the driving model from images to control. The model is trained on an offline dataset comprising various driving styles collected on a motion-base driving simulator. During online testing, the model demonstrates successful imitation of different driving styles, and the interpreted NMPC parameters provide insights into the achievement of specific driving behaviors. Our experimental results show that DriViDOC outperforms other methods involving NMPC and neural networks, exhibiting an average improvement of 20% in imitation scores.

Evaluation of MPC-based Imitation Learning for Human-like Autonomous Driving

Nov 25, 2022

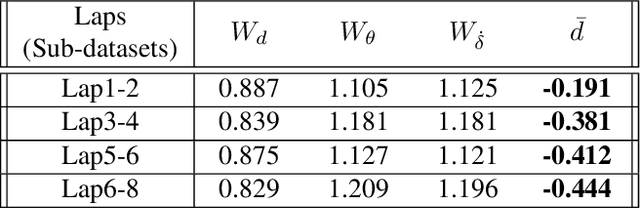

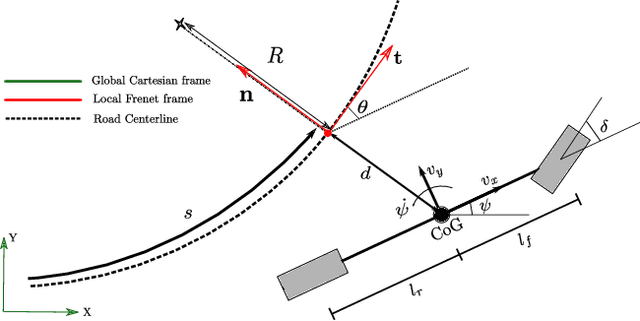

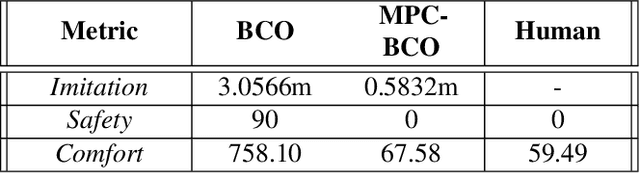

Abstract: This work evaluates and analyzes the combination of imitation learning (IL) and differentiable model predictive control (MPC) for the application of human-like autonomous driving. We combine MPC with a hierarchical learning-based policy, and measure its performance in open loop and closed loop with metrics related to safety, comfort and similarity to human driving characteristics. We also demonstrate the value of augmenting open-loop behavioral cloning with closed-loop training for more robust learning, approximating the policy gradient through time with the state space model used by the MPC. We perform experimental evaluations on a lane keeping control system, learned from demonstrations collected on a fixed-base driving simulator, and show that our imitative policies approach the human driving style preferences.

Learning from Demonstrations of Critical Driving Behaviours Using Driver's Risk Field

Oct 04, 2022

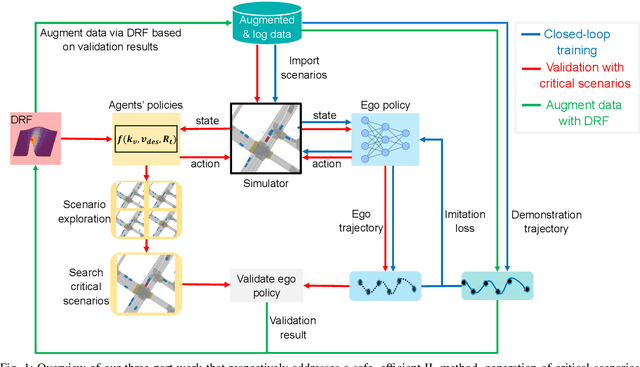

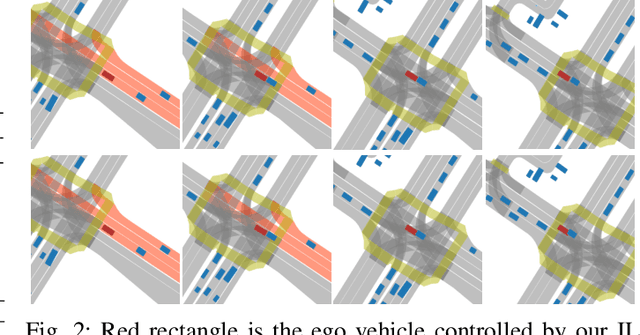

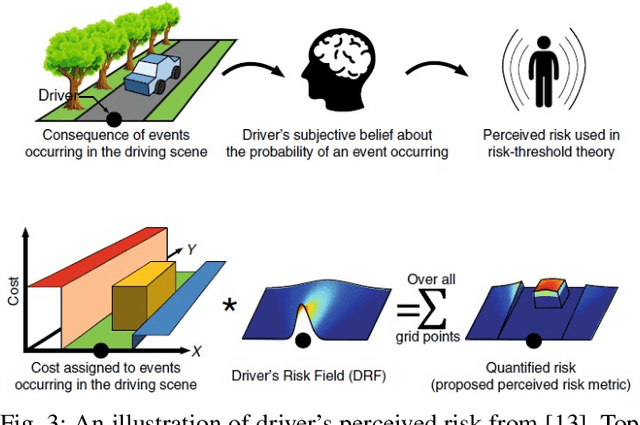

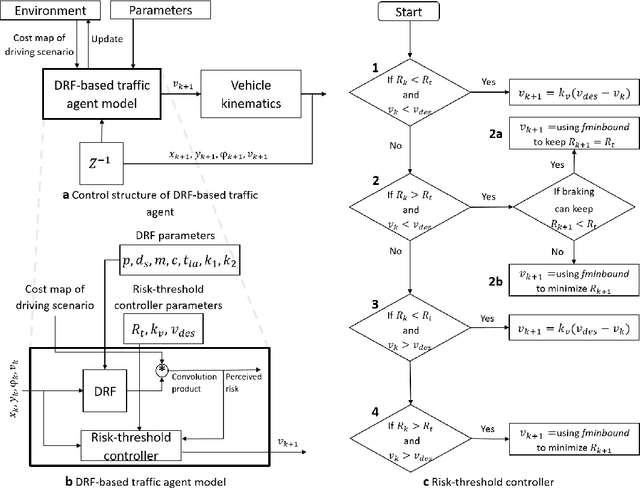

Abstract: In recent years, imitation learning (IL) has been widely used in industry as the core of autonomous vehicle (AV) planning modules. However, previous work on IL planners shows sample inefficiency and low generalisation in safety-critical scenarios, on which they are rarely tested. As a result, IL planners can reach a performance plateau where adding more training data ceases to improve the learnt policy. First, our work presents an IL model using the spline coefficient parameterisation and offline expert queries to enhance safety and training efficiency. Then, we expose the weakness of the learnt IL policy by synthetically generating critical scenarios through optimisation of parameters of the driver's risk field (DRF), a parametric human driving behaviour model implemented in a multi-agent traffic simulator based on the Lyft Prediction Dataset. To continuously improve the learnt policy, we retrain the IL model with augmented data. Thanks to the expressivity and interpretability of the DRF, the desired driving behaviours can be encoded and aggregated into the original training data. Our work constitutes a full development cycle that can efficiently and continuously improve the learnt IL policies in closed loop. Finally, we show that our IL planner, developed with 30 times fewer training resources, still has superior performance compared to the previous state-of-the-art.

MPC-based Imitation Learning for Safe and Human-like Autonomous Driving

Jun 24, 2022

Abstract: To ensure user acceptance of autonomous vehicles (AVs), control systems are being developed to mimic human drivers from demonstrations of desired driving behaviors. Imitation learning (IL) algorithms serve this purpose, but struggle to provide safety guarantees on the resulting closed-loop system trajectories. On the other hand, Model Predictive Control (MPC) can handle nonlinear systems with safety constraints, but realizing human-like driving with it requires extensive domain knowledge. This work suggests the use of a seamless combination of the two techniques to learn safe AV controllers from demonstrations of desired driving behaviors, by using MPC as a differentiable control layer within a hierarchical IL policy. With this strategy, IL is performed in closed-loop and end-to-end, through parameters in the MPC cost, model or constraints. Experimental results of this methodology are analyzed for the design of a lane keeping control system, learned via behavioral cloning from observations (BCO), given human demonstrations on a fixed-base driving simulator.
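To make "learning through parameters in the MPC cost" concrete, here is a heavily simplified sketch. It is not the paper's implementation: it assumes a scalar linear model, an unconstrained one-step horizon (so the optimal control has a closed form that can be differentiated by hand), and open-loop behavioral cloning rather than closed-loop training. The function names and numeric values are hypothetical.

```python
import math


def mpc_control(x, r, a=1.0, b=1.0, q=1.0):
    """One-step 'MPC': argmin over u of q*(a*x + b*u)^2 + r*u^2."""
    return -(q * a * b * x) / (q * b * b + r)


def dcontrol_dr(x, r, a=1.0, b=1.0, q=1.0):
    """Derivative of the optimal control with respect to the input weight r."""
    return (q * a * b * x) / (q * b * b + r) ** 2


# Demonstrations from a hypothetical expert whose input weight is r_true.
r_true = 0.5
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
u_demo = [mpc_control(x, r_true) for x in xs]

# Learn r = exp(rho) by gradient descent on the squared imitation error;
# the exponential keeps the cost weight positive.
rho, lr = 0.0, 0.1
for _ in range(1000):
    r = math.exp(rho)
    grad = sum(
        2.0 * (mpc_control(x, r) - u) * dcontrol_dr(x, r) * r  # chain rule via r = exp(rho)
        for x, u in zip(xs, u_demo)
    )
    rho -= lr * grad

r_hat = math.exp(rho)
print(r_hat)
```

With longer horizons and constraints the optimal control has no closed form, and the derivative would instead come from differentiating the solver's optimality conditions, which is what a differentiable MPC layer provides.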
