Fernando Ortega

Comprehensive Evaluation of Matrix Factorization Models for Collaborative Filtering Recommender Systems

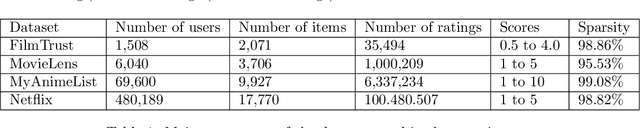

Oct 23, 2024

Abstract:Matrix factorization models are the core of current commercial collaborative filtering Recommender Systems. This paper tested six representative matrix factorization models, using four collaborative filtering datasets. Experiments have tested a variety of accuracy and beyond-accuracy quality measures, including prediction, recommendation of ordered and unordered lists, novelty, and diversity. Results identify the most convenient matrix factorization model for each scenario, according to its simplicity, the required prediction quality, the necessary recommendation quality, the desired recommendation novelty and diversity, the need to explain recommendations, the adequacy of assigning semantic interpretations to hidden factors, the advisability of recommending to groups of users, and the need to obtain reliability values. To ensure the reproducibility of the experiments, an open framework has been used, and the implementation code is provided.
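As an illustration of the kind of model this abstract evaluates, the following is a minimal sketch of a basic matrix factorization trained with stochastic gradient descent. It is not one of the six models from the paper, nor the paper's code; the function names, hyperparameters, and toy ratings are assumptions made for the example.

```python
import random

def predict(P, Q, u, i):
    """Predicted rating: dot product of user u's and item i's hidden factors."""
    return sum(pf * qf for pf, qf in zip(P[u], Q[i]))

def mse(P, Q, ratings):
    """Mean squared error over the given (user, item, rating) triples."""
    return sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings)

def init_factors(rows, num_factors, rng):
    """Small random starting values for a factor matrix (hypothetical choice)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(num_factors)] for _ in range(rows)]

def train_mf(ratings, P, Q, lr=0.01, reg=0.02, epochs=500):
    """Update P and Q in place by SGD on the regularized squared error."""
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(len(P[u])):
                pu, qi = P[u][f], Q[i][f]
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

# Toy data (assumed): (user, item, rating) triples on a 1-5 scale.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]
rng = random.Random(0)
P = init_factors(3, 2, rng)
Q = init_factors(3, 2, rng)
error_before = mse(P, Q, ratings)
train_mf(ratings, P, Q)
error_after = mse(P, Q, ratings)
```

The hidden factors in `P` and `Q` carry no explicit meaning, which is why the abstract lists semantic interpretation of hidden factors as a separate selection criterion.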

* 10 pages, 5 figures

Reliability quality measures for recommender systems

Feb 06, 2024

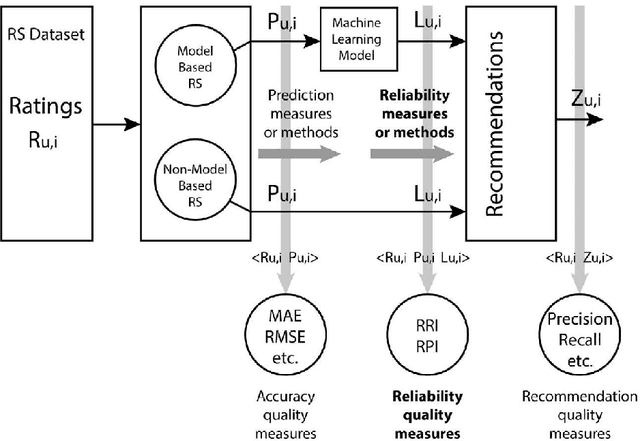

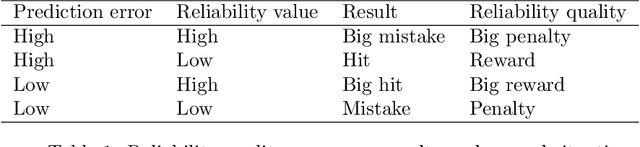

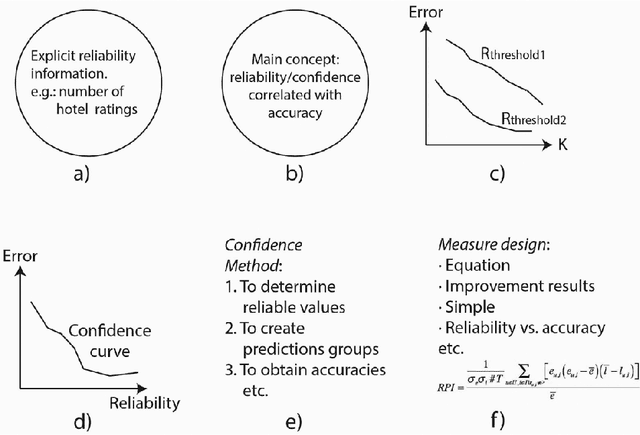

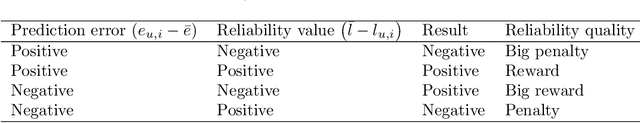

Abstract:Users want to know the reliability of the recommendations; they do not accept high predictions if there is no reliability evidence. Recommender systems should provide reliability values associated with the predictions. Research into reliability measures requires the existence of simple, plausible and universal reliability quality measures. Research into recommender system quality measures has focused on accuracy. Moreover, novelty, serendipity and diversity have been studied; nevertheless, there is an important lack of research into reliability/confidence quality measures. This paper proposes a reliability quality prediction measure (RPI) and a reliability quality recommendation measure (RRI). Both quality measures are based on the hypothesis that the more suitable a reliability measure is, the better accuracy results it will provide when applied. These reliability quality measures show accuracy improvements when appropriate reliability values are associated with their predictions (i.e. high reliability values associated with correct predictions or low reliability values associated with incorrect predictions). The proposed reliability quality metrics will lead to the design of brand new recommender system reliability measures. These measures could be applied to different matrix factorization techniques and to content-based, context-aware and social recommendation approaches. The recommender system reliability measures designed could be tested, compared and improved using the proposed reliability quality metrics.

CF4J: Collaborative Filtering for Java

Feb 01, 2024

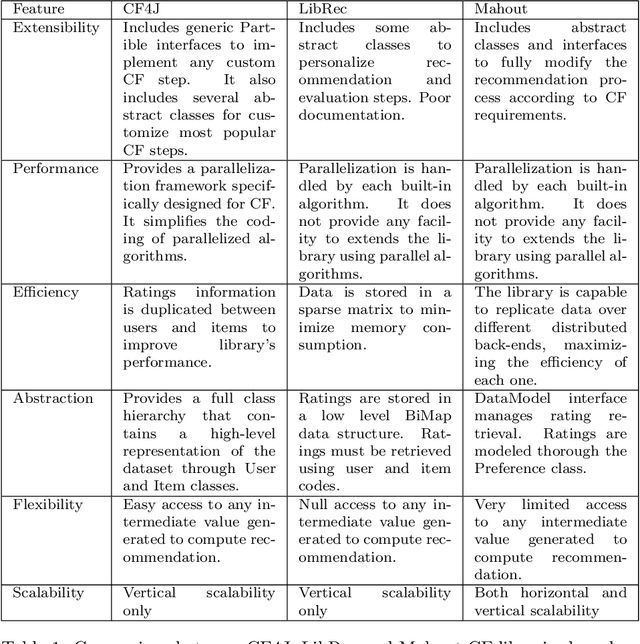

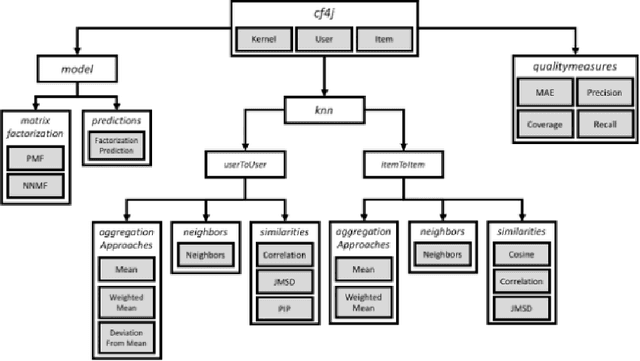

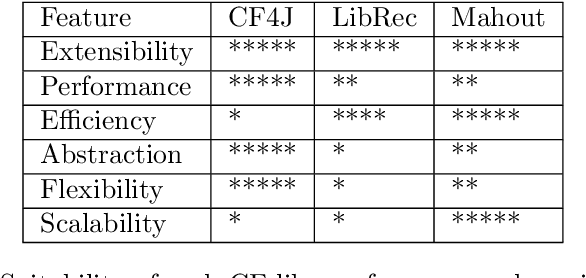

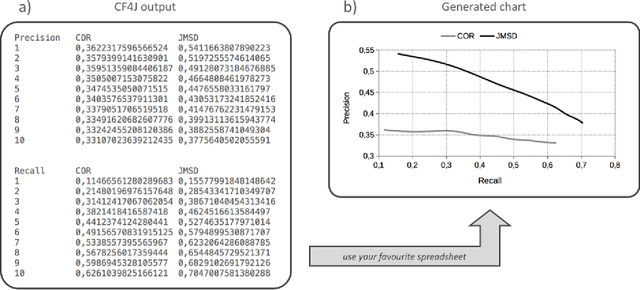

Abstract:Recommender Systems (RS) provide a relevant tool to mitigate the information overload problem. A large number of researchers have published hundreds of papers to improve different RS features. It is advisable to use RS frameworks that help RS researchers: a) design and implement recommendation methods, and b) speed up the execution time of the experiments. In this paper, we present CF4J, a Java library designed to carry out Collaborative Filtering based RS research experiments. CF4J has been designed by researchers for researchers. It supports: a) reading RS datasets, b) full and easy access to data and intermediate or final results, c) extending its main functionalities, d) concurrent execution of the implemented methods, and e) thorough evaluation of the implementations through quality measures. In summary, CF4J serves as a library specifically designed for the research trial and error process.

Incorporating Recklessness to Collaborative Filtering based Recommender Systems

Aug 03, 2023

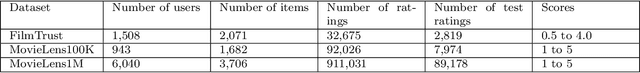

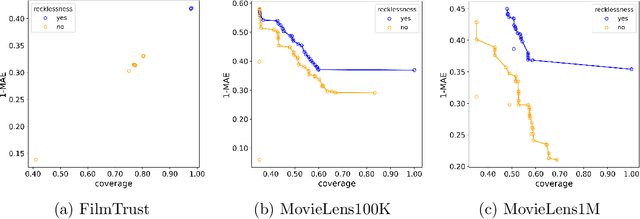

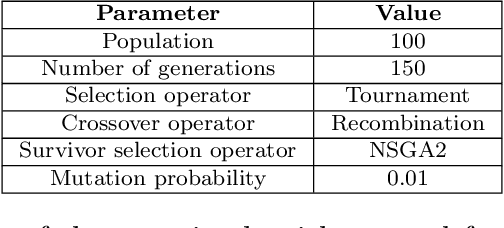

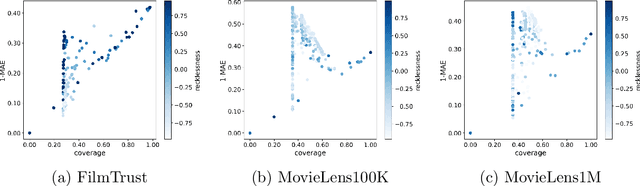

Abstract:Recommender systems that include some reliability measure of their predictions tend to be more conservative in forecasting, due to their constraint to preserve reliability. This leads to a significant drop in the coverage and novelty that these systems can provide. In this paper, we propose the inclusion of a new term in the learning process of matrix factorization-based recommender systems, called recklessness, which enables the control of the risk level desired when making decisions about the reliability of a prediction. Experimental results demonstrate that recklessness not only allows for risk regulation but also improves the quantity and quality of predictions provided by the recommender system.

Deep Neural Aggregation for Recommending Items to Group of Users

Jul 18, 2023

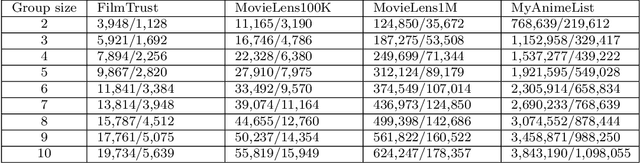

Abstract:Modern society devotes a significant amount of time to digital interaction. Many of our daily actions are carried out through digital means. This has led to the emergence of numerous Artificial Intelligence tools that assist us in various aspects of our lives. One key tool for the digital society is Recommender Systems, intelligent systems that learn from our past actions to propose new ones that align with our interests. Some of these systems have specialized in learning from the behavior of user groups to make recommendations to a group of individuals who want to perform a joint task. In this article, we analyze the current state of Group Recommender Systems and propose two new models that use emerging Deep Learning architectures. Experimental results demonstrate the improvement achieved by employing the proposed models compared to the state-of-the-art models using four different datasets. The source code of the models, as well as that of all the experiments conducted, is available in a public repository.

An evaluation framework for dimensionality reduction through sectional curvature

Mar 17, 2023

Abstract:Unsupervised machine learning lacks ground truth by definition. This poses a major difficulty when designing metrics to evaluate the performance of such algorithms. In sharp contrast with supervised learning, for which plenty of quality metrics have been studied in the literature, in the field of dimensionality reduction only a few over-simplistic metrics have been proposed. In this work, we aim to introduce the first highly non-trivial dimensionality reduction performance metric. This metric is based on the sectional curvature behaviour arising from Riemannian geometry. To test its feasibility, this metric has been used to evaluate the performance of the most commonly used dimension reduction algorithms in the state of the art. Furthermore, to make the evaluation of the algorithms robust and representative, using curvature properties of planar curves, a new parameterized problem instance generator has been constructed in the form of a function generator. Experimental results are consistent with what could be expected based on the design and characteristics of the evaluated algorithms and the features of the data instances used to feed the method.

Neural Group Recommendation Based on a Probabilistic Semantic Aggregation

Mar 13, 2023

Abstract:Recommendation to groups of users is a challenging subfield of recommendation systems. Its key concept is how and where to make the aggregation of each set of user information into an individual entity, such as a ranked recommendation list, a virtual user, or a multi-hot input vector encoding. This paper proposes an innovative strategy where aggregation is made in the multi-hot vector that feeds the neural network model. The aggregation provides a probabilistic semantic, and the resulting input vectors feed a model that is able to conveniently generalize the group recommendation from the individual predictions. Furthermore, using the proposed architecture, group recommendations can be obtained by simply feedforwarding the pre-trained model with individual ratings; that is, without the need to obtain datasets containing group-of-users information, and without the need to run two separate trainings (individual and group). This approach also avoids maintaining two different models to support both individual and group learning. Experiments have tested the proposed architecture using three representative collaborative filtering datasets and a series of baselines; results show suitable accuracy improvements compared to the state-of-the-art.
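The abstract does not spell out the aggregation rule. One plausible reading, shown here only as a sketch under that assumption, is to give each group member an equal weight of 1/|G| in the user input vector, so that the entries sum to one and a single-user group reduces to the usual one-hot encoding. The function name `group_input_vector` is hypothetical.

```python
def group_input_vector(group, num_users):
    """Encode a group of user indices as a weighted multi-hot vector.

    Assumption for illustration: every member gets weight 1/|G|, so the
    vector can be read as a probability distribution over users.
    """
    members = set(group)
    if not members:
        raise ValueError("a group needs at least one user")
    weight = 1.0 / len(members)
    vector = [0.0] * num_users
    for user in members:
        vector[user] = weight
    return vector

# A group of three users out of five, and a single-user "group".
group_vec = group_input_vector([0, 2, 3], num_users=5)
single_vec = group_input_vector([1], num_users=5)
```

Under this reading, a model trained on one-hot individual inputs can be fed `group_vec` directly, which matches the abstract's point that no separate group training is required.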

Deep Variational Models for Collaborative Filtering-based Recommender Systems

Jul 27, 2021

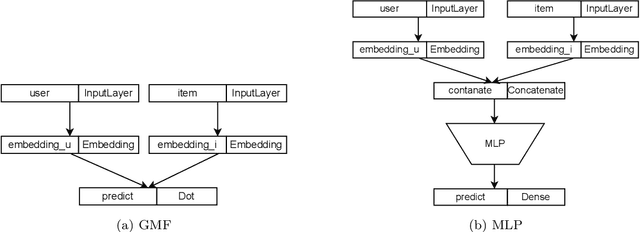

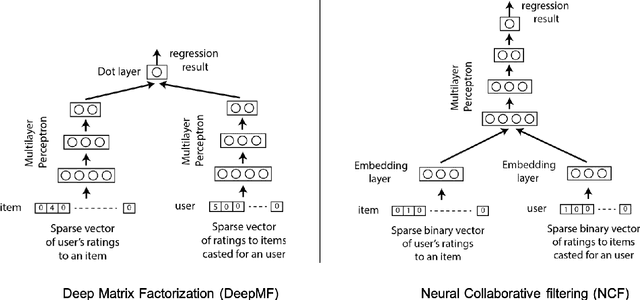

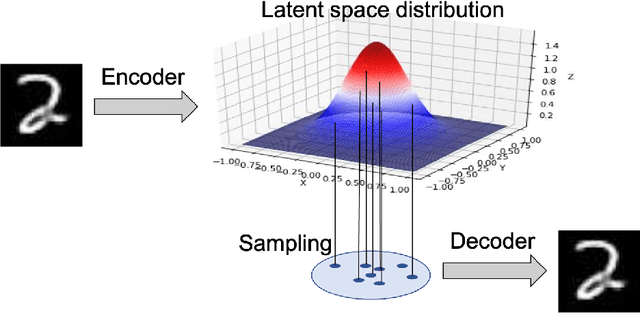

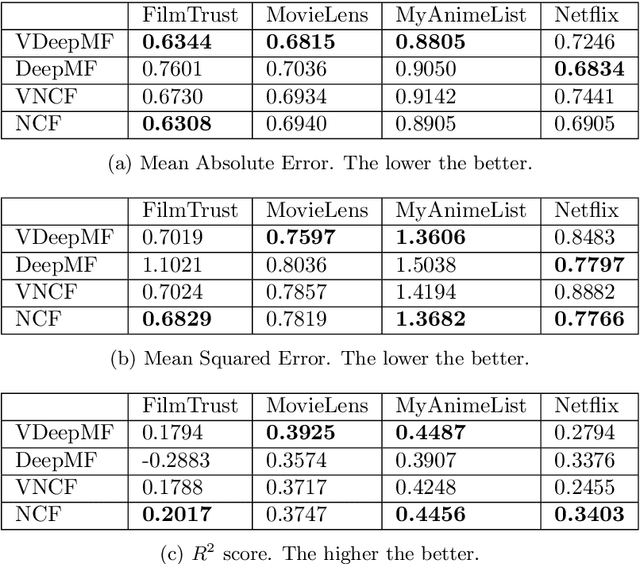

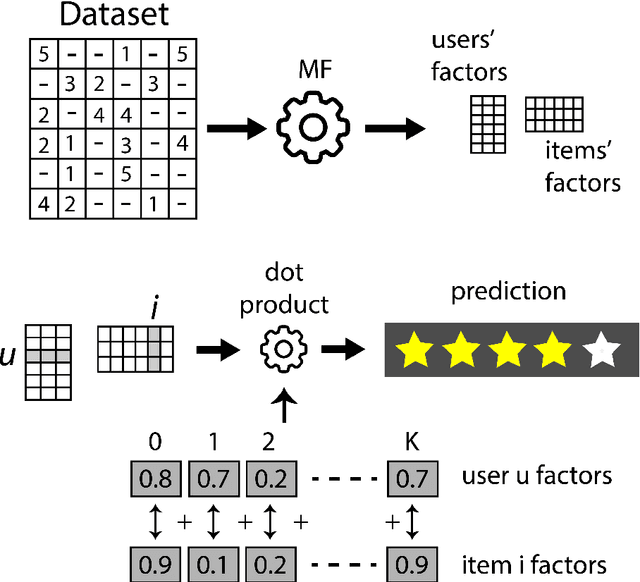

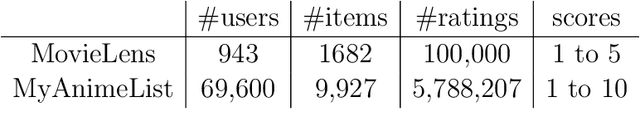

Abstract:Deep learning provides accurate collaborative filtering models to improve recommender system results. Deep matrix factorization and its related collaborative neural networks are the state of the art in the field; nevertheless, both models lack the necessary stochasticity to create the robust, continuous, and structured latent spaces that variational autoencoders exhibit. On the other hand, data augmentation through variational autoencoders does not provide accurate results in the collaborative filtering field due to the high sparsity of recommender systems. Our proposed models apply the variational concept to inject stochasticity in the latent space of the deep architecture, introducing the variational technique in the neural collaborative filtering field. This method does not depend on the particular model used to generate the latent representation. In this way, this approach can be applied as a plugin to any current and future specific models. The proposed models have been tested using four representative open datasets, three different quality measures, and state-of-the-art baselines. The results show the superiority of the proposed approach in scenarios where the variational enrichment exceeds the injected noise effect. Additionally, a framework is provided to enable the reproducibility of the conducted experiments.
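The abstract does not say how the stochasticity is injected. In variational autoencoders the standard mechanism is the reparameterization trick, where a latent vector is sampled as the mean plus scaled Gaussian noise. The sketch below shows that general technique only, and assumes (without confirmation from the source) that a model would output a mean and a log-variance per latent dimension; `sample_latent` is a hypothetical name.

```python
import math
import random

def sample_latent(mean, log_var, rng=random):
    """Reparameterization trick: z = mean + exp(0.5 * log_var) * eps,
    with eps drawn from a standard normal distribution."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]

rng = random.Random(0)
# Moderate variance: repeated draws scatter around the mean.
z_first = sample_latent([0.5, -1.0], [0.0, 0.0], rng)
z_second = sample_latent([0.5, -1.0], [0.0, 0.0], rng)
# Near-zero variance: the sample collapses onto the mean.
z_tight = sample_latent([0.5, -1.0], [-200.0, -200.0], rng)
```

Because the noise enters only through `eps`, the mean and log-variance stay differentiable, which is what lets such a sampling step sit inside a network trained by gradient descent.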

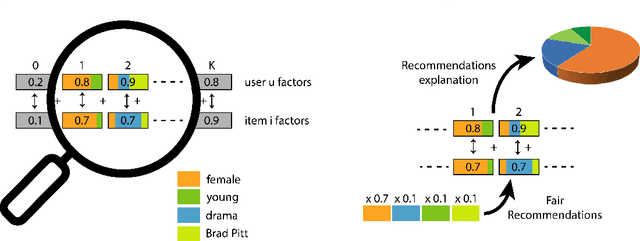

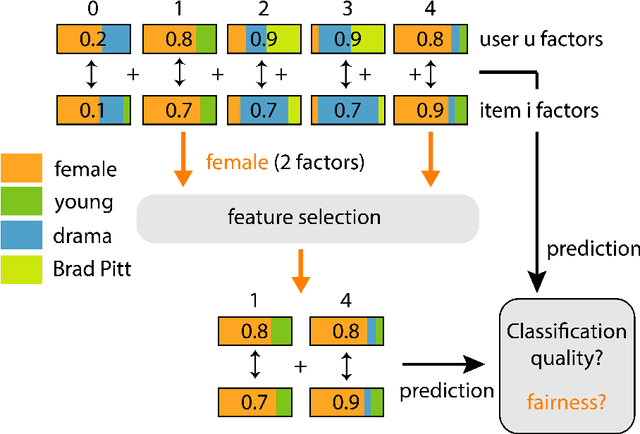

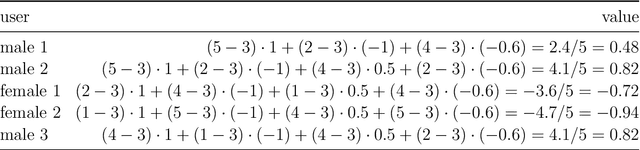

Deep Learning feature selection to unhide demographic recommender systems factors

Jun 17, 2020

Abstract:Extracting demographic features from hidden factors is an innovative concept that provides multiple and relevant applications. The matrix factorization model generates factors which do not incorporate semantic knowledge. This paper provides a deep learning-based method, DeepUnHide, able to extract demographic information from the user and item factors in collaborative filtering recommender systems. The core of the proposed method is the gradient-based localization used in the image processing literature to highlight the representative areas of each classification class. Validation experiments make use of two public datasets and current baselines. Results show the superiority of DeepUnHide in feature selection and demographic classification, compared to state-of-the-art feature selection methods. Relevant and direct applications include recommendation explanation, fairness in collaborative filtering and recommendation to groups of users.

DeepFair: Deep Learning for Improving Fairness in Recommender Systems

Jun 09, 2020

Abstract:The lack of bias management in Recommender Systems leads to minority groups receiving unfair recommendations. Moreover, the trade-off between equity and precision makes it difficult to obtain recommendations that meet both criteria. Here we propose a Deep Learning based Collaborative Filtering algorithm that provides recommendations with an optimum balance between fairness and accuracy without knowing demographic information about the users. Experimental results show that it is possible to make fair recommendations without losing a significant proportion of accuracy.
