Fergal Stapleton

NeuroLGP-SM: Scalable Surrogate-Assisted Neuroevolution for Deep Neural Networks

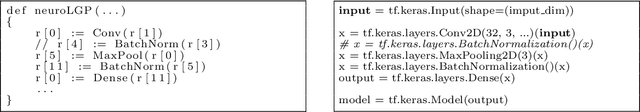

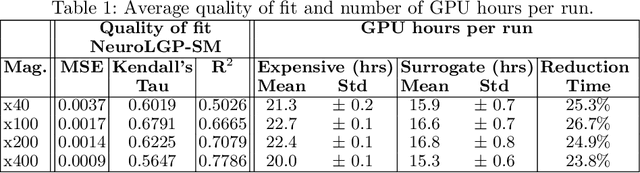

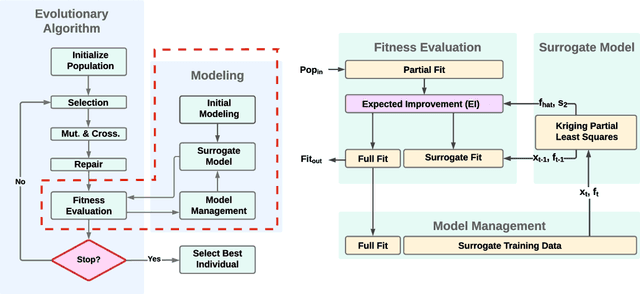

Apr 12, 2024

Abstract:Evolutionary Algorithms (EAs) play a crucial role in the architectural configuration and training of Artificial Deep Neural Networks (DNNs), a process known as neuroevolution. However, neuroevolution is hindered by its inherent computational expense, requiring multiple generations, a large population, and numerous epochs. The most computationally intensive aspect lies in evaluating the fitness function of a single candidate solution. To address this challenge, we employ Surrogate-assisted EAs (SAEAs). While a few SAEA approaches have been proposed in neuroevolution, none have been applied to truly large DNNs due to issues like intractable information usage. In this work, drawing inspiration from Genetic Programming semantics, we use phenotypic distance vectors, output by DNNs, alongside Kriging Partial Least Squares (KPLS), an approach that is effective in handling these large vectors, making them suitable for search. Our proposed approach, named Neuro-Linear Genetic Programming surrogate model (NeuroLGP-SM), efficiently and accurately estimates DNN fitness without the need for complete evaluations. NeuroLGP-SM demonstrates competitive or superior results compared to 12 other methods, including NeuroLGP without SM, convolutional neural networks, support vector machines, and autoencoders. Additionally, it is worth noting that NeuroLGP-SM is 25% more energy-efficient than its NeuroLGP counterpart. This efficiency advantage adds to the overall appeal of our proposed NeuroLGP-SM in optimising the configuration of large DNNs.

NeuroLGP-SM: A Surrogate-assisted Neuroevolution Approach using Linear Genetic Programming

Mar 28, 2024

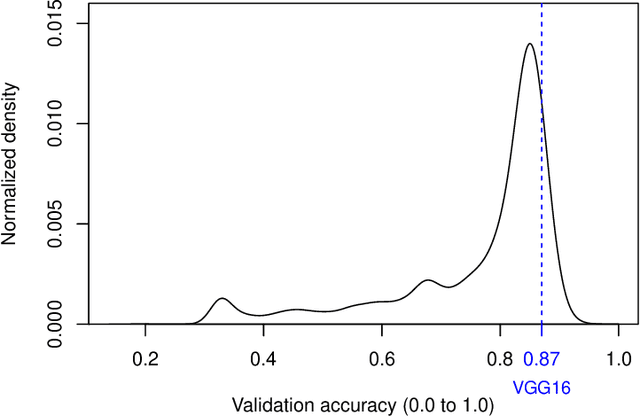

Abstract:Evolutionary algorithms are increasingly recognised as a viable computational approach for the automated optimisation of deep neural networks (DNNs) within artificial intelligence. This method extends to the training of DNNs, an approach known as neuroevolution. However, neuroevolution is an inherently resource-intensive process, with certain studies reporting the consumption of thousands of GPU days for refining and training a single DNN. To address the computational challenges associated with neuroevolution while still attaining good DNN accuracy, surrogate models emerge as a pragmatic solution. Despite their potential, the integration of surrogate models into neuroevolution is still in its early stages, hindered by factors such as the effective use of high-dimensional data and the representation employed in neuroevolution. In this context, we address these challenges by employing a suitable representation based on Linear Genetic Programming, denoted as NeuroLGP, and leveraging Kriging Partial Least Squares. The amalgamation of these two techniques culminates in our proposed methodology known as the NeuroLGP-Surrogate Model (NeuroLGP-SM). For comparison purposes, we also code and use a baseline approach incorporating a repair mechanism, a common practice in neuroevolution. Notably, the baseline approach surpasses the renowned VGG-16 model in accuracy. Given the computational intensity inherent in DNN operations, a single run is typically the norm. To evaluate the efficacy of our proposed approach, we conducted 96 independent runs. Significantly, our methodologies consistently outperform the baseline, with the SM model demonstrating superior accuracy or comparable results to the NeuroLGP approach. Noteworthy is the additional advantage that the SM approach exhibits a 25% reduction in computational requirements, further emphasising its efficiency for neuroevolution.

Evolutionary Multi-objective Optimisation in Neurotrajectory Prediction

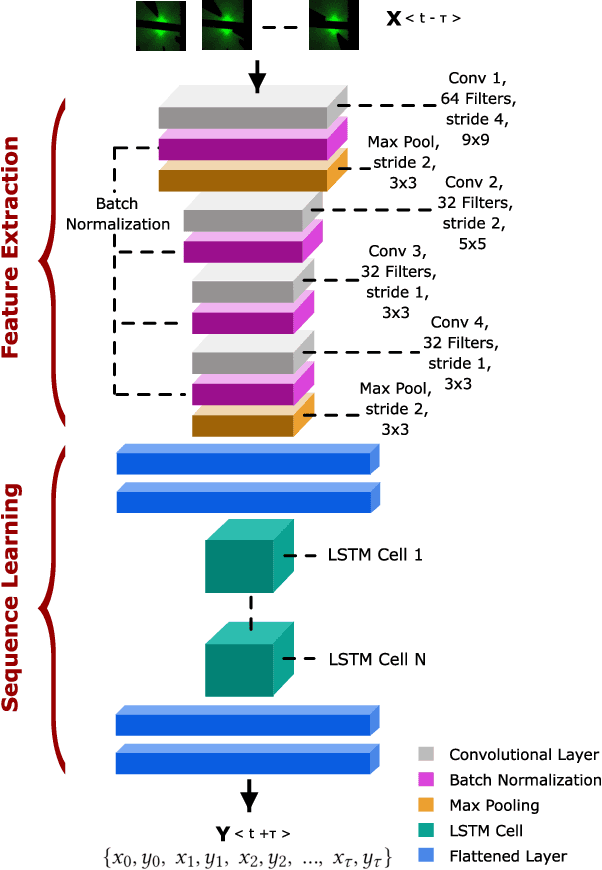

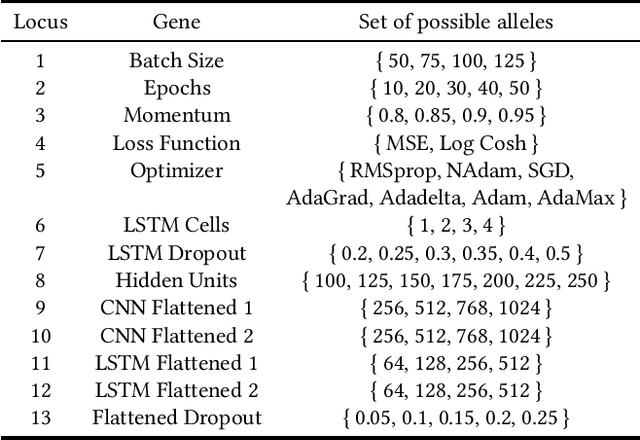

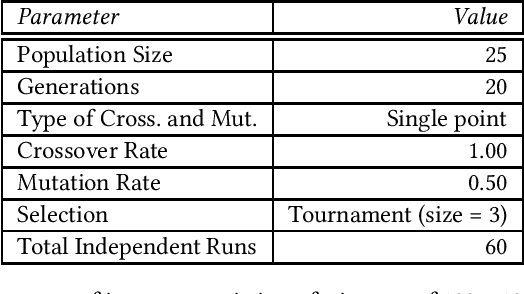

Aug 04, 2023

Abstract:Machine learning has rapidly evolved during the last decade, achieving expert human performance on notoriously challenging problems such as image classification. This success is partly due to the re-emergence of bio-inspired modern artificial neural networks (ANNs) along with the availability of computation power, vast labelled data and ingenious human-based expert knowledge as well as optimisation approaches that can find the correct configuration (and weights) for these networks. Neuroevolution is a term used for the latter when employing evolutionary algorithms. Most of the works in neuroevolution have focused their attention on a single type of ANN, namely Convolutional Neural Networks (CNNs). Moreover, most of these works have used a single optimisation approach. This work makes a progressive step forward in neuroevolution for vehicle trajectory prediction, referred to as neurotrajectory prediction, where multiple objectives must be considered. To this end, rich ANNs composed of CNNs and Long-short Term Memory networks are adopted. Two well-known and robust Evolutionary Multi-objective Optimisation (EMO) algorithms, NSGA-II and MOEA/D, are also adopted. The completely different underlying mechanism of each of these algorithms sheds light on the implications of using one over the other EMO approach in neurotrajectory prediction. In particular, the importance of considering objective scaling is highlighted, finding that MOEA/D can be more adept at focusing on specific objectives whereas NSGA-II tends to be more invariant to objective scaling. Additionally, certain objectives are shown to be either beneficial or detrimental to finding valid models; for instance, the inclusion of a distance feedback objective was considerably detrimental to finding valid models, while a lateral velocity objective was more beneficial.

* 38 pages, 6 Figures, 10 Tables
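The remark on objective scaling in the abstract above can be illustrated with a small sketch. It is not taken from the paper: it assumes minimisation, uses three made-up objective vectors, and stands in for MOEA/D with a plain weighted-sum scalarisation (one of the decomposition schemes MOEA/D can use). All function names are hypothetical. Pareto dominance, which NSGA-II ranks by, is unchanged when one objective is multiplied by a positive constant, while the weighted-sum winner can change.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Tuple[float, ...]]) -> List[int]:
    """Indices of points that no other point dominates."""
    return [
        i
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


def weighted_sum_best(points: List[Tuple[float, ...]], weights: Sequence[float]) -> int:
    """Index of the point with the smallest weighted sum of objectives."""
    scores = [sum(w * v for w, v in zip(weights, p)) for p in points]
    return scores.index(min(scores))


def rescale(points: List[Tuple[float, ...]], factors: Sequence[float]) -> List[Tuple[float, ...]]:
    """Multiply each objective by a positive factor."""
    return [tuple(f * v for f, v in zip(factors, p)) for p in points]


# Illustrative (made-up) objective vectors, both objectives minimised.
points = [(1.0, 3.0), (2.0, 1.0), (3.0, 4.0)]
scaled = rescale(points, (10.0, 1.0))  # stretch the first objective only

front_before = non_dominated(points)   # [0, 1]
front_after = non_dominated(scaled)    # [0, 1]: dominance is unaffected
best_before = weighted_sum_best(points, (0.5, 0.5))  # 1
best_after = weighted_sum_best(scaled, (0.5, 0.5))   # 0: the preferred point flips
```

The sketch only shows why a dominance-based ranking and a scalarised one can react differently to scaling; it says nothing about the magnitude of the effect reported in the paper.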

Initial Steps Towards Tackling High-dimensional Surrogate Modeling for Neuroevolution Using Kriging Partial Least Squares

May 11, 2023

Abstract:Surrogate-assisted evolutionary algorithms (SAEAs) aim to use efficient computational models with the goal of approximating the fitness function in evolutionary computation systems. This area of research has been active for over two decades and has received significant attention from the specialised research community in different areas, for example, single- and many-objective optimisation or dynamic and stationary optimisation problems. An emergent and exciting area that has received little attention from the SAEA community is neuroevolution. This refers to the use of evolutionary algorithms in the automatic configuration of artificial neural network (ANN) architectures, hyper-parameters and/or the training of ANNs. However, ANNs suffer from two major issues: (a) the use of highly-intense computational power for their correct training, and (b) the highly specialised human expertise required to correctly configure ANNs necessary to get a well-performing network. This work aims to fill this important research gap in SAEAs in neuroevolution by addressing these two issues. We demonstrate how one can use a Kriging Partial Least Squares method that allows efficient computation of good approximate surrogate models compared to the well-known Kriging method, which normally cannot be used in neuroevolution due to the high dimensionality of the data.

Highlights of Semantics in Multi-objective Genetic Programming

Jun 13, 2022

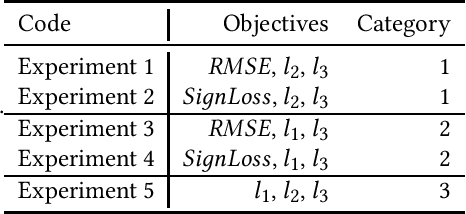

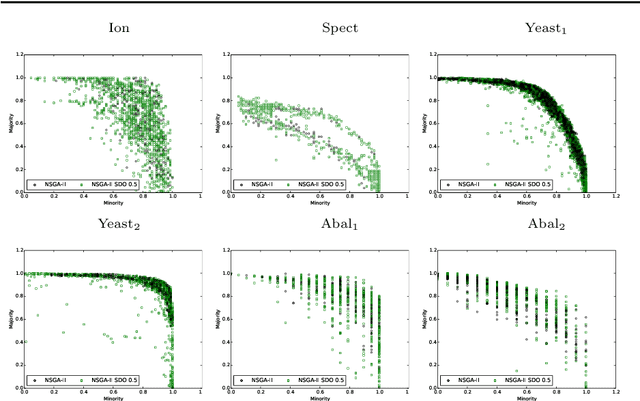

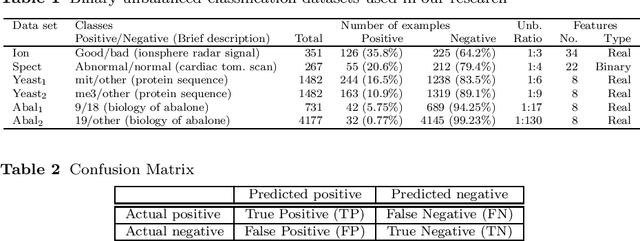

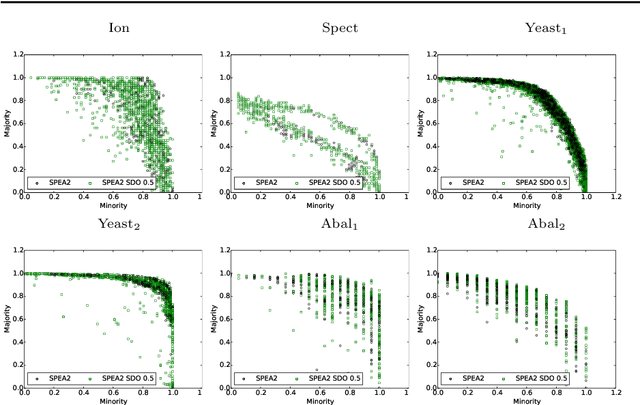

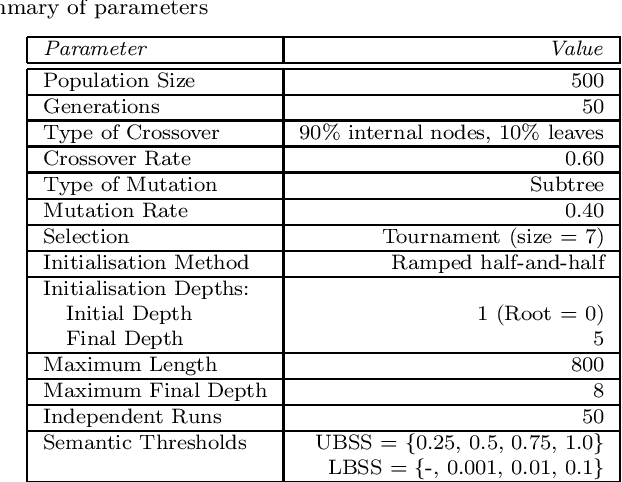

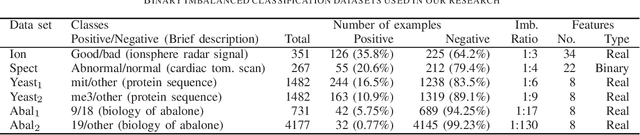

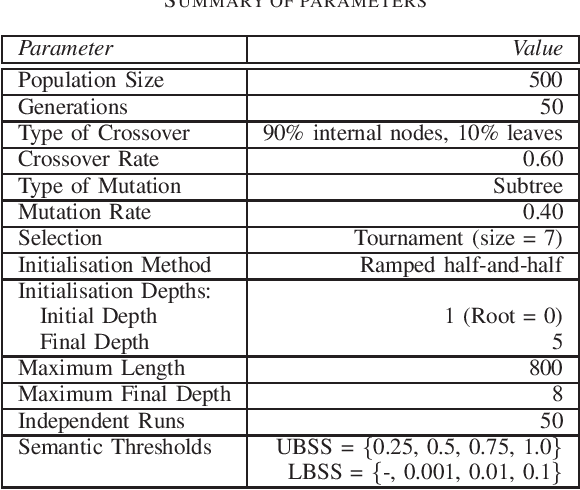

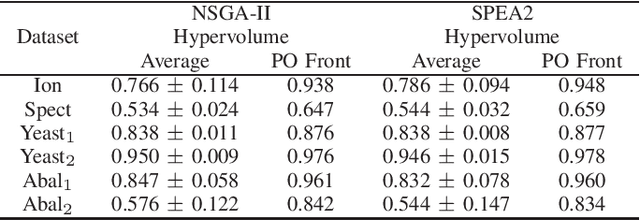

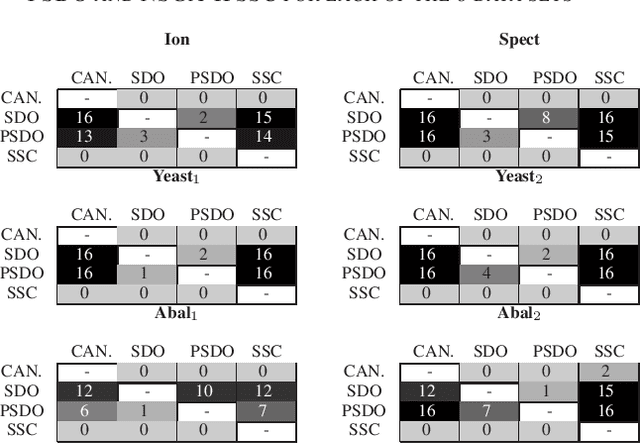

Abstract:Semantics is a growing area of research in Genetic Programming (GP) and refers to the behavioural output of a Genetic Programming individual when executed. This research expands upon the current understanding of semantics by proposing a new approach: Semantic-based Distance as an additional criteriOn (SDO), in the thus far somewhat under-researched area of semantics in Multi-objective GP (MOGP). Our work included an expansive analysis of GP in terms of performance and diversity metrics, using two additional semantic-based approaches, namely Semantic Similarity-based Crossover (SSC) and Semantic-based Crowding Distance (SCD). Each approach is integrated into two evolutionary multi-objective (EMO) frameworks: Non-dominated Sorting Genetic Algorithm II (NSGA-II) and the Strength Pareto Evolutionary Algorithm 2 (SPEA2), and along with the three semantic approaches, the canonical forms of NSGA-II and SPEA2 are rigorously compared. Using highly-unbalanced binary classification datasets, we demonstrated that the newly proposed approach of SDO consistently generated more non-dominated solutions, with better diversity and improved hypervolume results.

Neuroevolutionary Multi-objective approaches to Trajectory Prediction in Autonomous Vehicles

May 06, 2022

Abstract:The incentive for using Evolutionary Algorithms (EAs) for the automated optimization and training of deep neural networks (DNNs), a process referred to as neuroevolution, has gained momentum in recent years. The configuration and training of these networks can be posed as optimization problems. Indeed, most of the recent works on neuroevolution have focused their attention on single-objective optimization. Moreover, from the little research that has been done at the intersection of neuroevolution and evolutionary multi-objective optimization (EMO), all the research that has been carried out has focused predominantly on the use of one type of DNN: convolutional neural networks (CNNs), using well-established standard benchmark problems such as MNIST. In this work, we make a leap in the understanding of these two areas (neuroevolution and EMO), regarded in this work as neuroevolutionary multi-objective, by using and studying a rich DNN composed of a CNN and Long-short Term Memory network. Moreover, we use a robust and challenging vehicle trajectory prediction problem. By using the well-known Non-dominated Sorting Genetic Algorithm-II, we study the effects of five different objectives, tested in categories of three, allowing us to show how these objectives have either a positive or detrimental effect in neuroevolution for trajectory prediction in autonomous vehicles.

Semantics in Multi-objective Genetic Programming

May 06, 2021

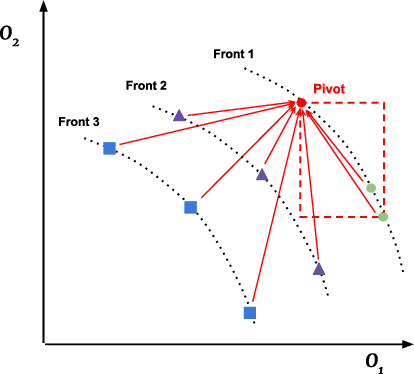

Abstract:Semantics has become a key topic of research in Genetic Programming (GP). Semantics refers to the outputs (behaviour) of a GP individual when this is run on a data set. The majority of works that focus on semantic diversity in single-objective GP indicate that it is highly beneficial in evolutionary search. Surprisingly, very little research has been conducted on semantics in Multi-objective GP (MOGP). In this work we make a leap beyond our understanding of semantics in MOGP and propose SDO: Semantic-based Distance as an additional criteriOn. This naturally encourages semantic diversity in MOGP. To do so, we find a pivot in the less dense region of the first Pareto front (most promising front). This is then used to compute a distance between the pivot and every individual in the population. The resulting distance is then used as an additional criterion to be optimised to favour semantic diversity. We also use two other semantic-based methods as baselines, called Semantic Similarity-based Crossover and Semantic-based Crowding Distance. Furthermore, we use NSGA-II and SPEA2 for comparison. We use highly unbalanced binary classification problems and consistently show how our proposed SDO approach produces more non-dominated solutions and better diversity, leading to statistically significantly better results, using hypervolume as the evaluation measure, compared to the other four methods.
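The SDO steps described in the abstract above (find a pivot in a sparse part of the first Pareto front, then measure every individual's semantic distance to it) might be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: sparsity is approximated here by the nearest-neighbour distance in objective space, the semantic distance is taken to be a mean absolute difference between output vectors, objectives are minimised, and all function names and data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def first_front(objs: List[Sequence[float]]) -> List[int]:
    """Indices of the non-dominated individuals (first Pareto front)."""
    return [
        i
        for i, a in enumerate(objs)
        if not any(dominates(b, a) for j, b in enumerate(objs) if j != i)
    ]


def pick_pivot(objs: List[Sequence[float]], front: List[int]) -> int:
    """Front member whose nearest neighbour on the front is furthest away.

    Assumption: nearest-neighbour distance stands in for 'less dense region'.
    """
    if len(front) == 1:
        return front[0]

    def nearest(i: int) -> float:
        return min(math.dist(objs[i], objs[j]) for j in front if j != i)

    return max(front, key=nearest)


def semantic_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """Assumed metric: mean absolute difference of outputs over fitness cases."""
    return sum(abs(x - y) for x, y in zip(p, q)) / len(p)


def sdo_criterion(
    objs: List[Sequence[float]], semantics: List[Sequence[float]]
) -> Tuple[int, List[float]]:
    """Return the pivot index and each individual's semantic distance to it."""
    pivot = pick_pivot(objs, first_front(objs))
    return pivot, [semantic_distance(semantics[pivot], s) for s in semantics]


# Made-up population: objective vectors and outputs on four fitness cases.
objs = [(1.0, 6.0), (2.0, 2.0), (3.0, 1.5), (4.0, 4.0)]
semantics = [[1, 0, 1, 0], [1, 0, 0, 0], [0, 1, 0, 1], [1, 0, 1, 0]]

pivot, distances = sdo_criterion(objs, semantics)
# pivot == 0, distances == [0.0, 0.25, 1.0, 0.0]
```

The returned distances would then be appended to each individual's objective vector as the additional criterion; how that criterion is oriented and combined with the other objectives is left out here because the abstract does not specify it.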

Semantic Neighborhood Ordering in Multi-objective Genetic Programming based on Decomposition

Feb 28, 2021

Abstract:Semantic diversity in Genetic Programming has proved to be highly beneficial in evolutionary search. We have witnessed a surge in the number of scientific works in the area, starting first in discrete spaces and moving then to continuous spaces. The vast majority of these works, however, have focused their attention on single-objective genetic programming paradigms, with a few exceptions focusing on Evolutionary Multi-objective Optimization (EMO). The latter works have used well-known robust algorithms, including the Non-dominated Sorting Genetic Algorithm II and the Strength Pareto Evolutionary Algorithm, both heavily influenced by the notion of Pareto dominance. These inspiring works led us to make a step forward in EMO by considering Multi-objective Evolutionary Algorithms Based on Decomposition (MOEA/D). We show, for the first time, how we can promote semantic diversity in MOEA/D in Genetic Programming.

Promoting Semantics in Multi-objective Genetic Programming based on Decomposition

Dec 08, 2020

Abstract:The study of semantics in Genetic Programming (GP) deals with the behaviour of a program given a set of inputs and has been widely reported in helping to promote diversity in GP for a range of complex problems, ultimately improving evolutionary search. The vast majority of these studies have focused their attention on single-objective GP, with just a few exceptions where Pareto-based dominance algorithms such as NSGA-II and SPEA2 have been used as frameworks to test whether highly popular semantics-based methods, such as Semantic Similarity-based Crossover (SSC), help or hinder evolutionary search. Surprisingly, it has been reported that the benefits exhibited by SSC in single-objective GP (SOGP) are not seen in Pareto-based dominance Multi-objective GP. In this work, we are interested in studying whether the same holds in Multi-objective Evolutionary Algorithms based on Decomposition (MOEA/D). By using the MNIST dataset, a well-known dataset used in the machine learning community, we show how SSC in MOEA/D promotes semantic diversity, yielding better results compared to when this is not present in canonical MOEA/D.

Semantic-based Distance Approaches in Multi-objective Genetic Programming

Oct 04, 2020

Abstract:Semantics in the context of Genetic Programming (GP) can be understood as the behaviour of a program given a set of inputs and has been well documented in improving performance of GP for a range of diverse problems. There have been a wide variety of different methods which have incorporated semantics into single-objective GP. The study of semantics in Multi-objective (MO) GP, however, has been limited and this paper aims at tackling this issue. More specifically, we conduct a comparison of three different forms of semantics in MOGP. One semantic-based method, (i) Semantic Similarity-based Crossover (SSC), is borrowed from single-objective GP, where the method has consistently been reported beneficial in evolutionary search. We also study two other methods, dubbed (ii) Semantic-based Distance as an additional criteriOn (SDO) and (iii) Pivot Similarity SDO. We empirically and consistently show how naturally handling semantic distance as an additional criterion to be optimised in MOGP leads to better performance when compared to canonical methods and SSC. Both semantic distance based approaches made use of a pivot, which is a reference point from the sparsest region of the search space, and it was found that individuals which were both semantically similar and dissimilar to this pivot were beneficial in promoting diversity. Moreover, we also show how the semantics successfully promoted in single-objective optimisation does not necessarily lead to a better performance when adopted in MOGP.
