Fengying Dang

Event-Triggered Model Predictive Control with Deep Reinforcement Learning for Autonomous Driving

Aug 22, 2022

Abstract: Event-triggered model predictive control (eMPC) is a popular optimal control method that aims to reduce the computation and/or communication burden of MPC. However, designing the event-trigger policy generally requires a priori knowledge of the closed-loop system behavior and of the communication characteristics. This paper addresses this challenge by proposing an efficient eMPC framework and demonstrating its successful implementation on autonomous vehicle path following. In this framework, a model-free reinforcement learning (RL) agent learns the optimal event-trigger policy without requiring complete knowledge of the system dynamics or the communication characteristics. Furthermore, techniques including a prioritized experience replay (PER) buffer and long short-term memory (LSTM) are employed to foster exploration and improve training efficiency. We apply the proposed framework with three deep RL algorithms, i.e., Double Q-learning (DDQN), Proximal Policy Optimization (PPO), and Soft Actor-Critic (SAC). Experimental results show that all three deep RL-based eMPC (deep-RL-eMPC) variants achieve better evaluation performance than the conventional threshold-based approach and the previous linear Q-based approach in autonomous path following. In particular, PPO-eMPC with LSTM and DDQN-eMPC with PER and LSTM obtain a superior balance between closed-loop control performance and event-trigger frequency. The associated code is open-sourced and available at: https://github.com/DangFengying/RL-based-event-triggered-MPC.
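To make the idea of an event-trigger policy concrete, the following is a minimal sketch of the conventional threshold-based trigger that the abstract names as a baseline. It is not taken from the linked repository. The function names, the scalar toy plant, and the proportional stand-in for the MPC solver are all assumptions made for illustration. The loop re-solves the optimization only when the measured state drifts from the stored prediction by more than a threshold, or when the stored plan runs out.

```python
import numpy as np


def should_trigger(x_actual, x_predicted, steps_since_solve, horizon, threshold):
    """Threshold rule: re-solve when the prediction error is large or the plan is used up."""
    error = float(np.linalg.norm(np.asarray(x_actual) - np.asarray(x_predicted)))
    return error > threshold or steps_since_solve >= horizon


def toy_solve_mpc(x, horizon):
    """Hypothetical stand-in for an MPC solver (NOT a real optimizer).

    Returns `horizon` controls and `horizon + 1` predicted states,
    using the nominal model x_next = x + u with u = -0.5 * x.
    """
    controls, predictions = [], [np.array(x, dtype=float)]
    for _ in range(horizon):
        u = -0.5 * predictions[-1]
        controls.append(u)
        predictions.append(predictions[-1] + u)
    return controls, predictions


def run_event_triggered_loop(solve_mpc, step_plant, x0, n_steps, horizon, threshold):
    """Apply stored controls open-loop and re-solve only when an event fires."""
    x = np.array(x0, dtype=float)
    controls, predictions = solve_mpc(x, horizon)
    k = 0          # index into the current plan
    n_solves = 1   # counts how often the (expensive) solver was called
    for _ in range(n_steps):
        if should_trigger(x, predictions[k], k, horizon, threshold):
            controls, predictions = solve_mpc(x, horizon)
            k = 0
            n_solves += 1
        x = step_plant(x, controls[k])
        k += 1
    return x, n_solves


def nominal_plant(x, u):
    """Plant that matches the solver's model exactly (no disturbance)."""
    return x + u


def disturbed_plant(x, u):
    """Plant with a constant disturbance (assumed value) the model does not know about."""
    return x + u + 0.05


if __name__ == "__main__":
    for plant in (nominal_plant, disturbed_plant):
        _, solves = run_event_triggered_loop(
            toy_solve_mpc, plant, x0=[1.0], n_steps=10, horizon=5, threshold=0.01
        )
        print(plant.__name__, "solver calls:", solves)
```

In a learned variant such as the one the abstract describes, the hand-tuned rule in `should_trigger` would be replaced by the RL agent's binary action, with the reward trading off tracking performance against how often the solver is called.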

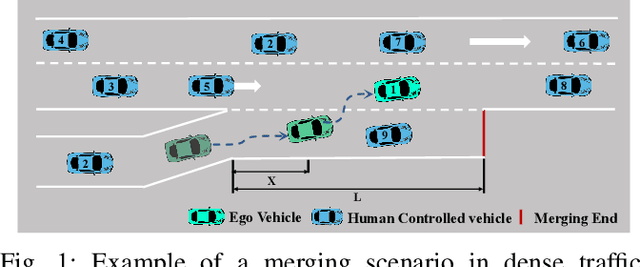

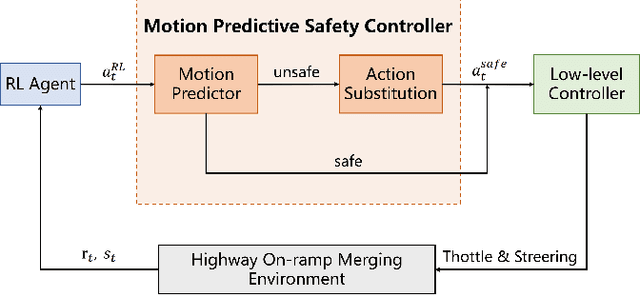

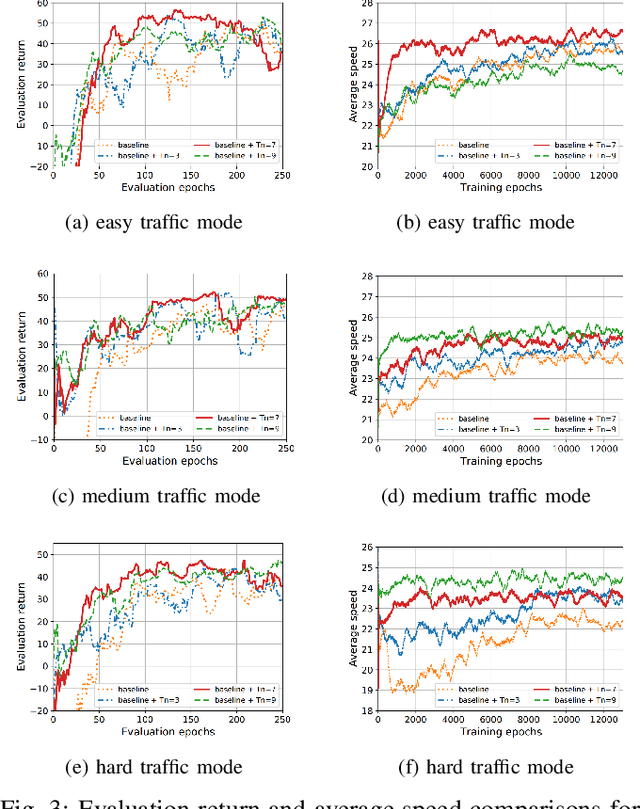

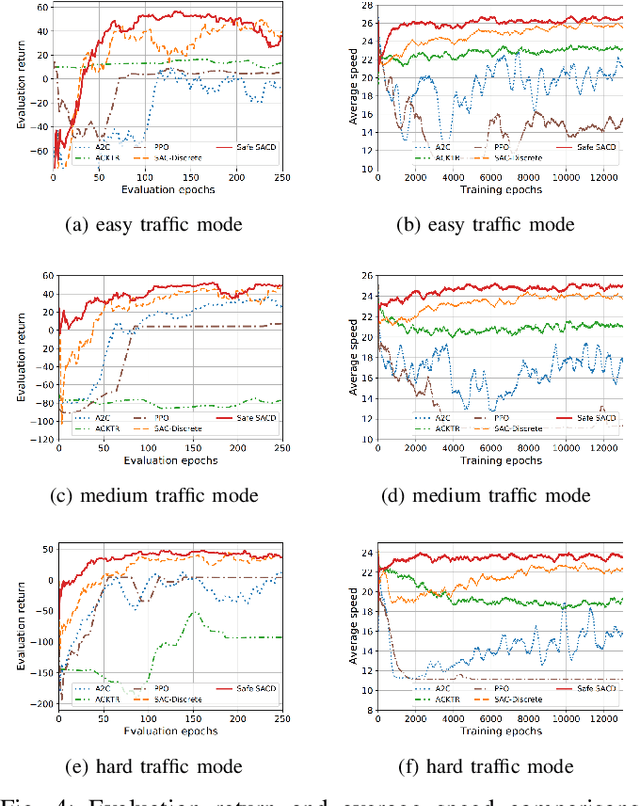

Autonomous Highway Merging in Mixed Traffic Using Reinforcement Learning and Motion Predictive Safety Controller

Apr 03, 2022

Abstract: Deep reinforcement learning (DRL) has great potential for solving complex decision-making problems in autonomous driving, especially in mixed-traffic scenarios where autonomous vehicles and human-driven vehicles (HDVs) drive together. Safety is key during both the training and the deployment of reinforcement learning (RL) algorithms. In this paper, we formulate on-ramp merging as a Markov Decision Process (MDP) and solve it with an off-policy RL algorithm, i.e., Soft Actor-Critic for Discrete Action Settings (SAC-Discrete). In addition, a motion predictive safety controller, consisting of a motion predictor and an action substitution module, is proposed to ensure driving safety during both training and testing. The motion predictor estimates the trajectories of the ego vehicle and surrounding vehicles from kinematic models and predicts potential collisions. The action substitution module updates the actions based on a safety distance and replaces risky actions before sending them to the low-level controller. We train, evaluate, and test our approach on a gym-like highway simulation with three different levels of traffic modes. The simulation results show that, even at more challenging traffic densities, the proposed method significantly reduces the collision rate while maintaining high efficiency, outperforming several state-of-the-art baselines in the considered on-ramp merging scenarios. A video demo of the evaluation process can be found at: https://www.youtube.com/watch?v=7FvjbAM4oFw
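The safety controller described above can be illustrated with a small sketch. This is not the authors' implementation. It assumes a single lane, a one-dimensional kinematic model, surrounding vehicles that hold their speed, and a hypothetical three-action set with made-up acceleration values. The predictor rolls positions forward over a short horizon, and the substitution step replaces a proposed action whose predicted minimum gap falls below the safety distance.

```python
# Hypothetical discrete action set mapped to longitudinal accelerations (m/s^2).
ACTIONS = {"faster": 1.5, "idle": 0.0, "slower": -3.0}


def predict_positions(pos, vel, accel, horizon, dt):
    """Roll a 1-D kinematic model forward; speed is clipped at zero (no reversing)."""
    positions = []
    for _ in range(horizon):
        vel = max(0.0, vel + accel * dt)
        pos = pos + vel * dt
        positions.append(pos)
    return positions


def min_predicted_gap(ego, others, action, horizon, dt):
    """Smallest predicted distance between the ego vehicle and any other vehicle.

    `ego` and each entry of `others` are (position, velocity) tuples.
    Other vehicles are assumed to keep a constant velocity.
    """
    ego_traj = predict_positions(ego[0], ego[1], ACTIONS[action], horizon, dt)
    smallest = float("inf")
    for pos, vel in others:
        other_traj = predict_positions(pos, vel, 0.0, horizon, dt)
        gap = min(abs(o - e) for o, e in zip(other_traj, ego_traj))
        smallest = min(smallest, gap)
    return smallest


def substitute_action(ego, others, proposed, safe_distance, horizon=10, dt=0.2):
    """Keep the proposed action if it is predicted safe, else pick the safest alternative."""
    if min_predicted_gap(ego, others, proposed, horizon, dt) >= safe_distance:
        return proposed
    # Risky action: fall back to the action with the largest predicted minimum gap.
    return max(ACTIONS, key=lambda a: min_predicted_gap(ego, others, a, horizon, dt))


if __name__ == "__main__":
    ego = (0.0, 20.0)                # position (m), velocity (m/s)
    slow_leader = [(15.0, 10.0)]     # a slower vehicle close ahead
    print(substitute_action(ego, slow_leader, "faster", safe_distance=10.0))
```

Choosing the fallback by the largest predicted minimum gap is one simple option. A real controller would also need lateral motion, vehicle dimensions, and the merging geometry, none of which are modeled here.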
