Qianqian Liu

DiscoX: Benchmarking Discourse-Level Translation task in Expert Domains

Nov 14, 2025

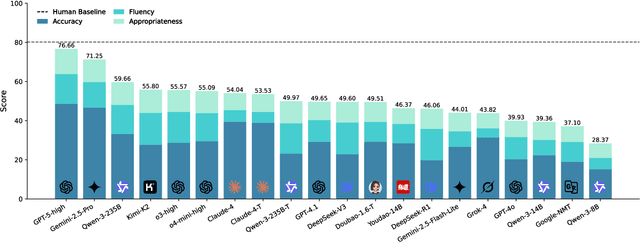

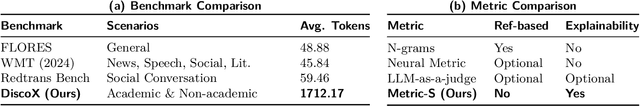

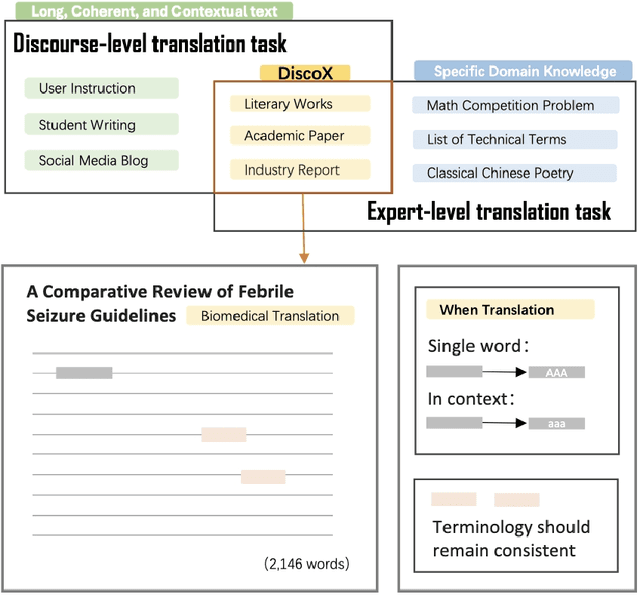

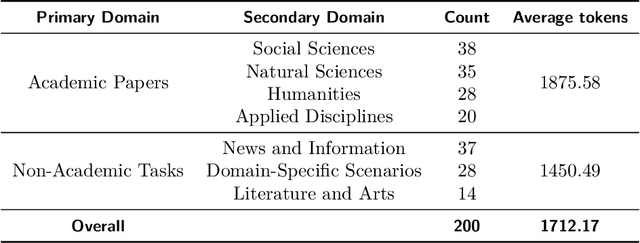

Abstract: The evaluation of discourse-level translation in expert domains remains inadequate, despite its centrality to knowledge dissemination and cross-lingual scholarly communication. While these translations demand discourse-level coherence and strict terminological precision, current evaluation methods predominantly focus on segment-level accuracy and fluency. To address this limitation, we introduce DiscoX, a new benchmark for discourse-level and expert-level Chinese-English translation. It comprises 200 professionally curated texts from 7 domains, with an average length exceeding 1700 tokens. To evaluate performance on DiscoX, we also develop Metric-S, a reference-free system that provides fine-grained automatic assessments across accuracy, fluency, and appropriateness. Metric-S demonstrates strong consistency with human judgments, significantly outperforming existing metrics. Our experiments reveal a substantial performance gap: even the most advanced LLMs still trail human experts on these tasks. This finding validates the difficulty of DiscoX and underscores the challenges that remain in achieving professional-grade machine translation. The proposed benchmark and evaluation system provide a robust framework for more rigorous evaluation, facilitating future advancements in LLM-based translation.
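
The abstract reports that Metric-S agrees strongly with human judgments but does not state, in this summary, which agreement statistic is used. As a generic illustration of metric meta-evaluation (not the paper's protocol), the minimal sketch below compares per-translation metric scores with human scores in two common ways: Pearson correlation and pairwise ranking agreement. The function names `pearson` and `pairwise_agreement` are hypothetical helpers, not part of DiscoX or Metric-S.

```python
from math import sqrt
from typing import Sequence


def pearson(metric: Sequence[float], human: Sequence[float]) -> float:
    """Pearson correlation between metric scores and human scores."""
    n = len(metric)
    if n != len(human) or n < 2:
        raise ValueError("need at least two paired scores")
    mm, mh = sum(metric) / n, sum(human) / n
    cov = sum((a - mm) * (b - mh) for a, b in zip(metric, human))
    sm = sqrt(sum((a - mm) ** 2 for a in metric))
    sh = sqrt(sum((b - mh) ** 2 for b in human))
    if sm == 0 or sh == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sm * sh)


def pairwise_agreement(metric: Sequence[float], human: Sequence[float]) -> float:
    """Fraction of item pairs the metric orders the same way as the human raters.

    Pairs tied in the human scores are skipped; ties in the metric scores
    count as disagreement.
    """
    if len(metric) != len(human):
        raise ValueError("score lists must have equal length")
    agree = total = 0
    for i in range(len(human)):
        for j in range(i + 1, len(human)):
            dh = human[i] - human[j]
            if dh == 0:
                continue
            total += 1
            if (metric[i] - metric[j]) * dh > 0:
                agree += 1
    if total == 0:
        raise ValueError("no untied human pairs")
    return agree / total
```

For instance, metric scores `[1, 3, 2]` against human scores `[1, 2, 3]` order two of the three pairs correctly, giving a pairwise agreement of 2/3.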

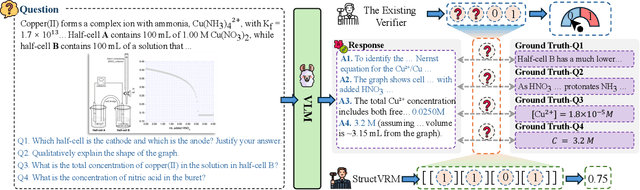

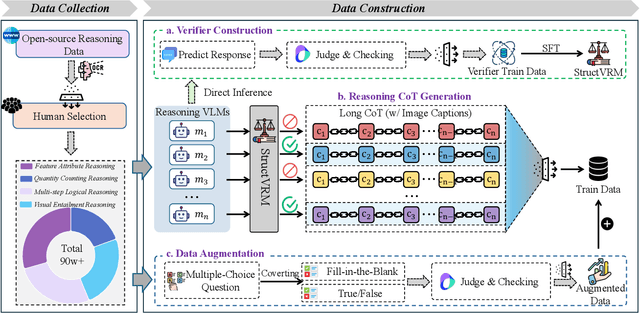

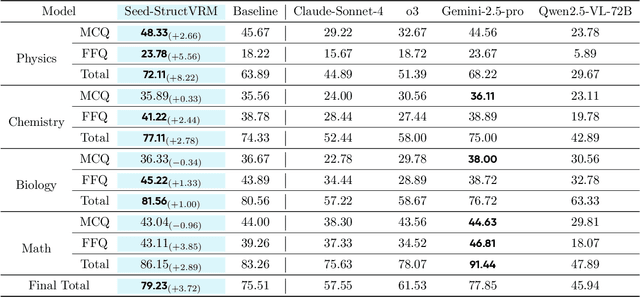

StructVRM: Aligning Multimodal Reasoning with Structured and Verifiable Reward Models

Aug 07, 2025

Abstract: Existing Vision-Language Models often struggle with complex, multi-question reasoning tasks where partial correctness is crucial for effective learning. Traditional reward mechanisms, which provide a single binary score for an entire response, are too coarse to guide models through intricate problems with multiple sub-parts. To address this, we introduce StructVRM, a method that aligns multimodal reasoning with Structured and Verifiable Reward Models. At its core is a model-based verifier trained to provide fine-grained, sub-question-level feedback, assessing semantic and mathematical equivalence rather than relying on rigid string matching. This allows for nuanced, partial credit scoring in previously intractable problem formats. Extensive experiments demonstrate the effectiveness of StructVRM. Our trained model, Seed-StructVRM, achieves state-of-the-art performance on six out of twelve public multimodal benchmarks and our newly curated, high-difficulty STEM-Bench. The success of StructVRM validates that training with structured, verifiable rewards is a highly effective approach for advancing the capabilities of multimodal models in complex, real-world reasoning domains.
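
The contrast between a single binary score and sub-question-level partial credit can be illustrated with a minimal sketch. The verifier here, `toy_verifier`, is a hypothetical rule-based stand-in (numeric tolerance plus normalized string matching); StructVRM itself uses a trained model-based verifier that also judges semantic and mathematical equivalence, which this toy check does not attempt. The reward functions are likewise illustrative, not the paper's implementation.

```python
from typing import Callable, List, Sequence

Verifier = Callable[[str, str], bool]


def toy_verifier(pred: str, ref: str, tol: float = 1e-6) -> bool:
    """Hypothetical stand-in for a model-based verifier: numeric equivalence
    within a tolerance, otherwise case- and whitespace-insensitive equality."""
    try:
        return abs(float(pred) - float(ref)) <= tol
    except ValueError:
        return pred.strip().lower() == ref.strip().lower()


def _verdicts(preds: Sequence[str], refs: Sequence[str], verify: Verifier) -> List[bool]:
    # One verdict per sub-question; the answer lists must align one-to-one.
    if len(preds) != len(refs) or not preds:
        raise ValueError("need one prediction per sub-question")
    return [verify(p, r) for p, r in zip(preds, refs)]


def binary_reward(preds: Sequence[str], refs: Sequence[str],
                  verify: Verifier = toy_verifier) -> float:
    """Coarse reward: 1.0 only if every sub-answer is judged correct."""
    return float(all(_verdicts(preds, refs, verify)))


def partial_credit_reward(preds: Sequence[str], refs: Sequence[str],
                          verify: Verifier = toy_verifier) -> float:
    """Structured reward: the fraction of sub-questions judged correct."""
    verdicts = _verdicts(preds, refs, verify)
    return sum(verdicts) / len(verdicts)
```

With sub-answers `["0.50", "4", "blue"]` against references `["0.5", "3", "Blue"]`, the binary reward is 0.0 while the partial-credit reward is 2/3, so a response that gets most sub-parts right still receives a learning signal.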

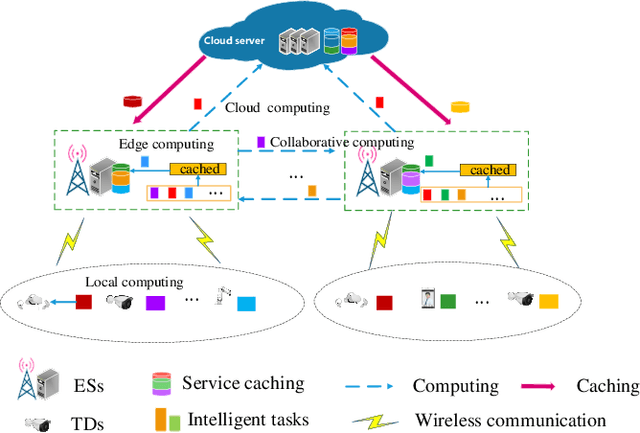

Joint Service Caching, Communication and Computing Resource Allocation in Collaborative MEC Systems: A DRL-based Two-timescale Approach

Jul 19, 2023

Abstract: Meeting the strict Quality of Service (QoS) requirements of terminals poses a significant challenge for Multi-access Edge Computing (MEC) systems because of their limited multidimensional resources. To address this challenge, we propose a collaborative MEC framework that facilitates resource sharing between edge servers, with the aim of maximizing the long-term QoS and reducing the cache switching cost through the joint optimization of service caching, collaborative offloading, and computation and communication resource allocation. The dual-timescale nature of these decisions and the temporal recurrence relationship between service caching and the other resource allocation decisions make the problem even more challenging to solve. To tackle it, we propose a deep reinforcement learning (DRL)-based dual-timescale scheme, called DGL-DDPG, which is composed of a short-term genetic algorithm (GA) and a long short-term memory network-based deep deterministic policy gradient (LSTM-DDPG). In doing so, we reformulate the optimization problem as a Markov decision process (MDP) in which the small-timescale resource allocation decisions generated by an improved GA are taken as the states and fed into a centralized LSTM-DDPG agent, which generates the large-timescale service caching decisions. Simulation results demonstrate that our proposed algorithm outperforms the baseline algorithms in terms of the average QoS and cache switching cost.
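
The two-timescale structure described above can be sketched as a toy loop: within each frame, a small genetic algorithm makes per-slot allocation decisions, and once per frame a slow policy picks which services to cache and pays a switching cost for every newly cached service. This is a simplified sketch under stated assumptions, not DGL-DDPG: the slow policy is a hypothetical popularity heuristic standing in for the LSTM-DDPG agent, and the QoS proxy (`served`), the Zipf-like demand model, and the single shared capacity are all illustrative choices.

```python
import random
from typing import List, Sequence, Set, Tuple


def served(alloc: Sequence[float], demands: Sequence[float]) -> float:
    """Toy QoS proxy (an assumption): total demand served, capped by each share."""
    return sum(min(a, d) for a, d in zip(alloc, demands))


def _normalize(v: List[float]) -> List[float]:
    total = sum(v)
    return [x / total for x in v] if total > 0 else [1.0 / len(v)] * len(v)


def ga_allocate(demands: Sequence[float], rng: random.Random, pop_size: int = 20,
                generations: int = 30, sigma: float = 0.1) -> List[float]:
    """Small timescale: toy GA splitting one unit of edge capacity across services."""
    n = len(demands)
    if n == 0:
        return []
    pop = [_normalize([rng.random() for _ in range(n)]) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda a: served(a, demands), reverse=True)
        parents = pop[: pop_size // 2]  # truncation selection; elites survive unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            p1, p2 = rng.sample(parents, 2)
            # Arithmetic crossover plus Gaussian mutation, repaired to a valid split.
            child = [(x + y) / 2 + rng.gauss(0.0, sigma) for x, y in zip(p1, p2)]
            children.append(_normalize([max(0.0, c) for c in child]))
        pop = parents + children
    return max(pop, key=lambda a: served(a, demands))


def run_two_timescale(frames: int, slots_per_frame: int, n_services: int,
                      cache_size: int, seed: int = 0) -> Tuple[float, int]:
    rng = random.Random(seed)
    popularity = [1.0 / (i + 1) for i in range(n_services)]  # Zipf-like (assumed)
    requested = [0.0] * n_services  # demand history observed by the slow policy
    cache: Set[int] = set()
    total_served, switch_cost = 0.0, 0
    for _ in range(frames):
        # Large timescale: hypothetical heuristic standing in for LSTM-DDPG;
        # cache the services with the most demand observed so far.
        ranked = sorted(range(n_services), key=lambda i: (-requested[i], i))
        new_cache = set(ranked[:cache_size])
        switch_cost += len(new_cache - cache)  # count newly cached services
        cache = new_cache
        cached = sorted(cache)
        for _ in range(slots_per_frame):
            demands = [rng.random() * p for p in popularity]
            for i, d in enumerate(demands):
                requested[i] += d
            local = [demands[i] for i in cached]  # only cached services run at the edge
            total_served += served(ga_allocate(local, rng), local)
    return total_served, switch_cost
```

Calling `run_two_timescale(frames=4, slots_per_frame=5, n_services=6, cache_size=3)` returns the accumulated toy QoS and the total cache switching cost. Keeping the slow decision fixed for a whole frame is what makes the switching cost a separate, large-timescale term.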

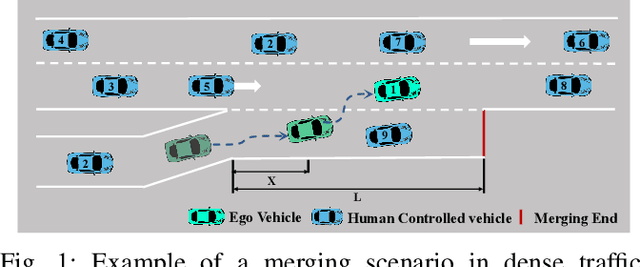

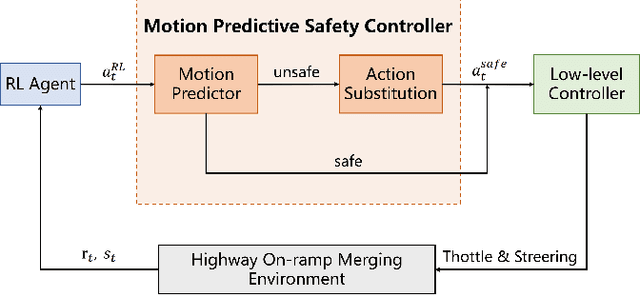

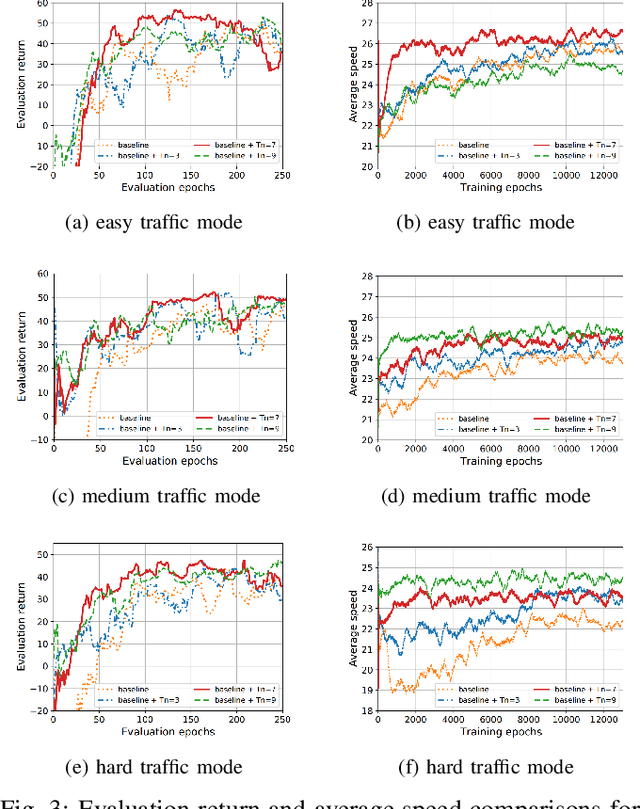

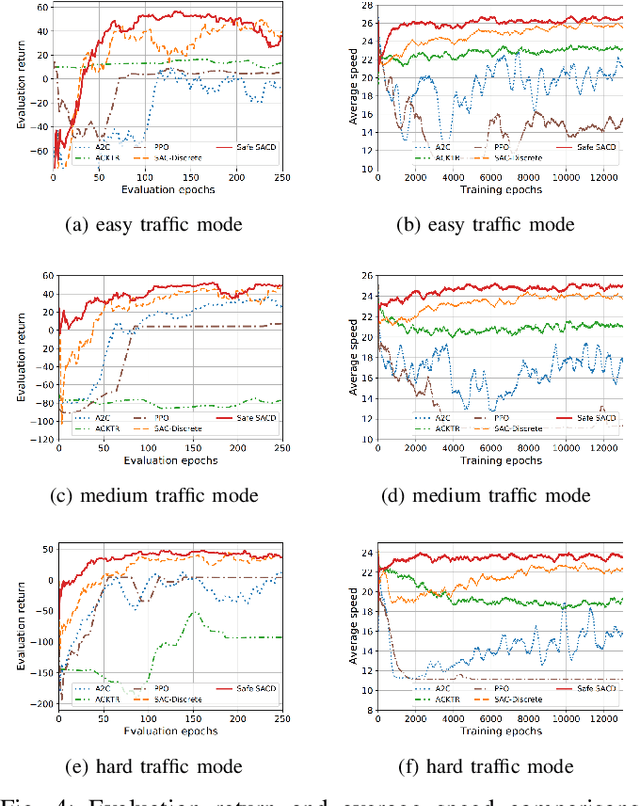

Autonomous Highway Merging in Mixed Traffic Using Reinforcement Learning and Motion Predictive Safety Controller

Apr 03, 2022

Abstract: Deep reinforcement learning (DRL) has great potential for solving complex decision-making problems in autonomous driving, especially in mixed-traffic scenarios where autonomous vehicles and human-driven vehicles (HDVs) drive together. Safety is key during both the learning and the deployment of reinforcement learning (RL) algorithms. In this paper, we formulate on-ramp merging as a Markov Decision Process (MDP) and solve it with an off-policy RL algorithm, Soft Actor-Critic for Discrete Action Settings (SAC-Discrete). In addition, we propose a motion predictive safety controller, consisting of a motion predictor and an action substitution module, to ensure driving safety during both training and testing. The motion predictor estimates the trajectories of the ego vehicle and surrounding vehicles from kinematic models and predicts potential collisions. The action substitution module updates the actions based on a safety distance and replaces risky actions before they are sent to the low-level controller. We train, evaluate, and test our approach in a gym-like highway simulation with three different traffic modes. The simulation results show that even at higher traffic densities, our proposed method significantly reduces the collision rate while maintaining high efficiency, outperforming several state-of-the-art baselines in the considered on-ramp merging scenarios. The video demo of the evaluation process can be found at: https://www.youtube.com/watch?v=7FvjbAM4oFw
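
The interplay between the motion predictor and the action substitution module can be sketched in a simplified multi-lane setting. This is a minimal sketch under loudly stated assumptions, not the authors' controller and not the simulator's API: the action names, accelerations, 2 s horizon, 10 m safety gap, fallback order, instantaneous lane changes, and constant-velocity prediction for surrounding vehicles are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Vehicle:
    lane: int   # lane index, 0 = leftmost (assumed convention)
    x: float    # longitudinal position (m)
    v: float    # speed (m/s)


# Hypothetical discrete action set; accelerations in m/s^2 are illustrative.
ACCEL: Dict[str, float] = {"FASTER": 2.0, "IDLE": 0.0, "SLOWER": -3.0,
                           "LANE_LEFT": 0.0, "LANE_RIGHT": 0.0}
LANE_SHIFT: Dict[str, int] = {"LANE_LEFT": -1, "LANE_RIGHT": 1}
FALLBACK_ORDER = ["IDLE", "SLOWER", "LANE_LEFT", "LANE_RIGHT"]  # assumed preference


def ego_position(ego: Vehicle, a: float, t: float) -> float:
    """Constant-acceleration kinematics; a braking vehicle stops instead of reversing."""
    if a < 0:
        t = min(t, ego.v / -a)
    return ego.x + ego.v * t + 0.5 * a * t * t


def is_risky(ego: Vehicle, others: Sequence[Vehicle], action: str, n_lanes: int,
             horizon: float = 2.0, dt: float = 0.1, safe_gap: float = 10.0) -> bool:
    """Motion predictor: roll the ego and surrounding vehicles forward and flag the
    action if any predicted gap in the target lane drops below safe_gap."""
    lane = ego.lane + LANE_SHIFT.get(action, 0)  # lane change treated as instantaneous
    if not 0 <= lane < n_lanes:
        return True  # leaving the road is treated as unsafe
    a = ACCEL[action]
    for k in range(int(round(horizon / dt)) + 1):
        t = k * dt
        x_ego = ego_position(ego, a, t)
        for o in others:
            # Surrounding vehicles: constant-velocity prediction (an assumption).
            if o.lane == lane and abs(x_ego - (o.x + o.v * t)) < safe_gap:
                return True
    return False


def substitute_action(proposed: str, ego: Vehicle, others: Sequence[Vehicle],
                      n_lanes: int) -> str:
    """Action substitution: keep the RL action if predicted safe, otherwise swap in
    the first safe fallback; brake if nothing is predicted safe."""
    if not is_risky(ego, others, proposed, n_lanes):
        return proposed
    for alt in FALLBACK_ORDER:
        if alt != proposed and not is_risky(ego, others, alt, n_lanes):
            return alt
    return "SLOWER"
```

For example, with the ego at 25 m/s closing on a 15 m/s leader 20 m ahead in the middle of three lanes, a proposed FASTER is flagged, IDLE and SLOWER also breach the 10 m gap within 2 s, so the sketch substitutes LANE_LEFT because that lane is free.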