Fadi Al Machot

Towards Sustainable Universal Deepfake Detection with Frequency-Domain Masking

Dec 08, 2025

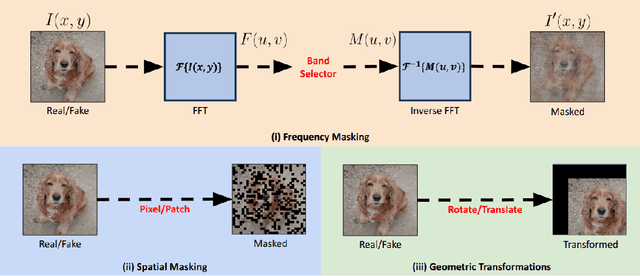

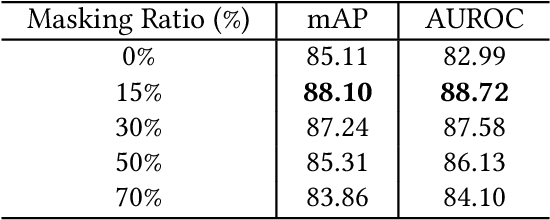

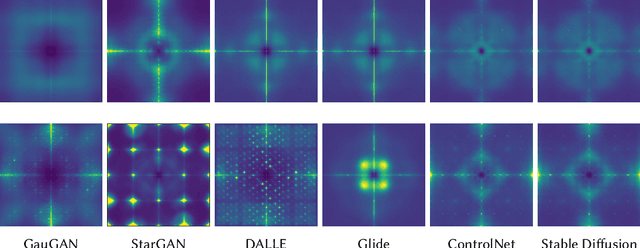

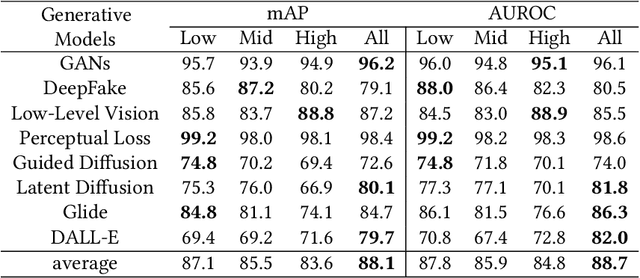

Abstract: Universal deepfake detection aims to identify AI-generated images across a broad range of generative models, including unseen ones. This requires robust generalization to new and unseen deepfakes, which emerge frequently, while minimizing computational overhead to enable large-scale deepfake screening, a critical objective in the era of Green AI. In this work, we explore frequency-domain masking as a training strategy for deepfake detectors. Unlike traditional methods that rely heavily on spatial features or large-scale pretrained models, our approach introduces random masking and geometric transformations, with a focus on frequency masking due to its superior generalization properties. We demonstrate that frequency masking not only enhances detection accuracy across diverse generators but also maintains performance under significant model pruning, offering a scalable and resource-conscious solution. Our method achieves state-of-the-art generalization on GAN- and diffusion-generated image datasets and exhibits consistent robustness under structured pruning. These results highlight the potential of frequency-based masking as a practical step toward sustainable and generalizable deepfake detection. Code and models are available at: [https://github.com/chandlerbing65nm/FakeImageDetection](https://github.com/chandlerbing65nm/FakeImageDetection).
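
As a rough illustration of the frequency masking idea in this abstract, the sketch below zeroes a random fraction of an image's 2D Fourier coefficients and transforms the result back to pixel space. The function name `frequency_mask`, the `mask_ratio` parameter, and the independent per-frequency masking scheme are assumptions made for illustration; they are not taken from the linked repository, which may mask the spectrum differently.

```python
import numpy as np


def frequency_mask(image, mask_ratio=0.15, rng=None):
    """Zero a random fraction of 2D Fourier coefficients (illustrative sketch).

    image: float array of shape (H, W) or (H, W, C).
    mask_ratio: assumed hyperparameter, probability of dropping each frequency.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Forward 2D FFT over the two spatial axes (channels, if any, are kept apart).
    spectrum = np.fft.fft2(image, axes=(0, 1))

    # Keep-mask over spatial frequencies, shared across channels.
    keep = rng.random(image.shape[:2]) >= mask_ratio
    if image.ndim == 3:
        keep = keep[..., None]

    # Random masking breaks conjugate symmetry, so the inverse transform is
    # complex in general; this sketch simply keeps the real part.
    return np.fft.ifft2(spectrum * keep, axes=(0, 1)).real
```

Applied as a training-time augmentation, a call such as `frequency_mask(img, mask_ratio=0.15)` would perturb each training image before it reaches the detector. With `mask_ratio=0.0` the function returns the input unchanged up to floating-point error.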

Symbolic-AI-Fusion Deep Learning (SAIF-DL): Encoding Knowledge into Training with Answer Set Programming Loss Penalties by a Novel Loss Function Approach

Nov 13, 2024

Abstract: This paper presents a hybrid methodology that enhances the training process of deep learning (DL) models by embedding domain expert knowledge using ontologies and answer set programming (ASP). By integrating these symbolic AI methods, we encode domain-specific constraints, rules, and logical reasoning directly into the model's learning process, thereby improving both performance and trustworthiness. The proposed approach is flexible and applicable to both regression and classification tasks, demonstrating generalizability across various fields such as healthcare, autonomous systems, engineering, and battery manufacturing applications. Unlike other state-of-the-art methods, the strength of our approach lies in its scalability across different domains. The design allows for the automation of the loss function by simply updating the ASP rules, making the system highly scalable and user-friendly. This facilitates seamless adaptation to new domains without significant redesign, offering a practical solution for integrating expert knowledge into DL models in industrial settings such as battery manufacturing.
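
The general shape of a rule-penalized loss can be sketched as a task loss plus a weighted penalty for rule violations. In the sketch below, plain Python callables stand in for ASP rules; the paper's actual penalty is derived from ASP reasoning, which is not reproduced here. All names (`penalized_loss`, `rule_penalty`, `in_unit_interval`, `weight`) are hypothetical and chosen only for illustration.

```python
def mse(predictions, targets):
    """Mean squared error, used here as the base task loss."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def rule_penalty(predictions, rules):
    """Average violation magnitude; each rule maps a prediction to a value >= 0."""
    return sum(rule(p) for p in predictions for rule in rules) / len(predictions)


def penalized_loss(predictions, targets, rules, weight=1.0):
    """Task loss plus a weighted penalty for violated domain rules."""
    return mse(predictions, targets) + weight * rule_penalty(predictions, rules)


def in_unit_interval(p):
    """Hypothetical domain rule: a prediction should lie in [0, 1]."""
    return max(0.0, -p) + max(0.0, p - 1.0)


loss = penalized_loss([0.5, 1.5], [0.5, 1.0], [in_unit_interval], weight=2.0)
```

Under this design, adapting to a new domain means swapping the list of rules while the training loop and base loss stay the same, which mirrors the abstract's point about updating rules rather than redesigning the loss.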

Building Trustworthy AI: Transparent AI Systems via Large Language Models, Ontologies, and Logical Reasoning (TranspNet)

Nov 13, 2024

Abstract: Growing concerns over the lack of transparency in AI, particularly in high-stakes fields like healthcare and finance, drive the need for explainable and trustworthy systems. While Large Language Models (LLMs) perform exceptionally well in generating accurate outputs, their "black box" nature poses significant challenges to transparency and trust. To address this, the paper proposes the TranspNet pipeline, which integrates symbolic AI with LLMs. By leveraging domain expert knowledge, retrieval-augmented generation (RAG), and formal reasoning frameworks like Answer Set Programming (ASP), TranspNet enhances LLM outputs with structured reasoning and verification. This approach ensures that AI systems deliver not only accurate but also explainable and trustworthy results, meeting regulatory demands for transparency and accountability. TranspNet provides a comprehensive solution for developing AI systems that are reliable and interpretable, making it suitable for real-world applications where trust is critical.

RevCD -- Reversed Conditional Diffusion for Generalized Zero-Shot Learning

Aug 31, 2024

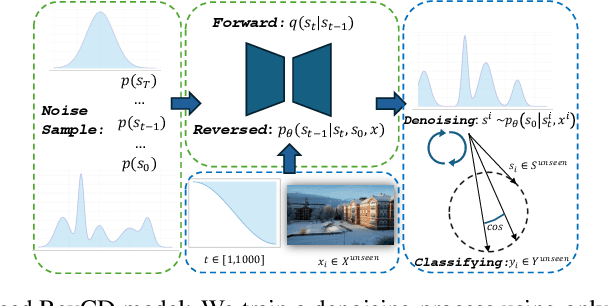

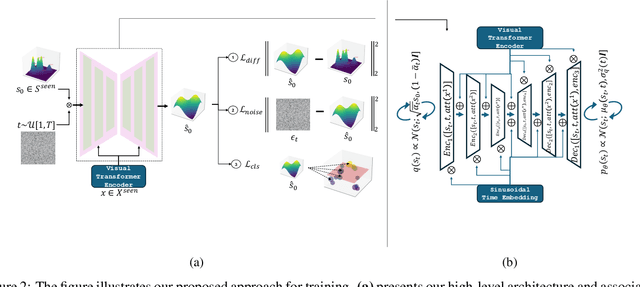

Abstract: In Generalized Zero-Shot Learning (GZSL), we aim to recognize both seen and unseen categories using a model trained only on seen categories. In computer vision, this translates into a classification problem, where knowledge from seen categories is transferred to unseen categories by exploiting the relationships between visual features and available semantic information, such as text corpora or manual annotations. However, learning this joint distribution is costly and requires one-to-one training with corresponding semantic information. We present a reversed conditional Diffusion-based model (RevCD) that mitigates this issue by generating semantic features synthesized from visual inputs by leveraging Diffusion models' conditional mechanisms. Our RevCD model consists of a cross Hadamard-Addition embedding of a sinusoidal time schedule and a multi-headed visual transformer for attention-guided embeddings. The proposed approach introduces three key innovations. First, we reverse the process of generating semantic space based on visual data, introducing a novel loss function that facilitates more efficient knowledge transfer. Second, we apply Diffusion models to zero-shot learning - a novel approach that exploits their strengths in capturing data complexity. Third, we demonstrate our model's performance through a comprehensive cross-dataset evaluation. The complete code will be available on GitHub.
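
For readers unfamiliar with sinusoidal time schedules, the sketch below shows the sinusoidal timestep embedding commonly used in diffusion models. It covers only the time-encoding ingredient; how RevCD combines it with visual features through its cross Hadamard-Addition embedding is not specified in the abstract and is not attempted here. The function name and the `max_period` default are assumptions.

```python
import math


def sinusoidal_embedding(t, dim, max_period=10000.0):
    """Map a diffusion timestep t to a dim-sized vector of sines and cosines.

    dim is assumed to be even: the first half holds sines, the second cosines.
    """
    half = dim // 2
    # Geometrically spaced frequencies from 1 down towards 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


embedding = sinusoidal_embedding(t=0, dim=8)
```

At `t=0` every sine term is 0 and every cosine term is 1, so the embedding above is four zeros followed by four ones; larger timesteps yield distinct vectors that a conditional model can use to tell noise levels apart.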

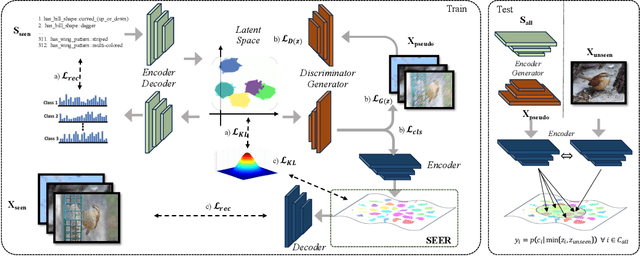

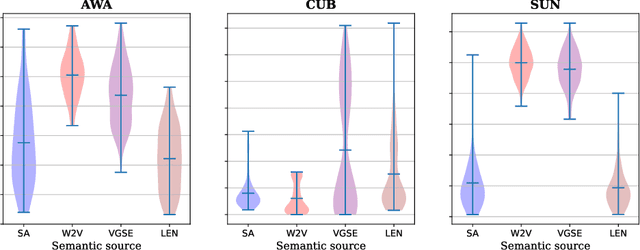

SEER-ZSL: Semantic Encoder-Enhanced Representations for Generalized Zero-Shot Learning

Dec 20, 2023

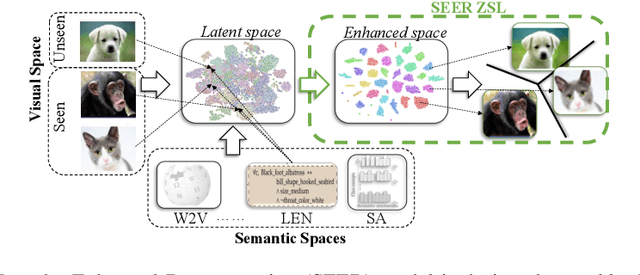

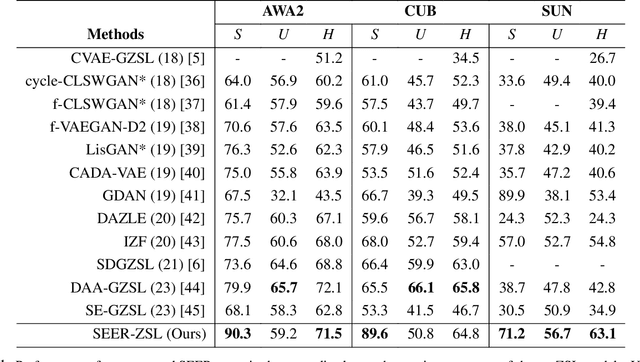

Abstract: Generalized Zero-Shot Learning (GZSL) recognizes unseen classes by transferring knowledge from the seen classes, depending on the inherent interactions between visual and semantic data. However, the discrepancy between well-prepared training data and unpredictable real-world test scenarios remains a significant challenge. This paper introduces a dual strategy to address the generalization gap. Firstly, we incorporate semantic information through an innovative encoder. This encoder effectively integrates class-specific semantic information by targeting the performance disparity, enhancing the produced features to enrich the semantic space for class-specific attributes. Secondly, we refine our generative capabilities using a novel compositional loss function. This approach generates discriminative classes, effectively classifying both seen and unseen classes. In addition, we extend the exploitation of the learned latent space by utilizing controlled semantic inputs, ensuring the robustness of the model in varying environments. This approach yields a model that outperforms the state-of-the-art models in terms of both generalization and diverse settings, notably without requiring hyperparameter tuning or domain-specific adaptations. We also propose a set of novel evaluation metrics to provide a more detailed assessment of the reliability and reproducibility of the results. The complete code is made available on https://github.com/william-heyden/SEER-ZeroShotLearning/.

Bridging Logic and Learning: A Neural-Symbolic Approach for Enhanced Reasoning in Neural Models

Dec 18, 2023

Abstract: Neural-symbolic learning, an intersection of neural networks and symbolic reasoning, aims to blend neural networks' learning capabilities with symbolic AI's interpretability and reasoning. This paper introduces an approach designed to improve the performance of neural models in learning reasoning tasks. It achieves this by integrating Answer Set Programming (ASP) solvers and domain-specific expertise, which is an approach that diverges from traditional complex neural-symbolic models. In this paper, a shallow artificial neural network (ANN) is specifically trained to solve Sudoku puzzles with minimal training data. The model has a unique loss function that integrates losses calculated using the ASP solver outputs, effectively enhancing its training efficiency. Most notably, the model shows a significant improvement in solving Sudoku puzzles using only 12 puzzles for training and testing without hyperparameter tuning. This advancement indicates that the model's enhanced reasoning capabilities have practical applications, extending well beyond Sudoku puzzles to potentially include a variety of other domains. The code can be found on GitHub: https://github.com/Fadi2200/ASPEN.

A Comprehensive Review of Automated Data Annotation Techniques in Human Activity Recognition

Jul 12, 2023

Abstract: Human Activity Recognition (HAR) has become one of the leading research topics of the last decade. As sensing technologies have matured and their economic costs have declined, a host of novel applications, e.g., in healthcare, industry, sports, and daily life activities have become popular. The design of HAR systems requires different time-consuming processing steps, such as data collection, annotation, and model training and optimization. In particular, data annotation represents the most labor-intensive and cumbersome step in HAR, since it requires extensive and detailed manual work from human annotators. Therefore, different methodologies concerning the automation of the annotation procedure in HAR have been proposed. The annotation problem occurs in different notions and scenarios, which all require individual solutions. In this paper, we provide the first systematic review on data annotation techniques for HAR. By grouping existing approaches into classes and providing a taxonomy, our goal is to support the decision on which techniques can be beneficially used in a given scenario.

An Integral Projection-based Semantic Autoencoder for Zero-Shot Learning

Jun 26, 2023

Abstract: Zero-shot Learning (ZSL) classification categorizes or predicts classes (labels) that are not included in the training set (unseen classes). Recent works proposed different semantic autoencoder (SAE) models where the encoder embeds a visual feature vector space into the semantic space and the decoder reconstructs the original visual feature space. The objective is to learn the embedding by leveraging a source data distribution, which can be applied effectively to a different but related target data distribution. Such embedding-based methods are prone to domain shift problems and are vulnerable to biases. We propose an integral projection-based semantic autoencoder (IP-SAE) where an encoder projects a visual feature space concatenated with the semantic space into a latent representation space. We force the decoder to reconstruct the visual-semantic data space. Due to this constraint, the visual-semantic projection function preserves the discriminatory data included inside the original visual feature space. The enriched projection forces a more precise reconstitution of the visual feature space invariant to the domain manifold. Consequently, the learned projection function is less domain-specific and alleviates the domain shift problem. Our proposed IP-SAE model consolidates a symmetric transformation function for embedding and projection, and thus, it provides transparency for interpreting generative applications in ZSL. Therefore, in addition to outperforming state-of-the-art methods considering four benchmark datasets, our analytical approach allows us to investigate distinct characteristics of generative-based methods in the unique context of zero-shot inference.
