Martin Thomas Horsch

Building Trustworthy AI: Transparent AI Systems via Large Language Models, Ontologies, and Logical Reasoning (TranspNet)

Nov 13, 2024

Abstract:Growing concerns over the lack of transparency in AI, particularly in high-stakes fields like healthcare and finance, drive the need for explainable and trustworthy systems. While Large Language Models (LLMs) perform exceptionally well in generating accurate outputs, their "black box" nature poses significant challenges to transparency and trust. To address this, the paper proposes the TranspNet pipeline, which integrates symbolic AI with LLMs. By leveraging domain expert knowledge, retrieval-augmented generation (RAG), and formal reasoning frameworks like Answer Set Programming (ASP), TranspNet enhances LLM outputs with structured reasoning and verification. This approach ensures that AI systems deliver not only accurate but also explainable and trustworthy results, meeting regulatory demands for transparency and accountability. TranspNet provides a comprehensive solution for developing AI systems that are reliable and interpretable, making it suitable for real-world applications where trust is critical.

Symbolic-AI-Fusion Deep Learning (SAIF-DL): Encoding Knowledge into Training with Answer Set Programming Loss Penalties by a Novel Loss Function Approach

Nov 13, 2024

Abstract:This paper presents a hybrid methodology that enhances the training process of deep learning (DL) models by embedding domain expert knowledge using ontologies and answer set programming (ASP). By integrating these symbolic AI methods, we encode domain-specific constraints, rules, and logical reasoning directly into the model's learning process, thereby improving both performance and trustworthiness. The proposed approach is flexible and applicable to both regression and classification tasks, demonstrating generalizability across various fields such as healthcare, autonomous systems, engineering, and battery manufacturing applications. Unlike other state-of-the-art methods, the strength of our approach lies in its scalability across different domains. The design allows for the automation of the loss function by simply updating the ASP rules, making the system highly scalable and user-friendly. This facilitates seamless adaptation to new domains without significant redesign, offering a practical solution for integrating expert knowledge into DL models in industrial settings such as battery manufacturing.
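The idea of adding rule-based penalties to a training loss can be illustrated with a minimal sketch. This is not the paper's implementation: the abstract does not give the loss formula, so the additive form (data loss plus weighted mean violation), the names `penalized_loss` and `in_unit_interval`, and the `weight` parameter are all assumptions made here. Plain Python functions stand in for ASP rules, and no ASP solver is invoked.

```python
from typing import Callable, Sequence

# Hypothetical stand-in for an ASP rule: maps a prediction to a
# non-negative violation amount (0.0 means the rule is satisfied).
Rule = Callable[[float], float]


def mse(preds: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def penalized_loss(
    preds: Sequence[float],
    targets: Sequence[float],
    rules: Sequence[Rule],
    weight: float = 1.0,
) -> float:
    """Data loss plus a weighted mean rule-violation penalty (assumed form)."""
    penalty = sum(rule(p) for p in preds for rule in rules) / len(preds)
    return mse(preds, targets) + weight * penalty


def in_unit_interval(p: float) -> float:
    """Illustrative domain constraint: predictions should lie in [0, 1]."""
    return max(0.0, -p) + max(0.0, p - 1.0)


if __name__ == "__main__":
    # The second prediction (1.5) violates the constraint and is penalized.
    print(penalized_loss([0.5, 1.5], [0.5, 1.0], [in_unit_interval], weight=2.0))
```

In this sketch, changing the domain knowledge means changing only the list passed as `rules`, which loosely mirrors the abstract's point that the loss adapts when the rules are updated.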

Multiscale modelling and simulation of physical systems as semiosis

Apr 01, 2020

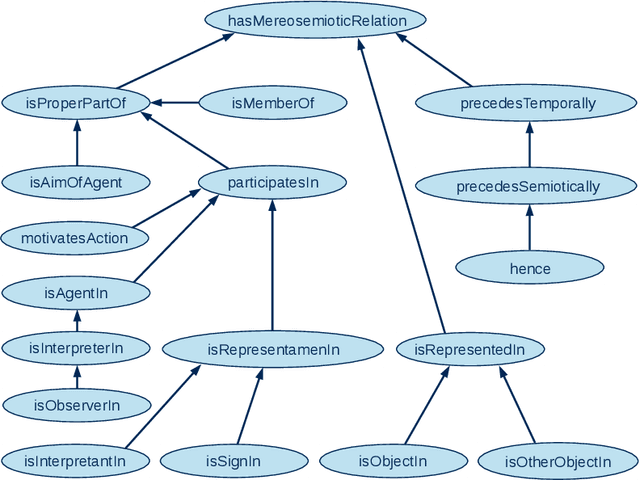

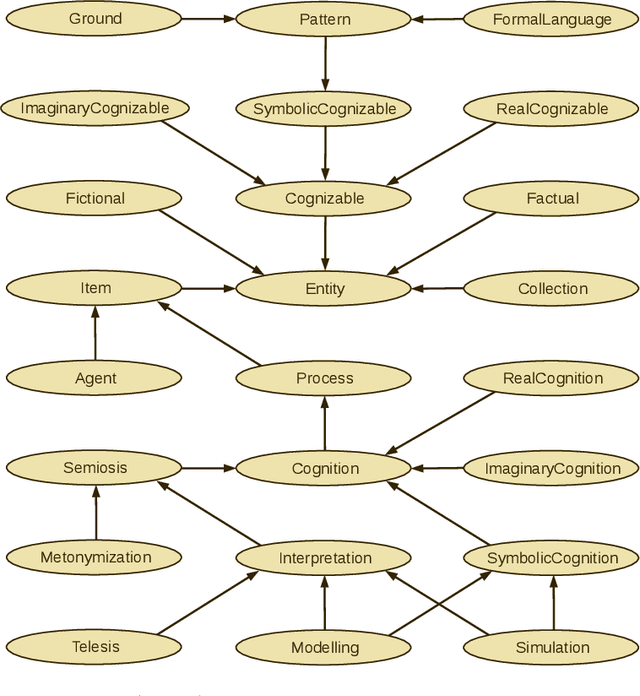

Abstract:It is explored how physicalist mereotopology and Peircean semiotics can be applied to represent models, simulations, and workflows in multiscale modelling and simulation of physical systems within a top-level ontology. It is argued that to conceptualize modelling and simulation in such a framework, two major types of semiosis need to be formalized and combined with each other: Interpretation, where a sign and a represented object yield an interpretant (another representamen for the same object), and metonymization, where the represented object and a sign are in a three-way relationship with another object to which the signification is transferred. It is outlined how the main elements of the pre-existing simulation workflow descriptions MODA and OSMO, i.e., use cases, models, solvers, and processors, can be aligned with a top-level ontology that implements this ontological paradigm, which is here referred to as mereosemiotic physicalism. Implications are discussed for the development of the European Materials and Modelling Ontology, an implementation of mereosemiotic physicalism.

Ontologies for the Virtual Materials Marketplace

Dec 03, 2019

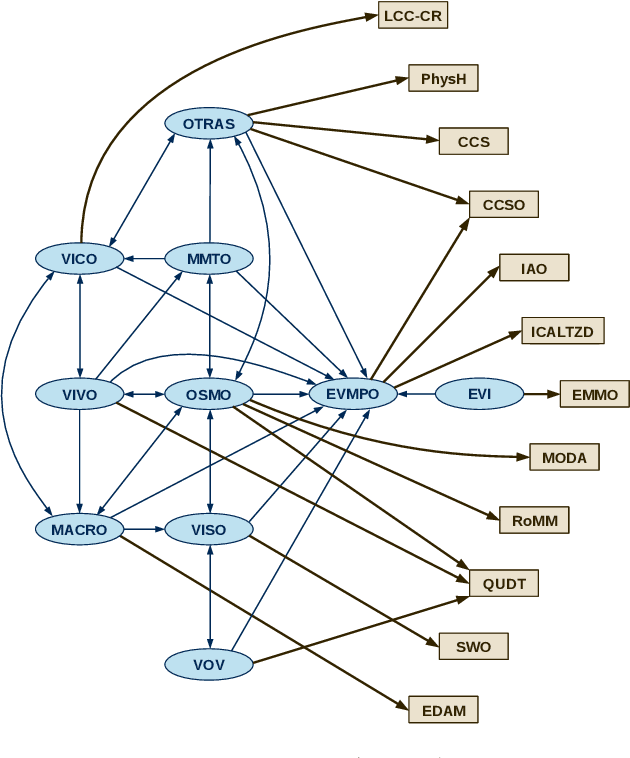

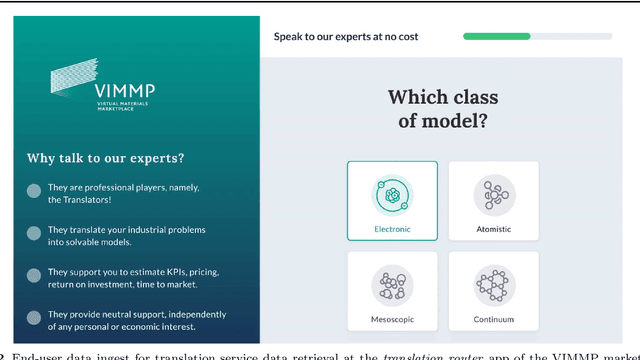

Abstract:The Virtual Materials Marketplace (VIMMP) project, which develops an open platform for providing and accessing services related to materials modelling, is presented with a focus on its ontology development and data technology aspects. Within VIMMP, a system of marketplace-level ontologies is developed to characterize services, models, and interactions between users; the European Materials and Modelling Ontology (EMMO), which is based on mereotopology following Varzi and semiotics following Peirce, is employed as a top-level ontology. The ontologies are used to annotate data that are stored in the ZONTAL Space component of VIMMP and to support the ingest and retrieval of data and metadata at the VIMMP marketplace frontend.
