Fabrizia Auletta

Predicting and Understanding Human Action Decisions during Skillful Joint-Action via Machine Learning and Explainable-AI

Jun 06, 2022

Abstract: This study uses supervised machine learning (SML) and explainable artificial intelligence (AI) to model, predict, and understand human decision-making during skillful joint-action. Long short-term memory networks were trained to predict the target selection decisions of expert and novice actors completing a dyadic herding task. Results revealed that the trained models were expertise-specific: they accurately predicted the target selection decisions of expert and novice herders, and did so at timescales that preceded an actor's conscious intent. To understand what differentiated the target selection decisions of expert and novice actors, we then employed the explainable-AI technique SHapley Additive exPlanations (SHAP) to identify the importance of informational features (variables) on model predictions. This analysis revealed that experts were more influenced than novices by information about the state of their co-herders. The utility of employing SML and explainable-AI techniques for investigating human decision-making is discussed.

Control-Tutored Reinforcement Learning

Dec 12, 2019

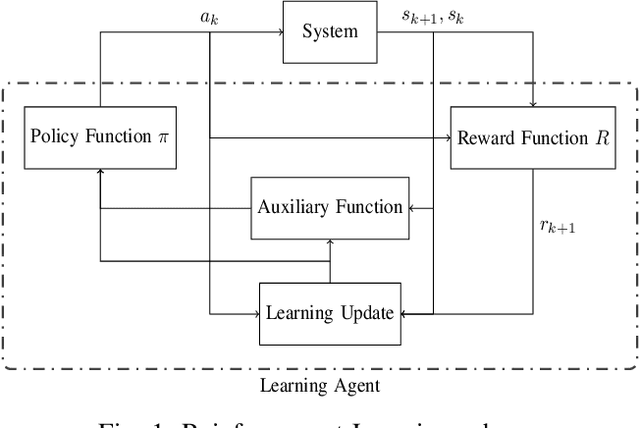

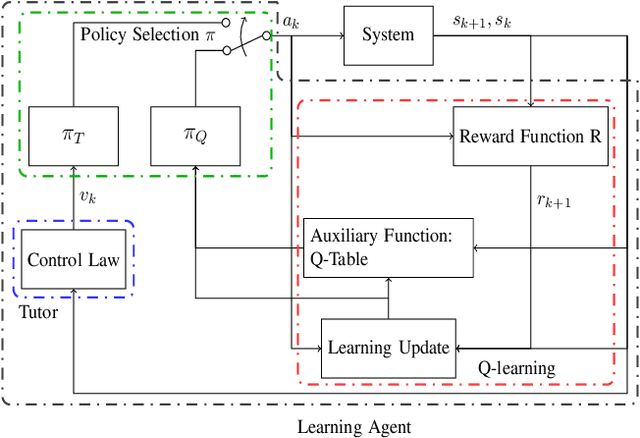

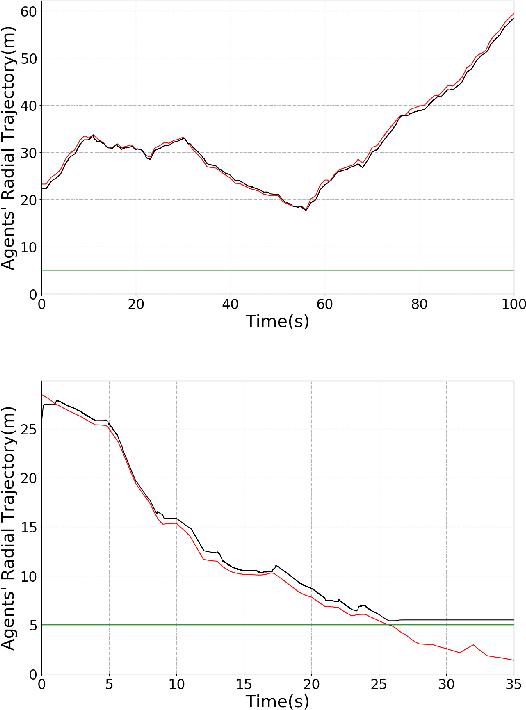

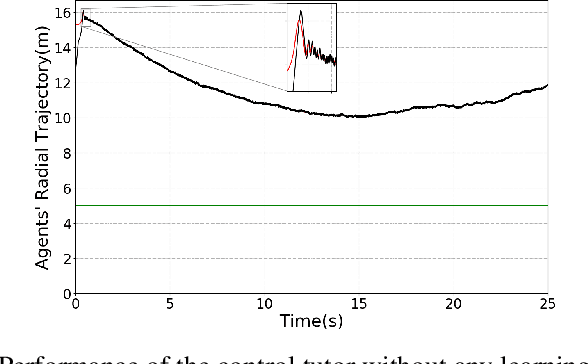

Abstract: We introduce a control-tutored reinforcement learning (CTRL) algorithm. The idea is to enhance tabular learning algorithms so as to improve exploration of the state space and substantially reduce learning times by leveraging limited knowledge of the plant, encoded in a tutoring model-based control strategy. We illustrate the benefits and effectiveness of this approach on the problem of controlling one or more agents to herd a set of free-roving target agents in the plane and contain them within a goal region.
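The abstract does not say how the tutor is combined with the learner, so the following is only a minimal sketch of one plausible reading, not the authors' algorithm. It assumes tabular Q-learning on a hypothetical one-dimensional toy plant (not the herding task), where exploratory steps ask a simple model-based controller for a suggestion with probability `tutor_prob` instead of always acting at random. All names (`step`, `tutor`, `select_action`, `train`, `tutor_prob`) and all parameter values are illustrative assumptions.

```python
import random

N_STATES = 11          # positions 0..10 on a line (hypothetical toy plant)
GOAL = 5               # goal position (assumption for illustration)
ACTIONS = (-1, 0, 1)   # move left, stay, move right


def step(state, action):
    """Toy plant: move along the line, clipped to the boundaries."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 0.0 if next_state == GOAL else -1.0
    return next_state, reward


def tutor(state):
    """Model-based suggestion: step in the direction of the goal (sign of the error)."""
    error = GOAL - state
    return (error > 0) - (error < 0)


def select_action(q, state, epsilon, tutor_prob, rng):
    """Act greedily with probability 1 - epsilon.

    Otherwise explore: follow the tutor with probability tutor_prob,
    else pick a uniformly random action. This mixing rule is an assumption.
    """
    if rng.random() >= epsilon:
        values = q[state]
        return ACTIONS[max(range(len(ACTIONS)), key=lambda i: values[i])]
    if rng.random() < tutor_prob:
        return tutor(state)
    return rng.choice(ACTIONS)


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, tutor_prob=0.8, seed=0):
    """Standard tabular Q-learning update with tutored exploration."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(N_STATES)
        for _ in range(50):  # cap on episode length
            action = select_action(q, state, epsilon, tutor_prob, rng)
            a = ACTIONS.index(action)
            next_state, reward = step(state, action)
            target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if state == GOAL:
                break
    return q


def greedy_policy(q):
    """Greedy action for every state of the learned table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]
```

Setting `tutor_prob=0.0` recovers plain epsilon-greedy Q-learning, which gives a simple baseline for comparing how quickly the two variants learn on the toy plant.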

Control-Tutored Reinforcement Learning: an application to the Herding Problem

Nov 27, 2019

Abstract: In this extended abstract, we introduce a novel control-tutored Q-learning (CTQL) approach as part of the ongoing effort to develop model-based and safe RL for continuous state spaces. We validate our approach by applying it to a challenging multi-agent herding control problem.
