Fabio Aurelio D'Asaro

A Translation of Probabilistic Event Calculus into Markov Decision Processes

Jul 17, 2025

Abstract:Probabilistic Event Calculus (PEC) is a logical framework for reasoning about actions and their effects in uncertain environments, which enables the representation of probabilistic narratives and computation of temporal projections. The PEC formalism offers significant advantages in interpretability and expressiveness for narrative reasoning. However, it lacks mechanisms for goal-directed reasoning. This paper bridges this gap by developing a formal translation of PEC domains into Markov Decision Processes (MDPs), introducing the concept of "action-taking situations" to preserve PEC's flexible action semantics. The resulting PEC-MDP formalism enables the extensive collection of algorithms and theoretical tools developed for MDPs to be applied to PEC's interpretable narrative domains. We demonstrate how the translation supports both temporal reasoning tasks and objective-driven planning, with methods for mapping learned policies back into human-readable PEC representations, maintaining interpretability while extending PEC's capabilities.

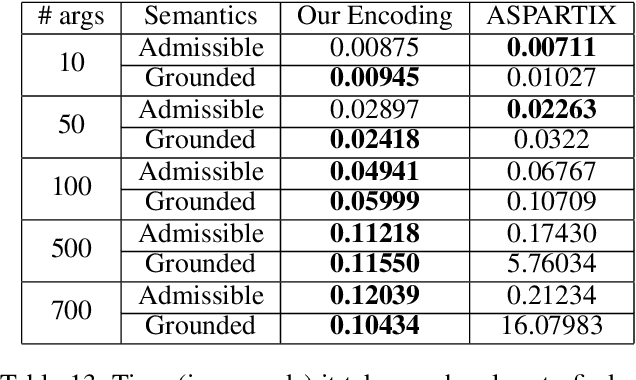

A Unifying Framework for Learning Argumentation Semantics

Oct 18, 2023

Abstract:Argumentation is a very active research field of Artificial Intelligence concerned with the representation and evaluation of arguments used in dialogues between humans and/or artificial agents. Acceptability semantics of formal argumentation systems define the criteria for the acceptance or rejection of arguments. Several software systems, known as argumentation solvers, have been developed to compute the accepted/rejected arguments using such criteria. These include systems that learn to identify the accepted arguments using non-interpretable methods. In this paper we present a novel framework, which uses an Inductive Logic Programming approach to learn the acceptability semantics for several abstract and structured argumentation frameworks in an interpretable way. Through an empirical evaluation we show that our framework outperforms existing argumentation solvers, thus opening up new future research directions in the area of formal argumentation and human-machine dialogues.

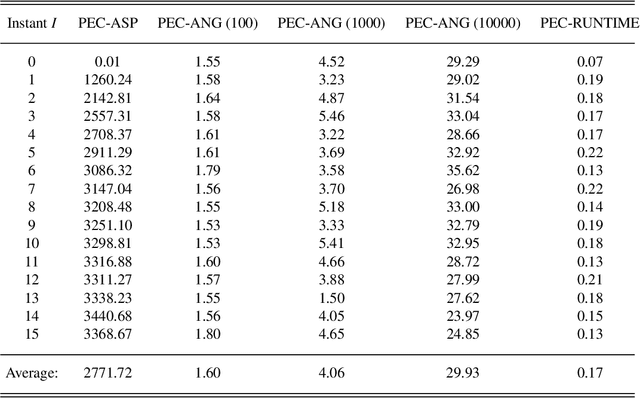

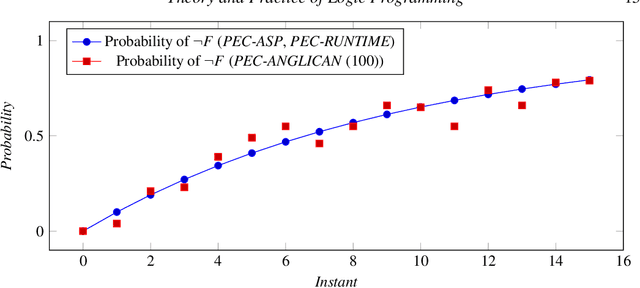

An Application of a Runtime Epistemic Probabilistic Event Calculus to Decision-making in e-Health Systems

Sep 26, 2022

Abstract:We present and discuss a runtime architecture that integrates sensorial data and classifiers with a logic-based decision-making system in the context of an e-Health system for the rehabilitation of children with neuromotor disorders. In this application, children perform a rehabilitation task in the form of games. The main aim of the system is to derive a set of parameters describing the child's current level of cognitive and behavioral performance (e.g., engagement, attention, task accuracy) from the available sensors and classifiers (e.g., eye trackers, motion sensors, emotion recognition techniques) and take decisions accordingly. These decisions are typically aimed at improving the child's performance by triggering appropriate re-engagement stimuli when their attention is low, or by changing the game or making it more difficult when the child is losing interest because the task is too easy. Alongside state-of-the-art techniques for emotion recognition and head pose estimation, we use a runtime variant of a probabilistic and epistemic logic programming dialect of the Event Calculus, known as the Epistemic Probabilistic Event Calculus. In particular, the probabilistic component of this symbolic framework allows for a natural interface with the machine learning techniques. We overview the architecture and its components, and show some of its characteristics through a discussion of a running example and experiments. Under consideration for publication in Theory and Practice of Logic Programming (TPLP).

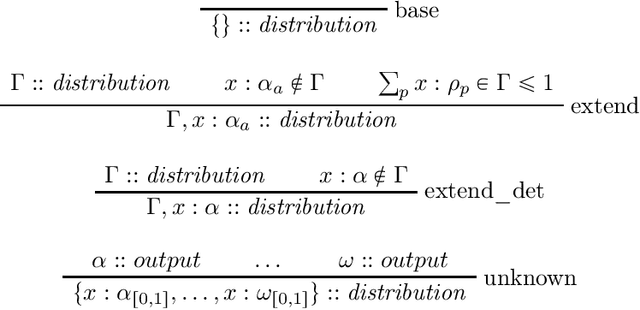

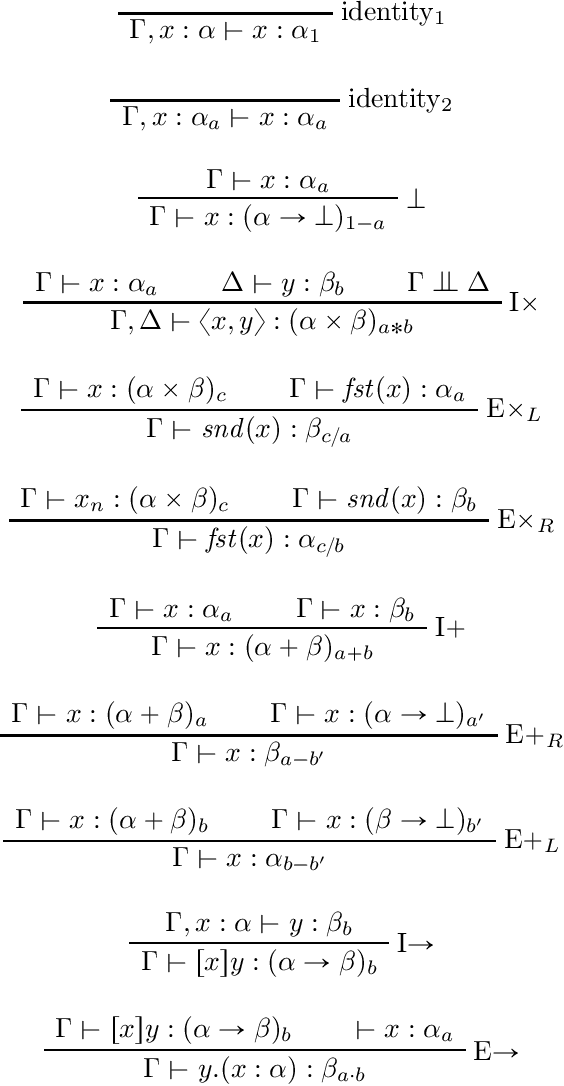

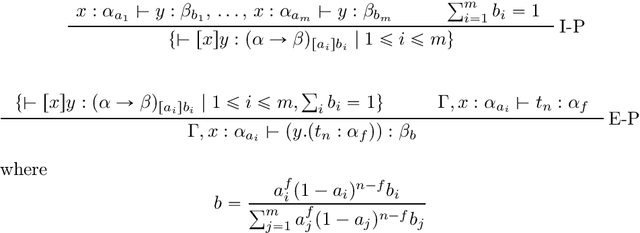

Checking Trustworthiness of Probabilistic Computations in a Typed Natural Deduction System

Jun 26, 2022

Abstract:In this paper we present the probabilistic typed natural deduction calculus TPTND, designed to reason about and derive trustworthiness properties of probabilistic computational processes, like those underlying current AI applications. Derivability in TPTND is interpreted as the process of extracting $n$ samples of outputs with a certain frequency from a given categorical distribution. We formalize trust within our framework as a form of hypothesis testing on the distance between such frequency and the intended probability. The main advantage of the calculus is to render such notion of trustworthiness checkable. We present the proof-theoretic semantics of TPTND and illustrate structural and metatheoretical properties, with particular focus on safety. We motivate its use in the verification of algorithms for automatic classification.

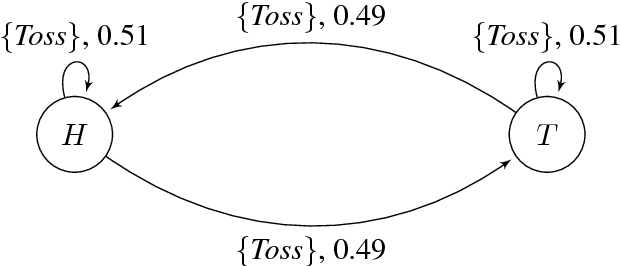

Foundations for a Probabilistic Event Calculus

Jun 30, 2017

Abstract:We present PEC, an Event Calculus (EC) style action language for reasoning about probabilistic causal and narrative information. It has an action language style syntax similar to that of the EC variant Modular-E. Its semantics is given in terms of possible worlds which constitute possible evolutions of the domain, and builds on that of EFEC, an epistemic extension of EC. We also describe an ASP implementation of PEC and show the sense in which this is sound and complete.
