Eren Kurshan

The Agentic Regulator: Risks for AI in Finance and a Proposed Agent-based Framework for Governance

Dec 12, 2025

Abstract: Generative and agentic artificial intelligence is entering financial markets faster than existing governance can adapt. Current model-risk frameworks assume static, well-specified algorithms and one-time validations; large language models and multi-agent trading systems violate those assumptions by learning continuously, exchanging latent signals, and exhibiting emergent behavior. Drawing on complex adaptive systems theory, we model these technologies as decentralized ensembles whose risks propagate along multiple time-scales. We then propose a modular governance architecture. The framework decomposes oversight into four layers of "regulatory blocks": (i) self-regulation modules embedded beside each model, (ii) firm-level governance blocks that aggregate local telemetry and enforce policy, (iii) regulator-hosted agents that monitor sector-wide indicators for collusive or destabilizing patterns, and (iv) independent audit blocks that supply third-party assurance. Eight design strategies enable the blocks to evolve as fast as the models they police. A case study on emergent spoofing in multi-agent trading shows how the layered controls quarantine harmful behavior in real time while preserving innovation. The architecture remains compatible with today's model-risk rules yet closes critical observability and control gaps, providing a practical path toward resilient, adaptive AI governance in financial systems.

3D Guard-Layer: An Integrated Agentic AI Safety System for Edge Artificial Intelligence

Nov 11, 2025

Abstract: AI systems have found a wide range of real-world applications in recent years. The adoption of edge artificial intelligence, which embeds AI directly into edge devices, is growing rapidly. Despite the implementation of guardrails and safety mechanisms, security vulnerabilities and challenges have become increasingly prevalent in this domain, posing a significant barrier to the practical deployment and safety of AI systems. This paper proposes an agentic AI safety architecture that leverages 3D integration to add a dedicated safety layer. It introduces an adaptive AI safety infrastructure capable of dynamically learning and mitigating attacks against the AI system. The system leverages the inherent advantages of co-location with the edge computing hardware to continuously monitor, detect, and proactively mitigate threats to the AI system. The integration of local processing and learning capabilities enhances resilience against emerging network-based attacks while simultaneously improving system reliability, modularity, and performance, all with minimal cost and 3D integration overhead.

Temporal Knowledge Distillation for Time-Sensitive Financial Services Applications

Dec 28, 2023

Abstract: Detecting anomalies has become an increasingly critical function in the financial services industry. Anomaly detection is frequently used in key compliance and risk functions such as financial crime detection, fraud, and cybersecurity. The dynamic nature of the underlying data patterns, especially in adversarial environments like fraud detection, poses serious challenges to machine learning models. Keeping up with the rapid changes by retraining the models with the latest data patterns introduces pressures in balancing the historical and current patterns while managing the training data size. Furthermore, the model retraining times raise problems in time-sensitive and high-volume deployment systems, where the retraining period directly impacts the model's ability to respond to ongoing attacks in a timely manner. In this study, we propose a temporal knowledge distillation-based label augmentation approach (TKD), which utilizes the learning from older models to rapidly boost the latest model and effectively reduces the model retraining times to achieve improved agility. Experimental results show that the proposed approach provides advantages in retraining times while improving the model performance.

Systematic AI Approach for AGI: Addressing Alignment, Energy, and AGI Grand Challenges

Oct 23, 2023

Abstract: AI faces a trifecta of grand challenges: the Energy Wall, the Alignment Problem, and the Leap from Narrow AI to AGI. Contemporary AI solutions consume unsustainable amounts of energy during model training and daily operations. Making things worse, the amount of computation required to train each new AI model has been doubling every 2 months since 2020, directly translating to increases in energy consumption. The leap from AI to AGI requires multiple functional subsystems operating in a balanced manner, which requires a system architecture. However, the current approach to artificial intelligence lacks system design, even though system characteristics play a key role in the human brain, from the way it processes information to how it makes decisions. Similarly, current alignment and AI ethics approaches largely ignore system design, yet studies show that the brain's system architecture plays a critical role in healthy moral decisions. In this paper, we argue that system design is critically important, and is the missing piece, in overcoming all three grand challenges. We present a Systematic AI Approach for AGI that utilizes system design principles for AGI, while providing ways to overcome the energy wall and the alignment challenges.

On the Current and Emerging Challenges of Developing Fair and Ethical AI Solutions in Financial Services

Nov 02, 2021

Abstract: Artificial intelligence (AI) continues to find more numerous and more critical applications in the financial services industry, giving rise to fair and ethical AI as an industry-wide objective. While many ethical principles and guidelines have been published in recent years, they fall short of addressing the serious challenges that model developers face when building ethical AI solutions. We survey the practical and overarching issues surrounding model development, from design and implementation complexities, to the shortage of tools, and the lack of organizational constructs. We show how practical considerations reveal the gaps between high-level principles and concrete, deployed AI applications, with the aim of starting industry-wide conversations toward solution approaches.

* 10 pages; expanded from conference version

Beyond Fairness Metrics: Roadblocks and Challenges for Ethical AI in Practice

Aug 11, 2021

Abstract: We review practical challenges in building and deploying ethical AI at the scale of contemporary industrial and societal uses. Apart from the purely technical concerns that are the usual focus of academic research, the operational challenges of inconsistent regulatory pressures, conflicting business goals, data quality issues, development processes, systems integration practices, and the scale of deployment all conspire to create new ethical risks. Such ethical concerns arising from these practical considerations are not adequately addressed by existing research results. We argue that a holistic consideration of ethics in the development and deployment of AI systems is necessary for building ethical AI in practice, and exhort researchers to consider the full operational contexts of AI systems when assessing ethical risks.

A Case for 3D Integrated System Design for Neuromorphic Computing & AI Applications

Mar 02, 2021

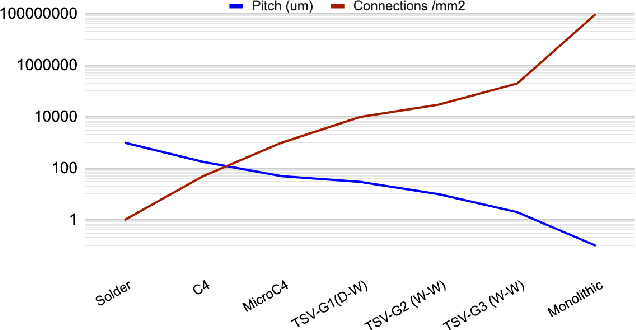

Abstract: Over the last decade, artificial intelligence has found many application areas in society. As AI solutions have become more sophisticated and the use cases have grown, they have highlighted the need to address performance and energy efficiency challenges faced during the implementation process. To address these challenges, there has been growing interest in neuromorphic chips. Neuromorphic computing relies on non-von Neumann architectures as well as novel devices, circuits, and manufacturing technologies to mimic the human brain. Among such technologies, 3D integration is an important enabler for AI hardware and the continuation of the scaling laws. In this paper, we give an overview of the unique opportunities 3D integration provides in neuromorphic chip design, discuss the emerging opportunities in next-generation neuromorphic architectures, and review the obstacles. Neuromorphic architectures, which rely on the brain for inspiration and emulation purposes, face grand challenges due to the limited understanding of the functionality and the architecture of the human brain. Yet, high levels of investment are dedicated to developing neuromorphic chips. We argue that 3D integration not only provides strategic advantages for the cost-effective and flexible design of neuromorphic chips, but may also provide design flexibility for incorporating advanced capabilities that further benefit the designs in the future.

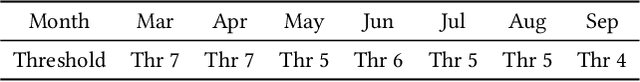

Deep Q-Network-based Adaptive Alert Threshold Selection Policy for Payment Fraud Systems in Retail Banking

Oct 21, 2020

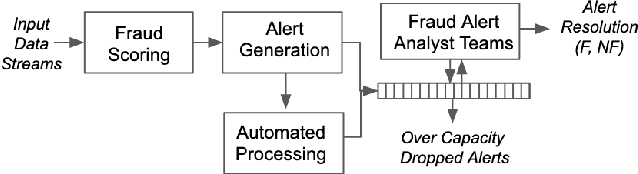

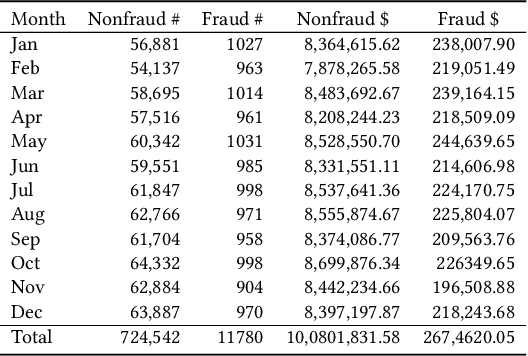

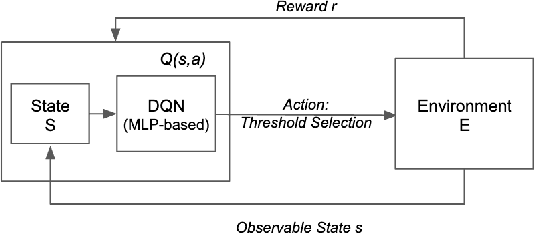

Abstract: Machine learning models have been widely used in fraud detection systems. Most research and development efforts have concentrated on improving the performance of the fraud scoring models. Yet the downstream fraud alert systems still have limited to no model adoption and rely on manual steps. Alert systems are used pervasively across all payment channels in retail banking and play an important role in the overall fraud detection process. Current fraud detection systems end up with large numbers of dropped alerts because they cannot account for the alert processing capacity. Ideally, alert threshold selection enables the system to maximize fraud detection while balancing the upstream fraud scores and the available bandwidth of the alert processing teams. In practice, however, the fixed thresholds that are used for their simplicity do not have this ability. In this paper, we propose an enhanced threshold selection policy for fraud alert systems. The proposed approach formulates threshold selection as a sequential decision-making problem and uses Deep Q-Network-based reinforcement learning. Experimental results show that this adaptive approach outperforms the current static solutions by reducing fraud losses as well as improving the operational efficiency of the alert system.

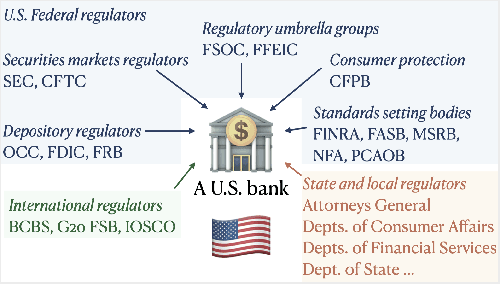

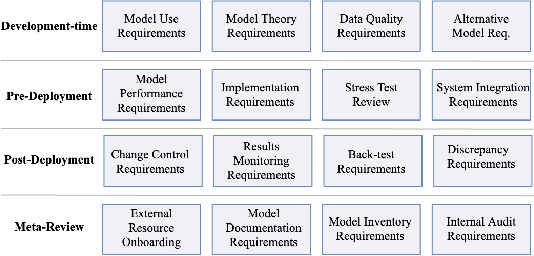

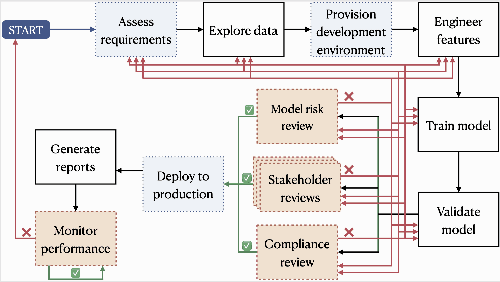

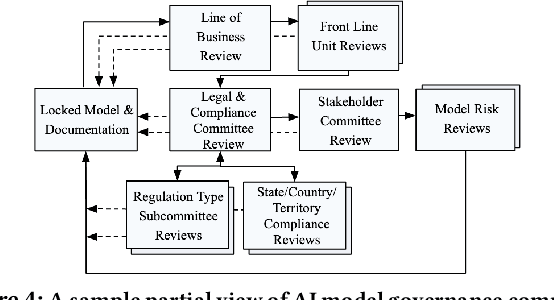

Towards Self-Regulating AI: Challenges and Opportunities of AI Model Governance in Financial Services

Oct 09, 2020

Abstract: AI systems have found a wide range of application areas in financial services. Their involvement in broader and increasingly critical decisions has escalated the need for compliance and effective model governance. Current governance practices have evolved from more traditional financial applications and modeling frameworks. They often struggle with the fundamental differences in AI characteristics, such as uncertainty in the assumptions and the lack of explicit programming. AI model governance frequently involves complex review flows and relies heavily on manual steps. As a result, it faces serious challenges in effectiveness, cost, complexity, and speed. Furthermore, the unprecedented rate of growth in AI model complexity raises questions about the sustainability of current practices. This paper focuses on the challenges of AI model governance in the financial services industry. As a part of the outlook, we present a system-level framework towards increased self-regulation for robustness and compliance. This approach aims to enable potential solution opportunities through increased automation and the integration of monitoring, management, and mitigation capabilities. The proposed framework also provides improved capabilities for model governance and risk management to manage model risk during deployment.

* 8 pages, 7 figures
