Emmanuel Jehanno

Self-Attention in Colors: Another Take on Encoding Graph Structure in Transformers

Apr 21, 2023Abstract:We introduce a novel self-attention mechanism, which we call CSA (Chromatic Self-Attention), which extends the notion of attention scores to attention _filters_, independently modulating the feature channels. We showcase CSA in a fully-attentional graph Transformer CGT (Chromatic Graph Transformer) which integrates both graph structural information and edge features, completely bypassing the need for local message-passing components. Our method flexibly encodes graph structure through node-node interactions, by enriching the original edge features with a relative positional encoding scheme. We propose a new scheme based on random walks that encodes both structural and positional information, and show how to incorporate higher-order topological information, such as rings in molecular graphs. Our approach achieves state-of-the-art results on the ZINC benchmark dataset, while providing a flexible framework for encoding graph structure and incorporating higher-order topology.

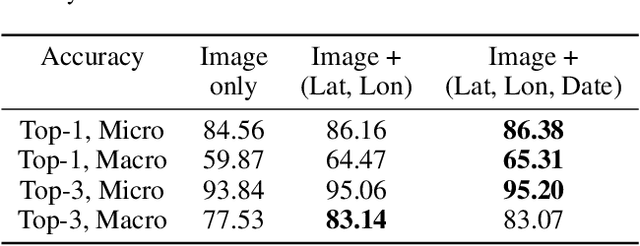

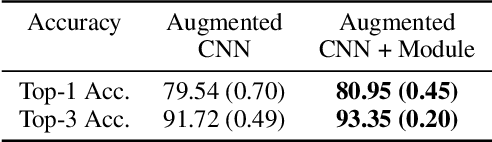

Geo-Spatiotemporal Features and Shape-Based Prior Knowledge for Fine-grained Imbalanced Data Classification

Mar 21, 2021

Abstract:Fine-grained classification aims at distinguishing between items with similar global perception and patterns, but that differ by minute details. Our primary challenges come from both small inter-class variations and large intra-class variations. In this article, we propose to combine several innovations to improve fine-grained classification within the use-case of wildlife, which is of practical interest for experts. We utilize geo-spatiotemporal data to enrich the picture information and further improve the performance. We also investigate state-of-the-art methods for handling the imbalanced data issue.

* Copyright by the authors. All rights reserved to authors only. Correspondence to: ckantor (at) stanford [dot] edu

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge