Elmira Amirloo Abolfathi

Multi-lane Cruising Using Hierarchical Planning and Reinforcement Learning

Oct 01, 2021

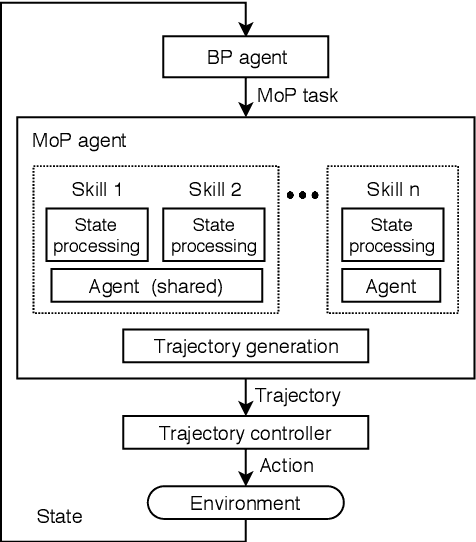

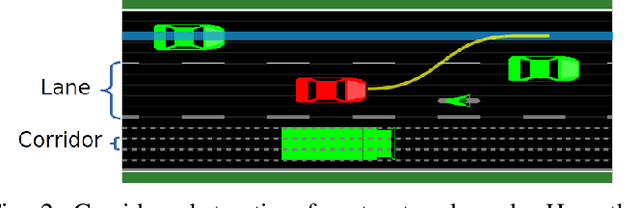

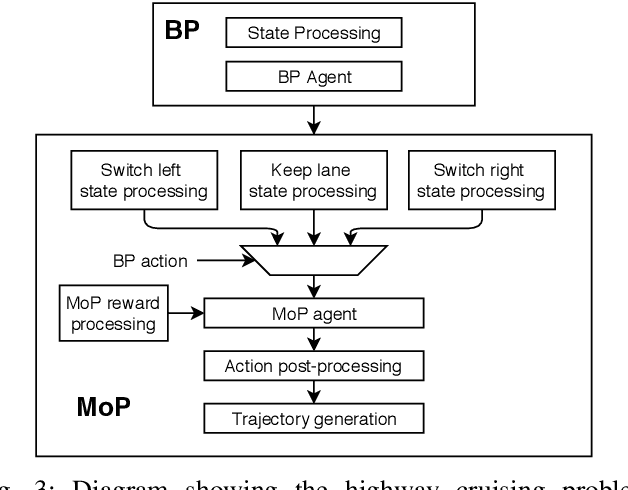

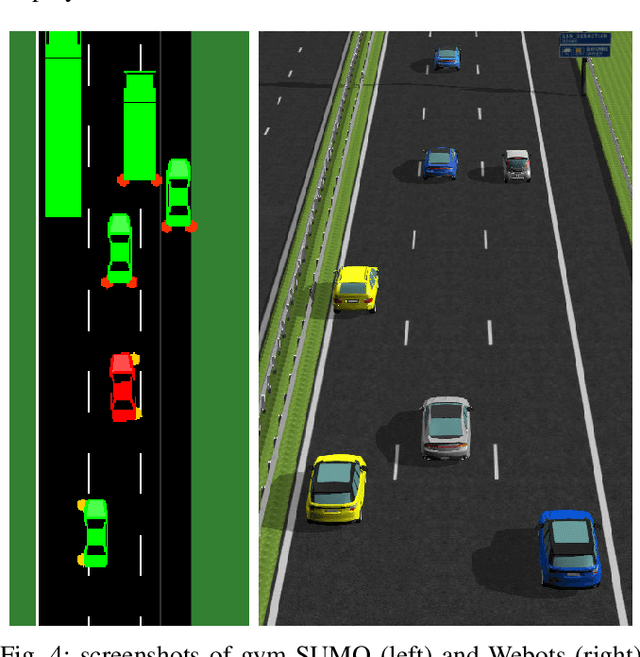

Abstract:Competent multi-lane cruising requires using lane changes and within-lane maneuvers to achieve good speed and maintain safety. This paper proposes a design for autonomous multi-lane cruising by combining a hierarchical reinforcement learning framework with a novel state-action space abstraction. While the proposed solution follows the classical hierarchy of behavior decision, motion planning and control, it introduces a key intermediate abstraction within the motion planner to discretize the state-action space according to high level behavioral decisions. We argue that this design allows principled modular extension of motion planning, in contrast to using either monolithic behavior cloning or a large set of hand-written rules. Moreover, we demonstrate that our state-action space abstraction allows transferring of the trained models without retraining from a simulated environment with virtually no dynamics to one with significantly more realistic dynamics. Together, these results suggest that our proposed hierarchical architecture is a promising way to allow reinforcement learning to be applied to complex multi-lane cruising in the real world.

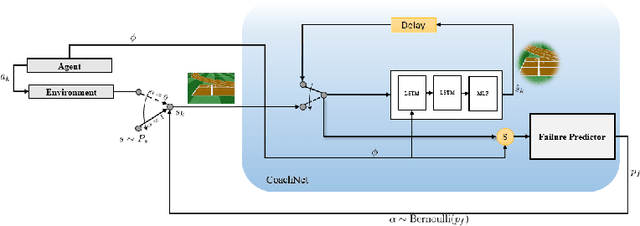

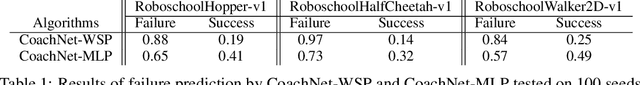

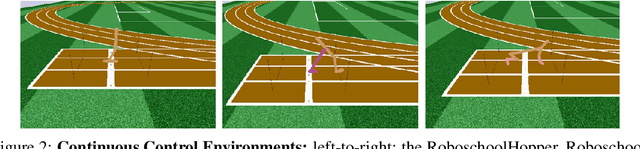

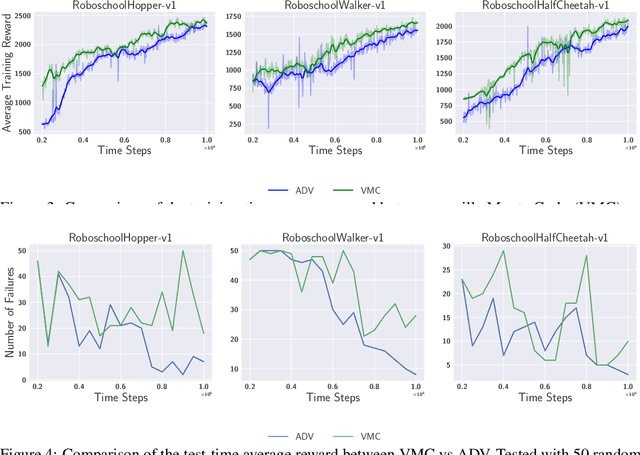

CoachNet: An Adversarial Sampling Approach for Reinforcement Learning

Jan 07, 2021

Abstract:Despite the recent successes of reinforcement learning in games and robotics, it is yet to become broadly practical. Sample efficiency and unreliable performance in rare but challenging scenarios are two of the major obstacles. Drawing inspiration from the effectiveness of deliberate practice for achieving expert-level human performance, we propose a new adversarial sampling approach guided by a failure predictor named "CoachNet". CoachNet is trained online along with the agent to predict the probability of failure. This probability is then used in a stochastic sampling process to guide the agent to more challenging episodes. This way, instead of wasting time on scenarios that the agent has already mastered, training is focused on the agent's "weak spots". We present the design of CoachNet, explain its underlying principles, and empirically demonstrate its effectiveness in improving sample efficiency and test-time robustness in common continuous control tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge