Multi-lane Cruising Using Hierarchical Planning and Reinforcement Learning

Paper and Code

Oct 01, 2021

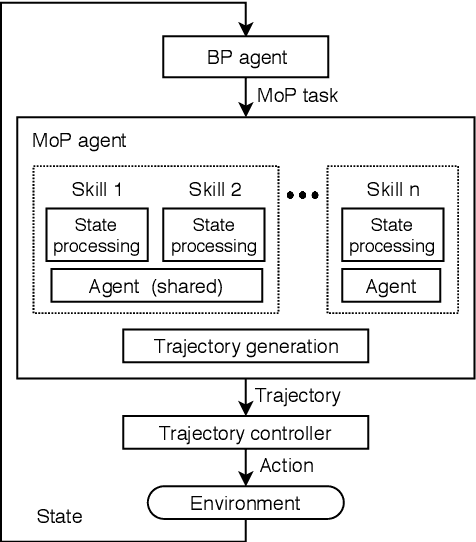

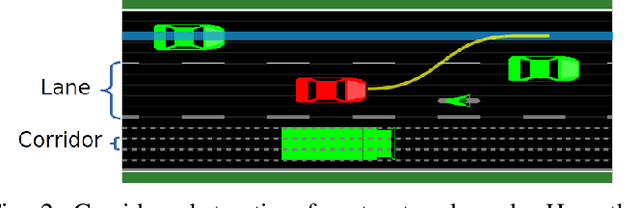

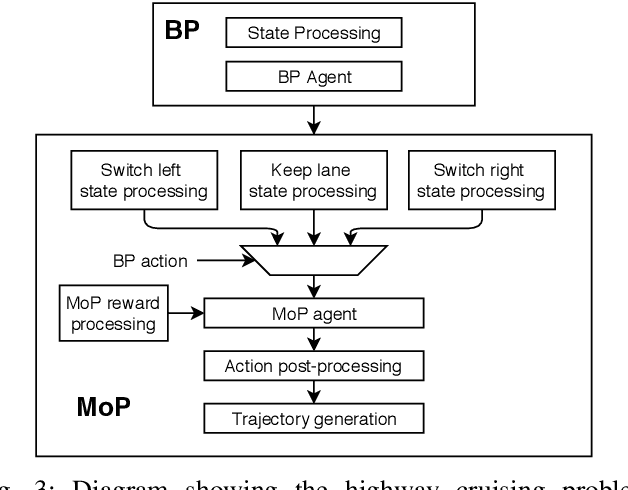

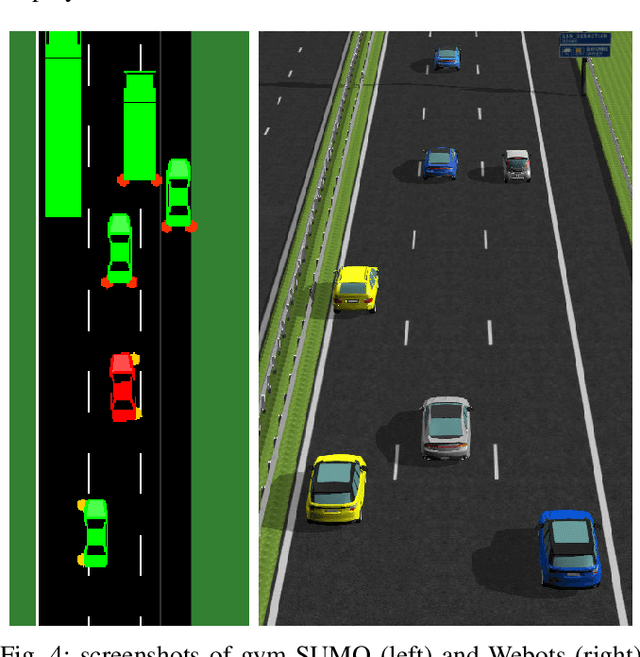

Competent multi-lane cruising requires using lane changes and within-lane maneuvers to achieve good speed and maintain safety. This paper proposes a design for autonomous multi-lane cruising by combining a hierarchical reinforcement learning framework with a novel state-action space abstraction. While the proposed solution follows the classical hierarchy of behavior decision, motion planning and control, it introduces a key intermediate abstraction within the motion planner to discretize the state-action space according to high level behavioral decisions. We argue that this design allows principled modular extension of motion planning, in contrast to using either monolithic behavior cloning or a large set of hand-written rules. Moreover, we demonstrate that our state-action space abstraction allows transferring of the trained models without retraining from a simulated environment with virtually no dynamics to one with significantly more realistic dynamics. Together, these results suggest that our proposed hierarchical architecture is a promising way to allow reinforcement learning to be applied to complex multi-lane cruising in the real world.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge