Ella Gale

Cluster Flow: how a hierarchical clustering layer make allows deep-NNs more resilient to hacking, more human-like and easily implements relational reasoning

Apr 27, 2023

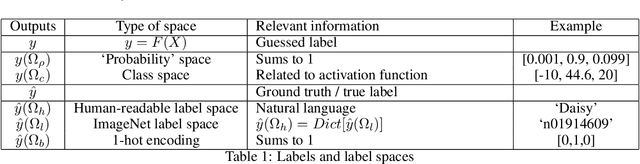

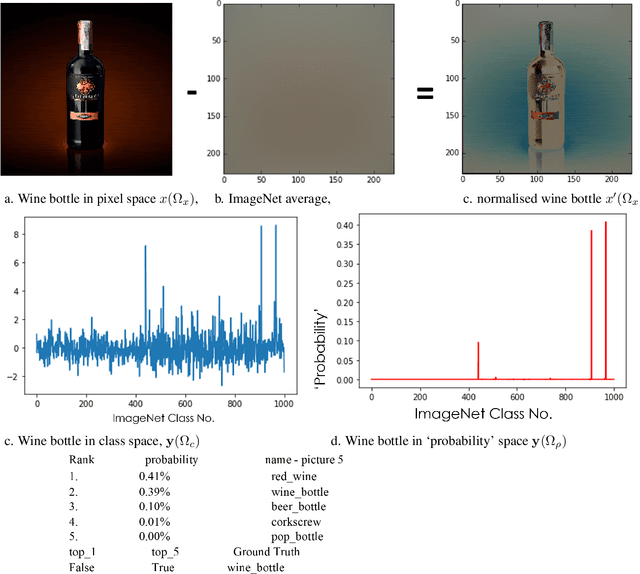

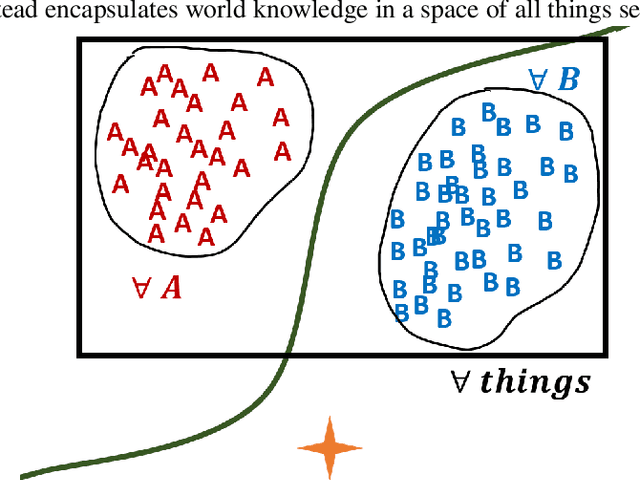

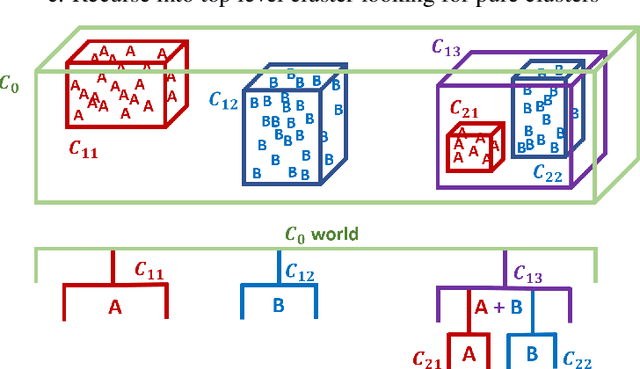

Abstract: Despite the huge recent breakthroughs in neural networks (NNs) for artificial intelligence (specifically deep convolutional networks), such NNs do not achieve human-level performance: they can be hacked by images that would fool no human, and they lack 'common sense'. It has been argued that a basis of human-level intelligence is mankind's ability to perform relational reasoning: comparing different objects, measuring similarity, grasping relations between objects and, conversely, figuring out the odd one out in a set of objects. Mankind can even do this with objects they have never seen before. Here we show how ClusterFlow, a semi-supervised hierarchical clustering framework, can operate on trained NNs, utilising the rich multi-dimensional class and feature data found at the pre-SoftMax layer to build a hyperspatial map of classes/features, and how this adds more human-like functionality to modern deep convolutional neural networks. We demonstrate this with three tasks: (1) the statistical-learning-based 'mistakes' made by infants when attending to images of cats and dogs; (2) improving both the resilience to hacking images and the accurate measurement of certainty in deep NNs; (3) relational reasoning over sets of images, including those not known to the NN nor seen before. We also demonstrate that ClusterFlow can work on non-NN data and deal with missing data by testing it on a Chemistry dataset. This work suggests that modern deep NNs can be made more human-like without re-training of the NNs. Because some methods used in deep and convolutional NNs are known to be neither biologically plausible nor perhaps even the best approach, the ClusterFlow framework, which can sit on top of any NN, will remain a useful tool to add as NNs are improved in this regard.

Icospherical Chemical Objects (ICOs) allow for chemical data augmentation and maintain rotational, translation and permutation invariance

Apr 15, 2023

Abstract: Dataset augmentation is a common way to deal with small datasets; Chemistry datasets are often small. Spherical convolutional neural networks (SphNNs) and Icosahedral neural networks (IcoNNs) are types of geometric machine learning algorithm that maintain rotational symmetry. Molecular structure has rotational invariance and is inherently 3-D, and thus we need 3-D encoding methods to input molecular structure into machine learning. In this paper I present Icospherical Chemical Objects (ICOs) that enable the encoding of 3-D data in a rotationally invariant way which works with spherical or icosahedral neural networks and allows for dataset augmentation. I demonstrate the ICO featurisation method on the following tasks: predicting general molecular properties, predicting the solubility of drug-like molecules, and the protein binding problem. I find that ICO and SphNNs perform well on all problems.

Shape is all!: Persistent homology features are an information rich input for efficient molecular machine learning

Apr 15, 2023

Abstract: 3-D shape is important to chemistry, but how important? Machine learning works best when the inputs are simple and match the problem well. Chemistry datasets tend to be very small compared to those generally used in machine learning, so we need to get the most from each datapoint. Persistent homology measures the topological shape properties of point clouds at different scales and is used in topological data analysis. Here we investigate what persistent homology captures about molecular structure and create persistent homology features (PHFs) that encode a molecule's shape whilst losing most of the symbolic detail, such as atom labels, valence, charge and bonds. We demonstrate the usefulness of PHFs on a series of chemical datasets: QM7, lipophilicity, Delaney and Tox21. PHFs work as well as the best benchmarks. PHFs are very information dense and much smaller than other encoding methods yet found, meaning ML algorithms that use them are much more energy efficient. The success of PHFs despite losing a large amount of chemical detail highlights how much of chemistry can be simplified to topological shape.
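
As a rough illustration of what "topological shape properties of point clouds at different scales" means, the sketch below computes only the simplest case: the 0-dimensional persistence (connected components) of a point cloud, using single-linkage merging with a union-find structure. It is not the PHF pipeline from the paper; the function name `h0_persistence` and the toy coordinates are assumptions made for this example, and the scale is taken to be the pairwise Euclidean distance at which two components merge.

```python
import math
from itertools import combinations


def h0_persistence(points):
    """Return the scales at which connected components merge.

    Every point starts as its own component (born at scale 0). As the
    distance threshold grows, components merge; each merge "kills" one
    component. The single component that survives forever is omitted.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise distances, shortest first.
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )

    deaths = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            # This edge joins two separate components: record the merge scale.
            parent[root_i] = root_j
            deaths.append(length)
    return deaths


# Hypothetical 3-D coordinates standing in for atom positions.
toy_cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
print(h0_persistence(toy_cloud))
```

A cloud of n points always yields n - 1 merge scales here, so the output is a short, fixed-form summary of how the points are spread out. Higher-dimensional features (loops, voids) need a full persistent homology library and are not covered by this sketch.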

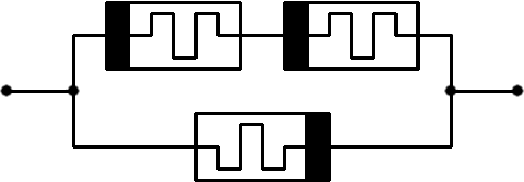

Single Memristor Logic Gates: From NOT to a Full Adder

Oct 19, 2015

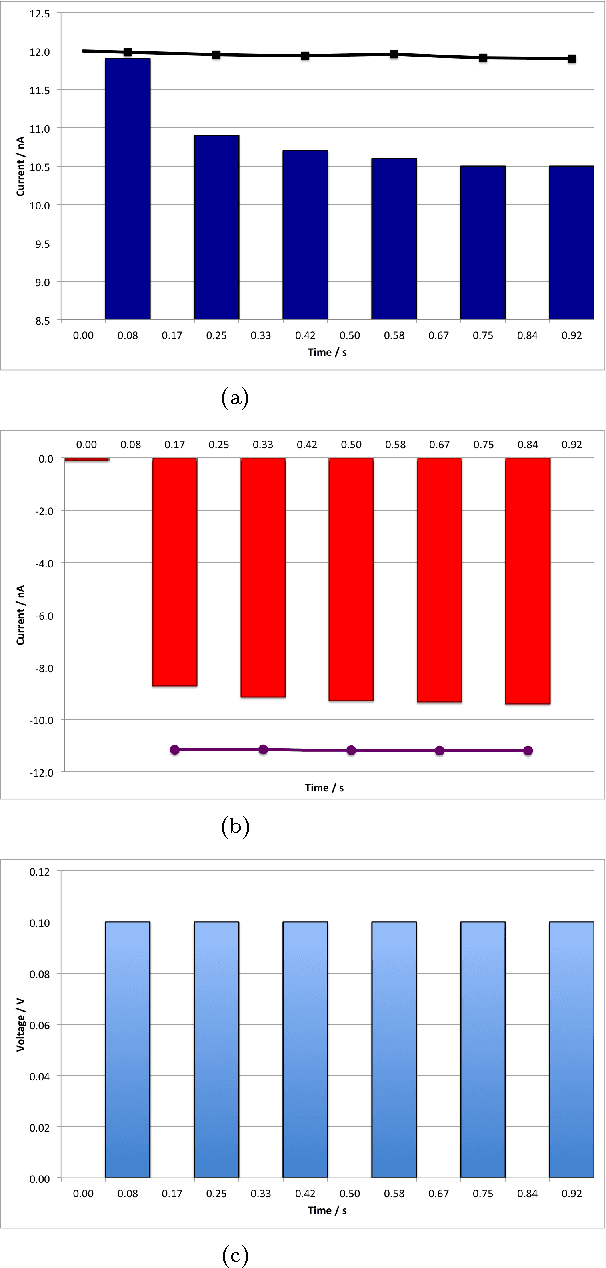

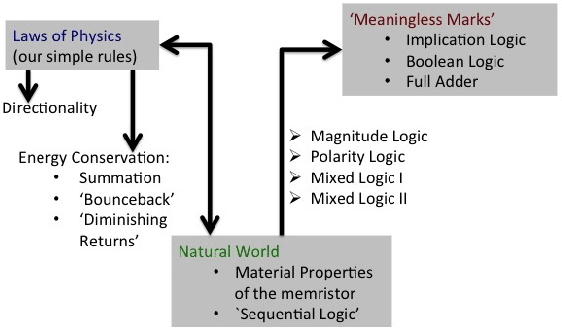

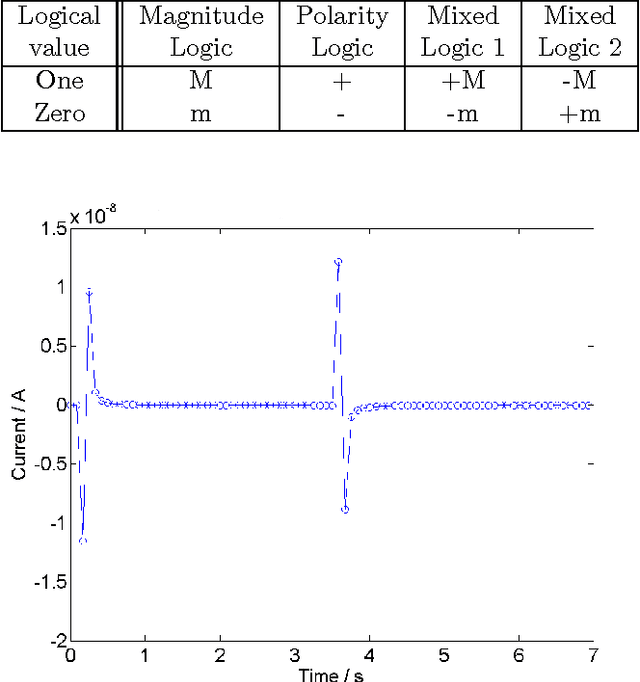

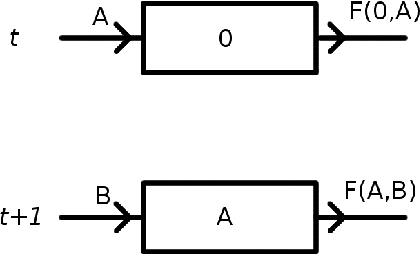

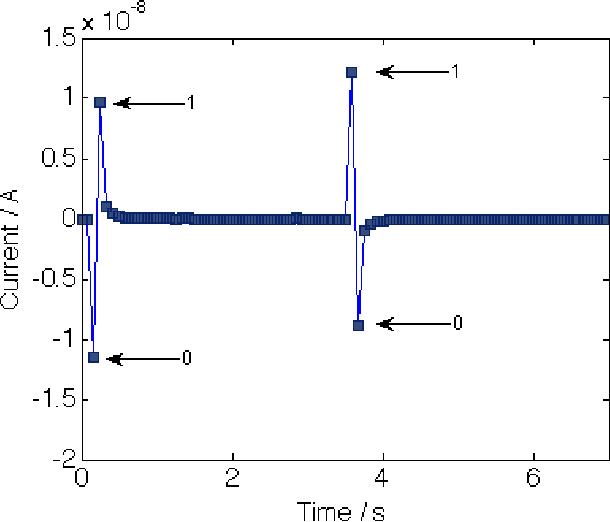

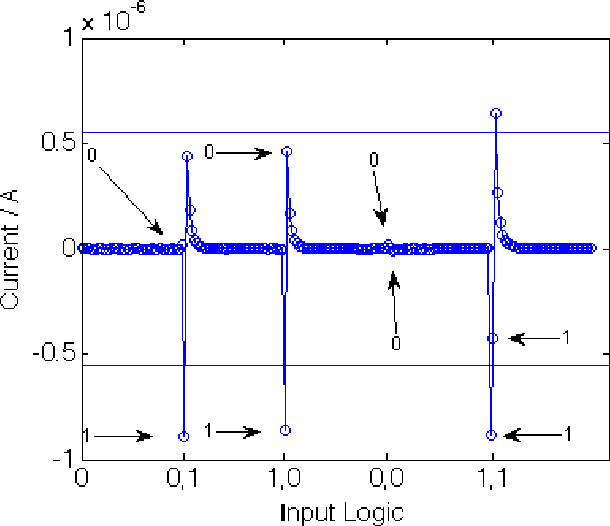

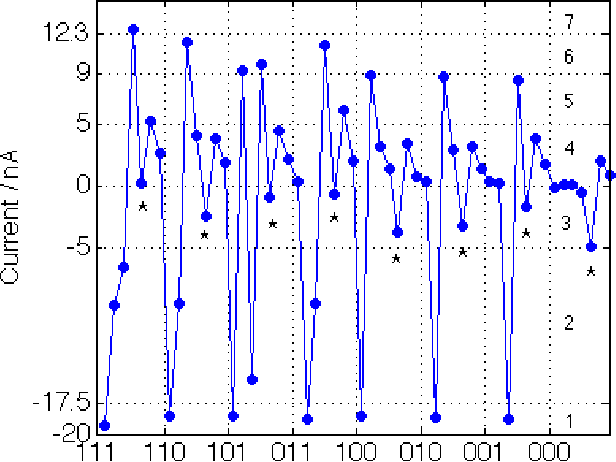

Abstract: Memristors have been suggested as a novel route to neuromorphic computing based on the similarity between them and neurons (specifically synapses and ion pumps). The d.c. action of the memristor is a current spike which imparts a short-term memory to the device. Here it is demonstrated that this short-term memory works exactly like habituation (e.g. in *Aplysia*). We elucidate the physical rules, based on energy conservation, governing the interaction of these current spikes: summation, 'bounce-back', directionality and 'diminishing returns'. Using these rules, we introduce 4 different logical systems to implement sequential logic in the memristor and demonstrate how sequential logic works by instantiating a NOT gate, an AND gate, an XOR gate and a Full Adder with a single memristor. The Full Adder makes use of the memristor's short-term memory to add together three binary values and outputs the sum, the carry digit and even the order they were input in. A memristor full adder also outputs the arithmetical sum of bits, allowing for a logically (but not physically) reversible system. Essentially, we can replace an input/output port with an extra time-step, allowing a single memristor to do a hitherto unexpectedly large amount of computation. This makes up for the memristor's slow operation speed and may relate to how neurons do a similarly large computation with such slow operation speeds. We propose that using spiking logic, either in gates or as neuron-analogues, with plastic rewritable connections between them, would allow the building of a neuromorphic computer.
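
For readers unfamiliar with the target behaviour, the following minimal sketch states the Boolean specification of a full adder: three input bits in, a sum bit and a carry digit out, plus the arithmetical sum of the bits mentioned in the abstract. It models only the logic, not the memristor's current spikes, the four logical systems, or the input-order output; the function name `full_adder` is an assumption made for this example.

```python
from itertools import product


def full_adder(a, b, carry_in):
    """Boolean full adder over three bits.

    Returns (sum_bit, carry_out, total), where total is the
    arithmetical sum of the three input bits (0 to 3).
    """
    total = a + b + carry_in
    sum_bit = a ^ b ^ carry_in                    # parity of the inputs
    carry_out = (a & b) | (carry_in & (a ^ b))    # 1 when two or more inputs are 1
    return sum_bit, carry_out, total


# Check the full truth table: the two output bits encode the arithmetical sum.
for a, b, c in product((0, 1), repeat=3):
    s, carry, total = full_adder(a, b, c)
    assert total == 2 * carry + s
```

The check in the loop is the standard identity for a full adder: the carry digit and sum bit together are the two-bit binary representation of the arithmetical sum.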

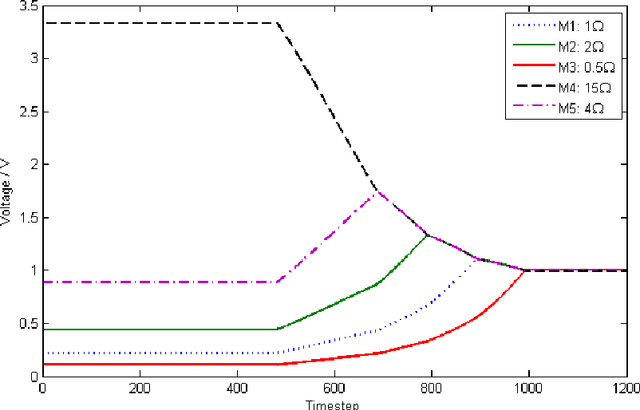

Evolving Spiking Networks with Variable Resistive Memories

May 17, 2015

Abstract: Neuromorphic computing is a brain-like information processing paradigm that requires adaptive learning mechanisms. A spiking neuro-evolutionary system is used for this purpose; plastic resistive memories are implemented as synapses in spiking neural networks. The evolutionary design process exploits parameter self-adaptation and allows the topology and synaptic weights to be evolved for each network in an autonomous manner. Variable resistive memories are the focus of this research; each synapse has its own conductance profile which modifies the plastic behaviour of the device and may be altered during evolution. These variable resistive networks are evaluated on a noisy robotic dynamic-reward scenario against two static resistive memories and a system containing standard connections only. Results indicate that the extra behavioural degrees of freedom available to the networks incorporating variable resistive memories enable them to outperform the comparative synapse types.

* 27 pages

Is Spiking Logic the Route to Memristor-Based Computers?

Feb 17, 2014

Abstract: Memristors have been suggested as a novel route to neuromorphic computing based on the similarity between neurons (synapses and ion pumps) and memristors. The d.c. action of the memristor is a current spike, which we think will be fruitful for building memristor computers. In this paper, we introduce 4 different logical assignations to implement sequential logic in the memristor and introduce the physical rules (summation, 'bounce-back', directionality and 'diminishing returns') elucidated from our investigations. We then demonstrate how memristor sequential logic works by instantiating a NOT gate, an AND gate and a Full Adder with a single memristor. The Full Adder makes use of the memristor's memory to add three binary values together and outputs the value, the carry digit and even the order they were input in.

* Conference paper. Work also reported in US patent: 'Logic device and method of performing a logical operation', patent application no. 14/089,191 (November 25, 2013)

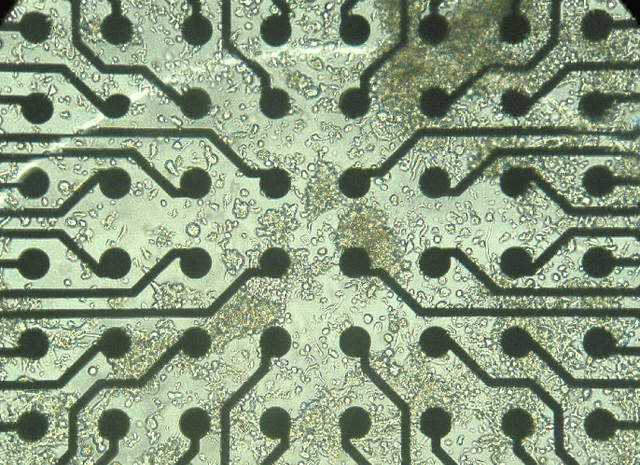

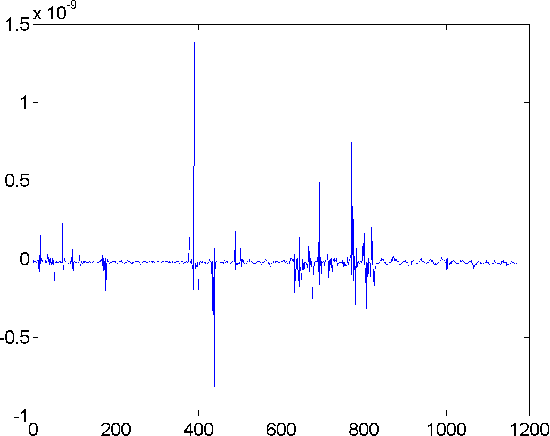

Connecting Spiking Neurons to a Spiking Memristor Network Changes the Memristor Dynamics

Feb 17, 2014

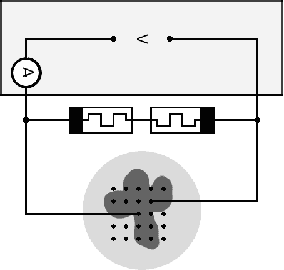

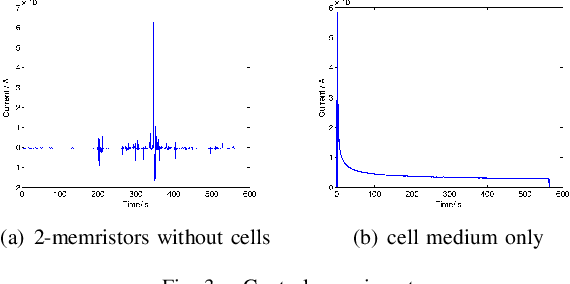

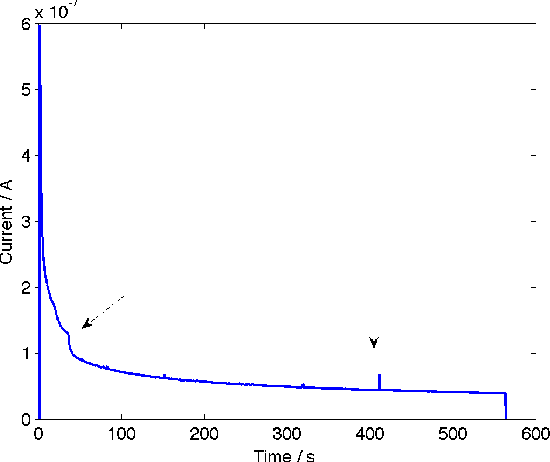

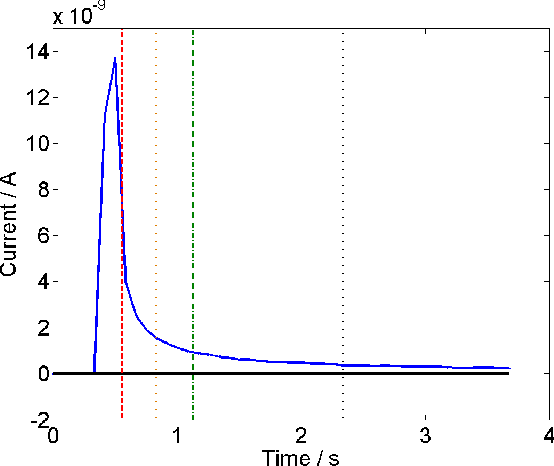

Abstract: Memristors have been suggested as neuromorphic computing elements. Spike-time dependent plasticity and the Hodgkin-Huxley model of the neuron have both been modelled effectively by memristor theory. The d.c. response of the memristor is a current spike. Based on these three facts, we suggest that memristors are well-placed to interface directly with neurons. In this paper we show that connecting a spiking memristor network to spiking neuronal cells causes a change in the memristor network dynamics by: removing the memristor spikes, which we show is due to the effects of connection to an aqueous medium; causing a change in current decay rate consistent with a change in memristor state; presenting more-linear $I-t$ dynamics; and increasing the memristor spiking rate, as a consequence of interaction with the spiking neurons. This demonstrates that neurons are capable of communicating directly with memristors, without the need for computer translation.

* Conference paper, 4 pages

Does the D.C. Response of Memristors Allow Robotic Short-Term Memory and a Possible Route to Artificial Time Perception?

Feb 17, 2014

Abstract: Time perception is essential for task switching, and in the mammalian brain appears alongside other processes. Memristors are electronic components used as synapses and as models for neurons. The d.c. response of memristors can be considered as a type of short-term memory. Interactions of the memristor d.c. response within networks of memristors lead to the emergence of oscillatory dynamics and intermittent spike trains, which are similar to neural dynamics. Based on these data, the structure of a memristor network control for a robot as it undergoes task switching is discussed, and it is suggested that these emergent network dynamics could improve the performance of role switching and learning in an artificial intelligence and perhaps create artificial time perception.

* 3 page position paper

Design of a Hybrid Robot Control System using Memristor-Model and Ant-Inspired Based Information Transfer Protocols

Feb 17, 2014

Abstract: It is not always possible for a robot to process all the information from its sensors in a timely manner, and thus quick yet valid approximations of the robot's situation are needed. Here we design a hybrid control system for a robot within this limit, using algorithms inspired by ant worker placement behaviour and based on memristor-based non-linearity.

* Conference

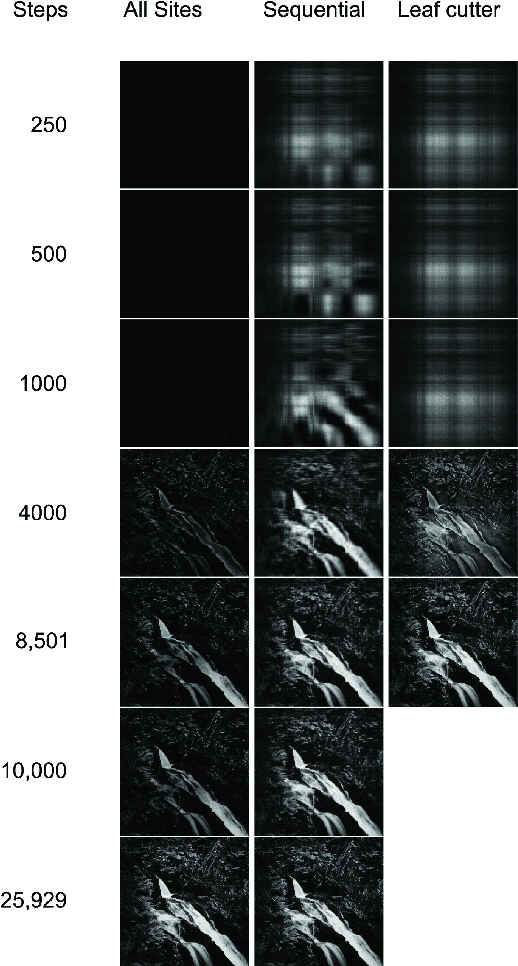

Comparison of Ant-Inspired Gatherer Allocation Approaches using Memristor-Based Environmental Models

Feb 04, 2013

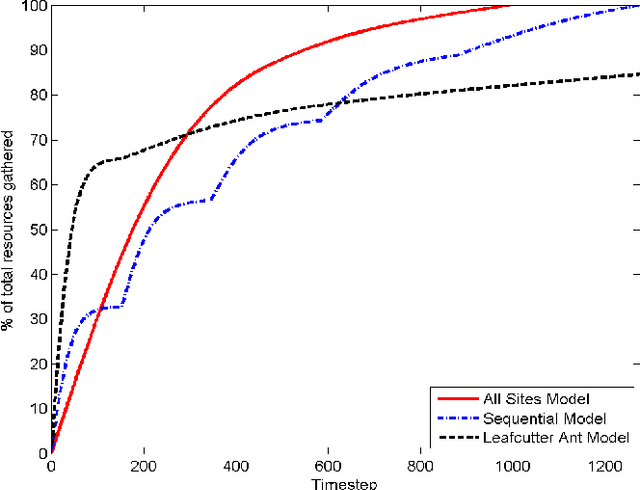

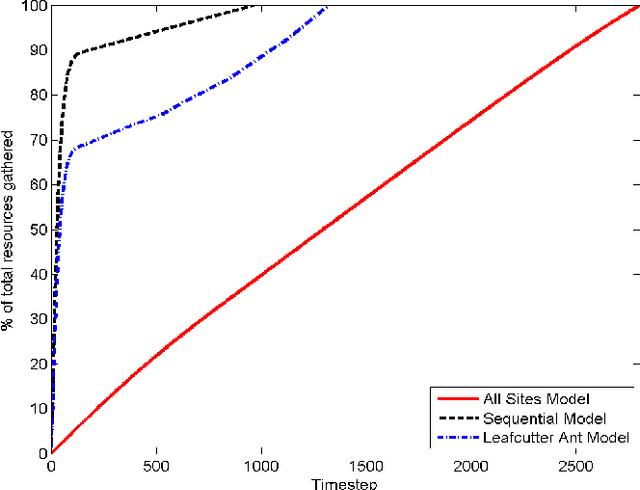

Abstract: Memristors are used to compare three gathering techniques in an already-mapped environment where resource locations are known. The All Site model, which apportions gatherers based on the modeled memristance of that path, proves to be good at increasing overall efficiency and decreasing the time to fully deplete an environment; however, it only works well when the resources are of similar quality. The Leaf Cutter method, based on Leaf Cutter Ant behaviour, assigns all gatherers first to the best resource and, once it is depleted, uses the All Site model to spread them out amongst the rest. The Leaf Cutter model is better at increasing resource influx in the short term and vastly out-performs the All Site model in more varied environments. It is demonstrated that memristor-based abstractions of gatherer models provide potential methods for both the comparison and implementation of agent controls.
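
The two allocation rules described above can be sketched as follows. This is a loose illustration under stated assumptions, not the paper's model: each site is given a hypothetical positive `weight` standing in for whatever score the memristor-based path model produces, gatherers are split in proportion to that weight, and the "best" resource is taken to be the site with the highest weight. The function names, the proportional rule and the rounding scheme are all assumptions.

```python
def all_site(n_gatherers, weights):
    """Split gatherers across all sites in proportion to a per-site weight.

    `weights` maps site name -> hypothetical positive score. Fractional
    shares are rounded with the largest-remainder method so that the
    allocation always sums to n_gatherers.
    """
    total = sum(weights.values())
    if total <= 0:
        return {site: 0 for site in weights}
    shares = {site: n_gatherers * w / total for site, w in weights.items()}
    alloc = {site: int(share) for site, share in shares.items()}
    leftover = n_gatherers - sum(alloc.values())
    # Hand out the remaining gatherers to the largest fractional parts.
    by_remainder = sorted(weights, key=lambda s: shares[s] - alloc[s], reverse=True)
    for site in by_remainder[:leftover]:
        alloc[site] += 1
    return alloc


def leaf_cutter(n_gatherers, weights, remaining):
    """Send everyone to the best site until it is depleted, then use all_site.

    `remaining` maps site name -> units of resource left at that site.
    """
    best = max(weights, key=weights.get)
    if remaining[best] > 0:
        return {site: (n_gatherers if site == best else 0) for site in weights}
    # Best site is exhausted: spread gatherers over the other non-empty sites.
    rest = {s: w for s, w in weights.items() if s != best and remaining[s] > 0}
    alloc = {site: 0 for site in weights}
    alloc.update(all_site(n_gatherers, rest))
    return alloc


# Hypothetical environment with one clearly better site.
weights = {"A": 3.0, "B": 1.0, "C": 1.0}
print(all_site(10, weights))
print(leaf_cutter(10, weights, {"A": 5, "B": 5, "C": 5}))
print(leaf_cutter(10, weights, {"A": 0, "B": 5, "C": 5}))
```

The contrast the abstract draws shows up directly in the toy environment: the proportional rule always keeps some gatherers on the weaker sites, while the Leaf Cutter rule concentrates everyone on the best site first and only then spreads out.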

* 11 pages, 3 figures, conference paper
