Elisabetta Versace

School of Biological and Behavioural Sciences, Department of Biological and Experimental Psychology, Queen Mary University of London, UK

Robotics for poultry farming: challenges and opportunities

Nov 09, 2023

Abstract: Poultry farming plays a pivotal role in addressing human food demand. Robots are emerging as promising tools in poultry farming, with the potential to address sustainability issues while meeting increasing production needs and the demand for animal welfare. This review aims to identify the current advancements, limitations and future directions of development for robotics in poultry farming by examining existing challenges, solutions and innovative research, including robot-animal interactions. We cover the application of robots in different areas, from environmental monitoring to disease control, floor egg collection and animal welfare. Robots not only demonstrate effective implementation on farms but also hold potential for ethological research on collective and social behaviour, which can in turn drive better integration into industrial farming, with improved productivity and enhanced animal welfare.

Learning to detect an animal sound from five examples

May 22, 2023

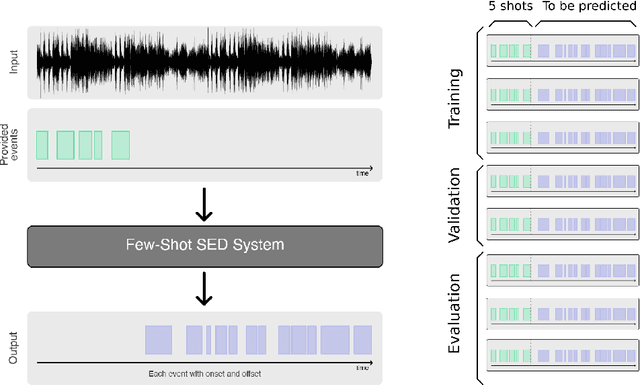

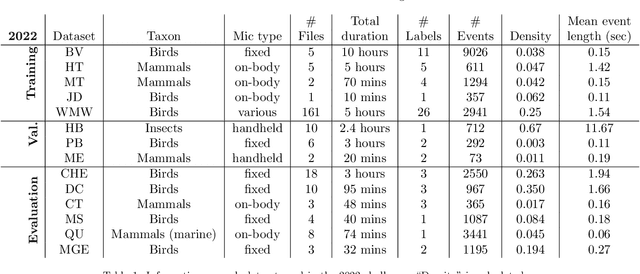

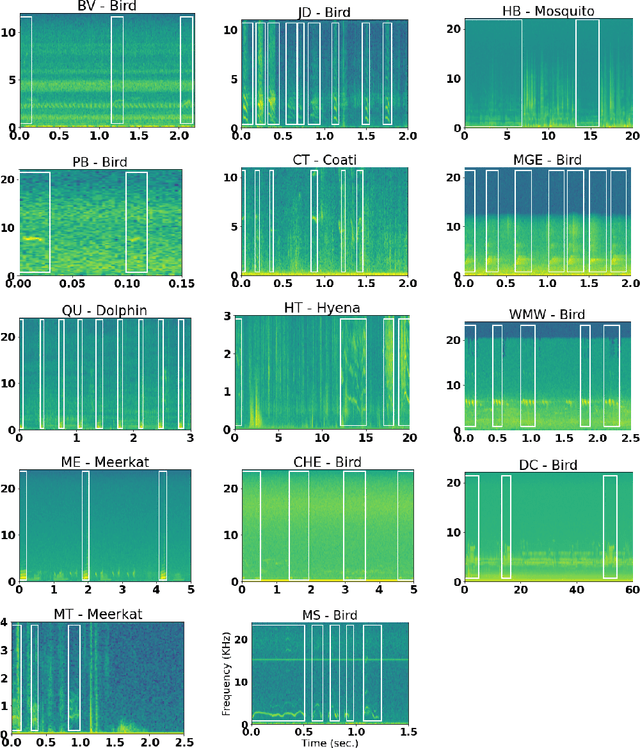

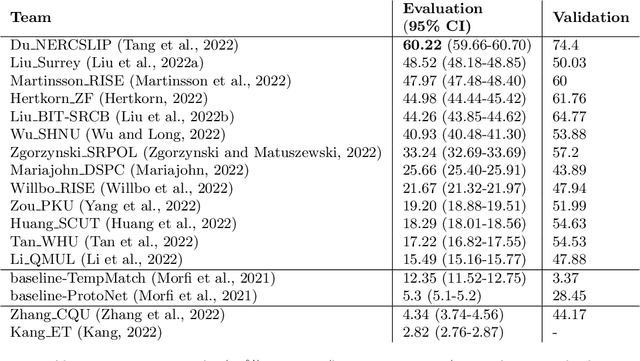

Abstract: Automatic detection and classification of animal sounds has many applications in biodiversity monitoring and animal behaviour. In the past twenty years, the volume of digitised wildlife sound available has massively increased, and automatic classification through deep learning now shows strong results. However, bioacoustics is not a single task but a vast range of small-scale tasks (such as individual ID, call type, emotional indication) with wide variety in data characteristics, and most bioacoustic tasks do not come with strongly-labelled training data. The standard paradigm of supervised learning, focussed on a single large-scale dataset and/or a generic pre-trained algorithm, is insufficient. In this work we recast bioacoustic sound event detection within the AI framework of few-shot learning. We adapt this framework to sound event detection, such that a system can be given the annotated start/end times of as few as 5 events, and can then detect events in long-duration audio -- even when the sound category was not known at the time of algorithm training. We introduce a collection of open datasets designed to strongly test a system's ability to perform few-shot sound event detection, and we present the results of a public contest to address the task. We show that prototypical networks are a strong-performing method when enhanced with adaptations for general characteristics of animal sounds. We demonstrate that widely-varying sound event durations are an important factor in performance, as is non-stationarity, i.e. gradual changes in conditions throughout the duration of a recording. For fine-grained bioacoustic recognition tasks without massive annotated training data, our results demonstrate that few-shot sound event detection is a powerful new method, strongly outperforming traditional signal-processing detection methods in the fully automated scenario.
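As a rough illustration of the prototypical-network idea mentioned in the abstract, the sketch below labels query frames by their distance to class prototypes built from five annotated examples. It is a minimal sketch under strong assumptions, not the authors' system: the 2-D embeddings are made-up values standing in for the output of a learned embedding network, and the function names (`mean_vector`, `classify_frames`) are hypothetical.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of equal-length feature vectors (the class prototype)."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify_frames(positive_support, negative_support, query_frames):
    """Label each query frame 1 (event) or 0 (background) by nearest prototype."""
    pos_proto = mean_vector(positive_support)
    neg_proto = mean_vector(negative_support)
    return [
        1 if euclidean(q, pos_proto) < euclidean(q, neg_proto) else 0
        for q in query_frames
    ]

# Hypothetical embeddings: five annotated events and five background frames.
# In a real system these would come from a trained embedding network.
positive_support = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.1], [1.1, 1.0], [0.8, 1.0]]
negative_support = [[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1], [0.0, 0.2], [0.0, -0.2]]
query_frames = [[0.9, 0.9], [0.1, 0.0], [1.0, 1.2], [0.4, 0.4]]

labels = classify_frames(positive_support, negative_support, query_frames)
print(labels)
```

Consecutive frames labelled 1 would then be merged into events with start and end times; that post-processing step, and the adaptations for animal sounds described in the abstract, are left out here.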

Joint Scattering for Automatic Chick Call Recognition

Oct 08, 2021

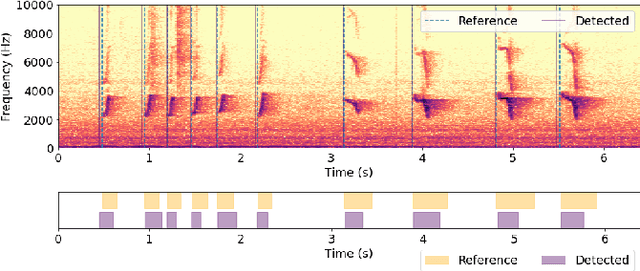

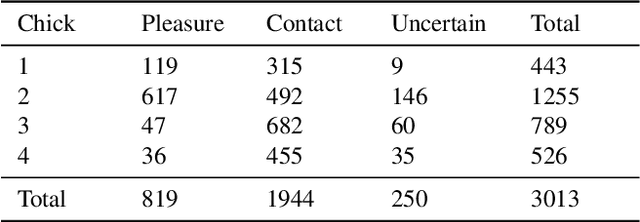

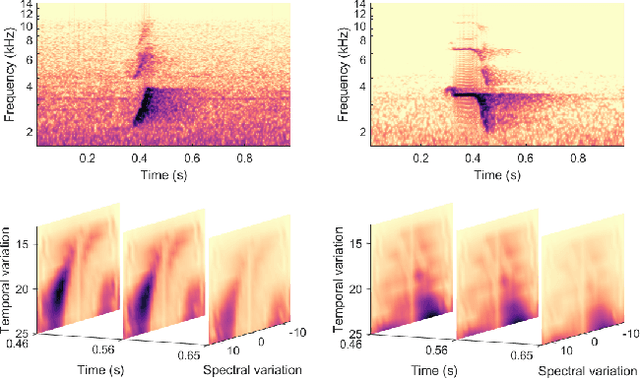

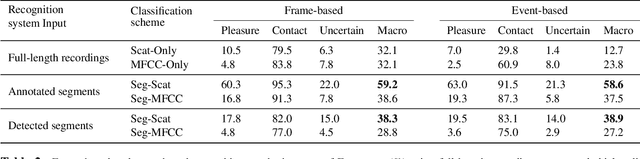

Abstract: Animal vocalisations contain important information about health, emotional state, and behaviour, and can thus potentially be used for animal welfare monitoring. Motivated by the spectro-temporal patterns of chick calls in the time-frequency domain, in this paper we propose an automatic system for chick call recognition using the joint time-frequency scattering transform (JTFS). Taking full-length recordings as input, the system first extracts chick call candidates using an onset detector and silence removal. After computing their JTFS features, a support vector machine classifier assigns each candidate to one of the chick call types. Evaluated on a dataset comprising 3013 chick calls collected in laboratory conditions, the proposed recognition system using the JTFS features improves the frame- and event-based macro F-measures by 9.5% and 11.7%, respectively, compared with a mel-frequency cepstral coefficients baseline.
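The macro F-measure reported above averages per-class F-measures so that each call type counts equally, whatever its frequency. The sketch below shows that computation on per-frame labels. It is an illustrative sketch only: the class names `"A"`, `"B"`, `"C"` and the label sequences are invented, and the paper's exact frame- and event-matching rules are not reproduced.

```python
def f_measure(tp, fp, fn):
    """Harmonic mean of precision and recall; 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def macro_f_measure(y_true, y_pred):
    """Unweighted mean of the per-class F-measures."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        scores.append(f_measure(tp, fp, fn))
    return sum(scores) / len(scores)

# Hypothetical reference and predicted labels for six frames.
y_true = ["A", "A", "B", "B", "C", "C"]
y_pred = ["A", "B", "B", "B", "C", "A"]

score = macro_f_measure(y_true, y_pred)
print(round(score, 4))
```

Here the per-class F-measures are 0.5, 0.8 and about 0.667, so the macro average is about 0.656.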
