Elio Tuci

Studying speed-accuracy trade-offs in best-of-n collective decision-making through heterogeneous mean-field modelling

Oct 20, 2023

Abstract: To succeed in their objectives, groups of individuals must be able to make quick and accurate collective decisions on the best among alternatives with different qualities. Group-living animals aim to do that all the time. Plants and fungi are thought to do so too. Swarms of autonomous robots can also be programmed to make best-of-n decisions for solving tasks collaboratively. Ultimately, humans critically need it, and so many times they should be better at it! Their mathematical tractability has made simple models like the voter model (VM) and the local majority rule model (MR) useful for describing such collective decision-making processes. To reach a consensus, individuals change their opinion by interacting with neighbours in their social network. At least among animals and robots, options with a better quality are exchanged more often and therefore spread faster than lower-quality options, leading to the collective selection of the best option. In our work, we study the impact of individuals making errors in pooling others' opinions, errors that arise, for example, from reducing cognitive load. Our analysis is grounded in a model that generalises the existing VM and MR models, and shows a speed-accuracy trade-off regulated by the cognitive effort of individuals. We also investigate the impact of the interaction network topology on the collective dynamics. To do so, we extend our model and, using the heterogeneous mean-field approach, show that another speed-accuracy trade-off is regulated by network connectivity. An interesting result is that reduced network connectivity corresponds to an increase in collective decision accuracy.

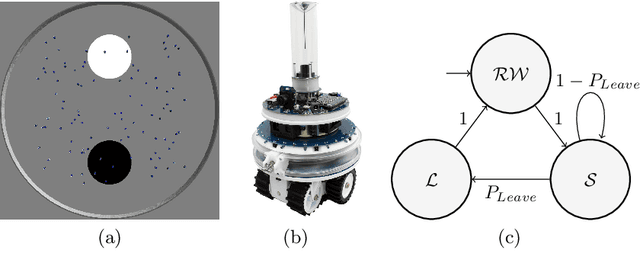

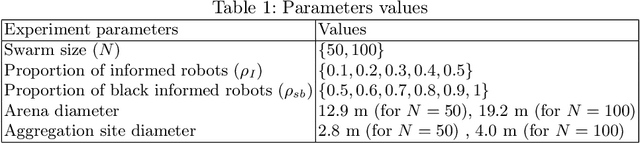

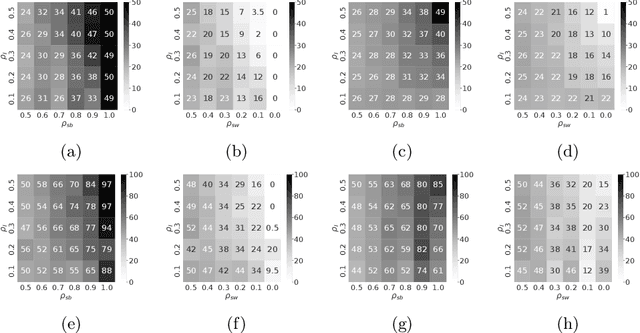

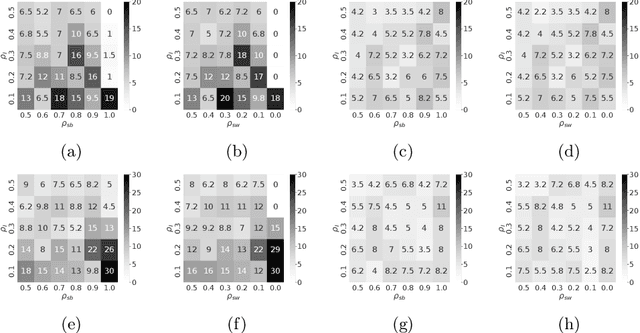

Controlling Robot Swarm Aggregation through a Minority of Informed Robots

May 06, 2022

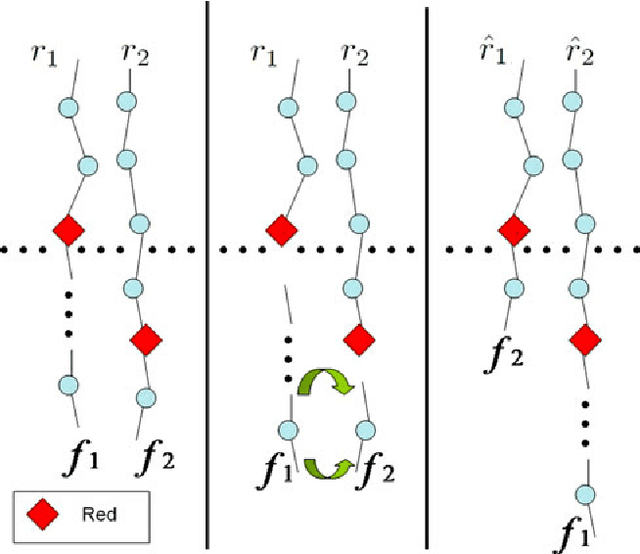

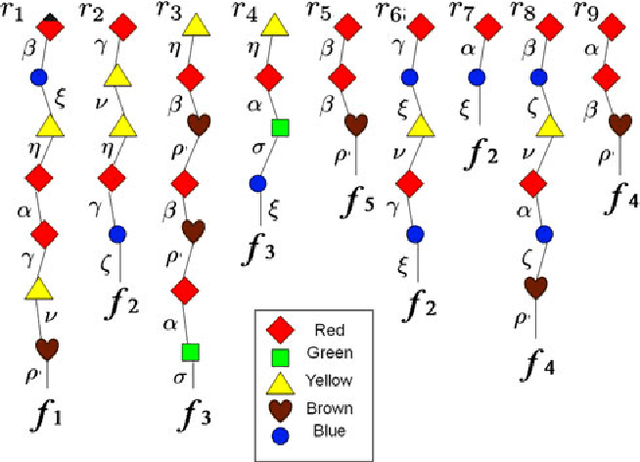

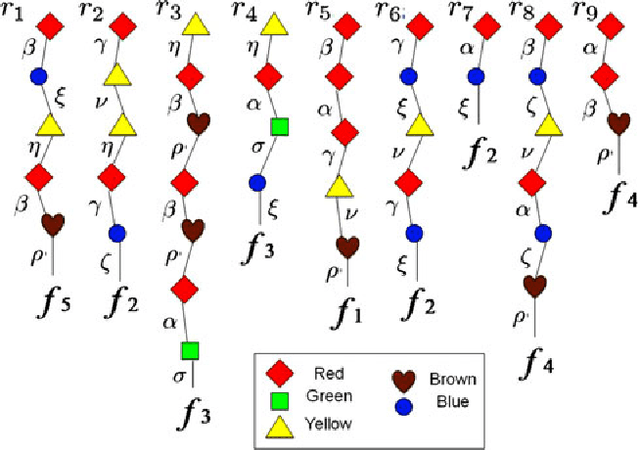

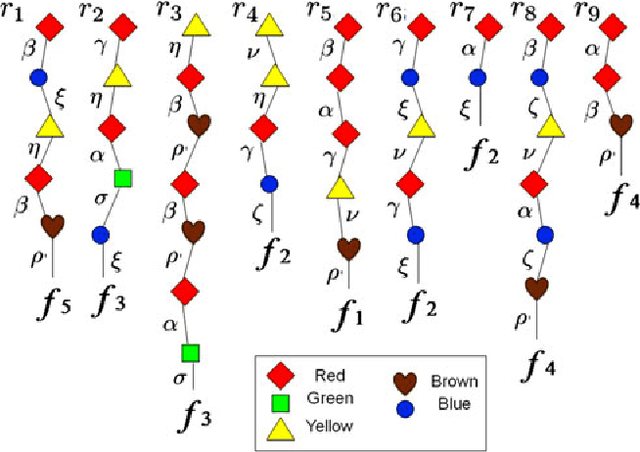

Abstract: Self-organised aggregation is a well-studied behaviour in swarm robotics, as it is the pre-condition for the development of more advanced group-level responses. In this paper, we investigate the design of decentralised algorithms for a swarm of heterogeneous robots that self-aggregate over distinct target sites. A previous study has shown that including a number of informed robots in the swarm can steer the dynamics of the aggregation process towards a desirable distribution of the swarm between the available aggregation sites. We have replicated the results of the previous study using a simplified approach: we removed constraints related to the communication protocol of the robots and simplified the control mechanisms regulating the transitions between states of the probabilistic controller. The results show that the performance obtained with the previous, more complex controller can be replicated with our simplified approach, which offers clear advantages in terms of portability to physical robots and in terms of flexibility. That is, our simplified approach can generate self-organised aggregation responses in a larger set of operating conditions than can be achieved with the complex controller.

Geiringer Theorems: From Population Genetics to Computational Intelligence, Memory Evolutive Systems and Hebbian Learning

May 11, 2013

Abstract: The classical Geiringer theorem addresses the limiting frequency of occurrence of various alleles after repeated application of crossover. It has been adapted to the setting of evolutionary algorithms and, much more recently, to reinforcement learning and Monte-Carlo tree search methodology, to cope with the rather challenging question of action evaluation at the chance nodes. The theorem motivates novel dynamic parallel algorithms that are explicitly described in the current paper for the first time. The algorithms involve independent agents traversing a dynamically constructed directed graph that possibly has loops. A rather elegant and profound category-theoretic model of cognition in biological neural networks, developed over the last thirty years by a well-known French mathematician, professor Andree Ehresmann, jointly with a neurosurgeon, Jan Paul Vanbremeersch, provides a hint at the connection between such algorithms and Hebbian learning.

* arXiv admin note: text overlap with arXiv:1110.4657
