Elif Ertekin

A Single Architecture for Representing Invariance Under Any Space Group

Dec 16, 2025

Abstract: Incorporating known symmetries in data into machine learning models has consistently improved predictive accuracy, robustness, and generalization. However, achieving exact invariance to specific symmetries typically requires designing bespoke architectures for each group of symmetries, limiting scalability and preventing knowledge transfer across related symmetries. In the case of the space groups, symmetries critical to modeling crystalline solids in materials science and condensed matter physics, this challenge is particularly salient as there are 230 such groups in three dimensions. In this work we present a new approach to such crystallographic symmetries by developing a single machine learning architecture that is capable of adapting its weights automatically to enforce invariance to any input space group. Our approach is based on constructing symmetry-adapted Fourier bases through an explicit characterization of constraints that group operations impose on Fourier coefficients. Encoding these constraints into a neural network layer enables weight sharing across different space groups, allowing the model to leverage structural similarities between groups and overcome data sparsity when limited measurements are available for specific groups. We demonstrate the effectiveness of this approach in achieving competitive performance on material property prediction tasks and performing zero-shot learning to generalize to unseen groups.
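As a rough illustration of the kind of constraint the abstract refers to, the following is the standard textbook relation for a lattice-periodic function that is invariant under a single space group operation. The notation (fractional coordinates $x$, integer wave vectors $k$, rotation part $R$, translation part $t$, coefficients $c_k$) is assumed here for illustration and is not taken from the paper.

```latex
% Fourier expansion of a lattice-periodic function in fractional coordinates
f(x) = \sum_{k \in \mathbb{Z}^3} c_k \, e^{2\pi i \, k \cdot x}

% Invariance under the operation g = (R, t):  f(Rx + t) = f(x)
f(Rx + t) = \sum_{k \in \mathbb{Z}^3} c_k \, e^{2\pi i \, k \cdot t} \, e^{2\pi i \, (R^{\top} k) \cdot x}

% Matching coefficients of e^{2\pi i (R^{\top} k) \cdot x} on both sides gives
c_{R^{\top} k} = e^{2\pi i \, k \cdot t} \, c_k
```

Under this relation, coefficients on the same orbit of wave vectors are tied together up to a phase, so only one free coefficient per orbit remains. How the paper encodes such constraints in a network layer is not described in the abstract.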

Space Group Equivariant Crystal Diffusion

May 16, 2025

Abstract: Accelerating inverse design of crystalline materials with generative models has significant implications for a range of technologies. Unlike other atomic systems, 3D crystals are invariant to discrete groups of isometries called the space groups. Crucially, these space group symmetries are known to heavily influence materials properties. We propose SGEquiDiff, a crystal generative model which naturally handles space group constraints with space group invariant likelihoods. SGEquiDiff consists of an SE(3)-invariant, telescoping discrete sampler of crystal lattices; permutation-invariant, transformer-based autoregressive sampling of Wyckoff positions, elements, and numbers of symmetrically unique atoms; and space group equivariant diffusion of atomic coordinates. We show that space group equivariant vector fields automatically live in the tangent spaces of the Wyckoff positions. SGEquiDiff achieves state-of-the-art performance on standard benchmark datasets as assessed by quantitative proxy metrics and quantum mechanical calculations.

Diagonal Symmetrization of Neural Network Solvers for the Many-Electron Schrödinger Equation

Feb 07, 2025

Abstract: Incorporating group symmetries into neural networks has been a cornerstone of success in many AI-for-science applications. Diagonal groups of isometries, which describe the invariance under a simultaneous movement of multiple objects, arise naturally in many-body quantum problems. Despite their importance, diagonal groups have received relatively little attention, as they lack a natural choice of invariant maps except in special cases. We study different ways of incorporating diagonal invariance in neural network ansätze trained via variational Monte Carlo methods, and consider specifically data augmentation, group averaging and canonicalization. We show that, contrary to standard ML setups, in-training symmetrization destabilizes training and can lead to worse performance. Our theoretical and numerical results indicate that this unexpected behavior may arise from a unique computational-statistical tradeoff not found in standard ML analyses of symmetrization. Meanwhile, we demonstrate that post hoc averaging is less sensitive to such tradeoffs and emerges as a simple, flexible and effective method for improving neural network solvers.
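A minimal sketch of group averaging under a diagonal action, assuming a finite group (here the four planar rotations of C4) applied simultaneously to every particle. The names `c4_rotations`, `group_average`, and `toy_model` are hypothetical, and the toy function stands in for a trained network; this is not the authors' implementation.

```python
import numpy as np

def c4_rotations():
    """Return the four 2x2 rotation matrices of the cyclic group C4."""
    mats = []
    for k in range(4):
        c, s = np.cos(k * np.pi / 2), np.sin(k * np.pi / 2)
        mats.append(np.array([[c, -s], [s, c]]))
    return mats

def group_average(f, group):
    """Wrap f so that its output is averaged over the diagonal group action.

    The same matrix g is applied to every row (particle) of x, which is
    what makes the action 'diagonal'.
    """
    def f_avg(x):
        return float(np.mean([f(x @ g.T) for g in group]))
    return f_avg

def toy_model(x):
    """Arbitrary non-invariant function of particle positions, shape (n, 2)."""
    return float(np.sum(x[:, 0] ** 3 + 0.5 * x[:, 0] * x[:, 1]))

group = c4_rotations()
averaged = group_average(toy_model, group)

x = np.array([[1.0, 2.0], [0.5, -1.0]])
rotated = x @ group[1].T  # rotate both particles by 90 degrees together
print(toy_model(x), toy_model(rotated))   # differ: raw model is not invariant
print(averaged(x), averaged(rotated))     # agree up to floating-point error
```

Because the averaging wraps an existing function, it can be applied after training without changing the optimization, which is the sense of "post hoc" used in this sketch.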

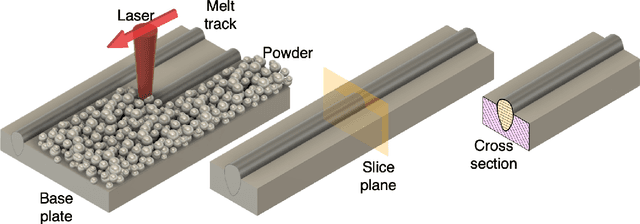

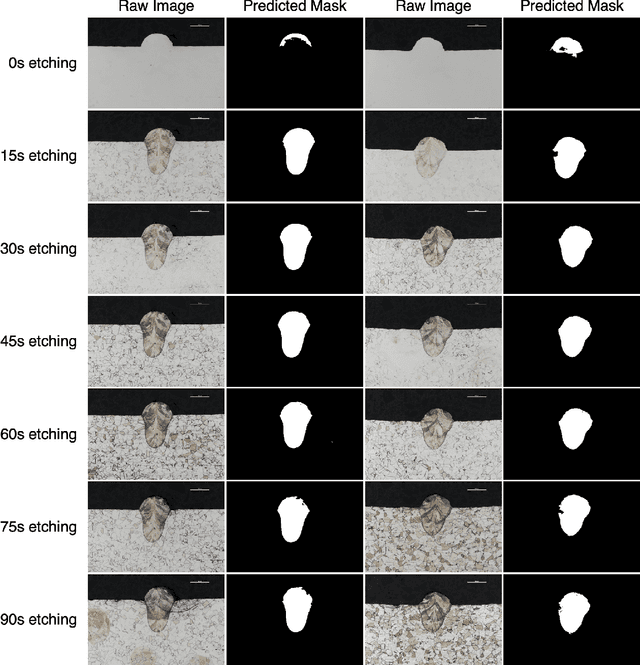

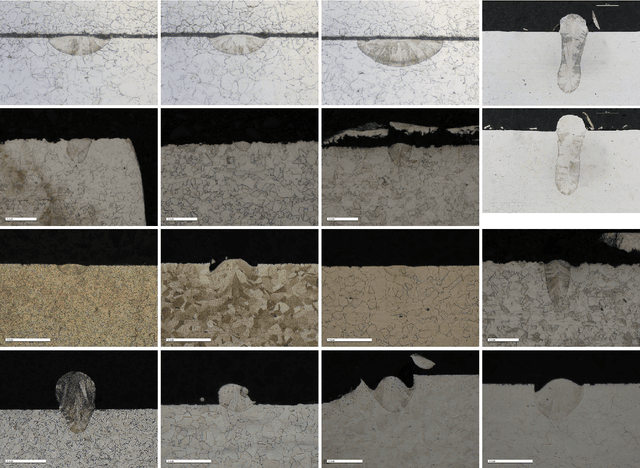

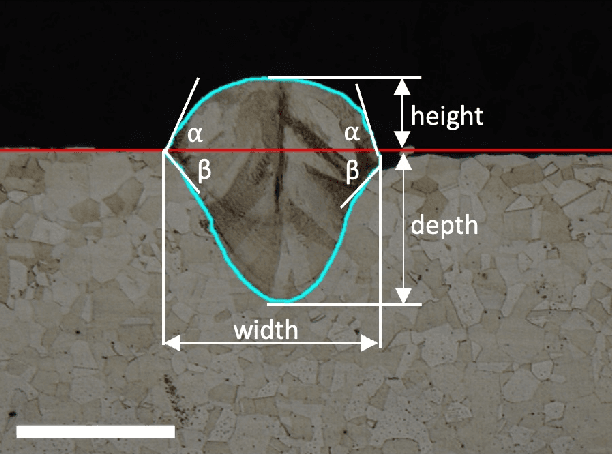

Automated Segmentation and Analysis of Microscopy Images of Laser Powder Bed Fusion Melt Tracks

Sep 26, 2024

Abstract: With the increasing adoption of metal additive manufacturing (AM), researchers and practitioners are turning to data-driven approaches to optimise printing conditions. Cross-sectional images of melt tracks provide valuable information for tuning process parameters, developing parameter scaling data, and identifying defects. Here we present an image segmentation neural network that automatically identifies and measures melt track dimensions from a cross-section image. We use a U-Net architecture to train on a data set of 62 pre-labelled images obtained from different labs, machines, and materials coupled with image augmentation. When neural network hyperparameters such as batch size and learning rate are properly tuned, the learned model shows an accuracy for classification of over 99% and an F1 score over 90%. The neural network exhibits robustness when tested on images captured by various users, printed on different machines, and acquired using different microscopes. A post-processing module extracts the height and width of the melt pool, and the wetting angles. We discuss opportunities to improve model performance and avenues for transfer learning, including extension to other AM processes such as directed energy deposition.
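The two reported metrics can diverge when the foreground class covers few pixels, which is one reason to report both. The sketch below computes pixel accuracy and F1 for binary masks; the function name `pixel_accuracy_and_f1` and the binary-mask setting are assumptions for illustration, since the abstract does not specify how the metrics were computed.

```python
import numpy as np

def pixel_accuracy_and_f1(pred, target):
    """Compute pixel accuracy and F1 score for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)

    tp = np.sum(pred & target)     # foreground predicted as foreground
    fp = np.sum(pred & ~target)    # background predicted as foreground
    fn = np.sum(~pred & target)    # foreground predicted as background
    tn = np.sum(~pred & ~target)   # background predicted as background

    accuracy = (tp + tn) / pred.size
    denom = 2 * tp + fp + fn
    # Convention assumed here: two empty masks count as a perfect match.
    f1 = 2 * tp / denom if denom > 0 else 1.0
    return float(accuracy), float(f1)

# Small foreground: one of four pixels is melt track, one false positive.
pred = [[1, 1, 0, 0]]
target = [[1, 0, 0, 0]]
acc, f1 = pixel_accuracy_and_f1(pred, target)
print(acc, f1)
```

In this toy case accuracy is 0.75 while F1 is 2/3, since F1 ignores the correctly labelled background pixels.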

Leveraging Language Representation for Material Recommendation, Ranking, and Exploration

May 01, 2023

Abstract: Data-driven approaches for material discovery and design have been accelerated by emerging efforts in machine learning. While there is enormous progress towards learning the structure-property relationship of materials, methods that allow for general representations of crystals to effectively explore the vast material search space and identify high-performance candidates remain limited. In this work, we introduce a material discovery framework that uses natural language embeddings derived from material science-specific language models as representations of compositional and structural features. The discovery framework consists of a joint scheme that, given a query material, first recalls candidates based on representational similarity, and then ranks the candidates based on target properties through multi-task learning. The contextual knowledge encoded in language representations is found to convey information about material properties and structures, enabling both similarity analysis for recall, and multi-task learning to share information for related properties. By applying the discovery framework to thermoelectric materials, we demonstrate diversified recommendations of prototype structures and identify under-studied high-performance material spaces, including halide perovskite, delafossite-like, and spinel-like structures. By leveraging material language representations, our framework provides a generalized means for effective material recommendation, which is task-agnostic and can be applied to various material systems.
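The recall-then-rank scheme can be sketched in a few lines, assuming cosine similarity for the recall step and a precomputed array of predicted property scores for the ranking step. The function `recall_then_rank`, the toy embeddings, and the scores are hypothetical; the paper's language-model embeddings and multi-task ranking model are not reproduced here.

```python
import numpy as np

def recall_then_rank(query, embeddings, scores, k):
    """Recall the k candidates most similar to the query, then rank by score.

    query:      (d,) embedding of the query material
    embeddings: (n, d) embeddings of candidate materials
    scores:     (n,) predicted target-property scores (higher is better)
    Returns candidate indices, best score first.
    """
    q = query / np.linalg.norm(query)
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = e @ q                                   # cosine similarities
    recalled = np.argsort(-sims)[:k]               # step 1: recall by similarity
    order = np.argsort(-scores[recalled])          # step 2: rank by property
    return recalled[order].tolist()

# Toy data: four candidates in a 2-dimensional embedding space.
embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.3]])
scores = np.array([0.1, 0.9, 5.0, 0.5])
query = np.array([1.0, 0.0])

print(recall_then_rank(query, embeddings, scores, k=3))
```

Candidate 2 has the highest score but is dissimilar to the query, so it is filtered out in the recall step and never reaches the ranking step.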
