Elad Hirsch

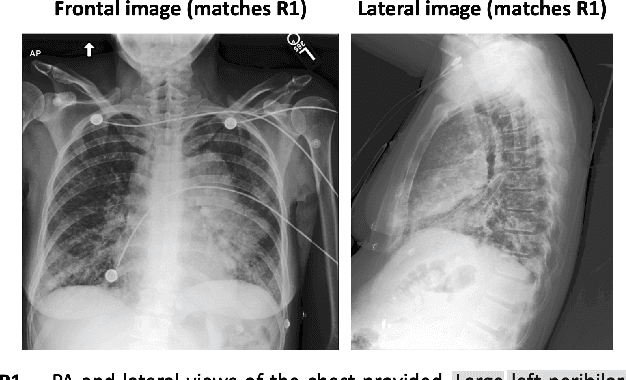

Image-aware Evaluation of Generated Medical Reports

Oct 22, 2024

Abstract: The paper proposes a novel evaluation metric for automatic medical report generation from X-ray images, VLScore. It aims to overcome the limitations of existing evaluation methods, which either focus solely on textual similarities, ignoring clinical aspects, or concentrate only on a single clinical aspect, the pathology, neglecting all other factors. The key idea of our metric is to measure the similarity between radiology reports while considering the corresponding image. We demonstrate the benefit of our metric through evaluation on a dataset where radiologists marked errors in pairs of reports, showing notable alignment with radiologists' judgments. In addition, we provide a new dataset for evaluating metrics. This dataset includes well-designed perturbations that distinguish between significant modifications (e.g., removal of a diagnosis) and insignificant ones. It highlights the weaknesses in current evaluation metrics and provides a clear framework for analysis.

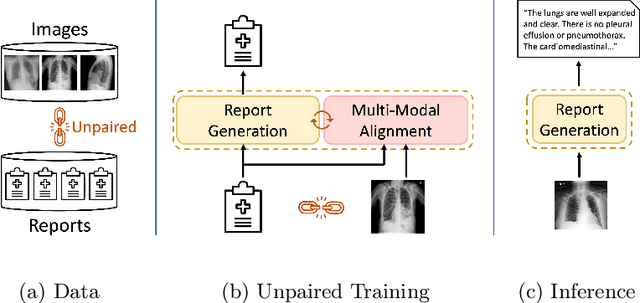

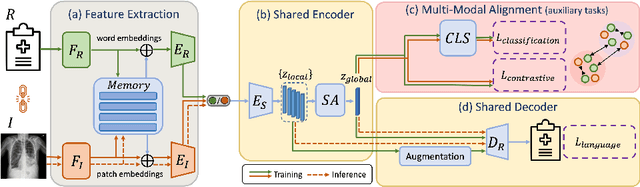

MedRAT: Unpaired Medical Report Generation via Auxiliary Tasks

Jul 04, 2024

Abstract: Generating medical reports for X-ray images is a challenging task, particularly in an unpaired scenario where paired image-report data is unavailable for training. To address this challenge, we propose a novel model that leverages the available information in two distinct datasets, one comprising reports and the other consisting of images. The core idea of our model revolves around the notion that combining auto-encoding report generation with multi-modal (report-image) alignment can offer a solution. However, the challenge persists regarding how to achieve this alignment when pair correspondence is absent. Our proposed solution involves the use of auxiliary tasks, particularly contrastive learning and classification, to position related images and reports in close proximity to each other. This approach differs from previous methods that rely on pre-processing steps using external information stored in a knowledge graph. Our model, named MedRAT, surpasses previous state-of-the-art methods, demonstrating the feasibility of generating comprehensive medical reports without the need for paired data or external tools.

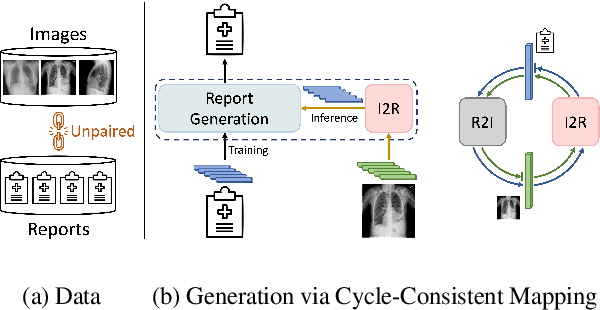

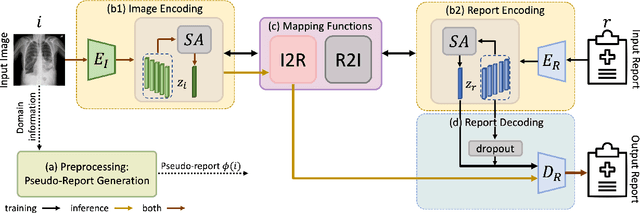

MedCycle: Unpaired Medical Report Generation via Cycle-Consistency

Mar 21, 2024

Abstract: Generating medical reports for X-ray images presents a significant challenge, particularly in unpaired scenarios where access to paired image-report data for training is unavailable. Previous works have typically learned a joint embedding space for images and reports, necessitating a specific labeling schema for both. We introduce an innovative approach that eliminates the need for consistent labeling schemas, thereby enhancing data accessibility and enabling the use of incompatible datasets. This approach is based on cycle-consistent mapping functions that transform image embeddings into report embeddings, coupled with report auto-encoding for medical report generation. Our model and objectives consider intricate local details and the overarching semantic context within images and reports. This approach facilitates the learning of effective mapping functions, resulting in the generation of coherent reports. It outperforms state-of-the-art results in unpaired chest X-ray report generation, demonstrating improvements in both language and clinical metrics.
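To make the cycle-consistency idea concrete, here is a minimal sketch, not the authors' implementation. It assumes the two mapping functions are plain linear maps given as matrices (`f_img2rep` and `g_rep2img` are hypothetical names) and measures how well an image embedding survives the round trip image → report → image.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def cycle_consistency_loss(image_emb, f_img2rep, g_rep2img):
    """Mean squared error between an image embedding and its round trip.

    f_img2rep and g_rep2img are hypothetical linear maps standing in for
    the learned mapping functions; real models would be neural networks.
    """
    report_like = matvec(f_img2rep, image_emb)    # image space -> report space
    reconstructed = matvec(g_rep2img, report_like)  # report space -> image space
    squared_errors = [(a - b) ** 2 for a, b in zip(image_emb, reconstructed)]
    return sum(squared_errors) / len(squared_errors)


# Toy usage: g is the exact inverse of f, so the round trip is lossless.
x = [1.0, 2.0]
f = [[2.0, 0.0], [0.0, 0.5]]
g_inverse = [[0.5, 0.0], [0.0, 2.0]]
g_identity = [[1.0, 0.0], [0.0, 1.0]]

loss_consistent = cycle_consistency_loss(x, f, g_inverse)
loss_inconsistent = cycle_consistency_loss(x, f, g_identity)
```

A training objective of this kind would push the loss toward zero, which encourages the two maps to be consistent with each other even though no image-report pairs are available.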

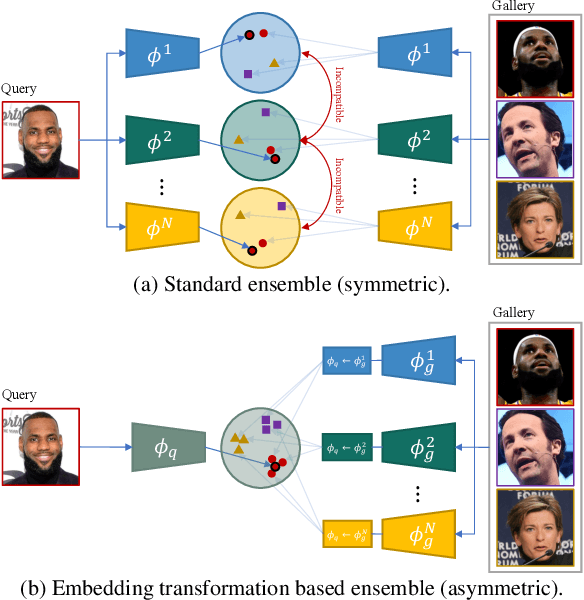

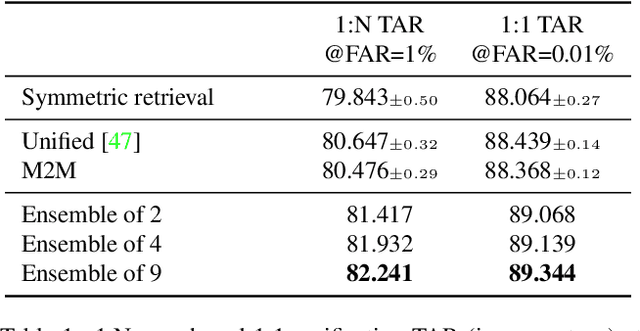

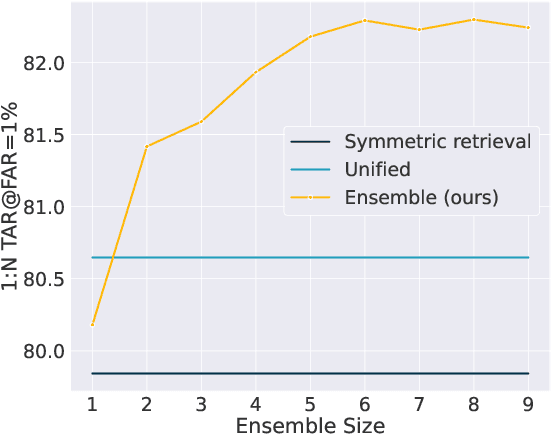

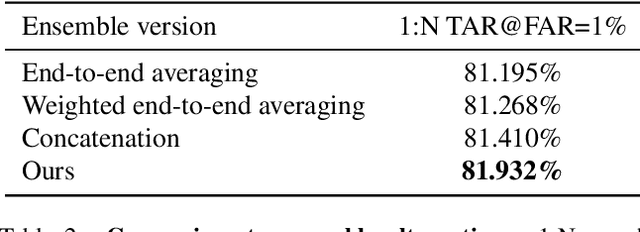

Asymmetric Face Recognition with Cross Model Compatible Ensembles

Mar 30, 2023

Abstract: The asymmetrical retrieval setting is a well-suited solution for resource-constrained face recognition. In this setting, a large model is used for indexing the gallery while a lightweight model is used for querying. The key principle in such systems is ensuring that both models share the same embedding space. Most methods in this domain are based on knowledge distillation. While useful, they suffer from several drawbacks: they are upper-bounded by the performance of the single best model found and cannot be extended to use an ensemble of models in a straightforward manner. In this paper, we present an approach that does not rely on knowledge distillation; rather, it utilizes embedding transformation models. This allows the use of N independently trained and diverse gallery models (e.g., trained on different datasets or having a different architecture) and a single query model. As a result, we improve the overall accuracy beyond that of any single model while maintaining a low computational budget for querying. Additionally, we propose a gallery image rejection method that utilizes the diversity between multiple transformed embeddings to estimate the uncertainty of gallery images.
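The ensemble idea can be illustrated with a small sketch under strong assumptions; it is not the paper's method. Each gallery model's embedding is mapped into the query space by a hypothetical linear transformation, the transformed unit vectors are averaged, and their disagreement serves as a rough uncertainty score. The fusion rule and the uncertainty formula here are illustrative choices, not taken from the abstract.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def normalize(vector):
    """Scale a vector to unit L2 norm (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return vector if norm == 0 else [x / norm for x in vector]


def fuse_gallery(embeddings, transforms):
    """Fuse N gallery embeddings of one image into the query space.

    transforms[i] is a hypothetical linear map from gallery model i's
    space to the query model's space. Returns the fused unit embedding
    and an uncertainty score: 0 when all transformed embeddings agree,
    growing as they diverge.
    """
    transformed = [normalize(matvec(t, e)) for t, e in zip(transforms, embeddings)]
    dim = len(transformed[0])
    mean = [sum(v[i] for v in transformed) / len(transformed) for i in range(dim)]
    uncertainty = 1.0 - math.sqrt(sum(x * x for x in mean))
    return normalize(mean), uncertainty


def cosine_similarity(a, b):
    """Cosine similarity between a query embedding and a gallery embedding."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


# Toy usage with two gallery models and identity transformations.
identity = [[1.0, 0.0], [0.0, 1.0]]
agree_emb, agree_unc = fuse_gallery([[1.0, 0.0], [2.0, 0.0]], [identity, identity])
split_emb, split_unc = fuse_gallery([[1.0, 0.0], [0.0, 1.0]], [identity, identity])
score = cosine_similarity([1.0, 0.0], agree_emb)
```

Under this sketch, a gallery image whose uncertainty exceeds some threshold could be rejected before indexing, while querying still costs only one lightweight forward pass plus a similarity search.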

LIMITR: Leveraging Local Information for Medical Image-Text Representation

Mar 21, 2023

Abstract: Medical imaging analysis plays a critical role in the diagnosis and treatment of various medical conditions. This paper focuses on chest X-ray images and their corresponding radiological reports. It presents a new model that learns a joint X-ray image and report representation. The model is based on a novel alignment scheme between the visual data and the text, which takes into account both local and global information. Furthermore, the model integrates domain-specific information of two types: lateral images and the consistent visual structure of chest images. Our representation is shown to benefit three types of retrieval tasks: text-image retrieval, class-based retrieval, and phrase-grounding.

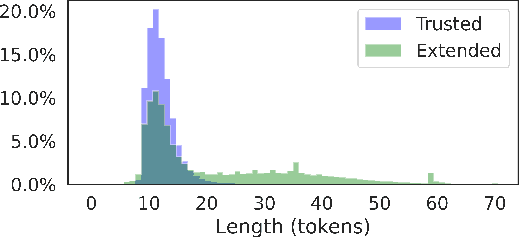

CLID: Controlled-Length Image Descriptions with Limited Data

Nov 27, 2022

Abstract: Controllable image captioning models generate human-like image descriptions while allowing some control over the generated captions. This paper focuses on controlling the caption length, i.e., producing either a short and concise description or a long and detailed one. Since existing image captioning datasets contain mostly short captions, generating long captions is challenging. To address the shortage of long training examples, we propose to enrich the dataset with varying-length self-generated captions. These, however, might be of varying quality and are thus unsuitable for conventional training. We introduce a novel training strategy that selects the data points to be used at different times during the training. Our method dramatically improves the length-control abilities, while exhibiting SoTA performance in terms of caption quality. Our approach is general and is shown to be applicable also to paragraph generation.

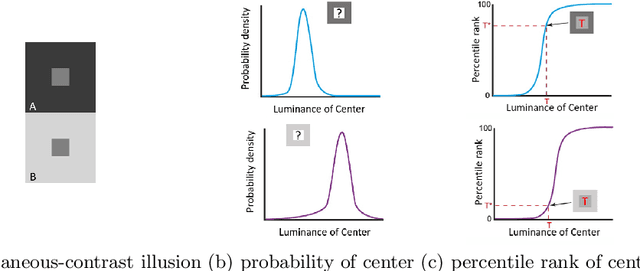

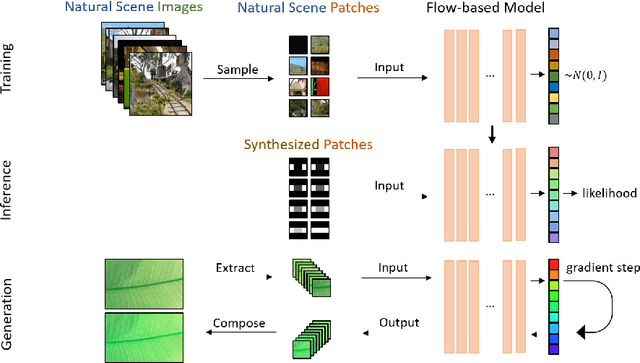

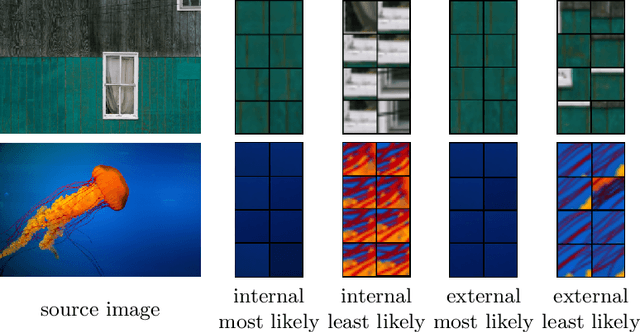

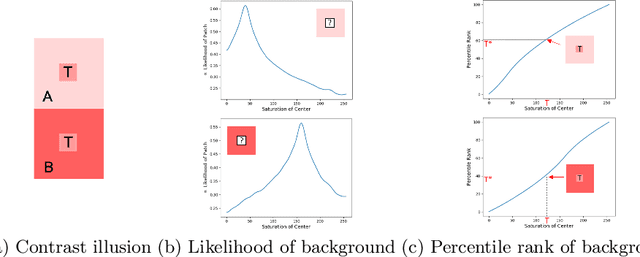

A Statistical Story of Visual Illusions

May 18, 2020

Abstract: This paper explores the wholly empirical paradigm of visual illusions, which was introduced two decades ago in neuroscience. This data-driven approach attempts to explain visual illusions by the likelihood of patches in real-world images. Neither the data nor the tools existed at the time to extensively support this paradigm. In the era of big data and deep learning, at last, it becomes possible. This paper introduces a tool that computes the likelihood of patches, given a large dataset to learn from. Given this tool, we present an approach that manages to support the paradigm and explain visual illusions in a unified manner. Furthermore, we show how to generate (or enhance) visual illusions in natural images by applying the same principles (and tool) in reverse.
