Edesio Alcobaça

Rethinking Default Values: a Low Cost and Efficient Strategy to Define Hyperparameters

Aug 19, 2020

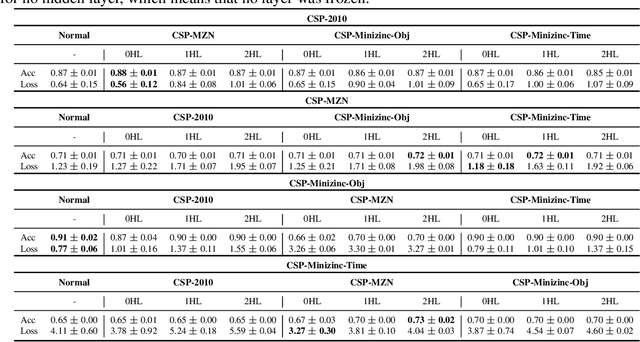

Abstract: Machine Learning (ML) algorithms have been successfully employed by a vast range of practitioners with different backgrounds. One of the reasons for ML's popularity is its capability to consistently deliver accurate results, which can be further boosted by adjusting hyperparameters (HP). However, some practitioners have limited knowledge of the algorithms and do not take advantage of suitable HP settings. In general, HP values are defined by trial and error, by tuning, or by using default values. Trial and error is highly subjective, time-consuming, and dependent on the user's experience. Tuning techniques search for HP values able to maximize the predictive performance of induced models for a given dataset, but with the drawbacks of a high computational cost and target specificity. To avoid tuning costs, practitioners use default values suggested by the algorithm developer or by tools implementing the algorithm. Although default values usually result in models with acceptable predictive performance, different implementations of the same algorithm can suggest distinct default values. To maintain a balance between tuning and using default values, we propose a strategy to generate new optimized default values. Our approach is grounded on a small set of optimized values able to obtain better predictive performance than the default settings provided by popular tools. The HP candidates are estimated through a pool of promising values tuned from a small and informative set of datasets. After performing a large experiment and a careful analysis of the results, we concluded that our approach delivers better default values. Besides, it leads to competitive solutions when compared with the use of tuned values, while being easier to use and having a lower cost. Based on our results, we also extracted simple rules to guide practitioners in deciding whether to use our new methodology or a tuning approach.
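The idea of drawing a small set of default candidates from a pool of tuned values can be illustrated with a minimal sketch. This is not the paper's procedure: the greedy selection rule, the function name `select_defaults`, the configuration names, and the scores below are all assumptions made up for illustration.

```python
from statistics import mean


def select_defaults(scores, k):
    """Greedily pick up to k configurations from a pool.

    scores maps a configuration name to a list of scores, one per
    dataset (same dataset order for every configuration). At each step
    we add the configuration that most improves the best-of-set score
    averaged over datasets. This rule is an illustrative assumption.
    """
    n_datasets = len(next(iter(scores.values())))
    best_so_far = [float("-inf")] * n_datasets
    chosen = []
    for _ in range(min(k, len(scores))):
        def set_quality(name):
            # Average, over datasets, of the best score the set would reach.
            return mean(max(b, s) for b, s in zip(best_so_far, scores[name]))

        candidate = max((c for c in scores if c not in chosen), key=set_quality)
        chosen.append(candidate)
        best_so_far = [max(b, s) for b, s in zip(best_so_far, scores[candidate])]
    return chosen


# Toy, made-up accuracies of three tuned configurations on three datasets.
toy_scores = {
    "cfg_a": [0.90, 0.60, 0.70],
    "cfg_b": [0.70, 0.85, 0.65],
    "cfg_c": [0.80, 0.80, 0.75],
}
selected = select_defaults(toy_scores, k=2)
print(selected)
```

A practitioner would then evaluate only the few selected configurations on a new dataset instead of running a full tuning procedure.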

Transfer Learning for Algorithm Recommendation

Oct 15, 2019

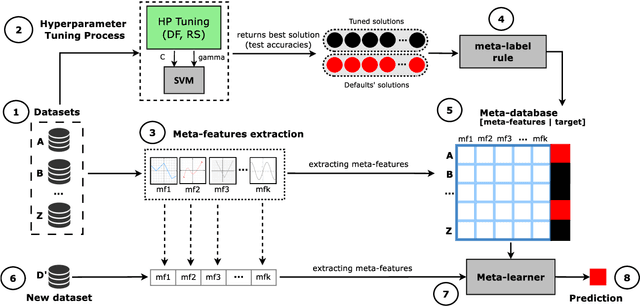

Abstract: Meta-learning is a subarea of Machine Learning that aims to take advantage of prior knowledge to learn faster and with less data [1]. There are different scenarios where meta-learning can be applied, and one of the most common is algorithm recommendation, where previous experience in applying machine learning algorithms to several datasets can be used to learn which algorithm, from a set of options, would be most suitable for a new dataset [2]. Perhaps the most popular form of meta-learning is transfer learning, which consists of transferring knowledge acquired by a machine learning algorithm in a previous learning task to improve its performance more quickly in another, similar task [3]. Transfer learning has been widely applied to a variety of complex tasks such as image classification, machine translation, and speech recognition, achieving remarkable results [4,5,6,7,8]. Although transfer learning is widely used in traditional learning, or base-learning, it is still unknown whether it is useful in a meta-learning setup. For that purpose, in this paper, we investigate the effects of transferring knowledge at the meta-level instead of the base-level. Thus, we train a neural network on meta-datasets related to algorithm recommendation and then, using transfer learning, reuse the knowledge learned by the neural network on other similar datasets from the same domain, to verify how transferable the acquired meta-knowledge is.

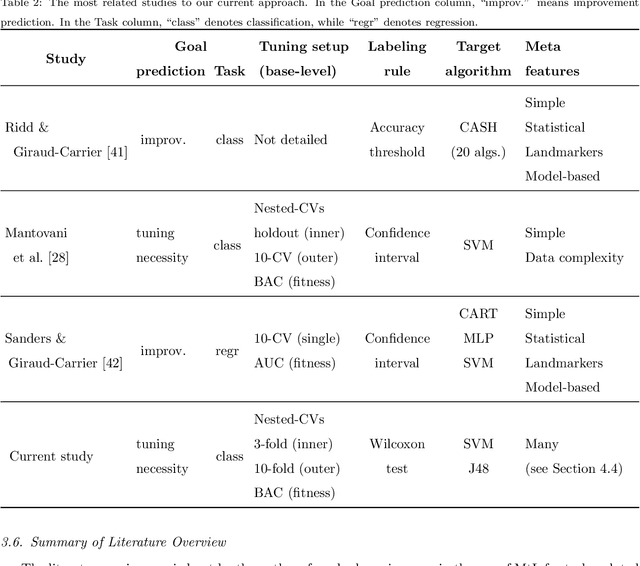

A meta-learning recommender system for hyperparameter tuning: predicting when tuning improves SVM classifiers

Jun 11, 2019

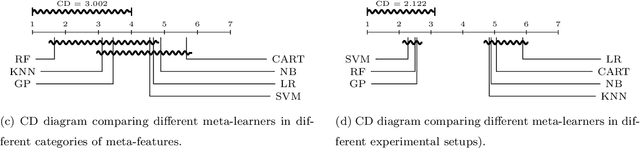

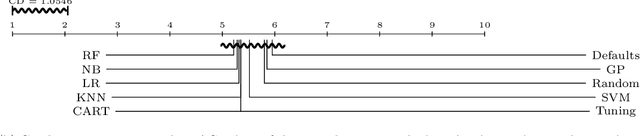

Abstract: For many machine learning algorithms, predictive performance is critically affected by the hyperparameter values used to train them. However, tuning these hyperparameters can come at a high computational cost, especially on larger datasets, while the tuned settings do not always significantly outperform the default values. This paper proposes a recommender system based on meta-learning to identify exactly when it is better to use default values and when to tune hyperparameters for each new dataset. Besides, an in-depth analysis is performed to understand what the meta-learners take into account in their decisions, providing useful insights. An extensive analysis of different categories of meta-features, meta-learners, and setups across 156 datasets is performed. Results show that it is possible to accurately predict when tuning will significantly improve the performance of the induced models. The proposed system reduces the time spent on optimization processes without reducing the predictive performance of the induced models (when compared with the ones obtained using tuned hyperparameters). We also explain the decision-making process of the meta-learners in terms of linear separability-based hypotheses. Although this analysis is focused on the tuning of Support Vector Machines, it can also be applied to other algorithms, as shown in experiments performed with decision trees.

* 49 pages, 11 figures
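The tune-or-default recommendation described in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions: the fixed gain threshold stands in for the paper's significance criterion, a 1-nearest-neighbour rule stands in for its meta-learners, and the two meta-features and all numbers are hypothetical.

```python
import math


def label_dataset(default_score, tuned_score, min_gain=0.01):
    """Label a past dataset "tune" if tuning helped by more than min_gain.

    The fixed threshold is an assumption made for this sketch.
    """
    return "tune" if tuned_score - default_score > min_gain else "default"


def recommend(meta_features, meta_dataset):
    """Return the label of the nearest past dataset in meta-feature space.

    A 1-nearest-neighbour rule used here as a stand-in meta-learner.
    """
    nearest = min(meta_dataset, key=lambda row: math.dist(row[0], meta_features))
    return nearest[1]


# Hypothetical meta-dataset: (meta-features, label) per past dataset.
# Scores passed to label_dataset are made up for illustration.
meta_dataset = [
    ((0.2, 0.9), label_dataset(default_score=0.80, tuned_score=0.805)),
    ((0.8, 0.1), label_dataset(default_score=0.70, tuned_score=0.85)),
]

print(recommend((0.7, 0.2), meta_dataset))
print(recommend((0.25, 0.8), meta_dataset))
```

The point of the design is that the expensive step (tuning) is run only on past datasets to build the meta-dataset; for a new dataset, only its meta-features need to be computed.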
