Donald D. Lucas

A Staged Deep Learning Approach to Spatial Refinement in 3D Temporal Atmospheric Transport

Dec 14, 2024

Abstract: High-resolution spatiotemporal simulations effectively capture the complexities of atmospheric plume dispersion in complex terrain. However, their high computational cost makes them impractical for applications requiring rapid responses or iterative processes, such as optimization, uncertainty quantification, or inverse modeling. To address this challenge, this work introduces the Dual-Stage Temporal Three-dimensional UNet Super-resolution (DST3D-UNet-SR) model, a highly efficient deep learning model for plume dispersion prediction. DST3D-UNet-SR is composed of two sequential modules: the temporal module (TM), which predicts the transient evolution of a plume in complex terrain from low-resolution temporal data, and the spatial refinement module (SRM), which subsequently enhances the spatial resolution of the TM predictions. We train DST3D-UNet-SR using a comprehensive dataset derived from high-resolution large eddy simulations (LES) of plume transport. We propose the DST3D-UNet-SR model to accelerate LES simulations of three-dimensional plume dispersion by three orders of magnitude. Additionally, the model demonstrates the ability to dynamically adapt to evolving conditions through the incorporation of new observational data, substantially improving prediction accuracy in high-concentration regions near the source.

Keywords: Atmospheric sciences, Geosciences, Plume transport, 3D temporal sequences, Artificial intelligence, CNN, LSTM, Autoencoder, Autoregressive model, U-Net, Super-resolution, Spatial Refinement.
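The two-stage data flow described in this abstract (a temporal module rolled forward on a coarse grid, followed by a spatial refinement module applied to each predicted frame) can be illustrated with a minimal NumPy sketch. Everything below is an assumption for illustration only: `temporal_step` and `refine` are hypothetical stand-ins (a decaying persistence forecast and nearest-neighbour upsampling), not the learned TM and SRM networks, and the grid sizes are arbitrary.

```python
import numpy as np

def temporal_step(history):
    """Hypothetical stand-in for the temporal module (TM).

    Predicts the next coarse 3D frame from past frames; here it is just
    a decayed copy of the most recent frame.
    """
    return 0.9 * history[-1]

def refine(frame, factor=2):
    """Hypothetical stand-in for the spatial refinement module (SRM).

    Nearest-neighbour upsampling along each of the three spatial axes.
    """
    for axis in range(3):
        frame = np.repeat(frame, factor, axis=axis)
    return frame

def predict(initial_frames, n_steps, factor=2):
    """Stage 1: autoregressive coarse rollout. Stage 2: refine each frame."""
    history = list(initial_frames)
    coarse = []
    for _ in range(n_steps):
        nxt = temporal_step(history)
        history.append(nxt)   # feed the prediction back in as input
        coarse.append(nxt)
    return np.stack([refine(f, factor) for f in coarse])

rng = np.random.default_rng(0)
initial = rng.random((3, 4, 8, 8))   # 3 early time steps on a 4x8x8 coarse grid
fine = predict(initial, n_steps=5)
print(fine.shape)                    # (5, 8, 16, 16)
```

Keeping the rollout on the coarse grid and refining only afterwards is the point of the staging: the expensive high-resolution step is applied once per output frame rather than inside the autoregressive loop.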

Spatiotemporal Predictions of Toxic Urban Plumes Using Deep Learning

May 30, 2024

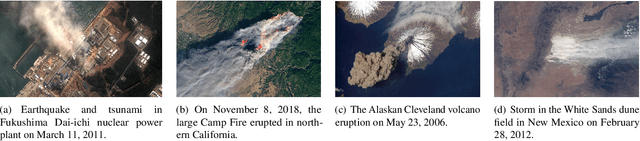

Abstract: Industrial accidents, chemical spills, and structural fires can release large amounts of harmful materials that disperse into urban atmospheres and impact populated areas. Computer models are typically used to predict the transport of toxic plumes by solving fluid dynamical equations. However, these models can be computationally expensive due to the need for many grid cells to simulate turbulent flow and resolve individual buildings and streets. In emergency response situations, alternative methods are needed that can run quickly and adequately capture important spatiotemporal features. Here, we present a novel deep learning model called ST-GasNet that was inspired by the mathematical equations that govern the behavior of plumes as they disperse through the atmosphere. ST-GasNet learns the spatiotemporal dependencies from a limited set of temporal sequences of ground-level toxic urban plumes generated by a high-resolution large eddy simulation model. On independent sequences, ST-GasNet accurately predicts the late-time spatiotemporal evolution, given the early-time behavior as an input, even for cases when a building splits a large plume into smaller plumes. By incorporating large-scale wind boundary condition information, ST-GasNet achieves a prediction accuracy of at least 90% on test data for the entire prediction period.

Learning Physics through Images: An Application to Wind-Driven Spatial Patterns

Feb 03, 2022

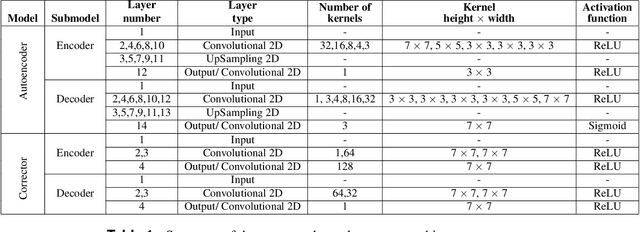

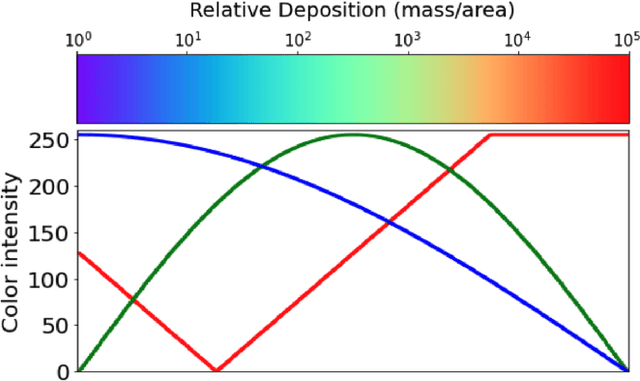

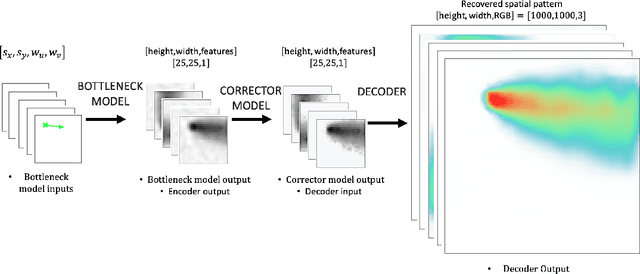

Abstract: For centuries, scientists have observed nature to understand the laws that govern the physical world. The traditional process of turning observations into physical understanding is slow. Imperfect models are constructed and tested to explain relationships in data. Powerful new algorithms are available that can enable computers to learn physics by observing images and videos. Inspired by this idea, instead of training machine learning models using physical quantities, we trained them using images, that is, pixel information. For this work, and as a proof of concept, the physics of interest are wind-driven spatial patterns. Examples of these phenomena include features in Aeolian dunes and the deposition of volcanic ash, wildfire smoke, and air pollution plumes. We assume that the spatial patterns were collected by an imaging device that records the magnitude of the logarithm of deposition as a red, green, blue (RGB) color image with channels containing values ranging from 0 to 255. In this paper, we explore deep convolutional neural network-based autoencoders to exploit relationships in wind-driven spatial patterns, which commonly occur in geosciences, and reduce their dimensionality. Reducing the data dimension size with an encoder allows us to train regression models linking geographic and meteorological scalar input quantities to the encoded space. Once this is achieved, full predictive spatial patterns are reconstructed using the decoder. We demonstrate this approach on images of spatial deposition from a pollution source, where the encoder compresses the dimensionality to 0.02% of the original size and the full predictive model performance on test data achieves an accuracy of 92%.
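The encode, regress, decode workflow in this abstract can be sketched in a few lines of NumPy. This is a toy illustration under stated assumptions, not the paper's method: a linear PCA projection (via SVD) stands in for the deep convolutional autoencoder, ordinary least squares stands in for the regression models, and the synthetic "images" and the two scalar inputs are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: 2 scalar inputs per sample and 16x16 "images"
# that depend linearly on a few features of those inputs.
n, side, k = 200, 16, 4
inputs = rng.uniform(-1, 1, size=(n, 2))
features = np.column_stack([inputs, inputs[:, 0] * inputs[:, 1], np.ones(n)])
basis = rng.normal(size=(features.shape[1], side * side))
images = features @ basis                      # shape (n, 256)

train, test = slice(0, 150), slice(150, n)

# "Encoder"/"decoder": PCA fitted on training images (stand-in for the autoencoder).
mean = images[train].mean(axis=0)
_, _, vt = np.linalg.svd(images[train] - mean, full_matrices=False)
components = vt[:k]                            # shape (k, 256)

def encode(x):
    return (x - mean) @ components.T

def decode(z):
    return z @ components + mean

# Regression from scalar-input features to the encoded space.
latent_train = encode(images[train])
coef, *_ = np.linalg.lstsq(features[train], latent_train, rcond=None)

# Prediction for unseen inputs: regress to the latent space, then decode.
predicted = decode(features[test] @ coef)
rel_err = np.linalg.norm(predicted - images[test]) / np.linalg.norm(images[test])
print(f"latent size: {k} of {side * side} pixels, relative test error: {rel_err:.2e}")
```

The regression only has to learn a mapping into `k` latent values rather than into every pixel, which is the practical benefit of compressing first.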

Deep Convolutional Autoencoders as Generic Feature Extractors in Seismological Applications

Oct 22, 2021

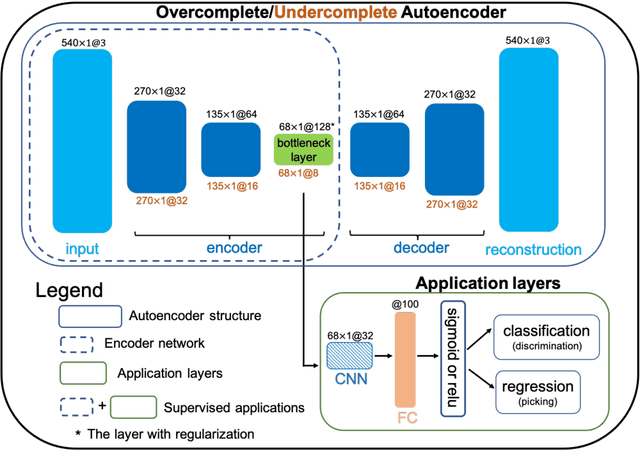

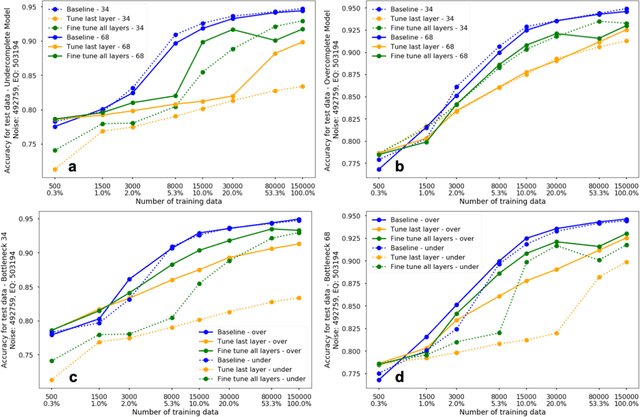

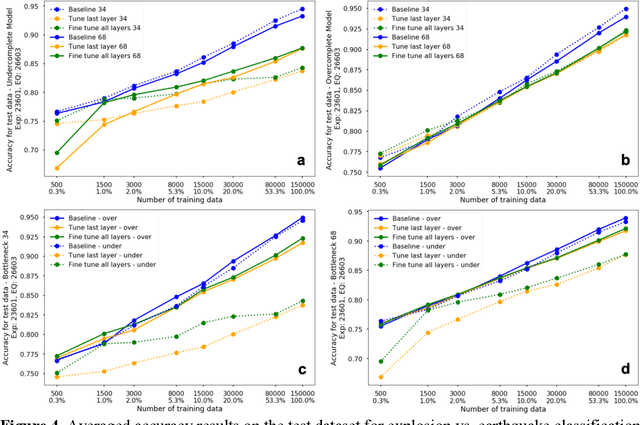

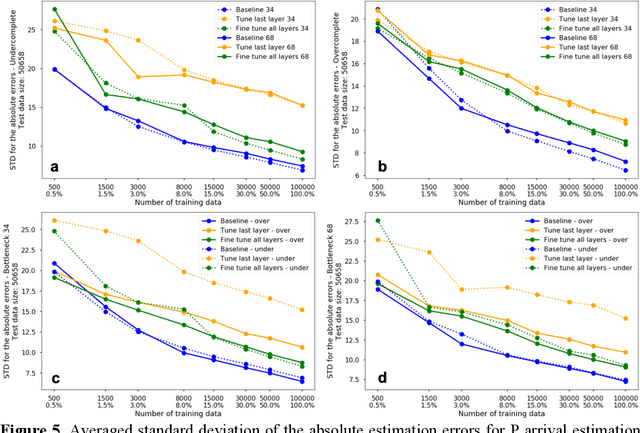

Abstract: The idea of using a deep autoencoder to encode seismic waveform features and then use them in different seismological applications is appealing. In this paper, we designed tests to evaluate this idea of using autoencoders as feature extractors for different seismological applications, such as event discrimination (i.e., earthquake vs. noise waveforms, earthquake vs. explosion waveforms, and phase picking). These tests involve training an autoencoder, either undercomplete or overcomplete, on a large amount of earthquake waveforms, and then using the trained encoder as a feature extractor with subsequent application layers (either a fully connected layer, or a convolutional layer plus a fully connected layer) to make the decision. By comparing the performance of these newly designed models against the baseline models trained from scratch, we conclude that the autoencoder feature extractor approach may only perform well under certain conditions such as when the target problems require features to be similar to the autoencoder encoded features, when a relatively small amount of training data is available, and when certain model structures and training strategies are utilized. The model structure that works best in all these tests is an overcomplete autoencoder with a convolutional layer and a fully connected layer to make the estimation.

Improving seasonal forecast using probabilistic deep learning

Oct 27, 2020

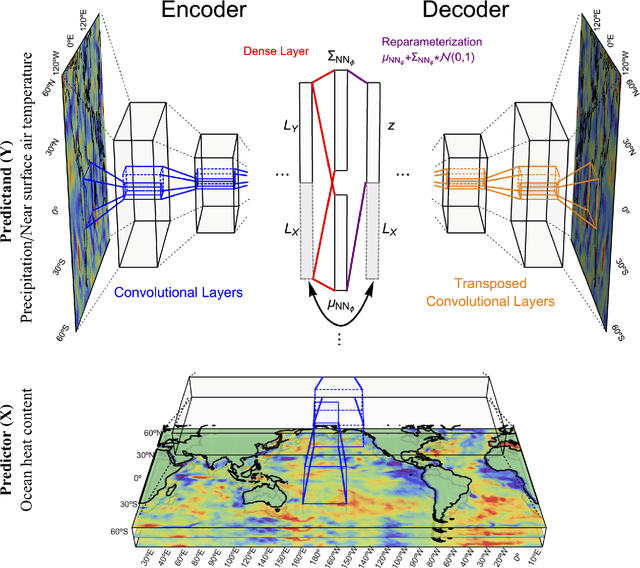

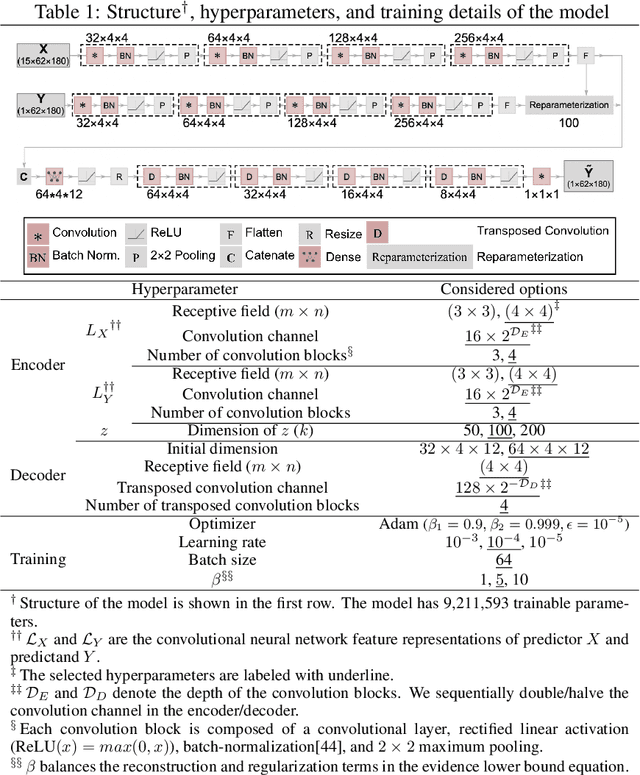

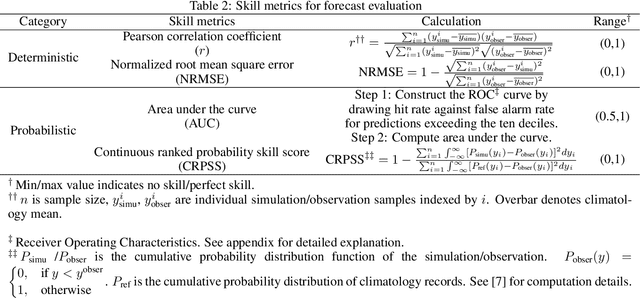

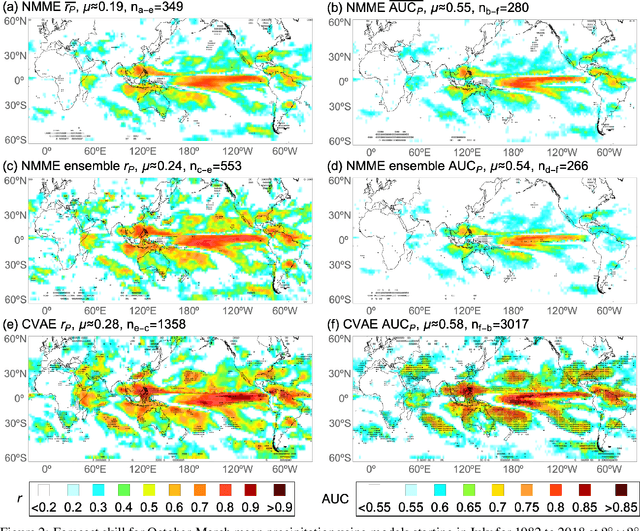

Abstract: The path toward realizing the potential of seasonal forecasting and its socioeconomic benefits depends heavily on improving general circulation model based dynamical forecasting systems. To improve dynamical seasonal forecast, it is crucial to set up forecast benchmarks, and clarify forecast limitations posed by model initialization errors, formulation deficiencies, and internal climate variability. With huge cost in generating large forecast ensembles, and limited observations for forecast verification, the seasonal forecast benchmarking and diagnosing task proves challenging. In this study, we develop a probabilistic deep neural network model, drawing on a wealth of existing climate simulations to enhance seasonal forecast capability and forecast diagnosis. By leveraging complex physical relationships encoded in climate simulations, our probabilistic forecast model demonstrates favorable deterministic and probabilistic skill compared to state-of-the-art dynamical forecast systems in quasi-global seasonal forecast of precipitation and near-surface temperature. We apply this probabilistic forecast methodology to quantify the impacts of initialization errors and model formulation deficiencies in a dynamical seasonal forecasting system. We introduce the saliency analysis approach to efficiently identify the key predictors that influence seasonal variability. Furthermore, by explicitly modeling uncertainty using variational Bayes, we give a more definitive answer to how the El Niño/Southern Oscillation, the dominant mode of seasonal variability, modulates global seasonal predictability.
