Wai Tong Chung

A Staged Deep Learning Approach to Spatial Refinement in 3D Temporal Atmospheric Transport

Dec 14, 2024

Abstract:High-resolution spatiotemporal simulations effectively capture the complexities of atmospheric plume dispersion in complex terrain. However, their high computational cost makes them impractical for applications requiring rapid responses or iterative processes, such as optimization, uncertainty quantification, or inverse modeling. To address this challenge, this work introduces the Dual-Stage Temporal Three-dimensional UNet Super-resolution (DST3D-UNet-SR) model, a highly efficient deep learning model for plume dispersion prediction. DST3D-UNet-SR is composed of two sequential modules: the temporal module (TM), which predicts the transient evolution of a plume in complex terrain from low-resolution temporal data, and the spatial refinement module (SRM), which subsequently enhances the spatial resolution of the TM predictions. We train DST3D-UNet-SR using a comprehensive dataset derived from high-resolution large eddy simulations (LES) of plume transport. We propose the DST3D-UNet-SR model to significantly accelerate LES simulations of three-dimensional plume dispersion by three orders of magnitude. Additionally, the model demonstrates the ability to dynamically adapt to evolving conditions through the incorporation of new observational data, substantially improving prediction accuracy in high-concentration regions near the source.

Keywords: Atmospheric sciences, Geosciences, Plume transport, 3D temporal sequences, Artificial intelligence, CNN, LSTM, Autoencoder, Autoregressive model, U-Net, Super-resolution, Spatial Refinement.

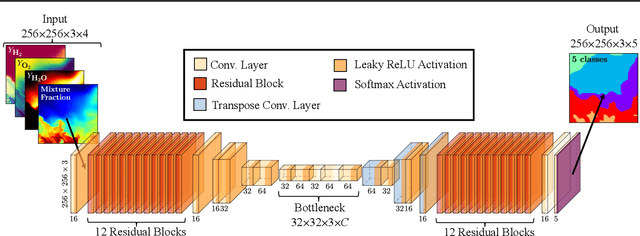

Turbulence in Focus: Benchmarking Scaling Behavior of 3D Volumetric Super-Resolution with BLASTNet 2.0 Data

Sep 26, 2023

Abstract:Analysis of compressible turbulent flows is essential for applications related to propulsion, energy generation, and the environment. Here, we present BLASTNet 2.0, a 2.2 TB network-of-datasets containing 744 full-domain samples from 34 high-fidelity direct numerical simulations, which addresses the current limited availability of 3D high-fidelity reacting and non-reacting compressible turbulent flow simulation data. With this data, we benchmark a total of 49 variations of five deep learning approaches for 3D super-resolution - which can be applied for improving scientific imaging, simulations, turbulence models, as well as in computer vision applications. We perform neural scaling analysis on these models to examine the performance of different machine learning (ML) approaches, including two scientific ML techniques. We demonstrate that (i) predictive performance can scale with model size and cost, (ii) architecture matters significantly, especially for smaller models, and (iii) the benefits of physics-based losses can persist with increasing model size. The outcomes of this benchmark study are anticipated to offer insights that can aid the design of 3D super-resolution models, especially for turbulence models, while this data is expected to foster ML methods for a broad range of flow physics applications. This data is publicly available with download links and browsing tools consolidated at https://blastnet.github.io.
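As a rough illustration of what a neural scaling analysis can involve, the sketch below fits a power law, error ≈ a · size^b, by least squares in log-log space. The power-law form, the function name `fit_power_law`, and the numbers are assumptions made for illustration; the values are synthetic and are not results from the benchmark.

```python
import math

def fit_power_law(sizes, errors):
    """Least-squares fit of errors ~ a * sizes**b in log-log space; returns (a, b)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope in log-log space = scaling exponent
    a = math.exp(y_mean - b * x_mean)  # intercept gives the prefactor
    return a, b

# Synthetic, illustrative values only (hypothetical model sizes and errors).
sizes = [1e5, 1e6, 1e7, 1e8]
errors = [2.0 * s ** -0.25 for s in sizes]

a, b = fit_power_law(sizes, errors)
print(f"prefactor={a:.3f}, exponent={b:.3f}")
```

A more negative fitted exponent would indicate that error falls faster as the model grows, which is one way to compare architectures across sizes.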

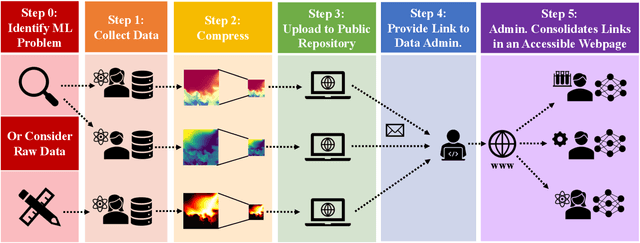

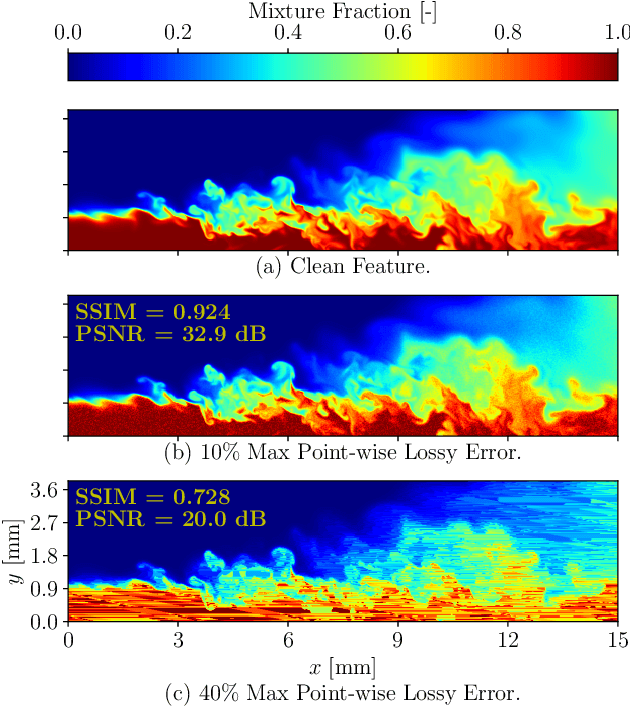

The Bearable Lightness of Big Data: Towards Massive Public Datasets in Scientific Machine Learning

Jul 25, 2022

Abstract:In general, large datasets enable deep learning models to perform with good accuracy and generalizability. However, massive high-fidelity simulation datasets (from molecular chemistry, astrophysics, computational fluid dynamics (CFD), etc.) can be challenging to curate due to dimensionality and storage constraints. Lossy compression algorithms can help mitigate limitations from storage, as long as the overall data fidelity is preserved. To illustrate this point, we demonstrate that deep learning models, trained and tested on data from a petascale CFD simulation, are robust to errors introduced during lossy compression in a semantic segmentation problem. Our results demonstrate that lossy compression algorithms offer a realistic pathway for exposing high-fidelity scientific data to open-source data repositories for building community datasets. In this paper, we outline, construct, and evaluate the requirements for establishing a big data framework, demonstrated at https://blastnet.github.io/, for scientific machine learning.

* Accepted in ICML 2022 2nd AI for Science Workshop. 10 pages, 8 figures
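To make the idea of error-controlled lossy compression concrete, here is a minimal sketch using uniform scalar quantization with an absolute error bound. This is not the compressor used in the paper (the abstract does not name one); the functions `compress` and `decompress` and the sample values are hypothetical.

```python
def compress(values, abs_error_bound):
    """Lossy step: map each float to an integer bin of width 2 * bound."""
    step = 2.0 * abs_error_bound
    return [round(v / step) for v in values]

def decompress(codes, abs_error_bound):
    """Reconstruct each value as the center of its bin."""
    step = 2.0 * abs_error_bound
    return [c * step for c in codes]

# Hypothetical temperature samples; not data from the simulation.
field = [300.0, 312.7, 1450.2, 2100.9, 2099.4]
bound = 0.5

codes = compress(field, bound)
restored = decompress(codes, bound)
max_err = max(abs(a - b) for a, b in zip(field, restored))

# The reconstruction error stays within the requested bound
# (up to floating-point round-off).
print(codes, max_err <= bound + 1e-12)
```

The integer codes can then be passed to a lossless encoder; the point of the sketch is only that the reconstruction error is bounded by a user-chosen tolerance.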

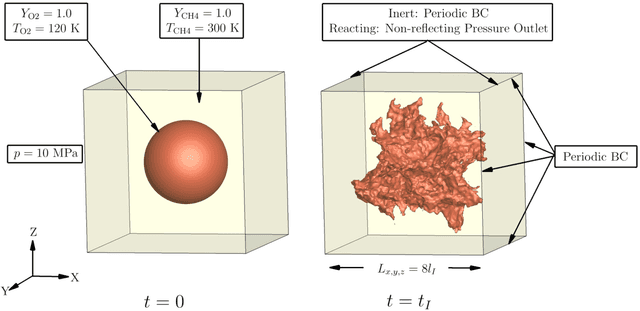

Interpretable Data-driven Methods for Subgrid-scale Closure in LES for Transcritical LOX/GCH4 Combustion

Mar 11, 2021

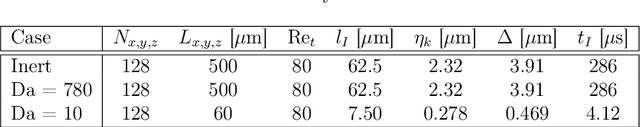

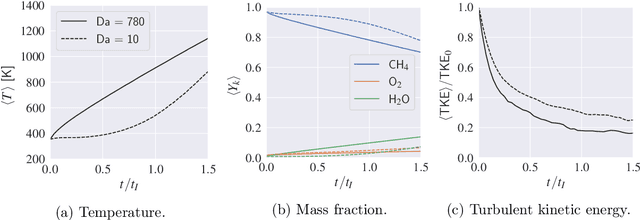

Abstract:Many practical combustion systems such as those in rockets, gas turbines, and internal combustion engines operate under high pressures that surpass the thermodynamic critical limit of fuel-oxidizer mixtures. These conditions require the consideration of complex fluid behaviors that pose challenges for numerical simulations, casting doubts on the validity of existing subgrid-scale (SGS) models in large-eddy simulations of these systems. While data-driven methods have shown high accuracy as closure models in simulations of turbulent flames, these models are often criticized for lack of physical interpretability, wherein they provide answers but no insight into their underlying rationale. The objective of this study is to assess SGS stress models from conventional physics-driven approaches and an interpretable machine learning algorithm, i.e., the random forest regressor, in a turbulent transcritical non-premixed flame. To this end, direct numerical simulations (DNS) of transcritical liquid-oxygen/gaseous-methane (LOX/GCH4) inert and reacting flows are performed. Using this data, a priori analysis is performed on the Favre-filtered DNS data to examine the accuracy of physics-based and random forest SGS-models under these conditions. SGS stresses calculated with the gradient model show good agreement with the exact terms extracted from filtered DNS. The accuracy of the random-forest regressor decreased when physics-based constraints are applied to the feature set. Results demonstrate that random forests can perform as effectively as algebraic models when modeling subgrid stresses, only when trained on a sufficiently representative database. The employment of random forest feature importance score is shown to provide insight into discovering subgrid-scale stresses through sparse regression.
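For reference, the gradient model mentioned above is commonly written as follows for Favre-filtered quantities. The abstract does not state the formula, so the notation here (filter width $\Delta$, filtered density $\bar{\rho}$, Favre-filtered velocity $\tilde{u}_i$) is an assumption based on the standard form of the model, and the variant used in the paper may differ.

```latex
\tau_{ij}^{\mathrm{sgs}}
  = \bar{\rho}\left(\widetilde{u_i u_j} - \tilde{u}_i \tilde{u}_j\right)
  \approx \bar{\rho}\,\frac{\Delta^2}{12}\,
    \frac{\partial \tilde{u}_i}{\partial x_k}
    \frac{\partial \tilde{u}_j}{\partial x_k}
```

In an a priori analysis, the left-hand side is evaluated exactly from filtered DNS fields and compared with the modeled right-hand side.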

Data-assisted combustion simulations with dynamic submodel assignment using random forests

Sep 12, 2020

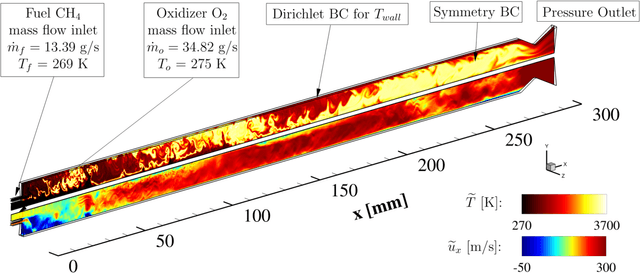

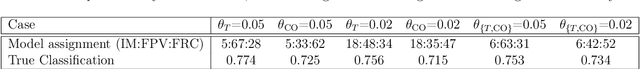

Abstract:In this investigation, we outline a data-assisted approach that employs random forest classifiers for local and dynamic combustion submodel assignment in turbulent-combustion simulations. This method is applied in simulations of a single-element GOX/GCH4 rocket combustor; a priori as well as a posteriori assessments are conducted to (i) evaluate the accuracy and adjustability of the classifier for targeting different quantities-of-interest (QoIs), and (ii) assess improvements, resulting from the data-assisted combustion model assignment, in predicting target QoIs during simulation runtime. Results from the a priori study show that random forests, trained with local flow properties as input variables and combustion model errors as training labels, assign three different combustion models - finite-rate chemistry (FRC), flamelet progress variable (FPV) model, and inert mixing (IM) - with reasonable classification performance even when targeting multiple QoIs. Applications in a posteriori studies demonstrate improved predictions from data-assisted simulations, in temperature and CO mass fraction, when compared with monolithic FPV calculations. These results demonstrate that this data-driven framework holds promise for the dynamic combustion submodel assignment in reacting flow simulations.
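One plausible way to turn combustion model errors into training labels is sketched below: for each cell, pick the cheapest submodel whose error stays within a tolerance for every targeted QoI. The cost ordering (IM, then FPV, then FRC), the tolerance, the function `assign_label`, and the error values are all assumptions for illustration; the abstract does not specify the labeling rule.

```python
# Candidate submodels ordered from cheapest to most expensive (assumed ordering).
MODELS_BY_COST = ["IM", "FPV", "FRC"]

def assign_label(errors, tolerance):
    """Return the cheapest model whose error is within tolerance for every QoI.

    errors: dict mapping model name -> dict of QoI name -> normalized error.
    Falls back to the most expensive model if none qualifies.
    """
    for model in MODELS_BY_COST:
        if all(err <= tolerance for err in errors[model].values()):
            return model
    return MODELS_BY_COST[-1]

# Hypothetical per-cell errors for two QoIs (temperature T and CO mass fraction),
# measured against FRC as the reference, so FRC errors are zero.
cell_errors = [
    {"IM": {"T": 0.01, "CO": 0.02}, "FPV": {"T": 0.01, "CO": 0.01}, "FRC": {"T": 0.0, "CO": 0.0}},
    {"IM": {"T": 0.30, "CO": 0.10}, "FPV": {"T": 0.03, "CO": 0.04}, "FRC": {"T": 0.0, "CO": 0.0}},
    {"IM": {"T": 0.50, "CO": 0.40}, "FPV": {"T": 0.04, "CO": 0.20}, "FRC": {"T": 0.0, "CO": 0.0}},
]

labels = [assign_label(e, tolerance=0.05) for e in cell_errors]
print(labels)
```

Labels built this way, paired with local flow properties as features, could then be used to train a classifier such as scikit-learn's `RandomForestClassifier`. Changing the set of QoIs in the error dictionaries changes which cells require the more expensive models.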
