Dominique Piché-Meunier

A Deep Perceptual Measure for Lens and Camera Calibration

Aug 25, 2022

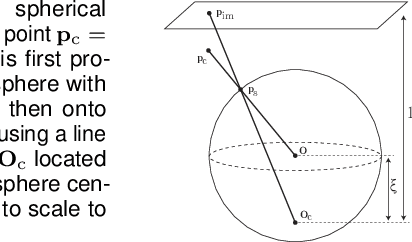

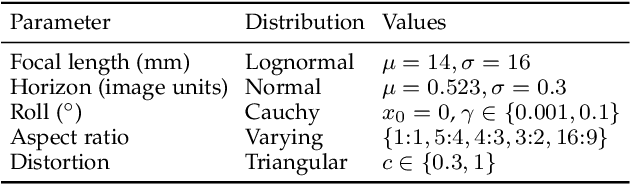

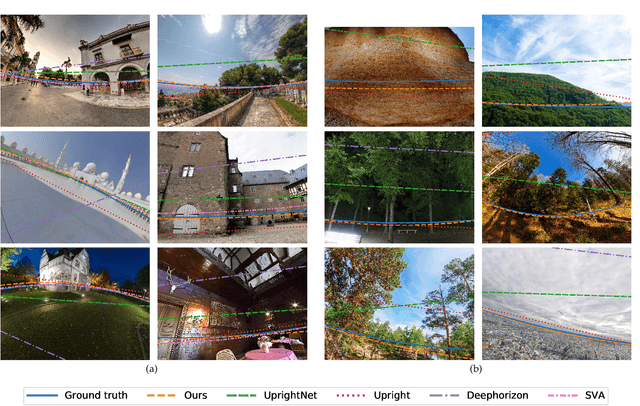

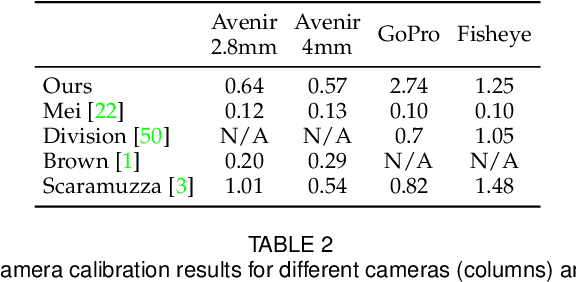

Abstract:Image editing and compositing have become ubiquitous in entertainment, from digital art to AR and VR experiences. To produce beautiful composites, the camera needs to be geometrically calibrated, which can be tedious and requires a physical calibration target. In place of the traditional multi-images calibration process, we propose to infer the camera calibration parameters such as pitch, roll, field of view, and lens distortion directly from a single image using a deep convolutional neural network. We train this network using automatically generated samples from a large-scale panorama dataset, yielding competitive accuracy in terms of standard l2 error. However, we argue that minimizing such standard error metrics might not be optimal for many applications. In this work, we investigate human sensitivity to inaccuracies in geometric camera calibration. To this end, we conduct a large-scale human perception study where we ask participants to judge the realism of 3D objects composited with correct and biased camera calibration parameters. Based on this study, we develop a new perceptual measure for camera calibration and demonstrate that our deep calibration network outperforms previous single-image based calibration methods both on standard metrics as well as on this novel perceptual measure. Finally, we demonstrate the use of our calibration network for several applications, including virtual object insertion, image retrieval, and compositing. A demonstration of our approach is available at https://lvsn.github.io/deepcalib .

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge