Do Hai Son

Privacy-Preserving Driver Drowsiness Detection with Spatial Self-Attention and Federated Learning

Aug 01, 2025

Abstract:Driver drowsiness is one of the main causes of road accidents and is recognized as a leading contributor to traffic-related fatalities. However, detecting drowsiness accurately remains a challenging task, especially in real-world settings where facial data from different individuals is decentralized and highly diverse. In this paper, we propose a novel framework for drowsiness detection that is designed to work effectively with heterogeneous and decentralized data. Our approach develops a new Spatial Self-Attention (SSA) mechanism integrated with a Long Short-Term Memory (LSTM) network to better extract key facial features and improve detection performance. To support federated learning, we employ a Gradient Similarity Comparison (GSC) that selects the most relevant trained models from different operators before aggregation. This improves the accuracy and robustness of the global model while preserving user privacy. We also develop a customized tool that automatically processes video data by extracting frames, detecting and cropping faces, and applying data augmentation techniques such as rotation, flipping, brightness adjustment, and zooming. Experimental results show that our framework achieves a detection accuracy of 89.9% in the federated learning settings, outperforming existing methods under various deployment scenarios. The results demonstrate the effectiveness of our approach in handling real-world data variability and highlight its potential for deployment in intelligent transportation systems to enhance road safety through early and reliable drowsiness detection.
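
The abstract does not spell out how the Gradient Similarity Comparison is computed, so the following is only a minimal sketch of one plausible reading: score each client's update by its cosine similarity to the mean update, keep the best-aligned ones, and average them. The function names, the use of cosine similarity, the mean update as the reference, and the `keep` parameter are all assumptions made for illustration, not the paper's method.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0

def select_and_aggregate(updates, keep):
    """Average the `keep` client updates most aligned with the mean update.

    `updates` is a list of flattened update vectors, one per client.
    Returns (sorted indices of the selected clients, aggregated update).
    Hypothetical helper: the selection rule is an assumption.
    """
    dim = len(updates[0])
    mean = [sum(u[i] for u in updates) / len(updates) for i in range(dim)]
    scores = [cosine(u, mean) for u in updates]
    ranked = sorted(range(len(updates)), key=lambda i: scores[i], reverse=True)
    chosen = sorted(ranked[:keep])
    agg = [sum(updates[i][j] for i in chosen) / keep for j in range(dim)]
    return chosen, agg

# Two clients roughly agree; the third points the opposite way and is dropped.
updates = [[1.0, 0.9], [0.9, 1.1], [-1.0, -0.8]]
chosen, agg = select_and_aggregate(updates, keep=2)
```

In this toy run the two mutually consistent clients are selected and the outlier is excluded before averaging, which is the general effect the abstract attributes to GSC.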

RACNN: Residual Attention Convolutional Neural Network for Near-Field Channel Estimation in 6G Wireless Communications

Mar 04, 2025

Abstract:Near-field channel estimation is a fundamental challenge in sixth-generation (6G) wireless communication, where extremely large antenna arrays (ELAA) enable near-field communication (NFC) but introduce significant signal processing complexity. Traditional model-based methods suffer from high computational costs and limited scalability in large-scale ELAA systems, while existing learning-based approaches often lack robustness across diverse channel conditions. To overcome these limitations, we propose the Residual Attention Convolutional Neural Network (RACNN), which integrates convolutional layers with self-attention mechanisms to enhance feature extraction by focusing on key regions within the CNN feature maps. Experimental results show that RACNN outperforms both traditional and learning-based methods, including XLCNet, across various scenarios, particularly in mixed far-field and near-field conditions. Notably, in these challenging settings, RACNN achieves a normalized mean square error (NMSE) of 4.8*10^(-3) at an SNR of 20 dB, making it a promising solution for near-field channel estimation in 6G.
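
For readers unfamiliar with the metric, NMSE is commonly defined as the squared estimation error divided by the squared magnitude of the true channel. The sketch below uses that common definition; whether the paper averages per realization or over the whole test set is not stated in the abstract, and the sample channel values are made up for illustration.

```python
import math

def nmse(h_true, h_est):
    """NMSE = sum |h_est - h_true|^2 / sum |h_true|^2 over all channel entries."""
    err = sum(abs(e - t) ** 2 for t, e in zip(h_true, h_est))
    power = sum(abs(t) ** 2 for t in h_true)
    return err / power

def to_db(x):
    """Convert a linear power ratio to decibels."""
    return 10.0 * math.log10(x)

# Illustrative complex channel coefficients (not from the paper).
h_true = [1 + 1j, 2 - 1j, -1 + 0.5j]
h_est = [1.1 + 1j, 2 - 0.9j, -1 + 0.5j]
value = nmse(h_true, h_est)

# The reported NMSE of 4.8*10^(-3) corresponds to roughly -23.2 dB.
reported_db = to_db(4.8e-3)
```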

ISDNN: A Deep Neural Network for Channel Estimation in Massive MIMO systems

Oct 26, 2024

Abstract:Massive Multiple-Input Multiple-Output (massive MIMO) technology stands as a cornerstone of 5G and beyond. Despite the remarkable advancements offered by massive MIMO technology, the extremely large number of antennas introduces challenges during the channel estimation (CE) phase. In this paper, we propose a single-step Deep Neural Network (DNN) for CE, termed Iterative Sequential DNN (ISDNN), inspired by recent developments in data detection algorithms. ISDNN is a DNN based on the projected gradient descent algorithm for CE problems, with the iterations transformed into DNN layers using the deep unfolding method. Furthermore, we introduce the structured channel ISDNN (S-ISDNN), extending ISDNN to incorporate side information such as directions of signals and antenna array configurations for enhanced CE. Simulation results highlight that ISDNN significantly outperforms another DNN-based CE (DetNet), in terms of training time (13%), running time (4.6%), and accuracy (0.43 dB). Furthermore, S-ISDNN is even faster than ISDNN in terms of training time, though its overall performance still requires further improvement.
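
Deep unfolding turns each iteration of an iterative solver into one network layer with its own parameters. The following is a minimal sketch of that idea for projected gradient descent on a real-valued least-squares channel model y = X h; it is not the ISDNN architecture. The per-layer step sizes in `steps` stand in for parameters that would be learned, and the box projection with bound `radius` is an assumed placeholder for whatever projection the paper uses.

```python
def matvec(A, x):
    """Multiply matrix A (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def transpose(A):
    """Return the transpose of matrix A as a list of rows."""
    return [list(col) for col in zip(*A)]

def unfolded_pgd(X, y, steps, radius):
    """Run len(steps) projected-gradient 'layers' on 0.5 * ||y - X h||^2.

    Each entry of `steps` plays the role of one layer's (learnable) step size.
    The projection clips every coefficient to [-radius, radius] (an assumption).
    """
    Xt = transpose(X)
    h = [0.0] * len(X[0])
    for mu in steps:
        residual = [p - q for p, q in zip(matvec(X, h), y)]
        grad = matvec(Xt, residual)               # gradient X^T (X h - y)
        h = [hi - mu * g for hi, g in zip(h, grad)]
        h = [max(-radius, min(radius, hi)) for hi in h]  # projection step
    return h

# Noiseless toy problem: known pilot matrix X, true channel h_true.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h_true = [0.5, -0.25]
y = matvec(X, h_true)
h_hat = unfolded_pgd(X, y, steps=[0.3] * 50, radius=1.0)
```

With fixed step sizes this is ordinary projected gradient descent; in an unfolded network the number of layers is kept small and the step sizes are trained so that few layers reach a good estimate.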

Channel Estimation in a Multi-Robot System Using SDR

Mar 19, 2024

Abstract:This study focuses on developing an experimental system for estimating communication channels in a multi-robot mobile system using software-defined radio (SDR) devices. The system consists of two mobile robots programmed for two scenarios: one where the robot remains stationary and another where it follows a predefined trajectory. Communication within the system is conducted through orthogonal frequency-division multiplexing (OFDM) to mitigate the effects of multipath propagation in indoor environments. The system's performance is evaluated using the bit error rate (BER). Connections related to robot motion and communication are implemented using Raspberry Pi 3 and BladeRF x115, respectively. The least squares (LS) technique is employed to estimate the channel with a bit error rate of approximately 10^(-2).
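
For pilot-based OFDM, the LS estimate on each pilot subcarrier reduces to dividing the received pilot by the transmitted pilot. The sketch below shows that textbook form on a noiseless toy example; the pilot pattern, channel values, and the assumption that the channel is unchanged between the pilot and data symbols are illustrative and are not taken from the study's SDR setup.

```python
def ls_estimate(rx_pilots, tx_pilots):
    """Per-subcarrier least-squares estimate H[k] = Y[k] / X[k]."""
    return [y / x for y, x in zip(rx_pilots, tx_pilots)]

def equalize(rx_data, h_est):
    """One-tap equalization: divide each received symbol by its channel estimate."""
    return [y / h for y, h in zip(rx_data, h_est)]

# Known BPSK pilots on four subcarriers (hypothetical pattern).
tx_pilots = [1 + 0j, -1 + 0j, 1 + 0j, -1 + 0j]
# Illustrative frequency-domain channel, assumed constant over both symbols.
channel = [0.8 + 0.2j, 0.5 - 0.4j, -0.3 + 0.9j, 1.1 + 0.1j]

rx_pilots = [h * x for h, x in zip(channel, tx_pilots)]
h_est = ls_estimate(rx_pilots, tx_pilots)

# Recover BPSK data symbols sent through the same channel.
tx_data = [1 + 0j, 1 + 0j, -1 + 0j, 1 + 0j]
rx_data = [h * s for h, s in zip(channel, tx_data)]
recovered = equalize(rx_data, h_est)
```

With receiver noise added, the LS estimate becomes noisy as well, and the resulting decision errors are what a BER measurement captures.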

On the Semi-Blind Mutually Referenced Equalizers for MIMO Systems

Nov 01, 2023

Abstract:Minimizing training overhead in channel estimation is a crucial challenge in wireless communication systems. This paper presents an extension of the traditional blind algorithm, called "Mutually referenced equalizers" (MRE), specifically designed for MIMO systems. Additionally, we propose a novel semi-blind method, SB-MRE, which combines the benefits of pilot-based and MRE approaches to achieve enhanced performance while utilizing a reduced number of pilot symbols. Moreover, the SB-MRE algorithm helps to minimize complexity and training overhead and to remove the ambiguities inherent to blind processing. The simulation results demonstrated that SB-MRE outperforms other linear algorithms, i.e., MMSE, ZF, and MRE, in terms of training overhead symbols and complexity, thereby offering a promising solution to address the challenge of minimizing training overhead in channel estimation for wireless communication systems.

Impact Analysis of Antenna Array Geometry on Performance of Semi-blind Structured Channel Estimation for massive MIMO-OFDM systems

May 16, 2023

Abstract:Channel estimation is always implemented in communication systems to overcome the effect of interference and noise. In wireless communications especially, the task is more challenging because system performance must be improved while saving resources. This paper focuses on investigating the impact of geometries of antenna arrays on the performance of structured channel estimation in massive MIMO-OFDM systems. We use the Cramér-Rao Bound to analyze errors in two methods, i.e., training-based and semi-blind-based channel estimations. The simulation results show that the latter gets significantly better performance than the former. Besides, the system with Uniform Cylindrical Array outperforms the traditional Uniform Linear Array one in both estimation methods.

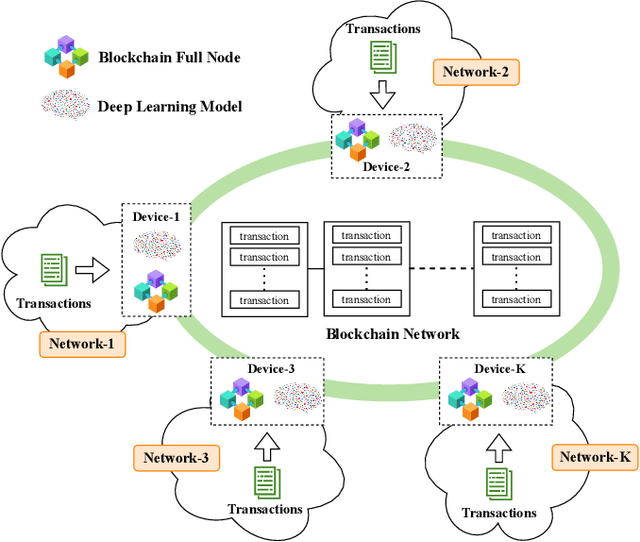

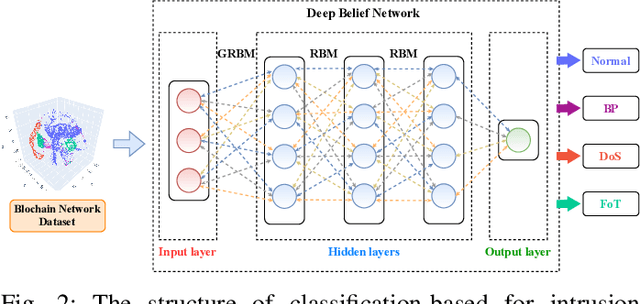

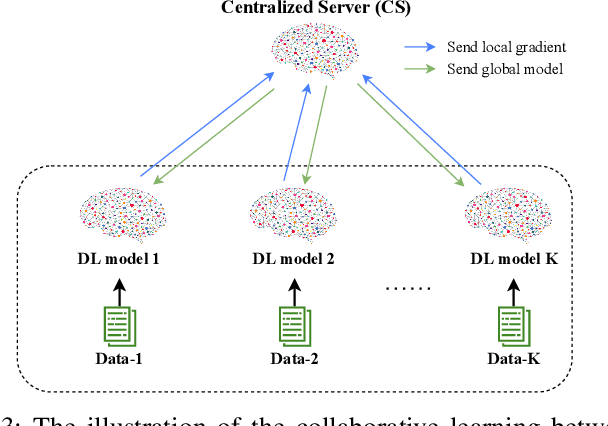

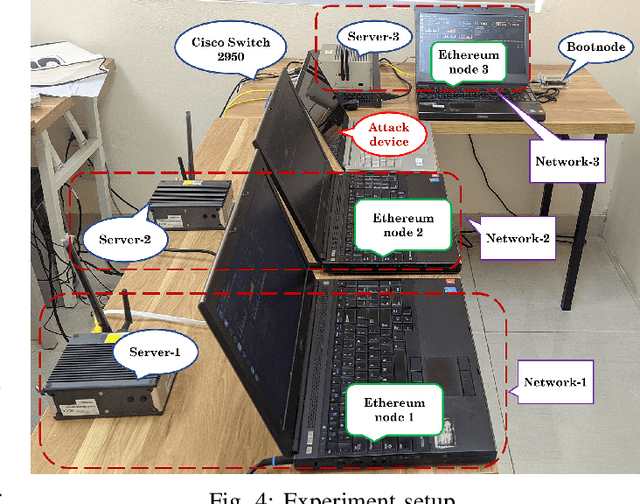

Collaborative Learning for Cyberattack Detection in Blockchain Networks

Mar 21, 2022

Abstract:This article aims to study intrusion attacks and then develop a novel cyberattack detection framework for blockchain networks. Specifically, we first design and implement a blockchain network in our laboratory. This blockchain network will serve two purposes, i.e., generate the real traffic data (including both normal data and attack data) for our learning models and implement real-time experiments to evaluate the performance of our proposed intrusion detection framework. To the best of our knowledge, this is the first dataset that is synthesized in a laboratory for cyberattacks in a blockchain network. We then propose a novel collaborative learning model that allows efficient deployment in the blockchain network to detect attacks. The main idea of the proposed learning model is to enable each blockchain node to actively collect data, learn from its own data, and then exchange the learned knowledge with other blockchain nodes in the network. In this way, we can leverage the knowledge from all the nodes in the network without gathering all raw data for training at a centralized node, as conventional centralized learning solutions do. Such a framework can also avoid the risk of exposing local data's privacy as well as the excessive network overhead/congestion. Both intensive simulations and real-time experiments clearly show that our proposed collaborative learning-based intrusion detection framework can achieve an accuracy of up to 97.7% in detecting attacks.
