Djordje Slijepčević

Institute of Creative\Media/Technologies, St. Poelten University of Applied Sciences, Austria

Explanatory Interactive Machine Learning for Bias Mitigation in Visual Gender Classification

Feb 07, 2026

Abstract:Explanatory interactive learning (XIL) enables users to guide model training in machine learning (ML) by providing feedback on the model's explanations, thereby helping it to focus on features that are relevant to the prediction from the user's perspective. In this study, we explore the capability of this learning paradigm to mitigate bias and spurious correlations in visual classifiers, specifically in scenarios prone to data bias, such as gender classification. We investigate two methodologically different state-of-the-art XIL strategies, i.e., CAIPI and Right for the Right Reasons (RRR), as well as a novel hybrid approach that combines both strategies. The results are evaluated quantitatively by comparing segmentation masks with explanations generated using Gradient-weighted Class Activation Mapping (GradCAM) and Bounded Logit Attention (BLA). Experimental results demonstrate the effectiveness of these methods in (i) guiding ML models to focus on relevant image features, particularly when CAIPI is used, and (ii) reducing model bias (i.e., balancing the misclassification rates between male and female predictions). Our analysis further supports the potential of XIL methods to improve fairness in gender classifiers. Overall, the increased transparency and fairness obtained by XIL leads to slight performance decreases with an exception being CAIPI, which shows potential to even improve classification accuracy.

* 8 pages, 4 figures, CBMI2025

FHSTP@EXIST 2025 Benchmark: Sexism Detection with Transparent Speech Concept Bottleneck Models

Jul 28, 2025

Abstract:Sexism has become widespread on social media and in online conversation. To help address this issue, the fifth Sexism Identification in Social Networks (EXIST) challenge is initiated at CLEF 2025. Among this year's international benchmarks, we concentrate on solving the first task aiming to identify and classify sexism in social media textual posts. In this paper, we describe our solutions and report results for three subtasks: Subtask 1.1 - Sexism Identification in Tweets, Subtask 1.2 - Source Intention in Tweets, and Subtask 1.3 - Sexism Categorization in Tweets. We implement three models to address each subtask which constitute three individual runs: Speech Concept Bottleneck Model (SCBM), Speech Concept Bottleneck Model with Transformer (SCBMT), and a fine-tuned XLM-RoBERTa transformer model. SCBM uses descriptive adjectives as human-interpretable bottleneck concepts. SCBM leverages large language models (LLMs) to encode input texts into a human-interpretable representation of adjectives, then used to train a lightweight classifier for downstream tasks. SCBMT extends SCBM by fusing adjective-based representation with contextual embeddings from transformers to balance interpretability and classification performance. Beyond competitive results, these two models offer fine-grained explanations at both instance (local) and class (global) levels. We also investigate how additional metadata, e.g., annotators' demographic profiles, can be leveraged. For Subtask 1.1, XLM-RoBERTa, fine-tuned on provided data augmented with prior datasets, ranks 6th for English and Spanish and 4th for English in the Soft-Soft evaluation. Our SCBMT achieves 7th for English and Spanish and 6th for Spanish.

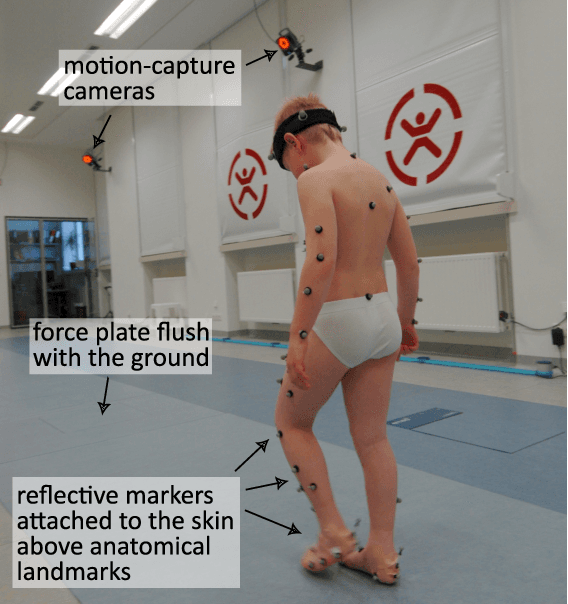

Machine Learning in Biomechanics: Key Applications and Limitations in Walking, Running, and Sports Movements

Mar 05, 2025

Abstract:This chapter provides an overview of recent and promising Machine Learning applications, i.e. pose estimation, feature estimation, event detection, data exploration & clustering, and automated classification, in gait (walking and running) and sports biomechanics. It explores the potential of Machine Learning methods to address challenges in biomechanical workflows, highlights central limitations, i.e. data and annotation availability and explainability, that need to be addressed, and emphasises the importance of interdisciplinary approaches for fully harnessing the potential of Machine Learning in gait and sports biomechanics.

Exploring the Plausibility of Hate and Counter Speech Detectors with Explainable AI

Jul 25, 2024

Abstract:In this paper we investigate the explainability of transformer models and their plausibility for hate speech and counter speech detection. We compare representatives of four different explainability approaches, i.e., gradient-based, perturbation-based, attention-based, and prototype-based approaches, and analyze them quantitatively with an ablation study and qualitatively in a user study. Results show that perturbation-based explainability performs best, followed by gradient-based and attention-based explainability. Prototype-based experiments did not yield useful results. Overall, we observe that explainability strongly supports the users in better understanding the model predictions.

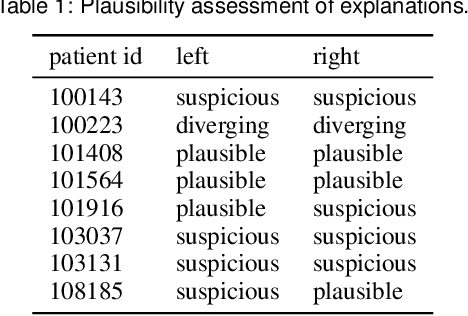

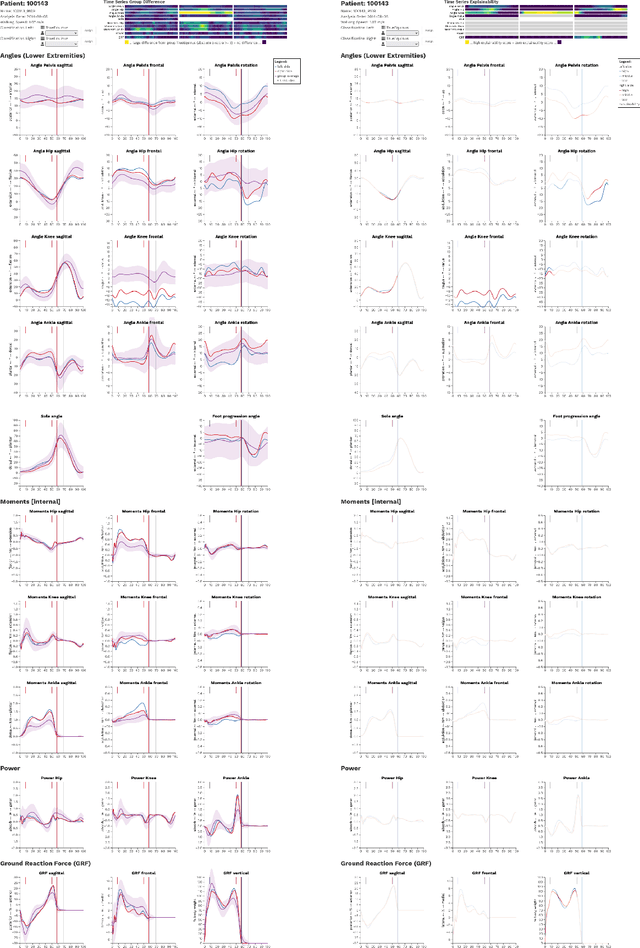

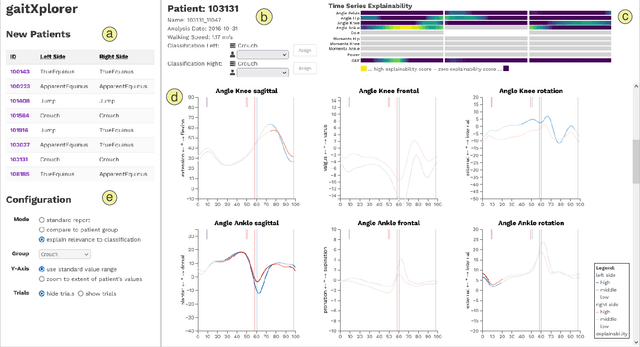

Trustworthy Visual Analytics in Clinical Gait Analysis: A Case Study for Patients with Cerebral Palsy

Aug 10, 2022

Abstract:Three-dimensional clinical gait analysis is essential for selecting optimal treatment interventions for patients with cerebral palsy (CP), but generates a large amount of time series data. For the automated analysis of these data, machine learning approaches yield promising results. However, due to their black-box nature, such approaches are often mistrusted by clinicians. We propose gaitXplorer, a visual analytics approach for the classification of CP-related gait patterns that integrates Grad-CAM, a well-established explainable artificial intelligence algorithm, for explanations of machine learning classifications. Regions of high relevance for classification are highlighted in the interactive visual interface. The approach is evaluated in a case study with two clinical gait experts. They inspected the explanations for a sample of eight patients using the visual interface and expressed which relevance scores they found trustworthy and which they found suspicious. Overall, the clinicians gave positive feedback on the approach as it allowed them a better understanding of which regions in the data were relevant for the classification.

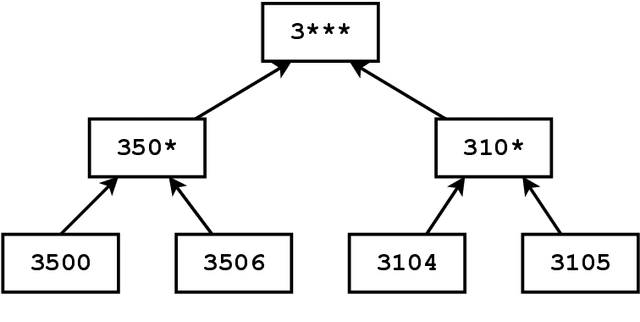

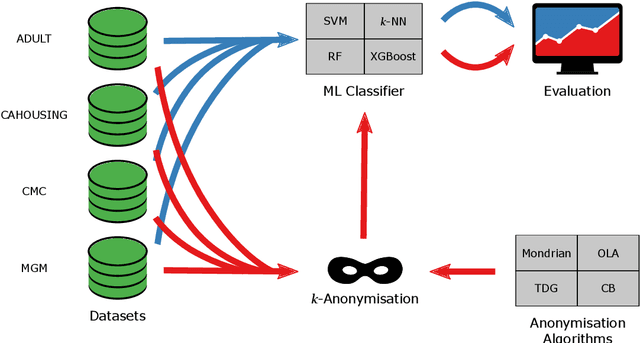

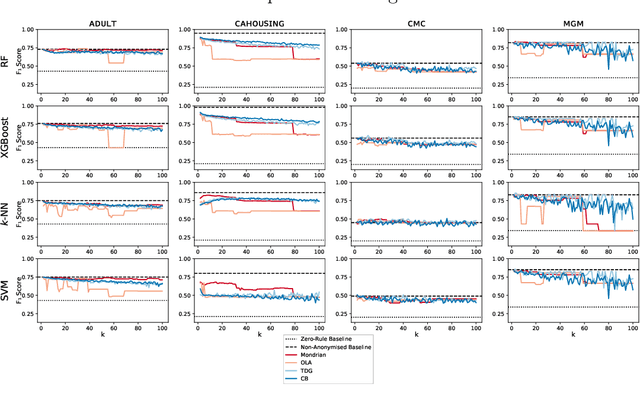

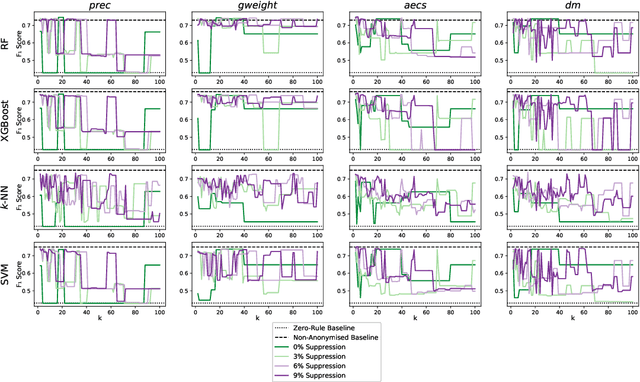

$k$-Anonymity in Practice: How Generalisation and Suppression Affect Machine Learning Classifiers

Feb 09, 2021

Abstract:The protection of private information is a crucial issue in data-driven research and business contexts. Typically, techniques like anonymisation or (selective) deletion are introduced in order to allow data sharing, e.g., in the case of collaborative research endeavours. For use with anonymisation techniques, the $k$-anonymity criterion is one of the most popular, with numerous scientific publications on different algorithms and metrics. Anonymisation techniques often require changing the data and thus necessarily affect the results of machine learning models trained on the underlying data. In this work, we conduct a systematic comparison and detailed investigation into the effects of different $k$-anonymisation algorithms on the results of machine learning models. We investigate a set of popular $k$-anonymisation algorithms with different classifiers and evaluate them on different real-world datasets. Our systematic evaluation shows that with an increasingly strong $k$-anonymity constraint, the classification performance generally degrades, but to varying degrees and strongly depending on the dataset and anonymisation method. Furthermore, Mondrian can be considered as the method with the most appealing properties for subsequent classification.
