Dishant Padalia

Decomposed evaluations of geographic disparities in text-to-image models

Jun 17, 2024

Abstract: Recent work has identified substantial disparities in generated images of different geographic regions, including stereotypical depictions of everyday objects like houses and cars. However, existing measures for these disparities have been limited to either human evaluations, which are time-consuming and costly, or automatic metrics evaluating full images, which are unable to attribute these disparities to specific parts of the generated images. In this work, we introduce a new set of metrics, Decomposed Indicators of Disparities in Image Generation (Decomposed-DIG), that allows us to separately measure geographic disparities in the depiction of objects and backgrounds in generated images. Using Decomposed-DIG, we audit a widely used latent diffusion model and find that generated images depict objects with better realism than backgrounds and that backgrounds in generated images tend to contain larger regional disparities than objects. We use Decomposed-DIG to pinpoint specific examples of disparities, such as stereotypical background generation in Africa, difficulty generating modern vehicles in Africa, and unrealistic placement of some objects in outdoor settings. Informed by our metric, we use a new prompting structure that enables a 52% worst-region improvement and a 20% average improvement in generated background diversity.
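To make the distinction between a "worst-region" and an "average" improvement concrete, here is a minimal sketch. It assumes that each region has one scalar diversity score, that the worst region is the one with the lowest score, and that improvement is measured as relative change; the abstract does not spell out these definitions. The region names and scores are hypothetical and are not results from the paper.

```python
from statistics import mean


def relative_improvement(baseline: float, improved: float) -> float:
    """Relative change from baseline to improved (0.2 means +20%)."""
    return (improved - baseline) / baseline


def summarize(baseline: dict, improved: dict) -> dict:
    """Compare worst-region and average scores before and after a change.

    Assumption: "worst region" is the minimum score over regions, taken
    separately for the baseline and the improved setting.
    """
    return {
        "worst_region": relative_improvement(
            min(baseline.values()), min(improved.values())
        ),
        "average": relative_improvement(
            mean(baseline.values()), mean(improved.values())
        ),
    }


# Hypothetical per-region background diversity scores (illustrative only).
baseline_scores = {"region_a": 0.50, "region_b": 0.25}
improved_scores = {"region_a": 0.55, "region_b": 0.35}

summary = summarize(baseline_scores, improved_scores)
print(summary)
```

In this toy setup the lowest-scoring region gains proportionally more than the average does, which is the pattern the abstract's two percentages describe.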

A CNN-LSTM Combination Network for Cataract Detection using Eye Fundus Images

Oct 28, 2022

Abstract: According to multiple authorities, including the World Health Organization, vision-related impairments and disorders are becoming a significant issue. According to a recent report, one of the leading causes of irreversible blindness in persons over the age of 50 is delayed cataract treatment. A cataract is a cloudy spot in the eye's lens that causes visual loss. Cataracts often develop slowly and eventually make it difficult to drive, read, and even recognize faces. This necessitates the development of rapid and dependable diagnosis and treatment solutions for ocular illnesses. Previously, such illnesses were diagnosed manually, which was time-consuming and prone to human error. However, as technology advances, automated, computer-based methods that reduce both time and human labor while producing trustworthy results are now accessible. In this study, we developed a CNN-LSTM-based model architecture with the goal of creating a low-cost diagnostic system that can classify normal and cataractous cases of ocular disease from fundus images. The proposed model was trained on the publicly available ODIR dataset, which includes fundus images of patients' left and right eyes. The proposed architecture outperformed previous systems, achieving a state-of-the-art accuracy of 97.53%.

An Ensemble of Convolutional Neural Networks to Detect Foliar Diseases in Apple Plants

Oct 01, 2022

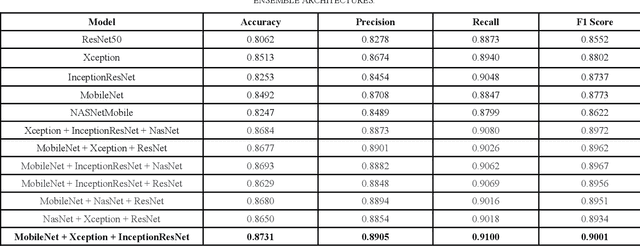

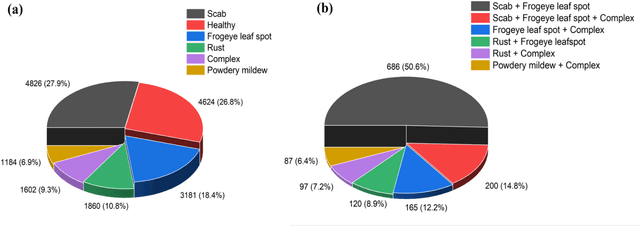

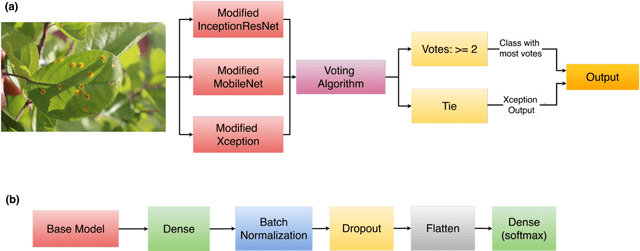

Abstract: Apple diseases, if not diagnosed early, can lead to massive resource loss and pose a serious threat to humans and animals who consume the infected apples. Hence, it is critical to diagnose these diseases early in order to manage plant health and minimize the risks associated with them. However, the conventional approach to monitoring plant diseases entails manual scouting and analysis of the features, texture, color, and shape of the plant leaves, resulting in delayed diagnosis and misjudgments. Our work proposes an ensemble of Xception, InceptionResNet, and MobileNet architectures to detect 5 different types of apple plant diseases. The model has been trained on the publicly available Plant Pathology 2021 dataset and can classify multiple diseases in a given plant leaf. The system has achieved outstanding results in multi-class and multi-label classification and can be used in a real-time setting to monitor large apple plantations and help farmers manage their yields effectively.
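One common way to combine several classifiers for multi-label prediction is soft voting: average each model's per-label probabilities and threshold the mean. The sketch below illustrates that idea only. The abstract does not state how the three networks are combined, so the averaging rule, the 0.5 threshold, the function name `ensemble_predict`, and the probability arrays are all assumptions made for illustration.

```python
import numpy as np


def ensemble_predict(prob_list, threshold=0.5):
    """Soft-voting ensemble for multi-label classification.

    prob_list: list of arrays, each of shape (n_samples, n_labels), holding
    per-label probabilities from one model (hypothetical inputs).
    Returns the averaged probabilities and the thresholded 0/1 labels.
    """
    probs = np.stack(prob_list, axis=0)  # (n_models, n_samples, n_labels)
    mean_probs = probs.mean(axis=0)      # average over models
    labels = (mean_probs >= threshold).astype(int)
    return mean_probs, labels


# Hypothetical per-label probabilities for 2 leaves and 2 disease labels.
xception_probs = np.array([[0.9, 0.2], [0.1, 0.7]])
inception_resnet_probs = np.array([[0.8, 0.4], [0.3, 0.6]])
mobilenet_probs = np.array([[0.7, 0.3], [0.2, 0.8]])

mean_probs, labels = ensemble_predict(
    [xception_probs, inception_resnet_probs, mobilenet_probs]
)
print(mean_probs)
print(labels)
```

Because each label is thresholded independently, a single leaf can be assigned more than one disease, which matches the multi-label setting described in the abstract.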
