Dimitris Perdios

Improving Lipschitz-Constrained Neural Networks by Learning Activation Functions

Oct 28, 2022

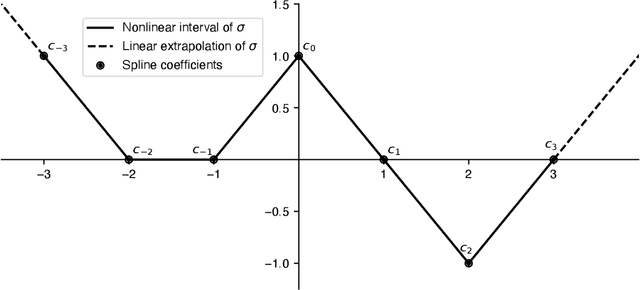

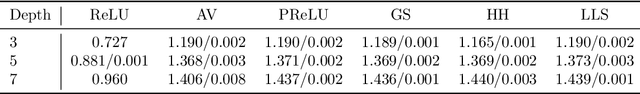

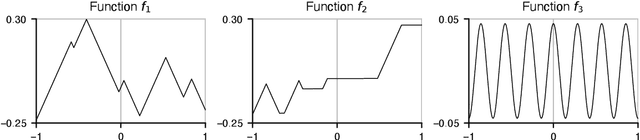

Abstract: Lipschitz-constrained neural networks have several advantages over unconstrained ones and can be applied to a variety of problems. Consequently, they have recently attracted considerable attention in the deep learning community. Unfortunately, it has been shown both theoretically and empirically that networks with ReLU activation functions perform poorly under such constraints. In contrast, neural networks with learnable 1-Lipschitz linear splines are known to be more expressive in theory. In this paper, we show that such networks are solutions of a functional optimization problem with second-order total-variation regularization. Further, we propose an efficient method to train such 1-Lipschitz deep spline neural networks. Our numerical experiments for a variety of tasks show that our trained networks match or outperform networks with activation functions specifically tailored towards Lipschitz-constrained architectures.
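To make the notion of a 1-Lipschitz linear spline concrete, the following is a minimal sketch and not the training method proposed in the paper. It assumes a spline parameterized by its values at fixed knots, and it enforces the constraint by clipping each segment slope to [-1, 1]. The function name `make_lipschitz_spline` and the clipping strategy are illustrative assumptions.

```python
import numpy as np

def make_lipschitz_spline(knots, values):
    """Build a piecewise-linear function whose slopes all lie in [-1, 1].

    Hypothetical helper for illustration only: the slope clipping used here
    is one simple way to obtain a 1-Lipschitz spline, not the paper's method.
    """
    knots = np.asarray(knots, dtype=float)
    values = np.asarray(values, dtype=float)
    steps = np.diff(knots)
    # Slope of each linear piece between consecutive knots.
    slopes = np.diff(values) / steps
    # Clipping every slope to [-1, 1] makes each piece 1-Lipschitz.
    slopes = np.clip(slopes, -1.0, 1.0)
    # Rebuild the knot values from the first value and the clipped slopes.
    new_values = np.concatenate(([values[0]], values[0] + np.cumsum(slopes * steps)))

    def spline(x):
        # np.interp extends the function as a constant outside the knot range,
        # which has slope 0 and therefore keeps the 1-Lipschitz property.
        return np.interp(np.asarray(x, dtype=float), knots, new_values)

    return spline

# Example: slopes 3, -3, 0.5, 3.5 are clipped to 1, -1, 0.5, 1.
activation = make_lipschitz_spline([-2, -1, 0, 1, 2], [0, 3, 0, 0.5, 4])
```

A continuous piecewise-linear function is 1-Lipschitz exactly when every slope has magnitude at most 1, which is why clipping the slopes is sufficient in this sketch.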

CNN-Based Ultrasound Image Reconstruction for Ultrafast Displacement Tracking

Sep 03, 2020

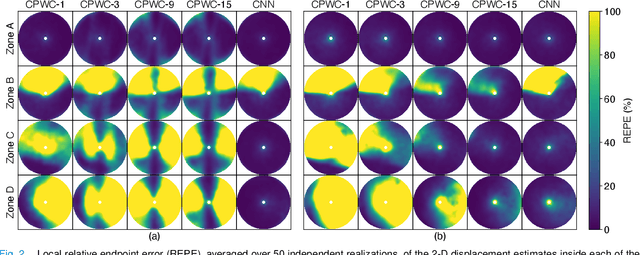

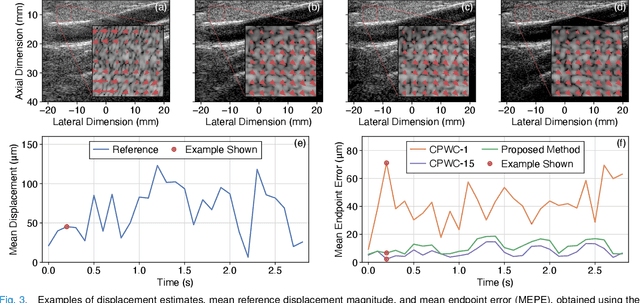

Abstract: Thanks to its capability of acquiring full-view frames at multiple kilohertz, ultrafast ultrasound imaging unlocked the analysis of rapidly changing physical phenomena in the human body, with pioneering applications such as ultrasensitive flow imaging in the cardiovascular system or shear-wave elastography. The accuracy achievable with these motion estimation techniques is strongly contingent upon two contradictory requirements: a high quality of consecutive frames and a high frame rate. Indeed, the image quality can usually be improved by increasing the number of steered ultrafast acquisitions, but at the expense of a reduced frame rate and possible motion artifacts. To achieve accurate motion estimation at uncompromised frame rates and immune to motion artifacts, the proposed approach relies on single ultrafast acquisitions to reconstruct high-quality frames and on only two consecutive frames to obtain 2-D displacement estimates. To this end, we deployed a convolutional neural network-based image reconstruction method combined with a speckle tracking algorithm based on cross-correlation. Numerical and in vivo experiments, conducted in the context of plane-wave imaging, demonstrate that the proposed approach is capable of estimating displacements in regions where the presence of side lobe and grating lobe artifacts prevents any displacement estimation with a state-of-the-art technique that relies on conventional delay-and-sum beamforming. The proposed approach may therefore unlock the full potential of ultrafast ultrasound, in applications such as ultrasensitive cardiovascular motion and flow analysis or shear-wave elastography.
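The idea of estimating a 2-D displacement from two consecutive frames by cross-correlation can be sketched as follows. This is a simplified, whole-frame, integer-pixel illustration that assumes circular shifts. The speckle tracking algorithm described in the abstract is likely windowed and more refined, and the function name `estimate_shift` is hypothetical.

```python
import numpy as np

def estimate_shift(frame_a, frame_b):
    """Estimate the integer (row, col) displacement of frame_b relative to frame_a.

    Illustrative sketch: uses a single circular cross-correlation over the
    whole frame, computed with FFTs, and returns the location of its peak.
    """
    a = frame_a - frame_a.mean()
    b = frame_b - frame_b.mean()
    # Circular cross-correlation via the correlation theorem.
    xcorr = np.fft.ifft2(np.fft.fft2(b) * np.conj(np.fft.fft2(a))).real
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    # Indices past the midpoint correspond to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, xcorr.shape))

# Example: a random speckle-like frame shifted by 3 rows and -2 columns.
rng = np.random.default_rng(0)
frame_a = rng.standard_normal((32, 32))
frame_b = np.roll(frame_a, shift=(3, -2), axis=(0, 1))
shift = estimate_shift(frame_a, frame_b)  # (3, -2)
```

In practice, displacement fields are usually obtained by applying this kind of correlation to small local windows and refining the peak to sub-pixel precision.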

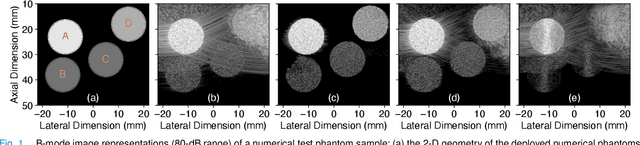

CNN-Based Image Reconstruction Method for Ultrafast Ultrasound Imaging

Aug 28, 2020

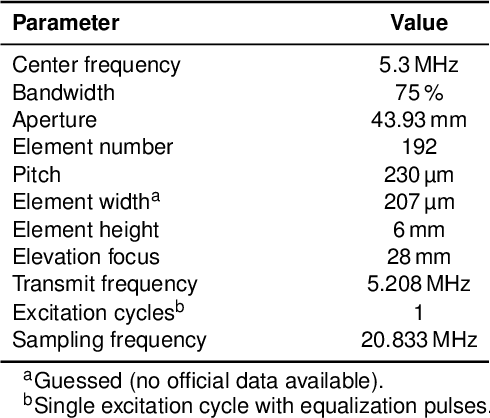

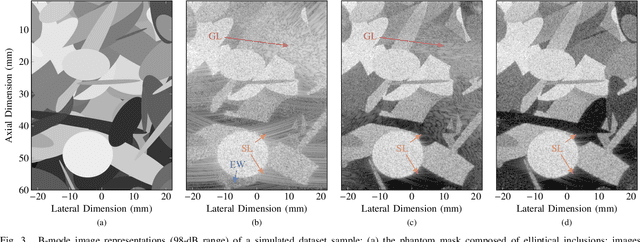

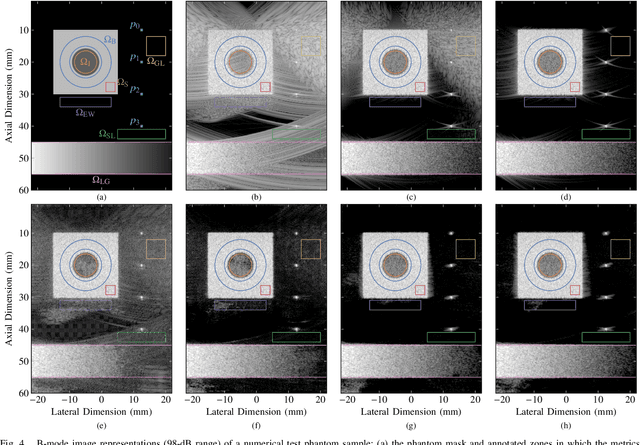

Abstract: Ultrafast ultrasound (US) revolutionized biomedical imaging with its capability of acquiring full-view frames at over 1 kHz, unlocking breakthrough modalities such as shear-wave elastography and functional US neuroimaging. Yet, it suffers from strong diffraction artifacts, mainly caused by grating lobes, side lobes, or edge waves. Multiple acquisitions are typically required to obtain sufficient image quality, at the cost of a reduced frame rate. To answer the increasing demand for high-quality imaging from single-shot acquisitions, we propose a two-step convolutional neural network (CNN)-based image reconstruction method, compatible with real-time imaging. A low-quality estimate is obtained by means of a backprojection-based operation, akin to conventional delay-and-sum beamforming, from which a high-quality image is restored using a residual CNN with multi-scale and multi-channel filtering properties, trained specifically to remove the diffraction artifacts inherent to ultrafast US imaging. To account for both the high dynamic range and the radio frequency property of US images, we introduce the mean signed logarithmic absolute error (MSLAE) as the training loss function. Experiments were conducted with a linear transducer array, in single plane wave (PW) imaging. Training was performed on a simulated dataset, crafted to contain a wide diversity of structures and echogenicities. Extensive numerical evaluations demonstrate that the proposed approach can reconstruct images from single PWs with a quality similar to that of gold-standard synthetic aperture imaging, on a dynamic range in excess of 60 dB. In vitro and in vivo experiments show that training performed on simulated data translates well to experimental settings.
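For readers unfamiliar with delay-and-sum beamforming, the conventional operation that the first reconstruction step is compared to, here is a minimal sketch for a single zero-angle plane wave. It is not the paper's backprojection operator. The function name `das_plane_wave`, the nearest-sample lookup, the absence of apodization, and the default sound speed of 1540 m/s are all simplifying assumptions.

```python
import numpy as np

def das_plane_wave(rf, elem_x, xs, zs, fs, c=1540.0):
    """Delay-and-sum image from one zero-angle plane-wave acquisition.

    rf:     array (n_elements, n_samples) of received signals
    elem_x: lateral positions of the array elements [m]
    xs, zs: lateral and depth coordinates of the image grid [m]
    fs:     sampling frequency [Hz]; c: assumed speed of sound [m/s]
    """
    n_elem, n_samp = rf.shape
    X, Z = np.meshgrid(xs, zs, indexing="ij")
    image = np.zeros(X.shape)
    for e in range(n_elem):
        # Transmit delay (plane wave reaches depth z) plus receive delay
        # (echo travels from the pixel back to element e).
        tau = (Z + np.sqrt((X - elem_x[e]) ** 2 + Z ** 2)) / c
        idx = np.rint(tau * fs).astype(int)  # nearest-sample lookup
        valid = idx < n_samp
        image[valid] += rf[e, idx[valid]]
    return image

# Example: echoes from one ideal point scatterer focus back onto its position.
fs = 20e6
elem_x = np.linspace(-5e-3, 5e-3, 32)
xs = np.linspace(-4e-3, 4e-3, 17)
zs = np.linspace(5e-3, 15e-3, 21)
x0, z0 = xs[10], zs[10]
rf = np.zeros((elem_x.size, 1024))
t0 = (z0 + np.sqrt((x0 - elem_x) ** 2 + z0 ** 2)) / 1540.0
rf[np.arange(elem_x.size), np.rint(t0 * fs).astype(int)] = 1.0
image = das_plane_wave(rf, elem_x, xs, zs, fs)
```

The brightest pixel of `image` lies at the scatterer position, while the weaker values elsewhere are the kind of diffraction artifacts that the second, CNN-based step is trained to remove.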
