Digby Chappell

The Hydra Hand: A Mode-Switching Underactuated Gripper with Precision and Power Grasping Modes

Sep 26, 2023

Abstract:Human hands are able to grasp a wide range of object sizes, shapes, and weights, achieved via reshaping and altering their apparent grasping stiffness between compliant power and rigid precision. Achieving similar versatility in robotic hands remains a challenge, which has often been addressed by adding extra controllable degrees of freedom, tactile sensors, or specialised extra grasping hardware, at the cost of control complexity and robustness. We introduce a novel reconfigurable four-fingered two-actuator underactuated gripper -- the Hydra Hand -- that switches between compliant power and rigid precision grasps using a single motor, while generating grasps via a single hydraulic actuator -- exhibiting adaptive grasping between finger pairs, enabling the power grasping of two objects simultaneously. The mode switching mechanism and the hand's kinematics are presented and analysed, and performance is tested on two grasping benchmarks: one focused on rigid objects, and the other on items of clothing. The Hydra Hand is shown to excel at grasping large and irregular objects with its compliant power configuration, and small objects with its rigid precision configuration. The hand's versatility is then showcased by executing the challenging manipulation task of safely grasping and placing a bunch of grapes, and then plucking a single grape from the bunch.

Learning to Grasp Clothing Structural Regions for Garment Manipulation Tasks

Jun 26, 2023

Abstract:When performing cloth-related tasks, such as garment hanging, it is often important to identify and grasp certain structural regions -- a shirt's collar as opposed to its sleeve, for instance. However, due to cloth deformability, these manipulation activities, which are essential in domestic, health care, and industrial contexts, remain challenging for robots. In this paper, we focus on how to segment and grasp structural regions of clothes to enable manipulation tasks, using hanging tasks as a case study. To this end, a neural network-based perception system is proposed to segment a shirt's collar from areas that represent the rest of the scene in a depth image. With a 10-minute video of a human manipulating shirts to train it, our perception system is capable of generalizing to other shirts regardless of texture as well as to other types of collared garments. A novel grasping strategy is then proposed based on the segmentation to determine the grasping pose. Experiments demonstrate that our proposed grasping strategy achieves 92%, 80%, and 50% grasping success rates with one folded garment, one crumpled garment and three crumpled garments, respectively. Our grasping strategy performs considerably better than tested baselines that do not take into account the structural nature of the garments. With the proposed region segmentation and grasping strategy, challenging garment hanging tasks are successfully implemented using an open-loop control policy. Supplementary material is available at https://sites.google.com/view/garment-hanging

When and Where to Step: Terrain-Aware Real-Time Footstep Location and Timing Optimization for Bipedal Robots

Feb 14, 2023

Abstract:Online footstep planning is essential for bipedal walking robots, allowing them to walk in the presence of disturbances and sensory noise. Most of the literature on the topic has focused on optimizing the footstep placement while keeping the step timing constant. In this work, we introduce a footstep planner capable of optimizing footstep placement and step time online. The proposed planner, consisting of an Interior Point Optimizer (IPOPT) and an optimizer based on the Augmented Lagrangian (AL) method with analytical gradient descent, solves the full dynamics of the Linear Inverted Pendulum (LIP) model in real time to optimize for footstep location as well as step timing at a rate of 200 Hz. We show that such asynchronous real-time optimization with the AL method (ARTO-AL) provides the required robustness and speed for successful online footstep planning. Furthermore, ARTO-AL can be extended to plan footsteps in 3D, allowing terrain-aware footstep planning on uneven terrains. Compared to an algorithm with no footstep time adaptation, our proposed ARTO-AL demonstrates increased stability in simulated walking experiments as it can resist pushes on flat ground and on a $10^{\circ}$ ramp up to 120 N and 100 N respectively. For the video, see https://youtu.be/ABdnvPqCUu4. For code, see https://github.com/WangKeAlchemist/ARTO-AL/tree/master.
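
For readers unfamiliar with the LIP model mentioned in the abstract, its textbook one-dimensional form is sketched below. The symbols are assumptions chosen for illustration and are not taken from the paper: $x$ is the horizontal centre-of-mass position, $p$ the stance-foot position, $z_0$ the constant centre-of-mass height, $g$ gravitational acceleration, and $T$ the step duration.

$$
\ddot{x}(t) = \omega^2 \bigl(x(t) - p\bigr), \qquad \omega = \sqrt{g / z_0}
$$

$$
x(T) = p + (x_0 - p)\cosh(\omega T) + \frac{\dot{x}_0}{\omega}\sinh(\omega T), \qquad
\dot{x}(T) = \omega\,(x_0 - p)\sinh(\omega T) + \dot{x}_0\cosh(\omega T)
$$

In this form the end-of-step state is linear in the foot position $p$ but depends on the step time $T$ through $\cosh$ and $\sinh$, which is one way to see why treating $T$ as a decision variable makes the problem nonlinear.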

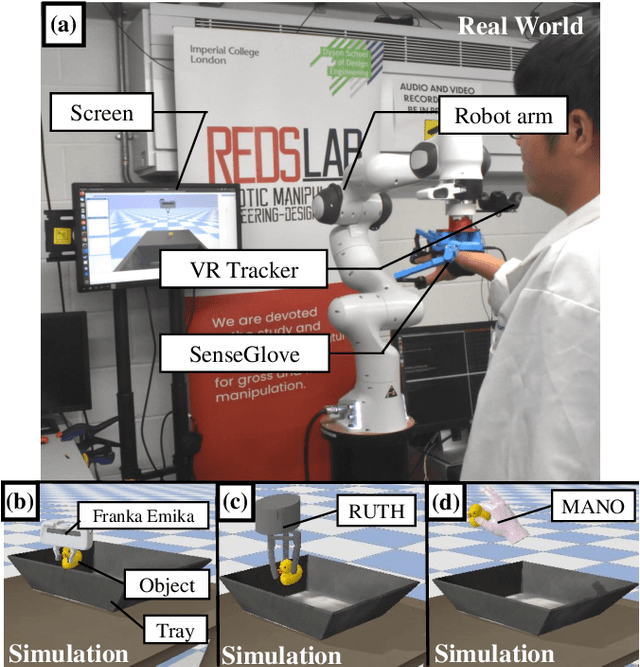

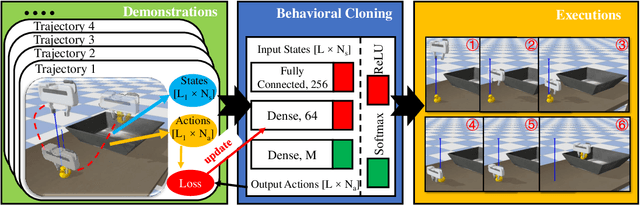

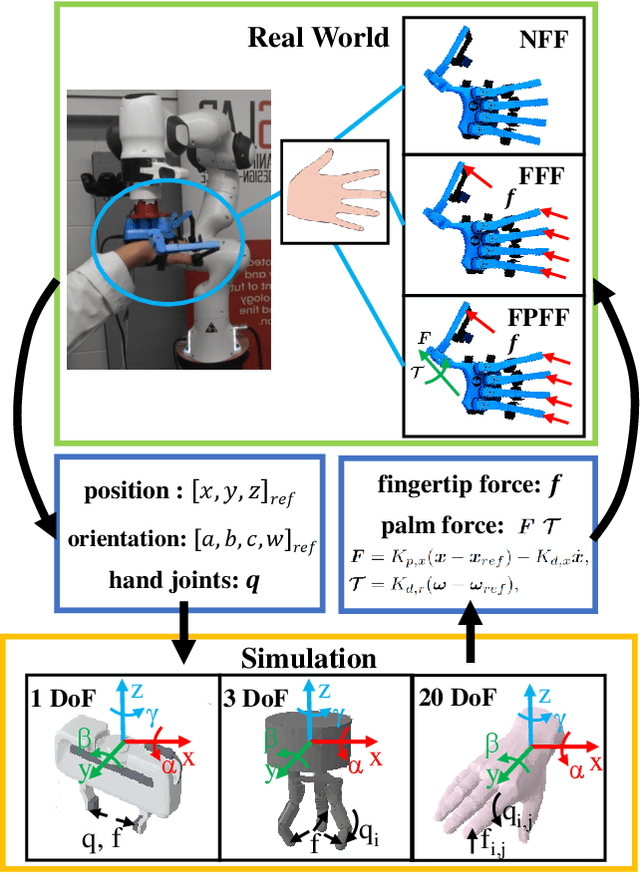

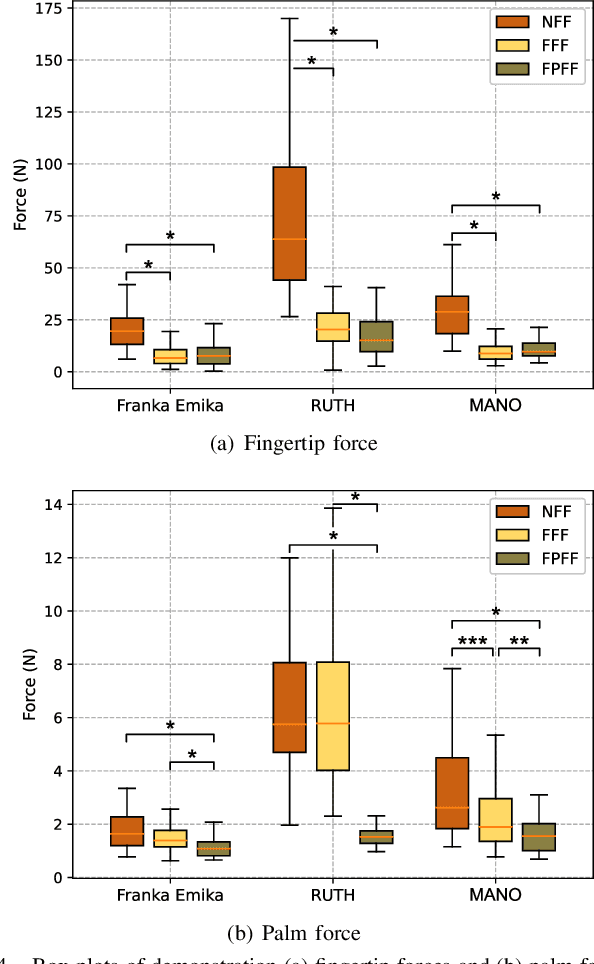

Immersive Demonstrations are the Key to Imitation Learning

Jan 22, 2023

Abstract:Achieving successful robotic manipulation is an essential step towards robots being widely used in industry and home settings. Recently, many learning-based methods have been proposed to tackle this challenge, with imitation learning showing great promise. However, imperfect demonstrations and a lack of feedback from teleoperation systems may lead to poor or even unsafe results. In this work we explore the effect of demonstrator force feedback on imitation learning, using a feedback glove and a robot arm to render fingertip-level and palm-level forces, respectively. 10 participants recorded 5 demonstrations of a pick-and-place task with 3 grippers, under conditions with no force feedback, fingertip force feedback, and fingertip and palm force feedback. Results show that force feedback significantly reduces demonstrator fingertip and palm forces, leads to lower variation in demonstrator forces, and yields recorded trajectories that are quicker to execute. Using behavioral cloning, we find that agents trained to imitate these trajectories mirror these benefits, even though agents have no force data shown to them during training. We conclude that immersive demonstrations, achieved with force feedback, may be the key to unlocking safer, quicker-to-execute dexterous manipulation policies.

Asynchronous Real-Time Optimization of Footstep Placement and Timing in Bipedal Walking Robots

Jul 02, 2020

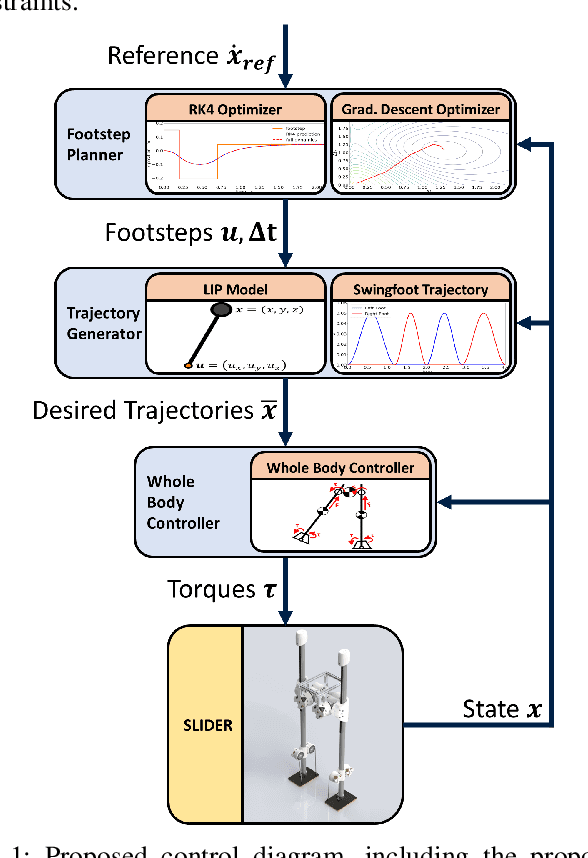

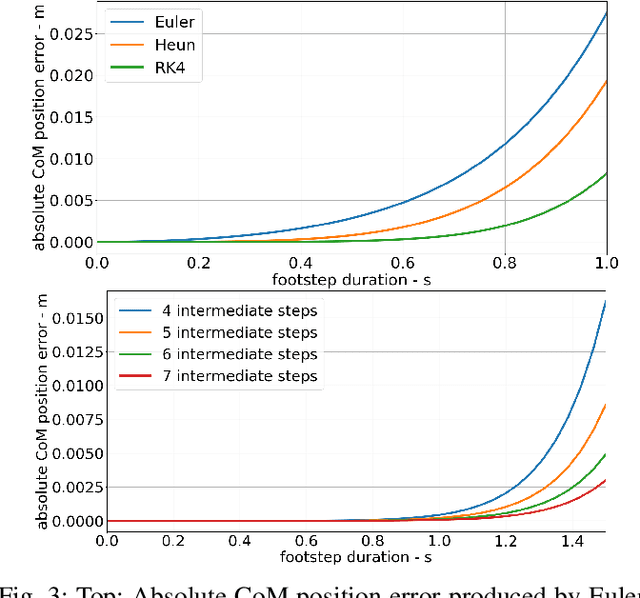

Abstract:Online footstep planning is essential for bipedal walking robots to be able to walk in the presence of disturbances. Until recently this has been achieved by only optimizing the placement of the footstep, keeping the duration of the step constant. In this paper we introduce a footstep planner capable of optimizing footstep placement and timing in real-time by asynchronously combining two optimizers, which we refer to as asynchronous real-time optimization (ARTO). The first optimizer, which runs at approximately 25 Hz, utilizes a fourth-order Runge-Kutta (RK4) method to accurately approximate the dynamics of the linear inverted pendulum (LIP) model for bipedal walking, then uses non-linear optimization to find optimal footsteps and duration at a lower frequency. The second optimizer, which runs at approximately 250 Hz, uses analytical gradients derived from the full dynamics of the LIP model and constraint penalty terms to perform gradient descent, which finds approximately optimal footstep placement and timing at a higher frequency. By combining the two optimizers asynchronously, ARTO has the benefits of fast reactions to disturbances from the gradient descent optimizer, accurate solutions that avoid local optima from the RK4 optimizer, and an increased probability that a feasible solution will be found by the two optimizers. Experimentally, we show that ARTO is able to recover from considerably larger pushes and produces feasible solutions to larger reference velocity changes than a standard footstep location optimizer, and outperforms the RK4 optimizer used alone.
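
The abstract contrasts an RK4 approximation of the LIP dynamics with the model's full (closed-form) dynamics. The following is a minimal sketch of that contrast for a one-dimensional LIP, not the authors' implementation. The function names, the fixed stance-foot position `p`, and the parameter values in the usage note are all assumptions made for illustration.

```python
import math


def lip_closed_form(x0, v0, p, omega, t):
    """Exact 1D LIP state after time t, with the stance foot fixed at p.

    Assumed model: x'' = omega**2 * (x - p), omega = sqrt(g / z0).
    """
    c, s = math.cosh(omega * t), math.sinh(omega * t)
    x = p + (x0 - p) * c + (v0 / omega) * s
    v = omega * (x0 - p) * s + v0 * c
    return x, v


def lip_rk4(x0, v0, p, omega, t, steps=20):
    """Approximate the same LIP state with classical fourth-order Runge-Kutta."""
    h = t / steps

    def deriv(x, v):
        # State derivative: (velocity, acceleration).
        return v, omega ** 2 * (x - p)

    x, v = x0, v0
    for _ in range(steps):
        k1x, k1v = deriv(x, v)
        k2x, k2v = deriv(x + 0.5 * h * k1x, v + 0.5 * h * k1v)
        k3x, k3v = deriv(x + 0.5 * h * k2x, v + 0.5 * h * k2v)
        k4x, k4v = deriv(x + h * k3x, v + h * k3v)
        x += h / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
        v += h / 6.0 * (k1v + 2.0 * k2v + 2.0 * k3v + k4v)
    return x, v
```

As a usage example, with hypothetical values `omega = math.sqrt(9.81 / 1.0)`, `x0 = 0.0`, `v0 = 0.5`, `p = 0.05`, and `t = 0.4`, the two functions can be called with the same arguments and their outputs compared; with 20 RK4 steps the results should agree closely. The closed form also makes the end state differentiable in `p` and `t` directly, which is the kind of property an analytical-gradient optimizer can exploit.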
