Dian Fan

Over-the-Air Federated Multi-Task Learning via Model Sparsification and Turbo Compressed Sensing

May 08, 2022

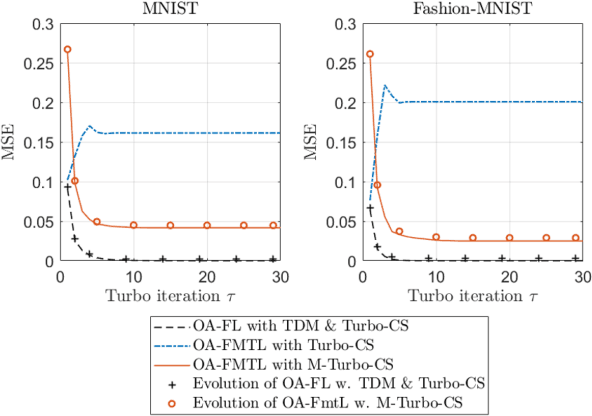

Abstract: To achieve communication-efficient federated multi-task learning (FMTL), we propose an over-the-air FMTL (OA-FMTL) framework, where multiple learning tasks deployed on edge devices share a non-orthogonal fading channel under the coordination of an edge server (ES). In OA-FMTL, the local updates of edge devices are sparsified, compressed, and then sent over the uplink channel in a superimposed fashion. The ES employs over-the-air computation in the presence of inter-task interference. More specifically, the model aggregations of all the tasks are reconstructed from the channel observations concurrently, based on a modified version of the turbo compressed sensing (Turbo-CS) algorithm (termed M-Turbo-CS). We analyze the performance of the proposed OA-FMTL framework together with the M-Turbo-CS algorithm. Furthermore, based on the analysis, we formulate a communication-learning optimization problem to improve the system performance by adjusting the power allocation among the tasks at the edge devices. Numerical simulations show that the proposed OA-FMTL effectively suppresses the inter-task interference and achieves a learning performance comparable to its counterpart with orthogonal multi-task transmission. It is also shown that the proposed inter-task power allocation optimization algorithm substantially reduces the overall communication overhead by appropriately adjusting the power allocation among the tasks.
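The sparsify, compress, and superimpose steps described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single task, an ideal channel with no fading, top-k sparsification, and a Gaussian measurement matrix shared by all devices, and it omits M-Turbo-CS recovery and power allocation entirely. All function names and parameter values are hypothetical.

```python
import random

def top_k_sparsify(update, k):
    """Keep the k largest-magnitude entries of `update`; zero the rest."""
    order = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(update)]

def compress(matrix, vector):
    """Project a length-d vector onto m measurements: y = A x."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def over_the_air_sum(signals, noise_std, rng):
    """Superimpose all transmitted signals and add Gaussian receiver noise."""
    m = len(signals[0])
    return [sum(s[j] for s in signals) + rng.gauss(0.0, noise_std) for j in range(m)]

rng = random.Random(0)
d, m, k, num_devices = 8, 4, 2, 3  # toy sizes, chosen only for illustration

# Assumed shared measurement matrix with i.i.d. Gaussian entries.
A = [[rng.gauss(0.0, 1.0 / m ** 0.5) for _ in range(d)] for _ in range(m)]

# Stand-ins for the devices' local model updates.
updates = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(num_devices)]

sparse = [top_k_sparsify(u, k) for u in updates]
transmitted = [compress(A, s) for s in sparse]

# With zero noise the channel output equals A applied to the summed sparse updates.
received = over_the_air_sum(transmitted, noise_std=0.0, rng=rng)
```

Because compression is linear, the superimposed observation is a compressed version of the aggregate update, which is why a compressed sensing recovery step at the ES can target the aggregate directly rather than each device's update.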

Multi-task Over-the-Air Federated Learning: A Non-Orthogonal Transmission Approach

Jun 29, 2021

Abstract: In this letter, we propose a multi-task over-the-air federated learning (MOAFL) framework, where multiple learning tasks share edge devices for data collection and learning models under the coordination of an edge server (ES). Specifically, the model updates for all the tasks are transmitted and superimposed concurrently over a non-orthogonal uplink channel via over-the-air computation, and the aggregation results of all the tasks are reconstructed at the ES through an extended version of the turbo compressed sensing algorithm. Both the convergence analysis and numerical results demonstrate that the MOAFL framework can significantly reduce the uplink bandwidth consumption of multiple tasks without causing substantial learning performance degradation.

Temporal-Structure-Assisted Gradient Aggregation for Over-the-Air Federated Edge Learning

Mar 03, 2021

Abstract: In this paper, we investigate over-the-air model aggregation in a federated edge learning (FEEL) system. We introduce a Markovian probability model to characterize the intrinsic temporal structure of the model aggregation series. With this temporal probability model, we formulate the model aggregation problem as inferring, from a Bayesian perspective, the desired aggregated update given all the past observations. We develop a message-passing-based algorithm, termed temporal-structure-assisted gradient aggregation (TSA-GA), to fulfil this estimation task with low complexity and near-optimal performance. We further establish the state evolution (SE) analysis to characterize the behaviour of the proposed TSA-GA algorithm, and derive an explicit bound on the expected loss reduction of the FEEL system under certain standard regularity conditions. In addition, we develop an expectation maximization (EM) strategy to learn the unknown parameters in the Markovian model. We show that the proposed TSA-GA algorithm significantly outperforms the state of the art, and achieves learning performance comparable to that of the error-free benchmark in terms of both convergence rate and final test accuracy.
