Denny Yu

Reinforced Sequential Decision-Making for Sepsis Treatment: The POSNEGDM Framework with Mortality Classifier and Transformer

Mar 12, 2024

Abstract: Sepsis, a life-threatening condition triggered by the body's exaggerated response to infection, demands urgent intervention to prevent severe complications. Existing machine learning methods for managing sepsis struggle in offline scenarios, exhibiting suboptimal performance with survival rates below 50%. This paper introduces the POSNEGDM (Reinforcement Learning with Positive and Negative Demonstrations for Sequential Decision-Making) framework, which uses an innovative transformer-based model and a feedback reinforcer to replicate expert actions while considering individual patient characteristics. A mortality classifier with 96.7% accuracy guides treatment decisions toward positive outcomes. The POSNEGDM framework significantly improves patient survival, saving 97.39% of patients and outperforming established machine learning algorithms, Decision Transformer and Behavioral Cloning, whose survival rates are 33.4% and 43.5%, respectively. Additionally, ablation studies underscore the critical role of the transformer-based decision maker and of integrating the mortality classifier in improving overall survival rates. In summary, our approach offers a promising avenue for improving sepsis treatment outcomes, contributing to better patient care and reduced healthcare costs.
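The abstract says the mortality classifier "guides treatment decisions" but does not say how. One plausible reading, sketched below purely as an assumption, is classifier-guided re-ranking: the transformer policy scores candidate actions, and the classifier picks the candidate with the lowest predicted mortality from the policy's top-k shortlist. The names `select_action`, `mortality_prob`, `top_k`, and the toy logistic classifier are all hypothetical and are not taken from the POSNEGDM paper.

```python
import math
from typing import Callable, Sequence

State = Sequence[float]


def logistic(z: float) -> float:
    """Standard logistic function, used by the toy classifier below."""
    return 1.0 / (1.0 + math.exp(-z))


def select_action(
    state: State,
    candidates: Sequence[int],
    policy_scores: Sequence[float],
    mortality_prob: Callable[[State, int], float],
    top_k: int = 3,
) -> int:
    """Hypothetical classifier-guided selection (not the paper's method).

    Keep the policy's top_k highest-scoring actions, so the choice stays close
    to imitated expert behaviour, then return the one whose predicted
    mortality probability is lowest.
    """
    ranked = sorted(zip(candidates, policy_scores), key=lambda cs: cs[1], reverse=True)
    shortlist = [action for action, _ in ranked[:top_k]]
    return min(shortlist, key=lambda action: mortality_prob(state, action))


def toy_mortality(state: State, action: int) -> float:
    """Toy stand-in classifier: larger action indices lower predicted risk."""
    return logistic(state[0] - 0.5 * action)


if __name__ == "__main__":
    scores = [0.1, 0.5, 0.3, 0.05, 0.05]  # hypothetical policy scores for actions 0..4
    print(select_action([2.0], [0, 1, 2, 3, 4], scores, toy_mortality))  # 2
```

With `top_k=1` the function reduces to pure imitation (the policy's argmax). Larger `top_k` gives the classifier more room to steer away from the policy's preferred action.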

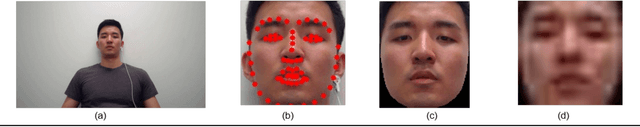

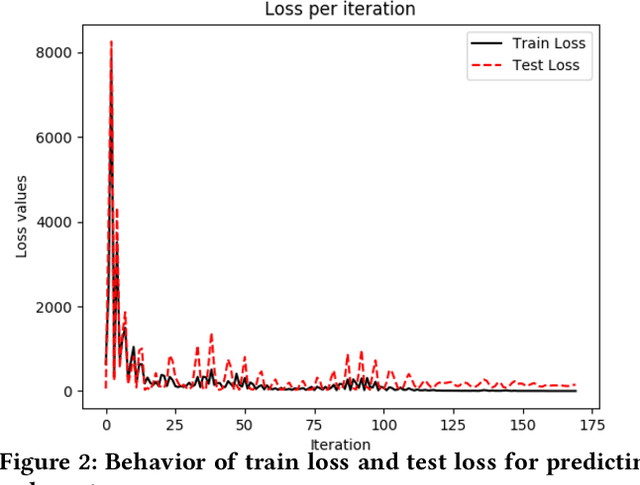

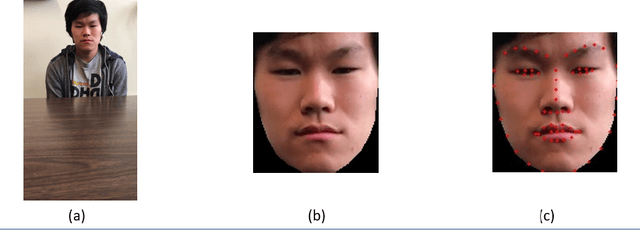

A Supervised Learning Approach for Robust Health Monitoring using Face Videos

Jan 30, 2021

Abstract: Monitoring cardiovascular activity is highly desirable and can enable novel applications in diagnosing potential cardiovascular diseases and maintaining an individual's well-being. Currently, such vital signs are measured using intrusive contact devices such as electrocardiograms (ECG), chest straps, and pulse oximeters, which the patient or the health provider must apply manually. Non-contact, device-free human sensing methods can eliminate the need for specialized heart and blood pressure monitoring equipment. Non-contact methods have additional advantages: they scale to any environment where video can be captured, they support continuous measurement, and they can be used on patients with varying levels of dexterity and independence, from people with physical impairments to infants (e.g., via a baby camera). In this paper, we use a non-contact method that requires only face videos recorded with commercially available webcams. These videos are used to predict health attributes such as pulse rate and variance in pulse rate. The proposed approach uses facial landmarks to detect the face in each frame of the video, followed by supervised learning with deep neural networks to train the machine learning model. The videos capture subjects performing different physical activities that produce varying cardiovascular responses. The proposed method does not require training data from every individual, so predictions can be obtained for new individuals with no prior data, which is critical for generalization. The approach was also evaluated on a dataset of people of different ethnicities. The proposed approach had less than 4.6% error in predicting the pulse rate.

* The main part of the paper appeared in DFHS'20: Proceedings of the 2nd ACM Workshop on Device-Free Human Sensing; the supplementary material did not appear in the proceedings.
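The paper predicts pulse rate with a supervised deep neural network. For orientation only, the sketch below shows a classical, swapped-in baseline instead: the pulse rate is read off as the dominant frequency of a PPG-like trace within a plausible heart-rate band. It assumes such a trace has already been extracted from the face video (for example, by averaging pixel intensity over the detected face region, which is an assumption here). It also assumes the reported "error" is the relative absolute error in percent. Both function names are hypothetical.

```python
import numpy as np


def pulse_rate_bpm(signal, fs, band=(0.7, 4.0)):
    """Classical FFT baseline (not the paper's DNN): dominant frequency in bpm.

    signal: 1-D PPG-like trace sampled at fs Hz.
    band:   frequency range in Hz searched for the pulse (about 42-240 bpm).
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the DC offset so it does not dominate the spectrum
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[mask][np.argmax(spectrum[mask])]


def percent_error(predicted, actual):
    """Relative absolute error in percent (assumed error definition)."""
    return 100.0 * abs(predicted - actual) / actual


if __name__ == "__main__":
    fs = 30.0                              # typical webcam frame rate
    t = np.arange(600) / fs                # 20 s of frames
    trace = np.sin(2 * np.pi * 1.2 * t) + 0.5  # synthetic 72 bpm pulse
    print(pulse_rate_bpm(trace, fs))       # approximately 72.0
```

The frequency resolution is `fs / N` Hz (0.05 Hz, or 3 bpm, for 20 s at 30 fps). This is one reason learned models or longer windows are attractive when low error is the goal.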

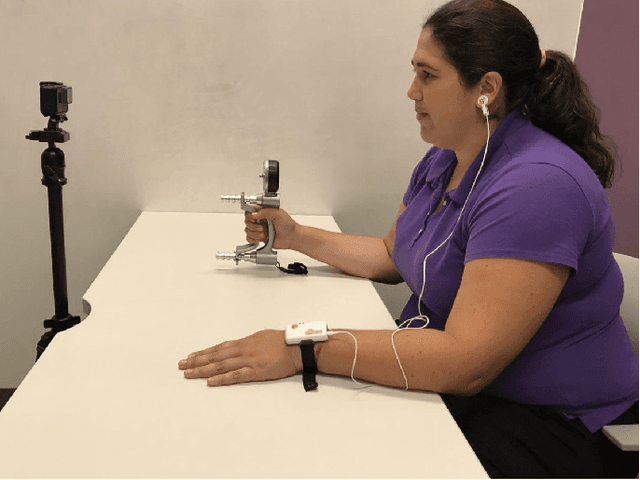

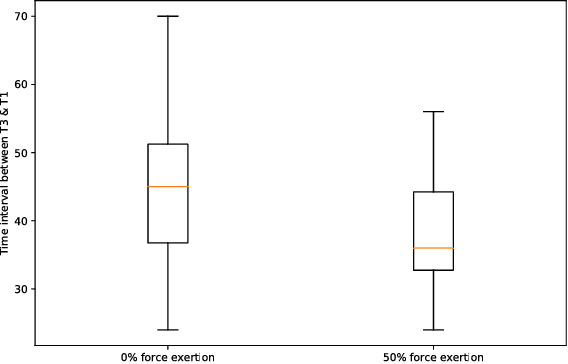

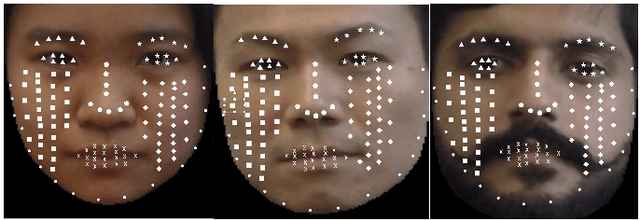

Covfefe: A Computer Vision Approach For Estimating Force Exertion

Sep 25, 2018

Abstract: Cumulative exposure to repetitive and forceful activities may lead to musculoskeletal injuries, which not only reduce workers' efficiency and productivity but also affect their quality of life. Widely accessible techniques for reliably detecting unsafe levels of muscle force exertion during human activity are therefore necessary for workers' well-being. However, measuring force exertion levels is challenging: existing techniques are intrusive, interfere with the human-machine interface, and/or are subjective in nature, and thus do not scale to all workers. In this work, we use face videos and photoplethysmography (PPG) signals to classify force exertion levels of 0%, 50%, and 100% (representing rest, moderate effort, and high effort), providing a non-intrusive and scalable approach. We investigate efficient feature-extraction approaches, including the standard deviation of the movement of different facial landmarks and the distances between peaks and troughs in the PPG signals. Because PPG signals can be obtained from the face videos themselves, this yields an efficient algorithm for classifying force exertion levels from face videos alone. Based on data collected from 20 subjects, features extracted from the face videos give 90% accuracy in distinguishing the 100% level from the combined 0% and 50% levels. Further combining the PPG signals provides 81.7% accuracy. The approach is also shown to be robust, correctly identifying the force level when the person is talking, even though such data are not included in training.
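The two feature families named in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline. Landmarks are assumed to arrive as an array of shape `(n_frames, n_landmarks, 2)` from any face-landmark detector. "Movement" is interpreted as the frame-to-frame displacement magnitude of each landmark. "Distance between peaks and troughs" is interpreted as the time from each PPG peak to the next trough. All function names are hypothetical, and the downstream classifier is not shown.

```python
import numpy as np


def landmark_motion_std(landmarks: np.ndarray) -> np.ndarray:
    """Per-landmark std of frame-to-frame movement (assumed interpretation).

    landmarks: shape (n_frames, n_landmarks, 2), (x, y) pixel coordinates.
    Returns an array of shape (n_landmarks,).
    """
    # Euclidean displacement of every landmark between consecutive frames
    step = np.linalg.norm(np.diff(landmarks, axis=0), axis=2)
    return step.std(axis=0)


def local_extrema(signal):
    """Indices of strict local maxima (peaks) and minima (troughs)."""
    s = np.asarray(signal, dtype=float)
    mid = s[1:-1]
    peaks = np.where((mid > s[:-2]) & (mid > s[2:]))[0] + 1
    troughs = np.where((mid < s[:-2]) & (mid < s[2:]))[0] + 1
    return peaks, troughs


def peak_trough_intervals(signal, fs: float) -> np.ndarray:
    """Time in seconds from each PPG peak to the next trough (assumed feature)."""
    peaks, troughs = local_extrema(signal)
    intervals = []
    for p in peaks:
        later = troughs[troughs > p]
        if later.size:
            intervals.append((later[0] - p) / fs)
    return np.array(intervals)


def feature_vector(landmarks: np.ndarray, ppg, fs: float) -> np.ndarray:
    """Concatenate landmark-motion features with PPG interval statistics."""
    motion = landmark_motion_std(landmarks)
    iv = peak_trough_intervals(ppg, fs)
    # Summarise the PPG intervals by their mean and standard deviation
    ppg_feats = np.array([iv.mean(), iv.std()]) if iv.size else np.zeros(2)
    return np.concatenate([motion, ppg_feats])
```

For a 68-point landmark model this yields a 70-dimensional vector per clip, which any standard classifier could then map to the 0%, 50%, and 100% exertion levels. Real PPG traces are noisy, so a practical version would smooth the signal before locating extrema.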