Denisa Roberts

Smart Vision-Language Reasoners

Jul 05, 2024

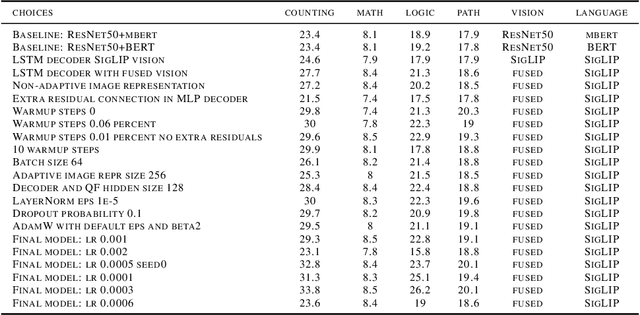

Abstract: In this article, we investigate vision-language models (VLMs) as reasoners. The ability to form abstractions underlies mathematical reasoning, problem-solving, and other Math AI tasks. Several formalisms have been given to these underlying abstractions and skills utilized by humans and intelligent systems for reasoning. Furthermore, human reasoning is inherently multimodal, and as such, we focus our investigations on multimodal AI. In this article, we employ the abstractions given in the SMART task (Simple Multimodal Algorithmic Reasoning Task) introduced in \cite{cherian2022deep} as meta-reasoning and problem-solving skills along eight axes: math, counting, path, measure, logic, spatial, and pattern. We investigate the ability of vision-language models to reason along these axes and seek avenues of improvement. Including composite representations with vision-language cross-attention enabled learning multimodal representations adaptively from fused frozen pretrained backbones for better visual grounding. Furthermore, proper hyperparameter and other training choices led to strong improvements (up to $48\%$ gain in accuracy) on the SMART task, further underscoring the power of deep multimodal learning. The smartest VLM, which includes a novel QF multimodal layer, improves upon the best previous baselines in every one of the eight fundamental reasoning skills. End-to-end code is available at https://github.com/smarter-vlm/smarter.

Efficient Large-Scale Vision Representation Learning

May 24, 2023

Abstract: In this article, we present our approach to single-modality vision representation learning. Understanding vision representations of product content is vital for recommendations, search, and advertising applications in e-commerce. We detail and contrast techniques used to fine-tune large-scale vision representation learning models efficiently under low-resource settings, including several pretrained backbone architectures in both the convolutional neural network and vision transformer families. We highlight the challenges for e-commerce applications at scale and describe the efforts to train, evaluate, and serve visual representations more efficiently. We present ablation studies for several downstream tasks, including our visually similar ad recommendations. We evaluate the offline performance of the derived visual representations in downstream tasks. To this end, we present a novel text-to-image generative offline evaluation method for visually similar recommendation systems. Finally, we include online results from deployed machine learning systems in production at Etsy.

adSformers: Personalization from Short-Term Sequences and Diversity of Representations in Etsy Ads

Feb 02, 2023

Abstract: In this article, we present our approach to personalizing Etsy Ads through encoding and learning from short-term (one-hour) sequences of user actions and diverse representations. To this end, we introduce a three-component adSformer diversifiable personalization module (ADPM) and illustrate how we use this module to derive a short-term dynamic user representation and personalize the Click-Through Rate (CTR) and Post-Click Conversion Rate (PCCVR) models used in sponsored search (ad) ranking. The first component of the ADPM is a custom transformer encoder that learns the inherent structure from the sequence of actions. ADPM's second component enriches the signal through visual, multimodal, and textual pretrained representations. Lastly, the third ADPM component includes a "learned" on-the-fly average-pooled representation. The ADPM-personalized CTR and PCCVR models, henceforth referred to as adSformer CTR and adSformer PCCVR, outperform the CTR and PCCVR production baselines by $+6.65\%$ and $+12.70\%$, respectively, in offline Precision-Recall Area Under the Curve (PR AUC). At the time of this writing, following the online gains in A/B tests, such as $+5.34\%$ in return on ad spend, a seller success metric, we are ramping up the adSformers to $100\%$ traffic in Etsy Ads.

Lorenz Trajectories Prediction: Travel Through Time

Mar 18, 2019

Abstract: In this article, the Lorenz dynamical system is revisited, and the current state-of-the-art results for one-step-ahead forecasting of Lorenz trajectories are published. The article reflects on the evolution of neural networks with regard to prediction performance on this canonical task.
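For readers unfamiliar with the task, the following is a minimal sketch of how one-step-ahead input/target pairs for the Lorenz system could be generated. It assumes the classic parameter values ($\sigma = 10$, $\rho = 28$, $\beta = 8/3$), a fixed-step fourth-order Runge-Kutta integrator, and an arbitrary step size and initial state. None of these choices, nor the function names, are taken from the article.

```python
from typing import List, Tuple

State = Tuple[float, float, float]

# Classic Lorenz (1963) parameter values; assumed here, not taken from the article.
SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz_rhs(s: State) -> State:
    """Right-hand side of the Lorenz ODE: (dx/dt, dy/dt, dz/dt)."""
    x, y, z = s
    return (SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z)


def _shift(s: State, k: State, h: float) -> State:
    """Return s + h * k, component-wise."""
    return (s[0] + h * k[0], s[1] + h * k[1], s[2] + h * k[2])


def rk4_step(s: State, dt: float) -> State:
    """Advance the state by one fixed step of size dt with classical RK4."""
    k1 = lorenz_rhs(s)
    k2 = lorenz_rhs(_shift(s, k1, dt / 2))
    k3 = lorenz_rhs(_shift(s, k2, dt / 2))
    k4 = lorenz_rhs(_shift(s, k3, dt))
    return (
        s[0] + dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
        s[1] + dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]),
        s[2] + dt / 6.0 * (k1[2] + 2 * k2[2] + 2 * k3[2] + k4[2]),
    )


def simulate(s0: State, dt: float, n_steps: int) -> List[State]:
    """Return the trajectory [s0, s1, ..., s_n] with n = n_steps."""
    traj = [s0]
    for _ in range(n_steps):
        traj.append(rk4_step(traj[-1], dt))
    return traj


def one_step_pairs(traj: List[State]) -> List[Tuple[State, State]]:
    """Pair each state with its successor: (input at t, target at t + dt)."""
    return list(zip(traj[:-1], traj[1:]))
```

A forecasting model would then be fit to map the first element of each pair to the second, for example with `one_step_pairs(simulate((1.0, 1.0, 1.0), 0.01, 1000))`.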

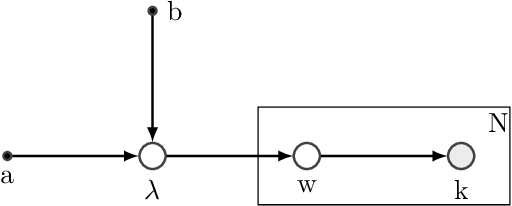

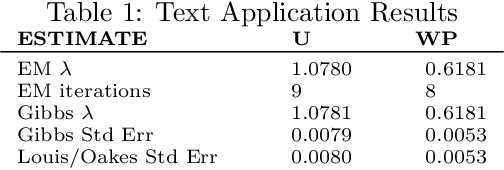

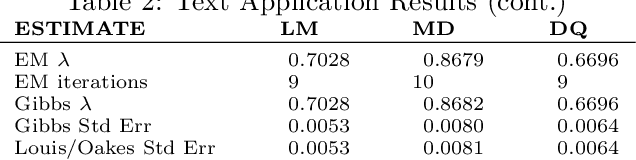

An Expectation Maximization Framework for Yule-Simon Preferential Attachment Models

Sep 16, 2018

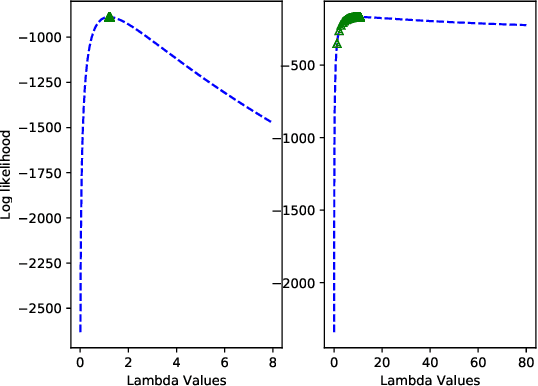

Abstract: In this paper, we develop an Expectation Maximization (EM) algorithm to estimate the parameter of a Yule-Simon distribution. The Yule-Simon distribution exhibits the "rich get richer" effect whereby an 80-20 type of rule tends to dominate. These distributions are ubiquitous in industrial settings. The EM algorithm presented provides both frequentist and Bayesian estimates of the $\lambda$ parameter. By placing the estimation method within the EM framework, we are able to derive standard errors of the resulting estimate. Additionally, we prove convergence of the Yule-Simon EM algorithm and study the rate of convergence. An explicit, closed-form solution for the rate of convergence of the algorithm is given.
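As an illustration only, here is a minimal sketch of one way an EM iteration for the Yule-Simon parameter can be set up. It assumes the parameterization $P(K = k) = \lambda\, B(k, \lambda + 1)$ and the standard mixture representation $W \sim \mathrm{Exp}(\lambda)$, $K \mid W \sim \mathrm{Geometric}(e^{-W})$, under which $E[W \mid K = k] = \sum_{j=1}^{k} 1/(\lambda + j)$ and the M-step is $\lambda \leftarrow n / \sum_i E[W_i \mid k_i]$. Whether this matches the paper's derivation is an assumption. The sketch covers the frequentist estimate only (no Bayesian estimate or standard errors), and all function names are hypothetical.

```python
from typing import Sequence


def expected_latent(k: int, lam: float) -> float:
    """E-step quantity E[W | K = k] under the assumed Exp/Geometric mixture.

    Equals digamma(lam + k + 1) - digamma(lam + 1), which for integer k
    reduces to the finite sum below.
    """
    return sum(1.0 / (lam + j) for j in range(1, k + 1))


def yule_simon_em(
    data: Sequence[int],
    lam0: float = 1.0,
    tol: float = 1e-10,
    max_iter: int = 10_000,
) -> float:
    """Iterate E- and M-steps until the parameter change falls below tol."""
    n = len(data)
    lam = lam0
    for _ in range(max_iter):
        # E-step: expected latent waiting times given the current parameter.
        total = sum(expected_latent(k, lam) for k in data)
        # M-step: maximize the expected complete-data log-likelihood.
        new_lam = n / total
        if abs(new_lam - lam) < tol:
            return new_lam
        lam = new_lam
    return lam


def score(data: Sequence[int], lam: float) -> float:
    """Derivative of the observed-data log-likelihood; zero at the MLE."""
    return len(data) / lam - sum(expected_latent(k, lam) for k in data)
```

A fixed point of this iteration satisfies `score(data, lam) == 0` up to numerical tolerance, which offers a quick sanity check. Note that a sample consisting only of ones has no finite maximizer under this parameterization, so the iteration would not settle in that case.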

A Second Order Cumulant Spectrum Based Test for Strict Stationarity

Jan 20, 2018

Abstract: This article develops a statistical test for the null hypothesis of strict stationarity of a discrete time stochastic process. When the null hypothesis is true, the second order cumulant spectrum is zero at all the discrete Fourier frequency pairs present in the principal domain of the cumulant spectrum. The test uses a frame (window) averaged sample estimate of the second order cumulant spectrum to build a test statistic that has an asymptotic complex standard normal distribution. We derive the test statistic, study the size and power properties of the test, and demonstrate its implementation with intraday stock market return data. The test has conservative size properties and good power to detect varying variance and unit root in the presence of varying variance.
