Dawei Zhan

An Adaptive Dropout Approach for High-Dimensional Bayesian Optimization

Apr 15, 2025

Abstract:Bayesian optimization (BO) is a widely used algorithm for solving expensive black-box optimization problems. However, its performance decreases significantly on high-dimensional problems because the acquisition function is itself high-dimensional. In the proposed algorithm (AdaDropout), we adaptively drop out variables of the acquisition function as the iterations proceed. By gradually reducing the dimension of the acquisition function, the proposed approach makes the acquisition function progressively easier to optimize. Numerical experiments demonstrate that AdaDropout effectively tackles high-dimensional challenges and improves solution quality where standard Bayesian optimization methods often struggle. Moreover, it achieves superior results when compared with state-of-the-art high-dimensional Bayesian optimization approaches. This work provides a simple yet efficient solution for high-dimensional expensive optimization.
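The dropout idea in the abstract above can be illustrated with a minimal sketch. The abstract does not state how the number of retained variables is chosen, so the linear schedule, the random choice of variables, and the names `active_dimension`, `choose_active_variables`, and `fill_in` are all assumptions made for illustration, not the paper's method.

```python
import random

def active_dimension(t, n_iters, dim, min_dim=1):
    """Hypothetical linear schedule (an assumption, not from the paper):
    all `dim` variables are active at t=0, `min_dim` at the last iteration."""
    if n_iters <= 1:
        return dim
    return dim - ((dim - min_dim) * t) // (n_iters - 1)

def choose_active_variables(t, n_iters, dim, rng, min_dim=1):
    """Pick which variables the acquisition function may vary at iteration t.
    Random selection is an assumption made for this sketch."""
    k = active_dimension(t, n_iters, dim, min_dim)
    return sorted(rng.sample(range(dim), k))

def fill_in(x_best, active, values):
    """Build a full-dimensional candidate: active variables take the new
    values, dropped variables are copied from the current best point."""
    x = list(x_best)
    for i, v in zip(active, values):
        x[i] = v
    return x

rng = random.Random(0)
# Number of active variables over 10 iterations of a 100-dimensional problem.
schedule = [active_dimension(t, 10, 100, min_dim=5) for t in range(10)]
# At the last iteration only 5 variables are optimized; the rest are fixed.
active = choose_active_variables(9, 10, 100, rng, min_dim=5)
candidate = fill_in([0.5] * 100, active, [0.0] * len(active))
```

In a full loop, the acquisition function would be optimized only over the `active` variables, and `fill_in` would map the low-dimensional optimum back to a full candidate for expensive evaluation.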

Batch Bayesian Optimization via Expected Subspace Improvement

Nov 25, 2024

Abstract:Extending Bayesian optimization to batch evaluation can enable the designer to make the most of parallel computing technology. Most current batch approaches use artificial functions to simulate the sequential Bayesian optimization algorithm's behavior in order to select a batch of points for parallel evaluation. However, as the batch size grows, the accumulated error introduced by these artificial functions increases rapidly, which dramatically decreases the optimization efficiency of the algorithm. In this work, we propose a simple and efficient approach to extend Bayesian optimization to batch evaluation. Unlike existing batch approaches, the new approach draws a batch of subspaces of the original problem and selects one acquisition point from each subspace. To achieve this, we propose the expected subspace improvement criterion to measure the amount of improvement that a candidate point can achieve within a certain subspace. By optimizing these expected subspace improvement functions simultaneously, we can get a batch of query points for expensive evaluation. Numerical experiments show that our proposed approach can achieve near-linear speedup when compared with the sequential Bayesian optimization algorithm, and performs very competitively when compared with eight state-of-the-art batch algorithms. This work provides a simple yet efficient approach for batch Bayesian optimization. A Matlab implementation of our approach is available at https://github.com/zhandawei/Expected_Subspace_Improvement_Batch_Bayesian_Optimization
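As a rough illustration of drawing one query point per subspace, the sketch below is written in Python rather than the Matlab of the linked repository and does not reproduce it. It assumes a unit-box domain, a surrogate `predict(x)` returning a Gaussian mean and standard deviation, random coordinate subsets as subspaces, coordinates outside a subspace held at the current best point, and plain random search as the inner optimizer. It also handles the subspaces one after another, whereas the abstract describes optimizing them simultaneously. `select_batch` and `toy_predict` are hypothetical names.

```python
import math
import random

def expected_improvement(mu, sigma, f_best):
    """Standard expected improvement for minimization under N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(f_best - mu, 0.0)
    z = (f_best - mu) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (f_best - mu) * cdf + sigma * pdf

def select_batch(predict, x_best, f_best, batch_size, sub_dim, rng, n_samples=200):
    """Draw `batch_size` random subspaces and pick one point in each.
    Only the subspace coordinates vary; the others stay at x_best (assumption)."""
    dim = len(x_best)
    batch, subspaces = [], []
    for _ in range(batch_size):
        subspace = sorted(rng.sample(range(dim), sub_dim))
        best_x, best_ei = list(x_best), -1.0
        for _ in range(n_samples):  # random search stands in for a real optimizer
            x = list(x_best)
            for i in subspace:
                x[i] = rng.random()
            mu, sigma = predict(x)
            ei = expected_improvement(mu, sigma, f_best)
            if ei > best_ei:
                best_x, best_ei = x, ei
        batch.append(best_x)
        subspaces.append(subspace)
    return batch, subspaces

def toy_predict(x):
    """Toy surrogate (an assumption): quadratic mean, constant uncertainty."""
    return sum((v - 0.5) ** 2 for v in x), 0.1

rng = random.Random(1)
x_best = [0.0] * 6
batch, subspaces = select_batch(toy_predict, x_best, 1.5, batch_size=3, sub_dim=2, rng=rng)
```

Each point in `batch` would then be sent for expensive evaluation in parallel.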

Expected Coordinate Improvement for High-Dimensional Bayesian Optimization

Apr 18, 2024

Abstract:The Bayesian optimization (BO) algorithm is very popular for solving low-dimensional expensive optimization problems. Extending Bayesian optimization to high dimensions is a meaningful but challenging task. One of the major challenges is that it is difficult to find good infill solutions because the acquisition functions are also high-dimensional. In this work, we propose the expected coordinate improvement (ECI) criterion for high-dimensional Bayesian optimization. The proposed ECI criterion measures the potential improvement we can get by moving the current best solution along one coordinate. The proposed approach selects the coordinate with the highest ECI value to refine in each iteration and covers all the coordinates gradually by iterating over the coordinates. The greatest advantage of the proposed ECI-BO (expected coordinate improvement based Bayesian optimization) algorithm over the standard BO algorithm is that its infill selection problem is always one-dimensional and thus can be easily solved. Numerical experiments show that the proposed algorithm can achieve significantly better results than the standard BO algorithm and competitive results when compared with five state-of-the-art high-dimensional BOs. This work provides a simple but efficient approach for high-dimensional Bayesian optimization.
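The coordinate-wise selection described above can be sketched as a one-dimensional search per coordinate. This is an illustrative sketch only: it assumes a surrogate `predict(x)` that returns a Gaussian mean and standard deviation, uses the standard expected improvement formula evaluated on a fixed grid, and the names `best_move_along_coordinate` and `toy_predict` are hypothetical. It shows a single selection step, not the full ECI-BO loop.

```python
import math

def expected_improvement(mu, sigma, f_best):
    """Standard expected improvement for minimization under N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(f_best - mu, 0.0)
    z = (f_best - mu) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (f_best - mu) * cdf + sigma * pdf

def best_move_along_coordinate(predict, x_best, f_best, i, lower, upper, n_grid=101):
    """Vary only coordinate i of x_best over a grid and return the value with
    the highest expected improvement, together with that improvement."""
    best_val, best_ei = x_best[i], 0.0
    for k in range(n_grid):
        v = lower + (upper - lower) * k / (n_grid - 1)
        x = list(x_best)
        x[i] = v
        mu, sigma = predict(x)
        ei = expected_improvement(mu, sigma, f_best)
        if ei > best_ei:
            best_val, best_ei = v, ei
    return best_val, best_ei

def toy_predict(x):
    """Toy surrogate (an assumption): quadratic mean, constant uncertainty."""
    return sum((v - 0.5) ** 2 for v in x), 0.1

x_best = [0.5, 0.0]   # first coordinate is already at the toy optimum
f_best = 0.25
scores = [best_move_along_coordinate(toy_predict, x_best, f_best, i, 0.0, 1.0)
          for i in range(len(x_best))]
# Refine the coordinate whose one-dimensional search promises the most improvement.
coord = max(range(len(x_best)), key=lambda i: scores[i][1])
```

Because each inner search is one-dimensional, a grid or any simple line search is enough, which is the property the abstract highlights.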

RTFN: A Robust Temporal Feature Network for Time Series Classification

Nov 24, 2020

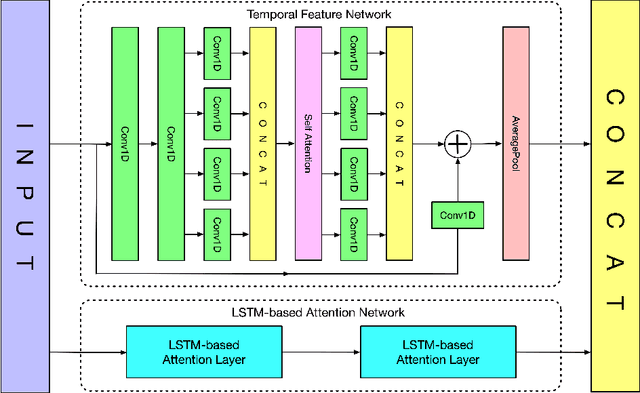

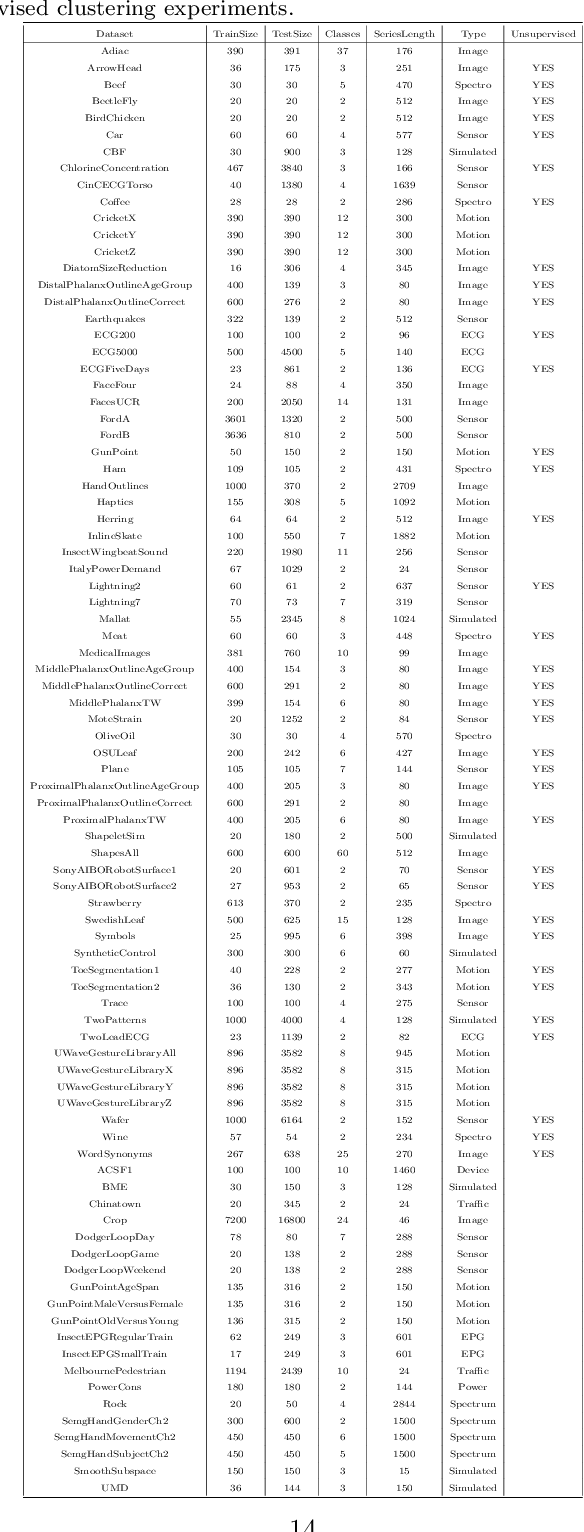

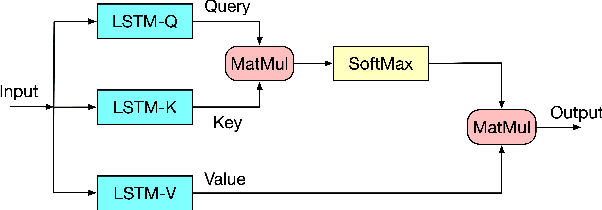

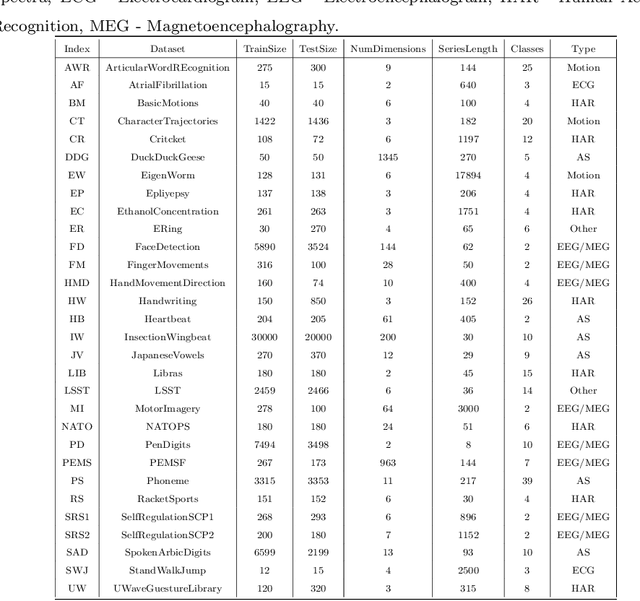

Abstract:Time series data usually contains local and global patterns. Most existing feature networks pay more attention to local features than to the relationships among them. The latter are, however, also important yet more difficult to explore. Obtaining sufficient representations with a feature network remains challenging. To this end, we propose a novel robust temporal feature network (RTFN) for feature extraction in time series classification, containing a temporal feature network (TFN) and an LSTM-based attention network (LSTMaN). TFN is a residual structure with multiple convolutional layers. It functions as a local-feature extraction network to mine sufficient local features from data. LSTMaN is composed of two identical layers, where attention and long short-term memory (LSTM) networks are hybridized. This network acts as a relation extraction network to discover the intrinsic relationships among the extracted features at different positions in sequential data. In experiments, we embed RTFN into a supervised structure as a feature extractor and into an unsupervised structure as an encoder, respectively. The results show that the RTFN-based structures achieve excellent supervised and unsupervised performance on a large number of UCR2018 and UEA2018 datasets.
