David Rios Insua

A Cybersecurity Risk Analysis Framework for Systems with Artificial Intelligence Components

Jan 03, 2024

Abstract:The introduction of the European Union Artificial Intelligence Act, the NIST Artificial Intelligence Risk Management Framework, and related norms demands a better understanding and implementation of novel risk analysis approaches to evaluate systems with Artificial Intelligence components. This paper provides a cybersecurity risk analysis framework that can help assess such systems. We use an illustrative example concerning automated driving systems.

Protecting Classifiers From Attacks. A Bayesian Approach

Apr 18, 2020

Abstract:Classification problems in security settings are usually modeled as confrontations in which an adversary tries to fool a classifier by manipulating the covariates of instances to obtain a benefit. Most approaches to such problems have focused on game-theoretic ideas with strong underlying common knowledge assumptions, which are not realistic in the security realm. We provide an alternative Bayesian framework that accounts for the lack of precise knowledge about the attacker's behavior using adversarial risk analysis. A key ingredient required by our framework is the ability to sample from the distribution of originating instances given the possibly attacked observed one. We propose a sampling procedure based on approximate Bayesian computation, in which we simulate the attacker's problem, taking into account our uncertainty about the elements of that problem. For large-scale problems, we propose an alternative, scalable approach that can be used with differentiable classifiers. Within it, we move the computational load to the training phase by simulating attacks from an adversary, adapting the framework to obtain a classifier robustified against attacks.
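The sampling idea in this abstract can be illustrated with a minimal rejection-style approximate Bayesian computation sketch. It is not the paper's procedure: the one-dimensional covariate, the `simulate_attack` model, the uniform range for the attacker's strength, and the tolerance `eps` are all hypothetical choices made only for illustration, and the sketch assumes every observed instance has been attacked.

```python
import random


def simulate_attack(x, rng):
    """Hypothetical attacker model: shift an instance downwards by an
    uncertain amount. The uniform range encodes our (assumed) uncertainty
    about the attacker."""
    strength = rng.uniform(0.5, 1.5)
    return x - strength


def abc_originating_samples(x_observed, prior_pool, n_samples, eps, rng,
                            max_tries=100_000):
    """Rejection ABC: draw a candidate originating instance from the prior
    pool, simulate an attack on it, and keep the candidate when the simulated
    attacked instance lands within eps of the observed one."""
    accepted = []
    tries = 0
    while len(accepted) < n_samples and tries < max_tries:
        tries += 1
        x = rng.choice(prior_pool)            # candidate originating instance
        x_attacked = simulate_attack(x, rng)  # simulate the attacker's move
        if abs(x_attacked - x_observed) <= eps:
            accepted.append(x)
    return accepted


rng = random.Random(0)
# Hypothetical prior over originating instances, e.g. clean training data.
prior_pool = [rng.gauss(3.0, 1.0) for _ in range(2000)]
samples = abc_originating_samples(2.0, prior_pool, 200, 0.1, rng)
```

The accepted values in `samples` approximate draws of the originating instance given the observed value 2.0 under these assumed models. A smaller `eps` tightens the approximation at the cost of more rejected candidates.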

Adversarial Machine Learning: Perspectives from Adversarial Risk Analysis

Mar 07, 2020

Abstract:Adversarial Machine Learning (AML) is emerging as a major field aimed at the protection of automated ML systems against security threats. The majority of work in this area has built upon a game-theoretic framework by modelling a conflict between an attacker and a defender. After reviewing game-theoretic approaches to AML, we discuss the benefits that a Bayesian Adversarial Risk Analysis perspective brings when defending ML-based systems. A research agenda is included.

Protecting from Malware Obfuscation Attacks through Adversarial Risk Analysis

Nov 09, 2019

Abstract:Malware constitutes a major global risk affecting millions of users each year. Standard algorithms in detection systems perform insufficiently when dealing with malware passed through obfuscation tools. We illustrate this by studying in detail an open-source metamorphic software tool, making use of a hybrid framework to obtain the relevant features from binaries. We then provide an improved alternative solution based on adversarial risk analysis, which we illustrate with an example.

Variationally Inferred Sampling Through a Refined Bound for Probabilistic Programs

Sep 23, 2019

Abstract:A framework to boost efficiency of Bayesian inference in probabilistic programs is introduced by embedding a sampler inside a variational posterior approximation, which we call the refined variational approximation. Its strength lies both in ease of implementation and in automatically tuning the sampler parameters to speed up mixing time. Several strategies to approximate the evidence lower bound (ELBO) computation are introduced, including a rewriting of the ELBO objective. A specialization towards state-space models is proposed. Experimental evidence of its efficient performance is shown by solving an influence diagram in a high-dimensional space using a conditional variational autoencoder (cVAE) as a deep Bayes classifier; an unconditional VAE on density estimation tasks; and state-space models for time-series data.

Opponent Aware Reinforcement Learning

Aug 26, 2019

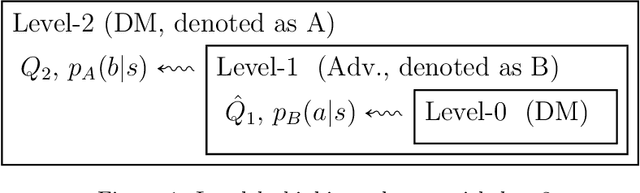

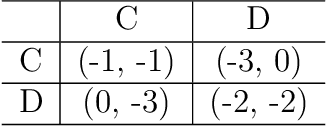

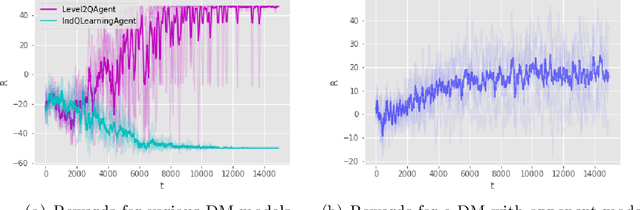

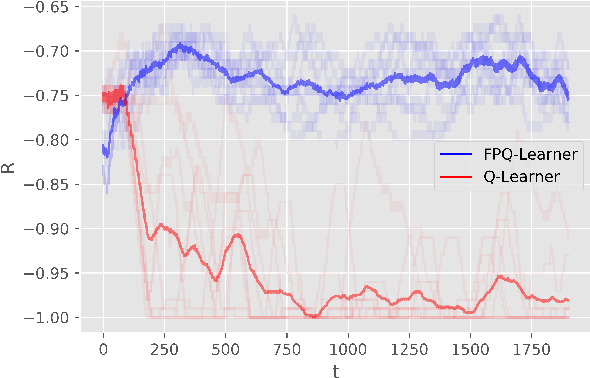

Abstract:We introduce Threatened Markov Decision Processes (TMDPs) as an extension of the classical Markov Decision Process framework for Reinforcement Learning (RL). TMDPs allow supporting a decision maker against potential opponents in an RL context. We also propose a level-k thinking scheme resulting in a novel learning approach to deal with TMDPs. After introducing our framework and deriving theoretical results, relevant empirical evidence is given via extensive experiments, showing the benefits of accounting for adversaries in RL while the agent learns.

Stochastic Gradient MCMC with Repulsive Forces

Nov 30, 2018

Abstract:We propose a unifying view of two different families of Bayesian inference algorithms, SG-MCMC and SVGD. We show that SVGD plus a noise term can be framed as a multiple chain SG-MCMC method. Instead of treating each parallel chain independently from others, the proposed algorithm implements a repulsive force between particles, avoiding collapse. Experiments in both synthetic distributions and real datasets show the benefits of the proposed scheme.
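As a rough illustration of the idea in this abstract, the sketch below runs a one-dimensional SVGD update with an added Gaussian noise term on a standard normal target. It is a simplified sketch under stated assumptions, not the paper's algorithm: the fixed RBF bandwidth, the step size, and the independent per-particle noise with scale `noise_scale` are hypothetical choices, and the noise structure derived in the paper may differ.

```python
import math
import random


def svgd_noise_step(particles, grad_log_p, step, bandwidth, noise_scale, rng):
    """One SVGD update with an RBF kernel plus independent Gaussian noise
    (the noise form here is an assumption made for illustration)."""
    n = len(particles)
    updated = []
    for xi in particles:
        phi = 0.0
        for xj in particles:
            k = math.exp(-((xj - xi) ** 2) / (2.0 * bandwidth ** 2))
            drive = k * grad_log_p(xj)               # pulls towards high density
            repulse = k * (xi - xj) / bandwidth ** 2  # pushes particles apart
            phi += drive + repulse
        phi /= n
        updated.append(xi + step * phi + noise_scale * rng.gauss(0.0, 1.0))
    return updated


rng = random.Random(0)
# Start all particles far from the target N(0, 1), whose score is -x.
particles = [rng.gauss(5.0, 0.5) for _ in range(20)]
for _ in range(1000):
    particles = svgd_noise_step(particles, lambda x: -x, 0.1, 1.0, 0.1, rng)

mean = sum(particles) / len(particles)
spread = max(particles) - min(particles)
```

The `repulse` term is the kernel gradient that keeps the particles from collapsing onto a single point, while the noise term makes each particle behave like a chain of a stochastic sampler rather than a deterministic optimizer.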
