David Fuenmayor

Bridging between LegalRuleML and TPTP for Automated Normative Reasoning (extended version)

Sep 12, 2022

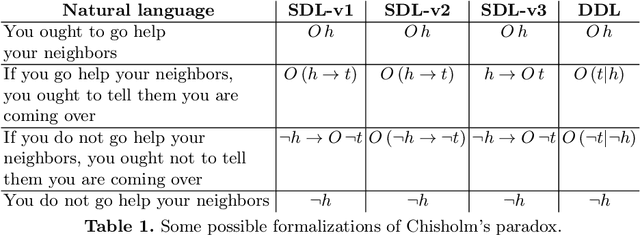

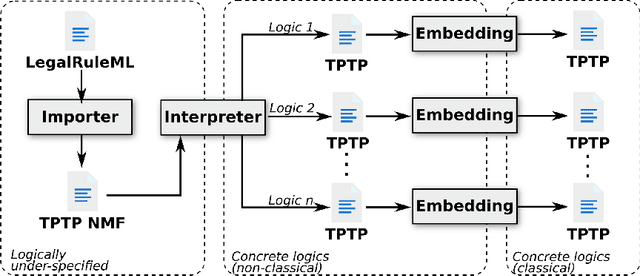

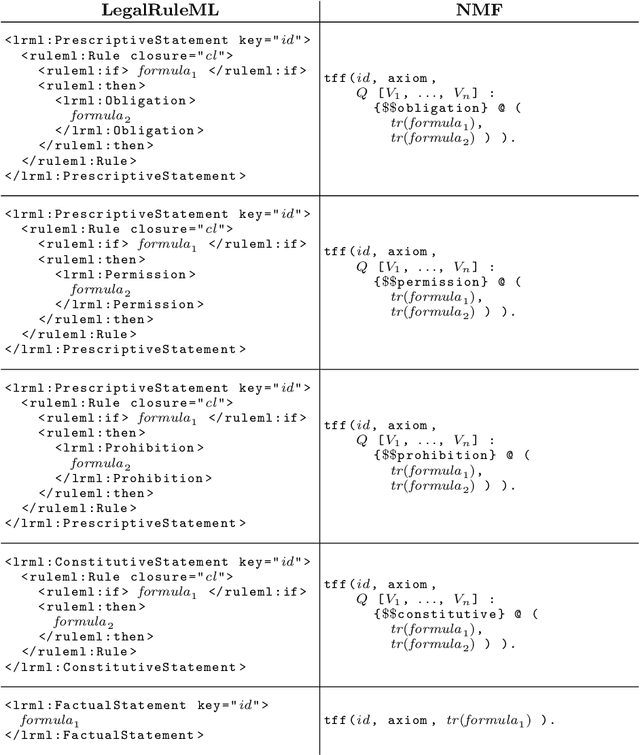

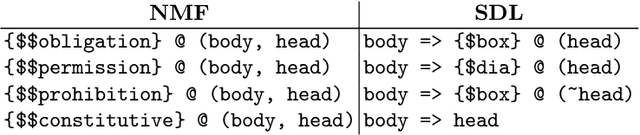

Abstract: LegalRuleML is a comprehensive XML-based representation framework for modeling and exchanging normative rules. The TPTP input and output formats, on the other hand, are general-purpose standards for the interaction with automated reasoning systems. In this paper we provide a bridge between the two communities by (i) defining a logic-pluralistic normative reasoning language based on the TPTP format, (ii) providing a translation scheme between relevant fragments of LegalRuleML and this language, and (iii) proposing a flexible architecture for automated normative reasoning based on this translation. We instantiate and demonstrate the approach, by way of example, with three different normative logics.

Who Finds the Short Proof? An Exploration of Variants of Boolos' Curious Inference using Higher-order Automated Theorem Provers

Aug 22, 2022

Abstract: This paper reports on an exploration of variants of Boolos' curious inference, using higher-order automated theorem provers (ATPs). Surprisingly, only a single shorthand notation had to be provided by hand. All higher-order lemmas required for obtaining a short proof are automatically discovered by the ATPs. Given the observations and suggestions in this paper, full proof automation of Boolos' example on the speedup of proof lengths, and related examples, now seems to be within reach for higher-order ATPs.

Automated Reasoning in Non-classical Logics in the TPTP World

Feb 20, 2022

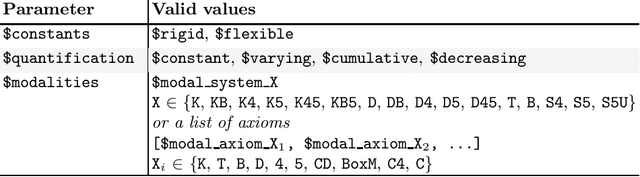

Abstract: Non-classical logics are used in a wide spectrum of disciplines, including artificial intelligence, computer science, mathematics, and philosophy. The de facto standard infrastructure for automated theorem proving, the TPTP World, currently supports only classical logics. Similar standards for non-classical logic reasoning do not exist (yet). This hampers practical development of reasoning systems, and limits their interoperability and application. This paper describes the latest extension of the TPTP World, which provides languages and infrastructure for reasoning in non-classical logics. The extensions integrate seamlessly with the existing TPTP World.

A Formalisation of Abstract Argumentation in Higher-Order Logic

Oct 18, 2021

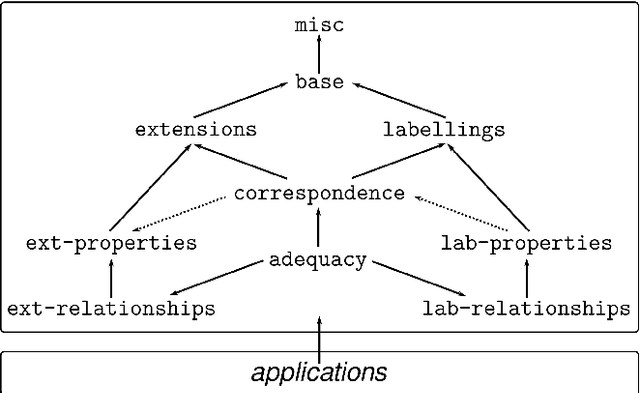

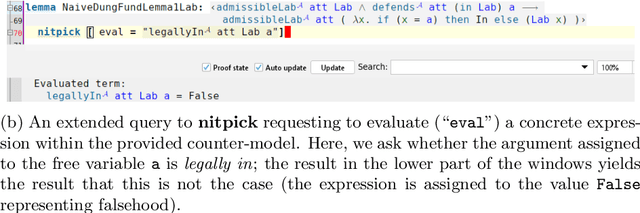

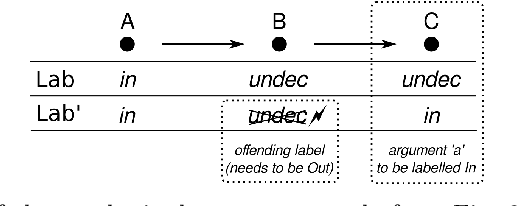

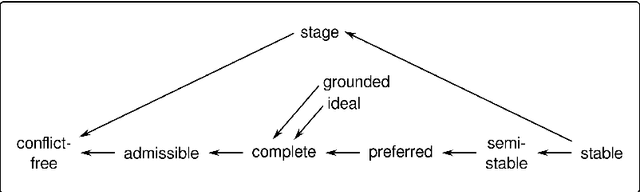

Abstract: We present an approach for representing abstract argumentation frameworks based on an encoding into classical higher-order logic. This provides a uniform framework for computer-assisted assessment of abstract argumentation frameworks using interactive and automated reasoning tools. This enables the formal analysis and verification of meta-theoretical properties as well as the flexible generation of extensions and labellings with respect to well-known argumentation semantics.

Higher-order Logic as Lingua Franca -- Integrating Argumentative Discourse and Deep Logical Analysis

Jul 02, 2020

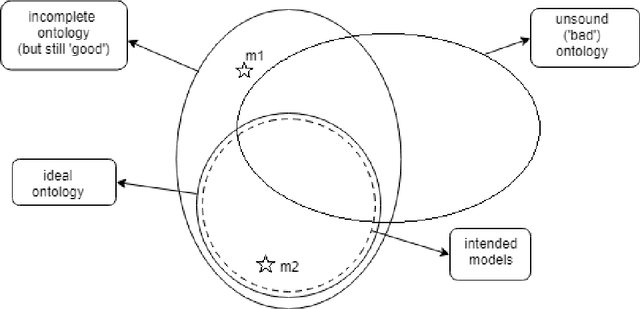

Abstract: We present an approach towards the deep, pluralistic logical analysis of argumentative discourse that benefits from the application of state-of-the-art automated reasoning technology for classical higher-order logic. Thanks to its expressivity, this logic can adopt the status of a uniform *lingua franca* allowing the encoding of both formalized arguments (their deep logical structure) and dialectical interactions (their attack and support relations). We illustrate this by analyzing an excerpt from an argumentative debate on climate engineering. Another, novel contribution concerns the definition of abstract, language-theoretical foundations for the characterization and assessment of shallow semantical embeddings (SSEs) of non-classical logics in classical higher-order logic, which constitute a cornerstone of our approach. The novel perspective we draw enables more concise and more elegant characterizations of semantical embeddings of logics and logic combinations, which is demonstrated with several examples.

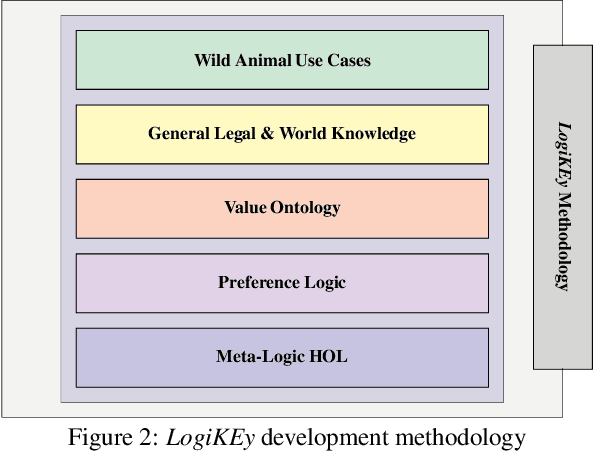

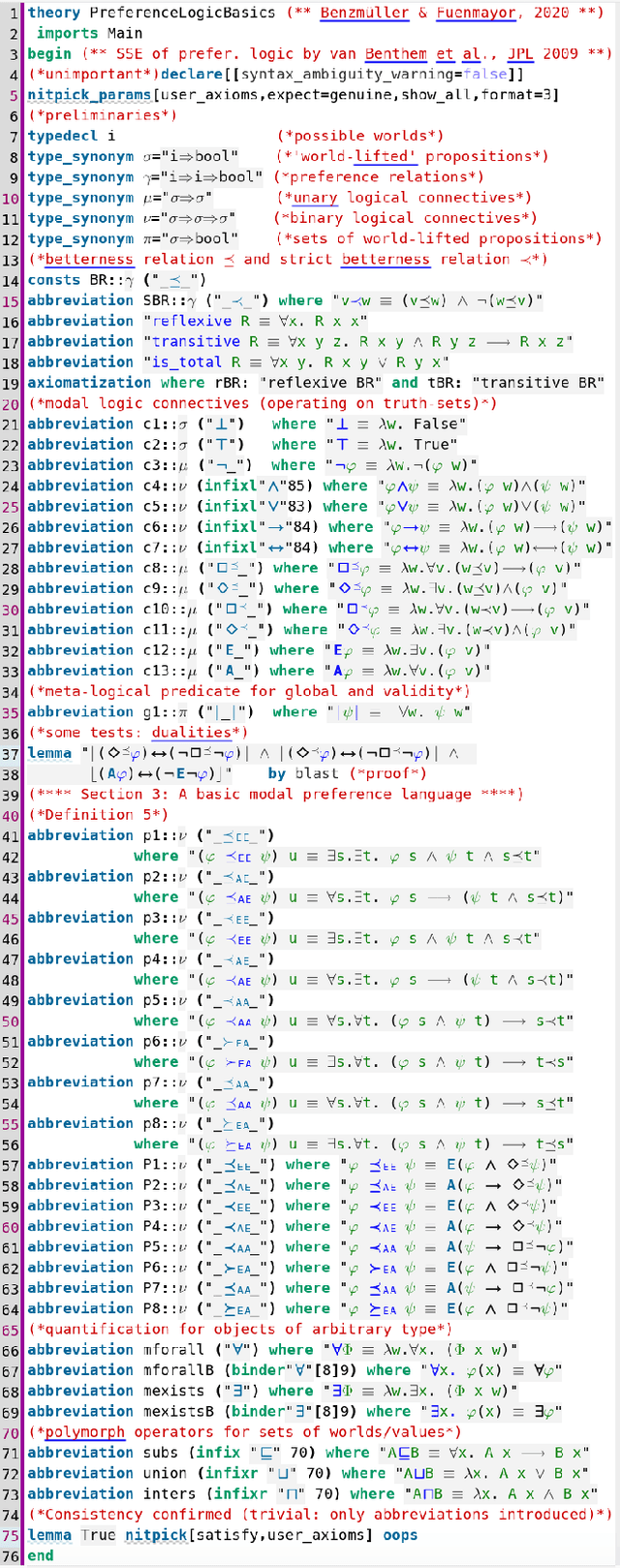

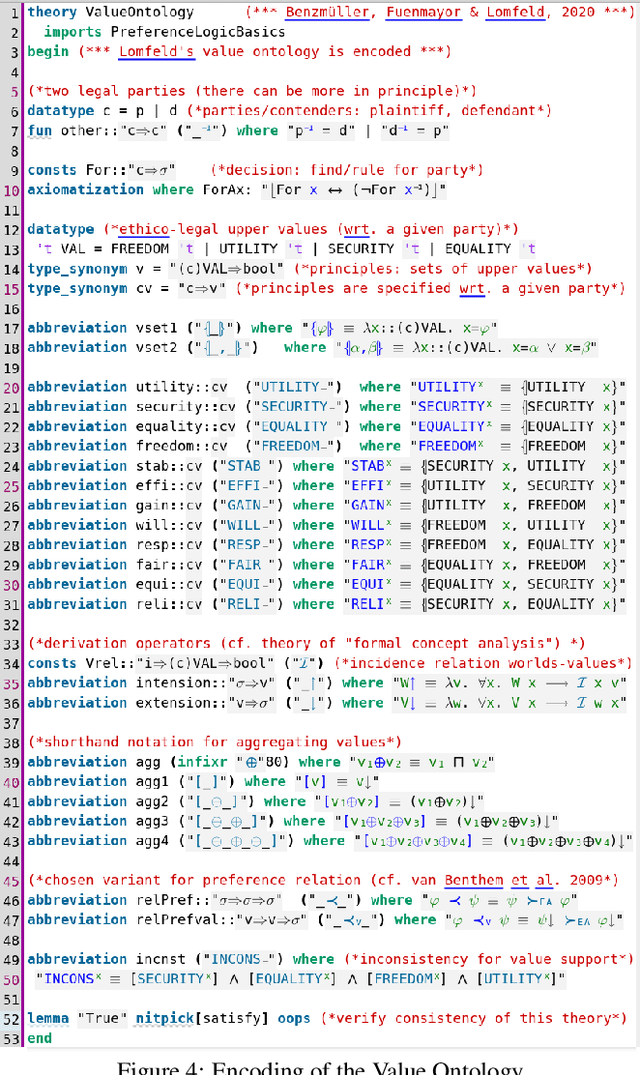

Encoding Legal Balancing: Automating an Abstract Ethico-Legal Value Ontology in Preference Logic

Jun 23, 2020

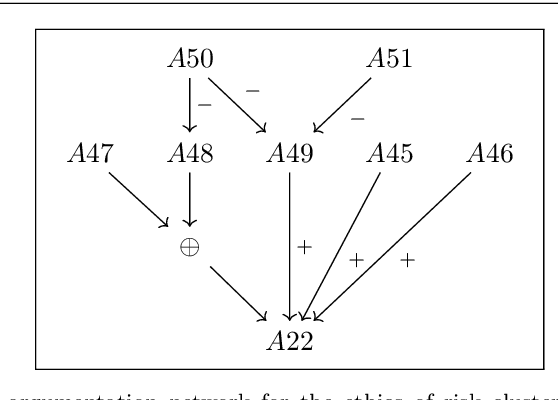

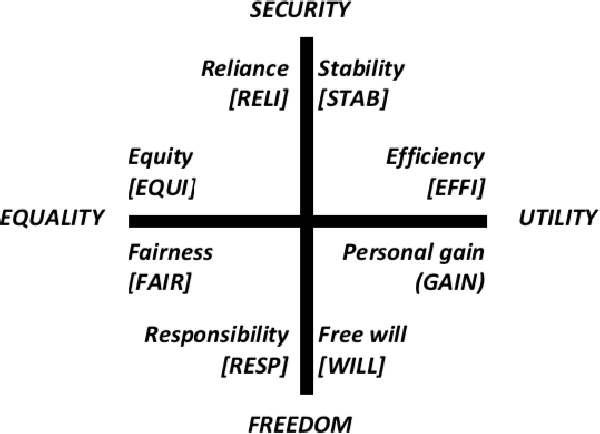

Abstract: Enabling machines to perform legal balancing is a non-trivial task challenged by a multitude of factors, some of which are addressed and explored in this work. We propose a holistic approach to formal modeling at different abstraction layers supported by a pluralistic framework in which the encoding of an ethico-legal value and upper ontology is developed in combination with the exploration of a formalization logic, with legal domain knowledge and with exemplary use cases until a reflective equilibrium is reached. Our work is enabled by a meta-logical approach to universal logical reasoning and it applies the recently introduced LogiKEy methodology for designing normative theories for ethical and legal reasoning. The particular focus in this paper is on the formalization and encoding of a value ontology suitable, e.g., for explaining and resolving legal conflicts in property law (wild animal cases).

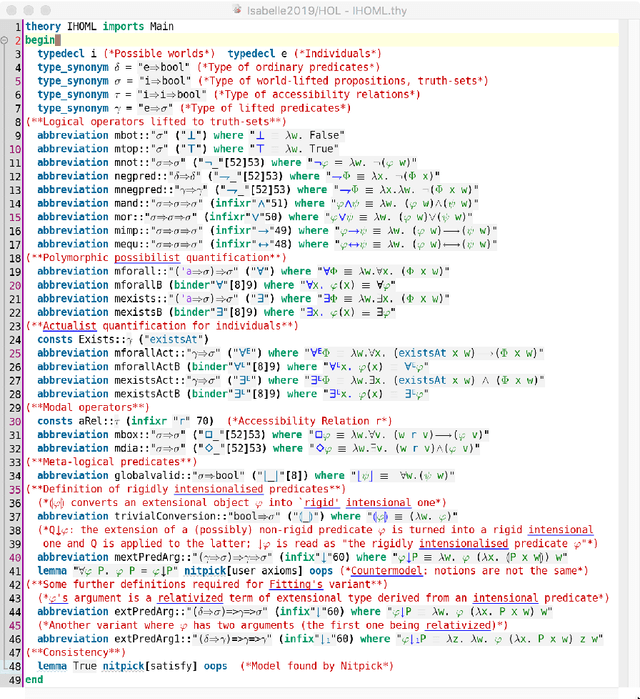

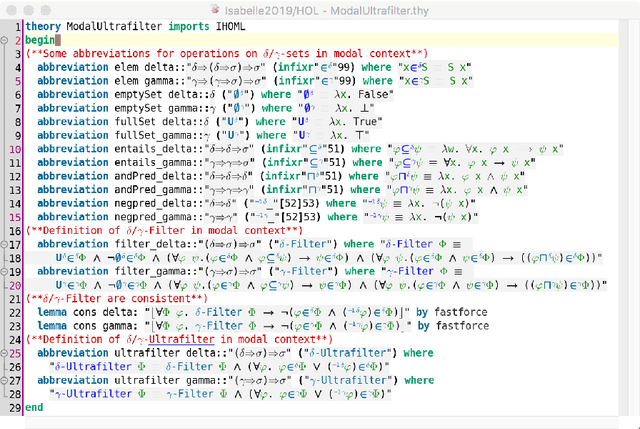

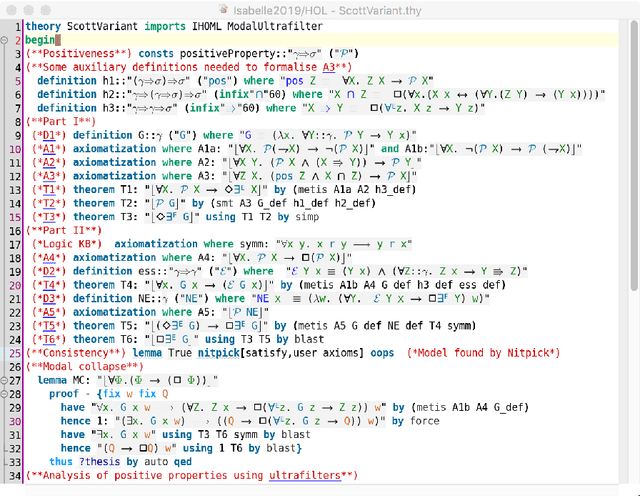

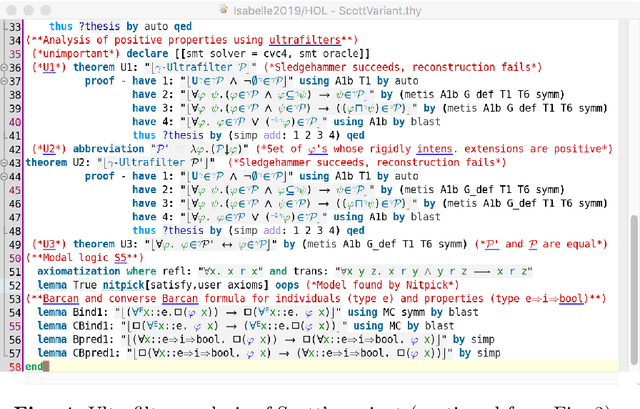

Computer-supported Analysis of Positive Properties, Ultrafilters and Modal Collapse in Variants of Gödel's Ontological Argument

Oct 20, 2019

Abstract: Three variants of Kurt Gödel's ontological argument, as proposed by Dana Scott, C. Anthony Anderson and Melvin Fitting, are encoded and rigorously assessed on the computer. In contrast to Scott's version of Gödel's argument, the two variants contributed by Anderson and Fitting avoid modal collapse. Although they appear quite different on a cursory reading, they are in fact closely related, as our computer-supported formal analysis (conducted in the proof assistant system Isabelle/HOL) reveals. Key to our formal analysis is the utilization of suitably adapted notions of (modal) ultrafilters, and a careful distinction between extensions and intensions of positive properties.

A Computational-Hermeneutic Approach for Conceptual Explicitation

Jul 19, 2019

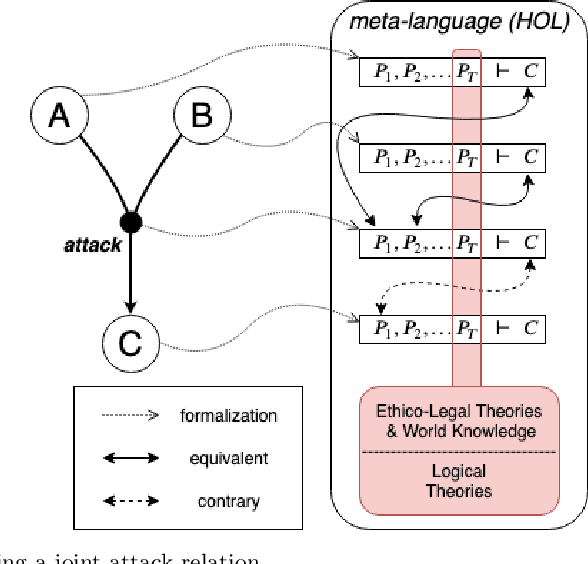

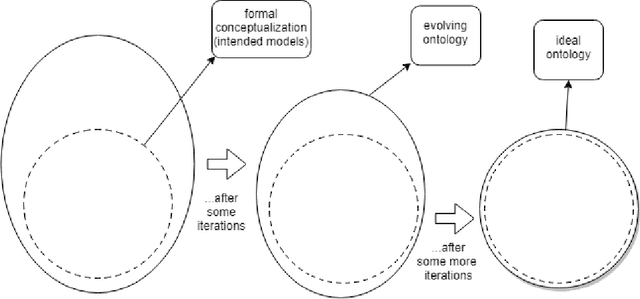

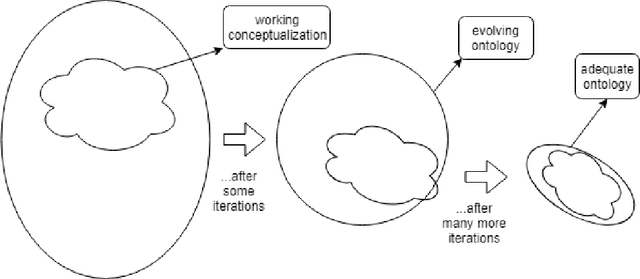

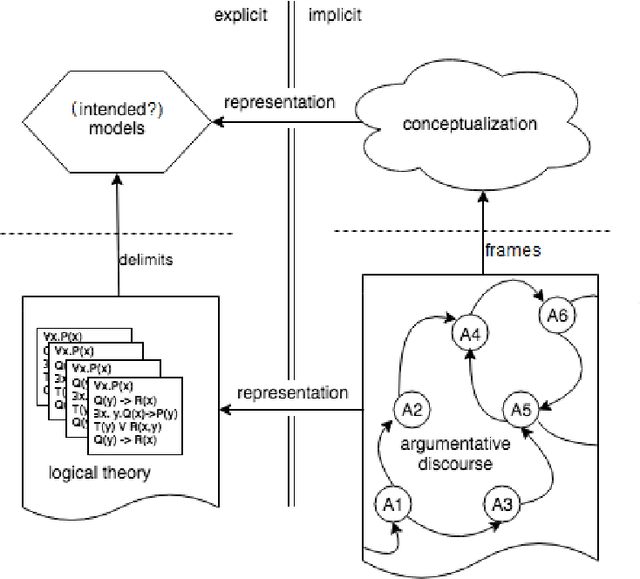

Abstract: We present a computer-supported approach for the logical analysis and conceptual explicitation of argumentative discourse. Computational hermeneutics harnesses recent progress in automated reasoning for higher-order logics and aims at formalizing natural-language argumentative discourse using flexible combinations of expressive non-classical logics. In doing so, it allows us to render explicit the tacit conceptualizations implicit in argumentative discursive practices. Our approach operates on networks of structured arguments and is iterative and two-layered. At one layer we search for logically correct formalizations for each of the individual arguments. At the next layer we select among those correct formalizations the ones which honor the argument's dialectic role, i.e. attacking or supporting other arguments as intended. We operate at these two layers in parallel and continuously rate sentences' formalizations by using, primarily, inferential adequacy criteria. An interpretive, logical theory will thus gradually evolve. This theory is composed of meaning postulates serving as explications for concepts playing a role in the analyzed arguments. Such a recursive, iterative approach to interpretation does justice to the inherent circularity of understanding: the whole is understood compositionally on the basis of its parts, while each part is understood only in the context of the whole (hermeneutic circle). We briefly discuss previous work on exemplary applications of human-in-the-loop computational hermeneutics in metaphysical discourse. We also discuss some of the main challenges involved in fully automating our approach. By sketching some design ideas and reviewing relevant technologies, we argue for the technological feasibility of a highly automated computational hermeneutics.
