Daniel Pak-Kong Lun

Optimal Coded Diffraction Patterns for Practical Phase Retrieval

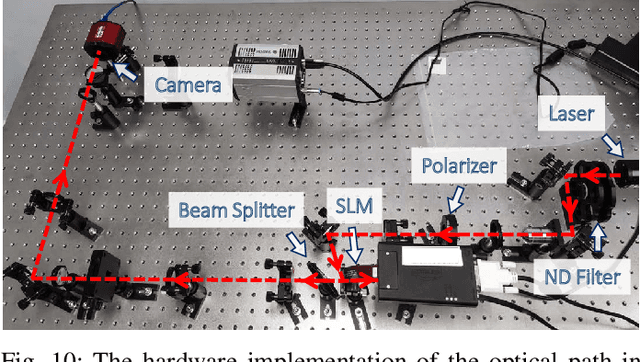

Mar 28, 2023

Abstract: Phase retrieval, the long-standing problem of recovering a complex-valued signal from its Fourier intensity measurements, has attracted significant interest because of its wide-ranging applications in optical imaging. To enhance accuracy, researchers introduce extra constraints into the measurement procedure by placing a random aperture mask in the optical path; the mask randomly modulates the light projected on the target object and yields coded diffraction patterns (CDP). It is known that random masks are non-bandlimited and can lead to considerable high-frequency components in the Fourier intensity measurements. These high-frequency components can lie beyond the Nyquist frequency of the optical system and are thus ignored by the phase retrieval optimization algorithms, resulting in degraded reconstruction performance. Recently, our team developed a binary green noise masking scheme that can significantly reduce the high-frequency components in the measurement. However, the scheme cannot be extended to generate multi-level aperture masks. This paper proposes a two-stage optimization algorithm, named $\textit{OptMask}$, to generate multi-level random masks that also significantly reduce the high-frequency components in the measurements while achieving higher accuracy than the binary masking scheme. Extensive experiments on a practical optical platform were conducted. The results demonstrate the superiority and practicality of the proposed $\textit{OptMask}$ over the existing masking schemes for CDP phase retrieval.
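The measurement model described in this abstract can be illustrated with a minimal NumPy sketch. It is not the paper's method or the OptMask algorithm; the function names, the array size, the white binary mask, and the random test object are all assumptions chosen for illustration. It shows one reason a mask adds constraints: without a mask, a circularly shifted object gives the same Fourier intensity, whereas a mask-modulated measurement changes with the shift.

```python
import numpy as np

def fourier_intensity(field):
    """Return |FFT2(field)|^2; the phase of the spectrum is discarded."""
    return np.abs(np.fft.fft2(field, norm="ortho")) ** 2

def cdp_measurement(obj, mask):
    """Modulate the object by the mask, then record the Fourier intensity."""
    return fourier_intensity(mask * obj)

rng = np.random.default_rng(0)
n = 32  # hypothetical image size

# Hypothetical complex-valued object and a circularly shifted copy of it.
obj = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
shifted = np.roll(obj, shift=(3, 5), axis=(0, 1))

# Hypothetical white random binary mask (not a green noise or OptMask design).
mask = rng.integers(0, 2, size=(n, n)).astype(float)

# Without a mask, the shift only changes the discarded phase.
same_without_mask = np.allclose(fourier_intensity(obj), fourier_intensity(shifted))

# With a mask, the two objects are modulated differently before the transform.
same_with_mask = np.allclose(cdp_measurement(obj, mask), cdp_measurement(shifted, mask))

print(same_without_mask, same_with_mask)
```

Under these assumptions the unmasked measurements should match while the masked ones should not, which is the translation ambiguity that the coded pattern removes. The sketch says nothing about the high-frequency content of the measurements, which is the issue the proposed masks address.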

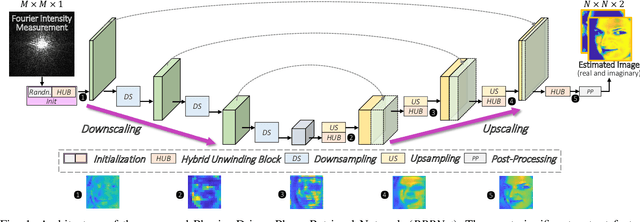

Towards Practical Single-shot Phase Retrieval with Physics-Driven Deep Neural Network

Aug 18, 2022

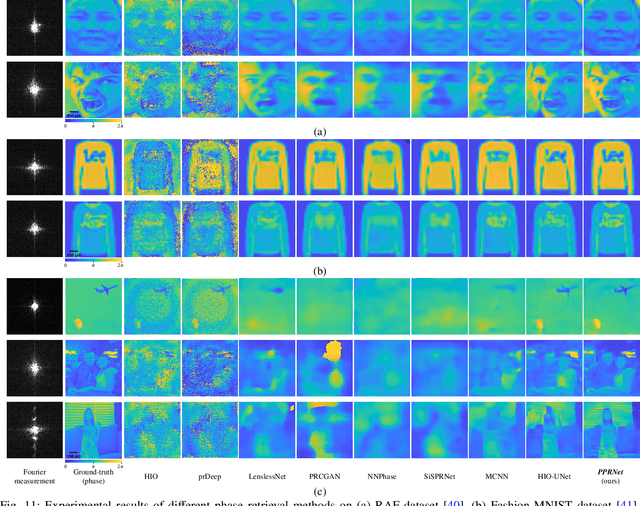

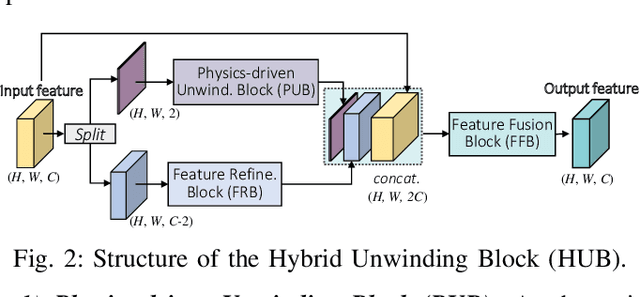

Abstract: Phase retrieval (PR), the long-standing problem of recovering a complex-valued signal from its Fourier intensity-only measurements, has attracted considerable attention due to its widespread applications in digital imaging. Recently, deep learning-based approaches were developed that achieved some success in single-shot PR. These approaches require only a single Fourier intensity measurement and do not need any additional constraints imposed on the measured data. Nevertheless, vanilla deep neural networks (DNN) do not perform well because of the substantial disparity between the input and output domains of PR problems. Physics-informed approaches try to incorporate the Fourier intensity measurements into an iterative procedure to increase the reconstruction accuracy. They, however, require a lengthy computation process, and the accuracy still cannot be guaranteed. Besides, many of these approaches work on simulation data that ignore common problems in practical optical PR systems, such as saturation and quantization errors. In this paper, a novel physics-driven multi-scale DNN structure dubbed PPRNet is proposed. Like other deep learning-based PR methods, PPRNet requires only a single Fourier intensity measurement. It is physics-driven in that the network is guided to follow the Fourier intensity measurement at different scales to enhance the reconstruction accuracy. PPRNet has a feedforward structure and can be trained end-to-end. Thus, it is much faster and more accurate than the traditional physics-driven PR approaches. Extensive simulations and experiments on a practical optical platform were conducted. The results demonstrate the superiority and practicality of the proposed PPRNet over the traditional learning-based PR methods.
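The saturation and quantization errors mentioned in this abstract can be made concrete with a small NumPy sketch. It is an illustrative sensor model under stated assumptions, not the paper's simulation: the function `sensor_readout`, the 8-bit depth, the Gaussian test object, and the exposure level (`full_scale` set to 1% of the peak intensity) are all hypothetical.

```python
import numpy as np

def sensor_readout(intensity, full_scale, bits=8):
    """Clip intensities at `full_scale` (saturation), then round to integer counts."""
    levels = 2 ** bits - 1
    clipped = np.clip(intensity, 0.0, full_scale)
    return np.round(clipped / full_scale * levels).astype(np.uint16)

n = 64  # hypothetical image size
yy, xx = np.mgrid[0:n, 0:n]

# Hypothetical smooth object: a Gaussian blob with a standard deviation of 4 pixels.
obj = np.exp(-((yy - n / 2) ** 2 + (xx - n / 2) ** 2) / (2 * 4.0 ** 2))

# Ideal Fourier intensity; most of the energy sits near the zero frequency.
intensity = np.abs(np.fft.fftshift(np.fft.fft2(obj))) ** 2

# Assumed exposure: the sensor saturates at 1% of the peak intensity.
full_scale = 0.01 * intensity.max()
counts = sensor_readout(intensity, full_scale)

saturated = int((counts == 255).sum())                # pixels pinned at the top count
lost = int(((counts == 0) & (intensity > 0)).sum())   # nonzero signal rounded to zero
print(saturated, lost)
```

Because a Fourier intensity pattern has a very large dynamic range, any single exposure in this sketch either saturates the central peak or rounds the weak outer components to zero, which is the kind of mismatch between ideal simulation data and practical measurements that the abstract refers to.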

DeepGIN: Deep Generative Inpainting Network for Extreme Image Inpainting

Aug 17, 2020

Abstract: The degree of difficulty in image inpainting depends on the types and sizes of the missing parts. Existing image inpainting approaches usually have difficulty completing missing parts in the wild with pleasing visual and contextual results, because they are either trained to deal with one specific type of missing pattern (mask) or unilaterally assume the shapes and/or sizes of the masked areas. We propose a deep generative inpainting network, named DeepGIN, to handle various types of masked images. We design a Spatial Pyramid Dilation (SPD) ResNet block to enable the use of distant features for reconstruction. We also employ a Multi-Scale Self-Attention (MSSA) mechanism and a Back Projection (BP) technique to enhance our inpainting results. DeepGIN generally outperforms the state-of-the-art approaches, both quantitatively and qualitatively, on two publicly available datasets (FFHQ and Oxford Buildings). We also demonstrate that our model is capable of completing masked images in the wild.
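The idea that dilation lets a convolution reach distant features can be illustrated with a short receptive-field calculation. This is generic arithmetic for dilated convolutions, not the SPD ResNet block itself: the abstract does not give the block's layout, and the 3x3 kernel size and the dilation rates below are hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span in pixels covered by one dilated convolution kernel along one axis."""
    return dilation * (kernel_size - 1) + 1

def stacked_receptive_field(kernel_size, dilations):
    """Receptive field of stride-1 dilated convolutions applied one after another."""
    field = 1
    for d in dilations:
        field += d * (kernel_size - 1)
    return field

rates = [1, 2, 4, 8]  # hypothetical dilation rates, not taken from the paper

spans = [effective_kernel_size(3, d) for d in rates]
total = stacked_receptive_field(3, rates)
print(spans, total)
```

With these assumed rates, a 3x3 kernel spans 3, 5, 9, and 17 pixels per axis, and four such layers in sequence see a 31-pixel window without adding any weights per kernel.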
