Daniel Barry

Electric Sheep Team Description Paper Humanoid League Kid-Size 2019

Oct 20, 2019

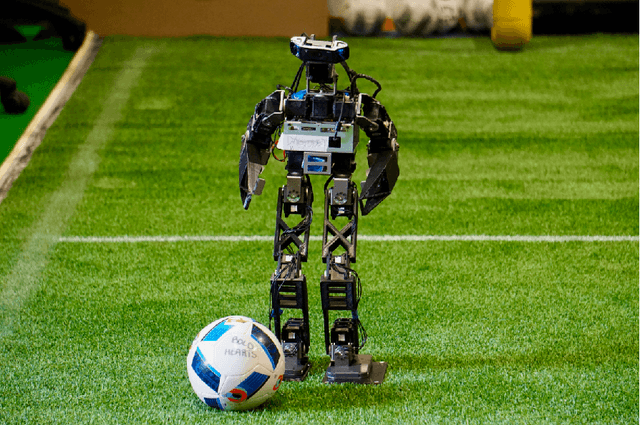

Abstract: In this paper we introduce the newly formed New Zealand-based RoboCup Humanoid Kid-Size team, Electric Sheep. We describe the humanoid robot platform we have developed, particularly our unique take on the chassis, the electronics and the use of several motor types to create a low-cost entry platform. To support this hardware, we discuss our software framework, vision processing, walking and game-play strategy methodology. Lastly, we give an overview of future research interests within the team and of our intended future contributions to the league and to the goal of RoboCup.

xYOLO: A Model For Real-Time Object Detection In Humanoid Soccer On Low-End Hardware

Oct 08, 2019

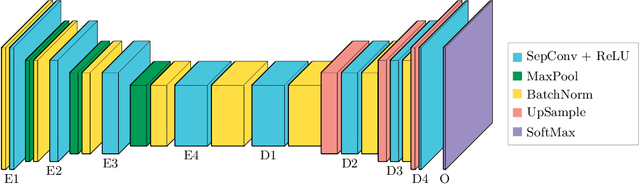

Abstract: With the emergence of onboard vision processing for areas such as the internet of things (IoT), edge computing and autonomous robots, there is increasing demand for computationally efficient convolutional neural network (CNN) models that perform real-time object detection on resource-constrained hardware devices. Tiny-YOLO is generally considered one of the faster object detectors for low-end devices and is the basis for our work. Our experiments on this network have shown that Tiny-YOLO achieves only 0.14 frames per second (FPS) on the Raspberry Pi 3 B, which is too slow for soccer-playing autonomous humanoid robots detecting goal and ball objects. In this paper we propose an adaptation of the YOLO CNN model, named xYOLO, that achieves object detection at 9.66 FPS on the Raspberry Pi 3 B. This is achieved by trading an acceptable amount of accuracy, making the network approximately 70 times faster than Tiny-YOLO. Greater inference speed-ups were also achieved on a desktop CPU and GPU. Additionally, we contribute an annotated Darknet dataset for goal and ball detection.

Bold Hearts Team Description for RoboCup 2019

Apr 22, 2019

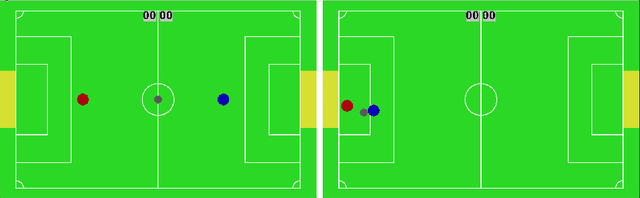

Abstract: We participated in the RoboCup 2018 competition in Montreal with our newly developed BoldBot, based on the Darwin-OP and mostly self-printed custom parts. This paper covers the lessons learnt from that competition and further developments for the RoboCup 2019 competition. Firstly, we briefly introduce the team along with an overview of past achievements. We then present a simple, standalone 2D simulator that we use to ease entry for new members by making basic RoboCup concepts quickly accessible. We describe the semantic segmentation approach used for our vision in the 2018 competition, which replaced the lookup-table (LUT) implementation we had before. We also discuss the extra structural support we plan to add to the printed parts of the BoldBot and our transition to ROS 2 as our new middleware. Lastly, we present a collection of our team's open-source contributions.
