Sander G. van Dijk

ROS 2 for RoboCup

Jun 29, 2019

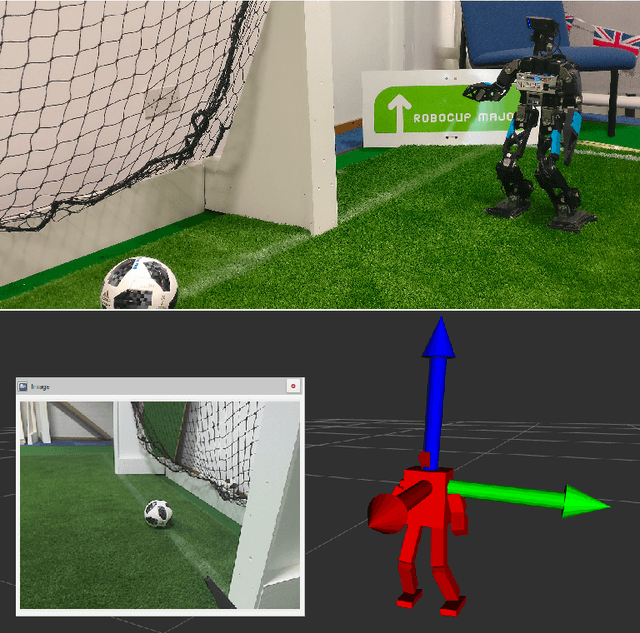

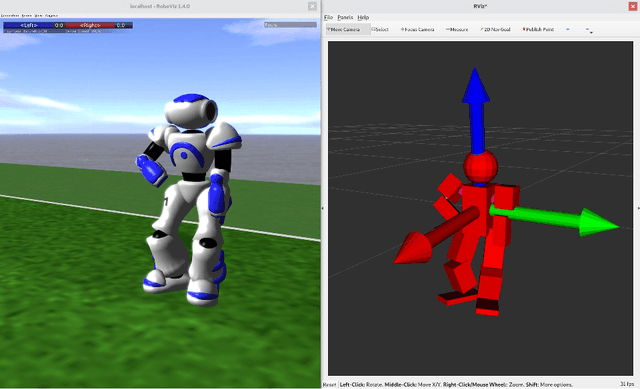

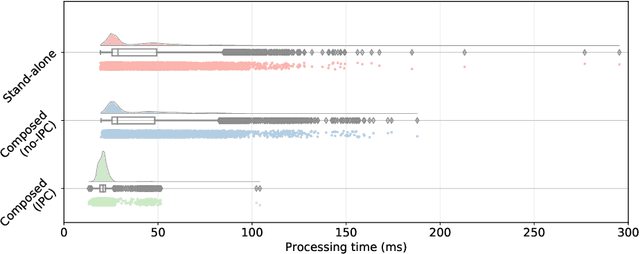

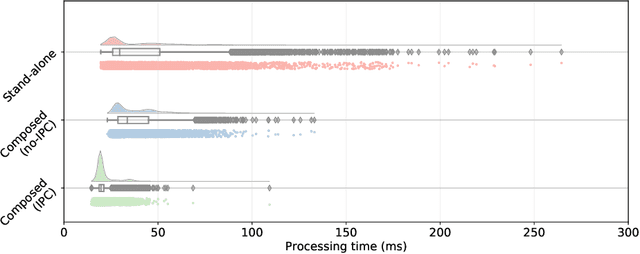

Abstract: There has always been strong motivation for sharing code and solutions among teams in the RoboCup community. Yet transferring code between teams has usually been complicated by the wide variety of frameworks in use and by their differences in processing sensory information. The RoboCup@Home league has tackled this by transitioning to ROS as a common framework. In contrast, other leagues, such as those using humanoid robots, are reluctant to use ROS, as real-time processing and low computational complexity are crucial in those leagues. However, ROS 2 now offers built-in support for real-time processing and promises to be suitable for embedded and multi-robot systems. It also offers the possibility to compose the set of nodes needed to run a robot into a single process. As we will show, this reduces communication overhead and allows for a single binary, which is pertinent to competitions such as the 3D Simulation League. Although ROS 2 has not yet been declared production-ready, we have started developing ROS 2 packages for use with humanoid robots (real and simulated). This paper presents the developed modules and our contributions to ROS 2 core and RoboCup-related packages. Most importantly, it provides benchmarks indicating that ROS 2 is a promising candidate for a common framework used across leagues.

Bold Hearts Team Description for RoboCup 2019

Apr 22, 2019

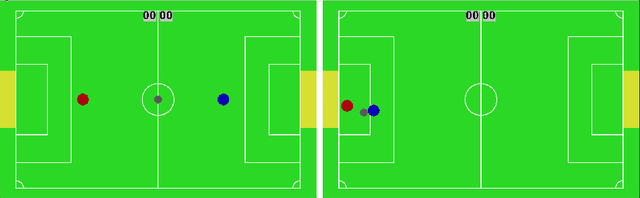

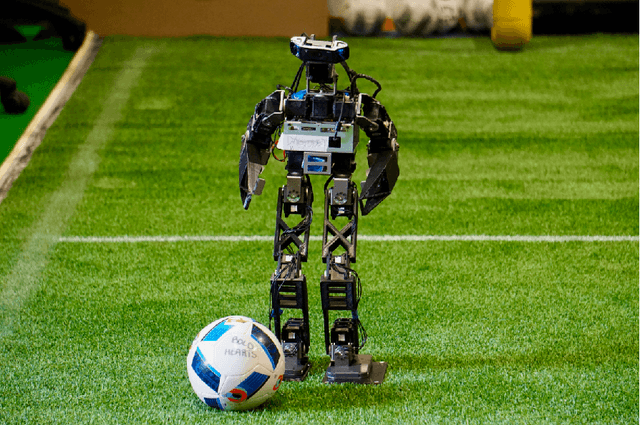

Abstract: We participated in the RoboCup 2018 competition in Montreal with our newly developed BoldBot, which is based on the Darwin-OP and built mostly from self-printed custom parts. This paper covers the lessons learnt from that competition and further developments for the RoboCup 2019 competition. First, we briefly introduce the team along with an overview of past achievements. We then present a simple, standalone 2D simulator that eases the entry of new members by making basic RoboCup concepts quickly accessible. We describe the semantic-segmentation approach to vision that we used in the 2018 competition, which replaced our earlier lookup-table (LUT) implementation. We also discuss the extra structural support we plan to add to the printed parts of the BoldBot and our transition to ROS 2 as our new middleware. Lastly, we present a collection of our team's open-source contributions.

Deep Learning for Semantic Segmentation on Minimal Hardware

Jul 15, 2018

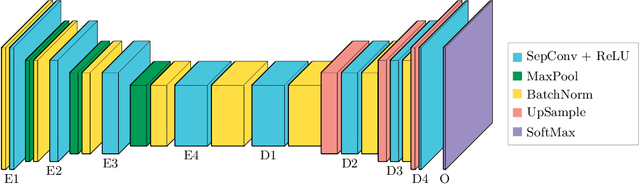

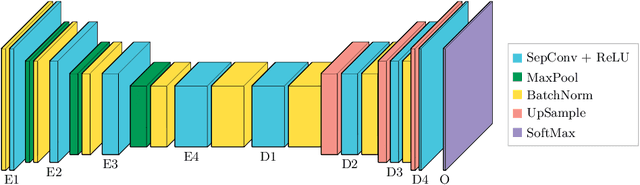

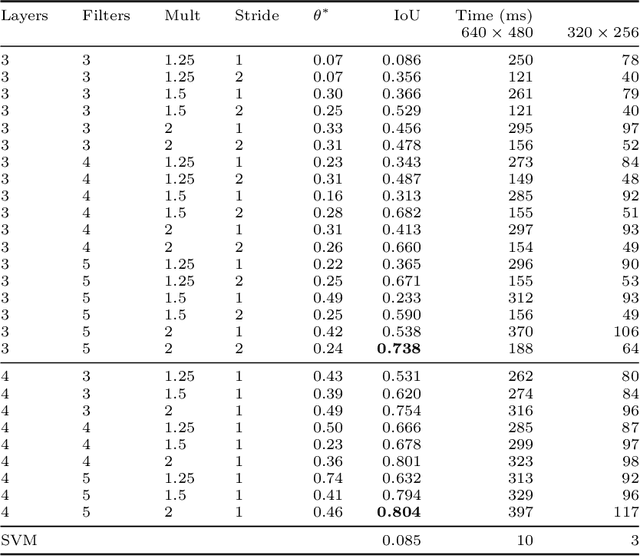

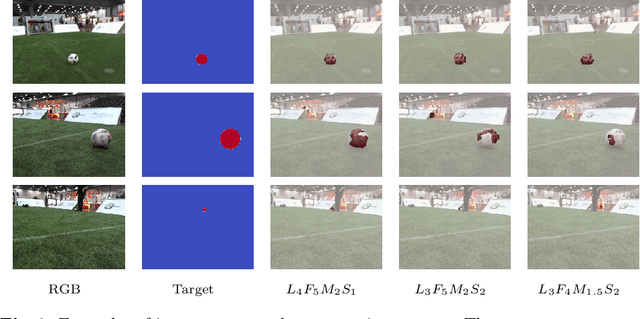

Abstract: Deep learning has revolutionised many fields, but it is still challenging to transfer its success to small mobile robots with minimal hardware. Some work has been done to this effect in the RoboCup humanoid football domain, but results that are performant, efficient, and still generally applicable outside this domain are lacking. We propose an approach that is conceptually different from those taken previously. It is based on semantic segmentation and does achieve these desired properties. Specifically, it can process full VGA images in real time on a low-power mobile processor. It can also handle multiple image dimensions without retraining and does not require domain-specific knowledge to achieve a high frame rate. It is applicable on minimal mobile hardware.