Dan Nettleton

"Rebuilding" Statistics in the Age of AI: A Town Hall Discussion on Culture, Infrastructure, and Training

Jan 24, 2026

Abstract: This article presents the full, original record of the 2024 Joint Statistical Meetings (JSM) town hall, "Statistics in the Age of AI," which convened leading statisticians to discuss how the field is evolving in response to advances in artificial intelligence, foundation models, large-scale empirical modeling, and data-intensive infrastructures. The town hall was structured around open panel discussion and extensive audience Q&A, with the aim of eliciting candid, experience-driven perspectives rather than formal presentations or prepared statements. This document preserves the extended exchanges among panelists and audience members, with minimal editorial intervention, and organizes the conversation around five recurring questions concerning disciplinary culture and practices, data curation and "data work," engagement with modern empirical modeling, training for large-scale AI applications, and partnerships with key AI stakeholders. By providing an archival record of this discussion, the preprint aims to support transparency, community reflection, and ongoing dialogue about the evolving role of statistics in the data- and AI-centric future.

Stability of Random Forests and Coverage of Random-Forest Prediction Intervals

Oct 28, 2023

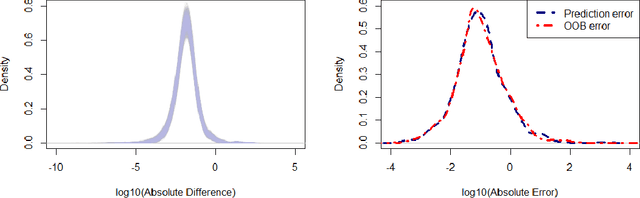

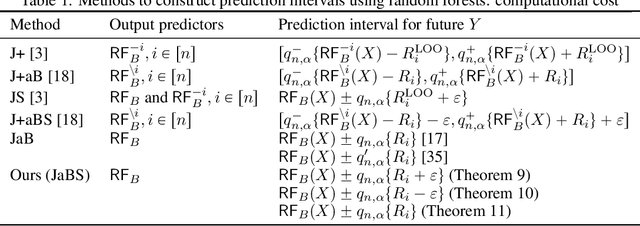

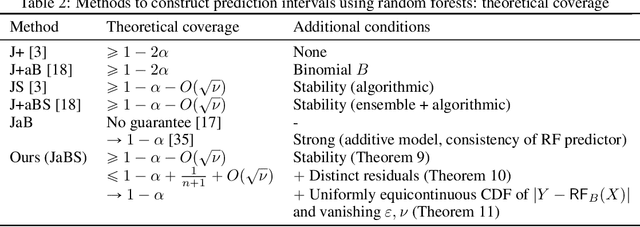

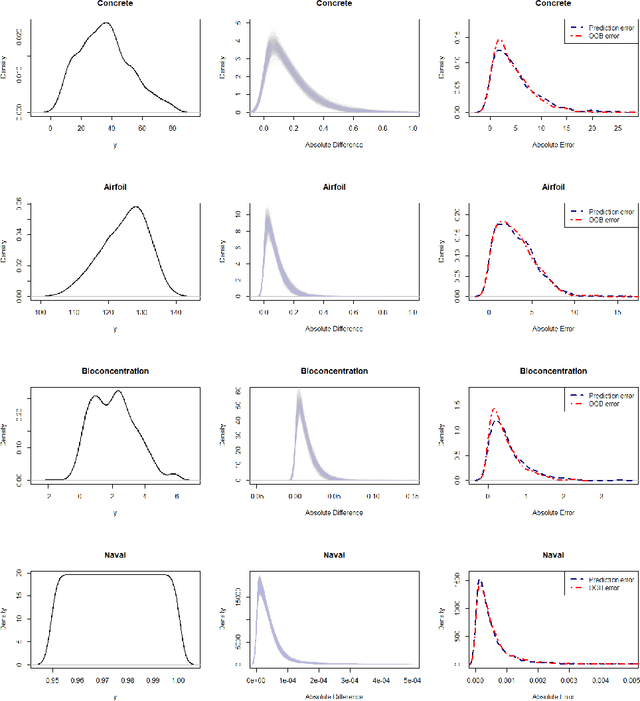

Abstract: We establish stability of random forests under the mild condition that the squared response ($Y^2$) does not have a heavy tail. In particular, our analysis holds for the practical version of random forests that is implemented in popular packages like `randomForest` in R. Empirical results show that stability may persist even beyond our assumption and hold for heavy-tailed $Y^2$. Using the stability property, we prove a non-asymptotic lower bound for the coverage probability of prediction intervals constructed from the out-of-bag error of random forests. With another mild condition that is typically satisfied when $Y$ is continuous, we also establish a complementary upper bound, which can be similarly established for the jackknife prediction interval constructed from an arbitrary stable algorithm. We also discuss the asymptotic coverage probability under assumptions weaker than those considered in previous literature. Our work implies that random forests, with their stability property, are an effective machine learning method that can provide not only satisfactory point prediction but also justified interval prediction at almost no extra computational cost.
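To make the idea of a prediction interval built from out-of-bag (OOB) errors concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the base learner is a one-split regression stump standing in for a full tree, the data are synthetic, and the names `fit_stump` and `fit_oob_forest` are hypothetical. The interval is the forest prediction shifted by empirical quantiles of the OOB errors, and the simple quantile indexing used here is an assumption.

```python
import math
import random


def fit_stump(xs, ys):
    """Fit a one-split regression stump on 1-D data (stand-in for a tree)."""
    pairs = sorted(zip(xs, ys))
    n = len(pairs)
    total = sum(y for _, y in pairs)
    total_sq = sum(y * y for _, y in pairs)
    # Fallback: a constant prediction if no valid split exists.
    best_sse, best = float("inf"), (pairs[0][0], total / n, total / n)
    left_sum = left_sq = 0.0
    for cut in range(1, n):
        y = pairs[cut - 1][1]
        left_sum += y
        left_sq += y * y
        if pairs[cut - 1][0] == pairs[cut][0]:
            continue  # cannot split between tied x values
        right_sum = total - left_sum
        right_sq = total_sq - left_sq
        sse = (left_sq - left_sum ** 2 / cut) + (right_sq - right_sum ** 2 / (n - cut))
        if sse < best_sse:
            best_sse = sse
            threshold = (pairs[cut - 1][0] + pairs[cut][0]) / 2
            best = (threshold, left_sum / cut, right_sum / (n - cut))
    thr, left_mean, right_mean = best
    return lambda x: left_mean if x <= thr else right_mean


def fit_oob_forest(xs, ys, n_trees=100, alpha=0.1, seed=0):
    """Bag stumps, then build intervals from quantiles of the OOB errors."""
    rng = random.Random(seed)
    n = len(xs)
    trees, bags = [], []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
        bags.append(set(idx))
    # OOB error for point i: response minus the mean prediction of the
    # trees whose bootstrap sample did not contain i.
    errors = []
    for i in range(n):
        oob = [t(xs[i]) for t, bag in zip(trees, bags) if i not in bag]
        if oob:
            errors.append(ys[i] - sum(oob) / len(oob))
    errors.sort()
    m = len(errors)
    q_lo = errors[math.floor(alpha / 2 * (m - 1))]
    q_hi = errors[math.ceil((1 - alpha / 2) * (m - 1))]

    def predict(x):
        return sum(t(x) for t in trees) / len(trees)

    def interval(x):
        p = predict(x)
        return p + q_lo, p + q_hi

    return predict, interval


# Synthetic data (an assumption for illustration): y = 2x + Gaussian noise.
data_rng = random.Random(1)
xs = [data_rng.random() for _ in range(150)]
ys = [2 * x + data_rng.gauss(0, 0.3) for x in xs]
predict, interval = fit_oob_forest(xs, ys)
print(interval(0.5))
```

The OOB errors come from the same bootstrap fits that produce the forest, so the interval needs no extra model training, which is the sense in which such intervals are nearly free computationally.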

Conformal Prediction Intervals for Neural Networks Using Cross Validation

Jun 30, 2020

Abstract: Neural networks are among the most powerful nonlinear models used to address supervised learning problems. Similar to most machine learning algorithms, neural networks produce point predictions and do not provide any prediction interval that includes an unobserved response value with a specified probability. In this paper, we propose the $k$-fold prediction interval method to construct prediction intervals for neural networks based on $k$-fold cross validation. Simulation studies and analysis of 10 real datasets are used to compare the finite-sample properties of the prediction intervals produced by the proposed method and the split conformal (SC) method. The results suggest that the proposed method tends to produce narrower prediction intervals compared to the SC method while maintaining the same coverage probability. Our experimental results also reveal that the proposed $k$-fold prediction interval method produces effective prediction intervals and is especially advantageous relative to competing approaches when the number of training observations is limited.
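The contrast between split conformal and a $k$-fold construction can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: a one-variable least-squares line stands in for the neural network, the data are synthetic, and the function names are hypothetical. The $k$-fold variant shown (pool absolute out-of-fold residuals, then center the interval on a model fit to all data) is one plausible reading of the abstract; the paper's exact construction may differ, and this variant does not inherit the split conformal guarantee automatically.

```python
import math
import random


def fit_line(xs, ys):
    """Least-squares line; a stand-in for any point predictor."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)


def conformal_quantile(scores, alpha):
    """The ceil((n + 1)(1 - alpha))-th smallest score; infinite if n is too small."""
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    return sorted(scores)[rank - 1] if rank <= n else math.inf


def split_conformal(xs, ys, x_new, alpha=0.1):
    """Fit on the first half, calibrate on the second half."""
    half = len(xs) // 2
    model = fit_line(xs[:half], ys[:half])
    scores = [abs(y - model(x)) for x, y in zip(xs[half:], ys[half:])]
    q = conformal_quantile(scores, alpha)
    return model(x_new) - q, model(x_new) + q


def kfold_interval(xs, ys, x_new, k=5, alpha=0.1):
    """Assumed k-fold variant: every observation yields an out-of-fold residual."""
    n = len(xs)
    scores = []
    for fold in range(k):
        held = set(range(fold, n, k))
        train = [i for i in range(n) if i not in held]
        model = fit_line([xs[i] for i in train], [ys[i] for i in train])
        scores += [abs(ys[i] - model(xs[i])) for i in sorted(held)]
    q = conformal_quantile(scores, alpha)
    final = fit_line(xs, ys)  # the final predictor uses all of the data
    return final(x_new) - q, final(x_new) + q


# Synthetic i.i.d. data (an assumption for illustration): y = 1 + 2x + noise.
rng = random.Random(0)
xs = [rng.random() for _ in range(200)]
ys = [1 + 2 * x + rng.gauss(0, 0.5) for x in xs]
print("split conformal:", split_conformal(xs, ys, 0.5))
print("k-fold:         ", kfold_interval(xs, ys, 0.5))
```

The design difference is in how the data are spent: split conformal trains on only part of the sample, while the $k$-fold variant uses every observation both for fitting the final model and for a residual, which is consistent with the abstract's claim that the approach helps most when training data are limited.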

Regression-Enhanced Random Forests

Apr 23, 2019

Abstract: Random forest (RF) methodology is one of the most popular machine learning techniques for prediction problems. In this article, we discuss some cases where random forests may suffer and propose a novel generalized RF method, namely regression-enhanced random forests (RERFs), that can improve on RFs by borrowing the strength of penalized parametric regression. The algorithm for constructing RERFs and selecting their tuning parameters is described. Both a simulation study and real data examples show that RERFs have better predictive performance than RFs in important situations often encountered in practice. Moreover, RERFs may incorporate known relationships between the response and the predictors, and may give reliable predictions in extrapolation problems where predictions are required at points outside the domain of the training dataset. Strategies analogous to those described here can be used to improve other machine learning methods via combination with penalized parametric regression techniques.

* 12 pages, 5 figures
