Dakshina Ranjan Kisku

Improving Bias in Facial Attribute Classification: A Combined Impact of KL Divergence induced Loss Function and Dual Attention

Oct 15, 2024

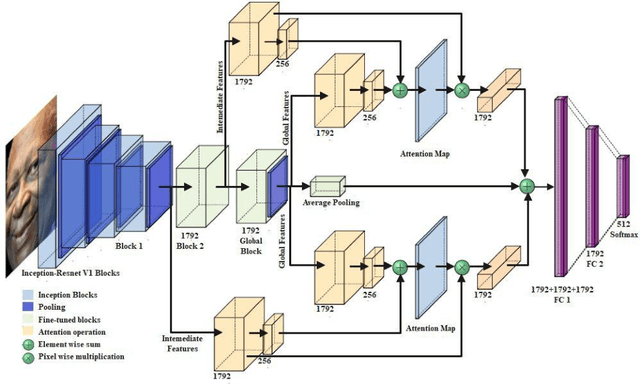

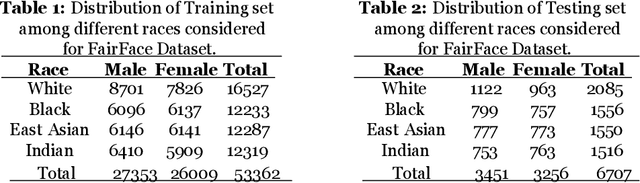

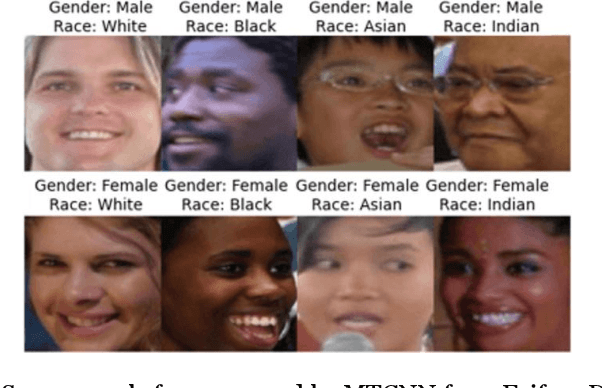

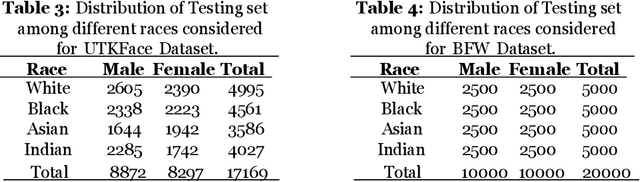

Abstract: Ensuring that AI-based facial recognition systems produce fair predictions and work equally well across all demographic groups is crucial. Earlier systems often exhibited demographic bias, particularly in gender and racial classification, with lower accuracy for women and individuals with darker skin tones. To tackle this issue and promote fairness in facial recognition, researchers have introduced several bias-mitigation techniques for gender classification and related algorithms. However, many challenges remain, such as data diversity, balancing fairness with accuracy, disparity, and bias measurement. This paper presents a method using a dual attention mechanism with a pre-trained Inception-ResNet V1 model, enhanced by KL-divergence regularization and a cross-entropy loss function. This approach reduces bias while improving accuracy and computational efficiency through transfer learning. The experimental results show significant improvements in both fairness and classification accuracy, providing promising advances in addressing bias and enhancing the reliability of facial recognition systems.
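The abstract above combines a cross-entropy loss with a KL-divergence regularizer but does not say which distributions the KL term compares. The sketch below is only an illustration under a stated assumption: the KL term penalizes the gap between the average predicted class distributions of two demographic groups. The function names, the `groups` encoding (0 or 1), and the weight `lam` are hypothetical and are not taken from the paper.

```python
import math

EPS = 1e-12  # guards against log(0)


def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true class."""
    return -sum(math.log(p[y] + EPS) for p, y in zip(probs, labels)) / len(labels)


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + EPS) / (qi + EPS)) for pi, qi in zip(p, q))


def mean_distribution(probs):
    """Average predicted class distribution over a set of samples."""
    n = len(probs)
    return [sum(p[k] for p in probs) / n for k in range(len(probs[0]))]


def regularized_loss(probs, labels, groups, lam=0.1):
    """Cross-entropy plus lam * KL between the two groups' mean predictions.

    Assumption (not from the paper): `groups` holds 0 or 1 per sample and
    both groups are non-empty.
    """
    ce = cross_entropy(probs, labels)
    group0 = [p for p, g in zip(probs, groups) if g == 0]
    group1 = [p for p, g in zip(probs, groups) if g == 1]
    kl = kl_divergence(mean_distribution(group0), mean_distribution(group1))
    return ce + lam * kl


# Usage: two samples, one per group, binary classification.
probs = [[0.9, 0.1], [0.2, 0.8]]
print(regularized_loss(probs, labels=[0, 1], groups=[0, 1], lam=0.1))
```

When both groups receive identical average predictions the KL term vanishes and the loss reduces to plain cross-entropy, which is the behaviour a fairness regularizer of this kind would aim for.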

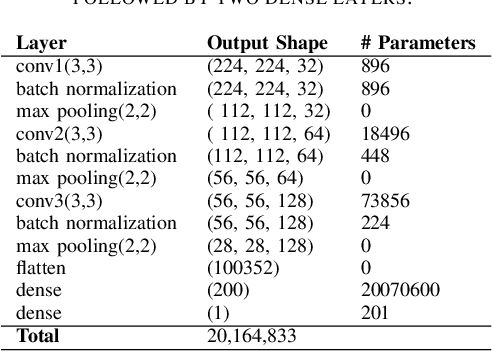

AI-based BMI Inference from Facial Images: An Application to Weight Monitoring

Oct 15, 2020

Abstract: Self-diagnostic image-based methods for healthy weight monitoring are gaining increased interest following the alarming trend of obesity. Only a handful of academic studies investigate AI-based methods for Body Mass Index (BMI) inference from facial images as a solution to healthy weight monitoring and management. To promote further research and development in this area, we evaluate and compare the performance of five deep-learning-based Convolutional Neural Network (CNN) architectures, namely VGG19, ResNet50, DenseNet, MobileNet, and lightCNN, for BMI inference from facial images. Experimental results on three publicly available BMI-annotated facial image datasets assembled from social media, namely the VisualBMI, VIP-Attributes, and Bollywood datasets, suggest the efficacy of deep learning methods for BMI inference from face images, with a minimum Mean Absolute Error (MAE) of $1.04$ obtained using ResNet50.
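For readers unfamiliar with the metric reported above, the following minimal sketch shows how BMI is defined and how a Mean Absolute Error between predicted and reference BMI values is computed. The function names and the sample numbers are illustrative only and do not come from the paper's experiments.

```python
def bmi(weight_kg, height_m):
    """Body Mass Index: weight in kilograms divided by height in metres squared."""
    return weight_kg / (height_m ** 2)


def mean_absolute_error(predicted, actual):
    """Average absolute difference between predicted and reference values."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


# Usage with made-up values: two predictions that are off by 1 and 2 BMI units.
print(mean_absolute_error([22.0, 30.0], [23.0, 28.0]))  # 1.5
```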

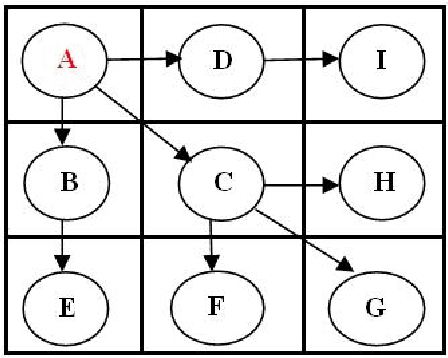

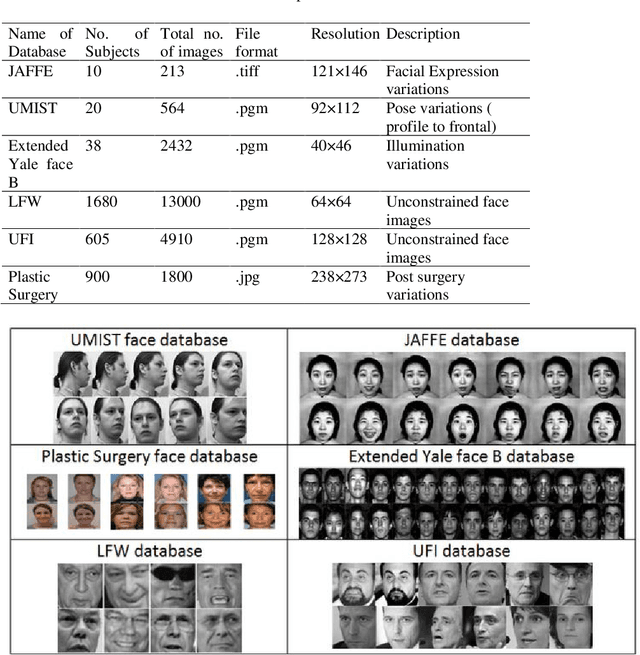

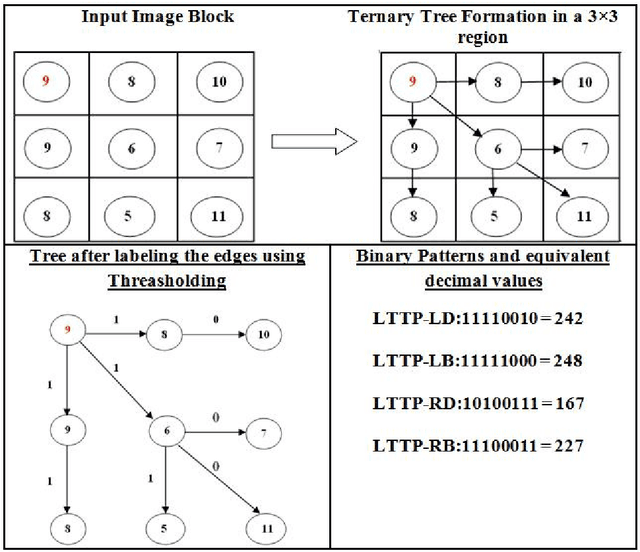

Face Identification using Local Ternary Tree Pattern based Spatial Structural Components

May 02, 2019

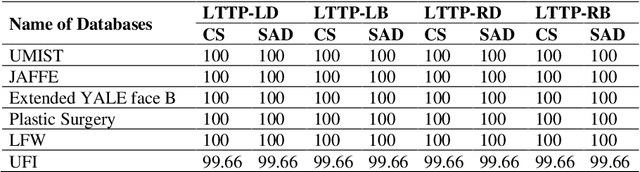

Abstract: This paper reports groundbreaking results of a face identification system which makes use of a novel local descriptor called the Local Ternary Tree Pattern (LTTP). Devising effective and feasible local descriptors for a face image plays an important role in the face identification task when the system must handle a wide variety of face images, including constrained, unconstrained and plastic surgery images. The LTTP is proposed to extract robust and discriminatory spatial features from a face image, as this descriptor can best describe the various structural components of a face. To extract the most useful features, a ternary tree is formed for each pixel with its eight neighbors. An LTTP pattern can be generated in four ways: LTTP Left Depth, LTTP Left Breadth, LTTP Right Depth and LTTP Right Breadth. The encoding schemes for generating these four patterns are simple and efficient in terms of both computational complexity and time complexity. The proposed face identification system is tested on six face databases, namely UMIST, JAFFE, extended Yale face B, Plastic Surgery, LFW and UFI. The experimental evaluation demonstrates outstanding results, which will have a long-term impact on designing face identification systems that consider a variety of faces captured under different environments.

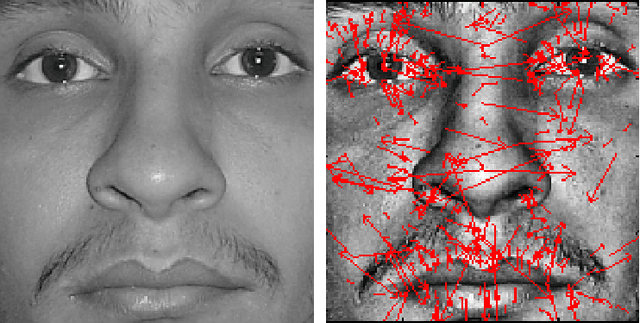

Ear Identification by Fusion of Segmented Slice Regions using Invariant Features: An Experimental Manifold with Dual Fusion Approach

Jul 21, 2010

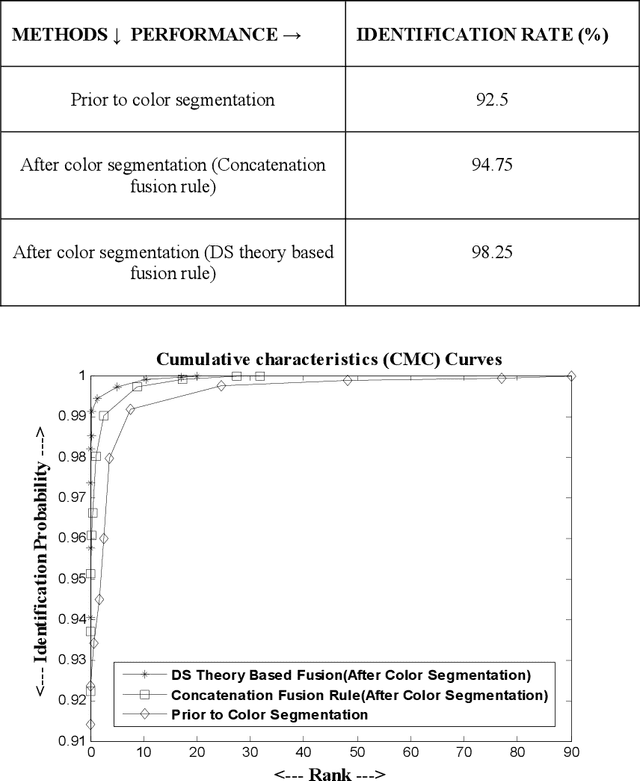

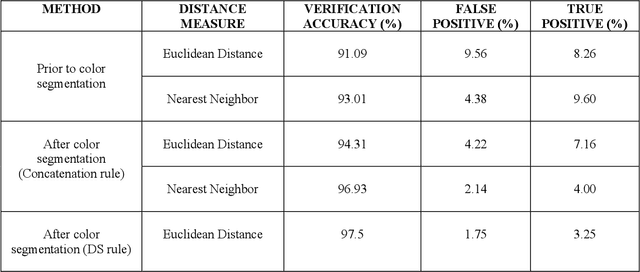

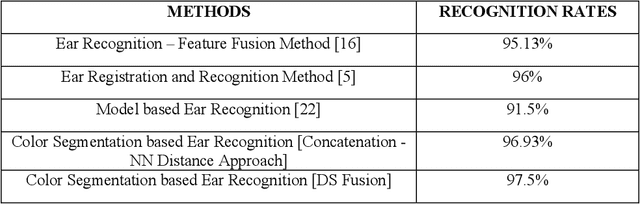

Abstract: This paper proposes a robust ear identification system developed by fusing SIFT features of color-segmented slice regions of an ear. The proposed method uses a Gaussian mixture model (GMM), built with a vector quantization algorithm, to model the ear, and applies K-L divergence within the GMM framework to record color similarity in the specified ranges by comparing a reference ear with a probe ear. SIFT features are then detected and extracted from each color slice region as part of invariant feature extraction. The extracted keypoints are then fused separately by two fusion approaches, namely concatenation and the Dempster-Shafer theory. Finally, the fusion approaches generate two independent augmented feature vectors, each of which is used separately for identification of individuals. The proposed identification technique is tested on the IIT Kanpur ear database of 400 individuals and is found to achieve 98.25% identification accuracy when a top-5 match criterion is set for each subject.
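The abstract above applies K-L divergence inside a GMM framework to compare color distributions. K-L divergence between two full mixtures has no closed form, so the sketch below illustrates only the simplest building block: the closed-form divergence between two univariate Gaussians. Treating a color range as a single Gaussian component is an assumption made here for illustration, not the paper's procedure.

```python
import math


def kl_gaussian(mu1, sigma1, mu2, sigma2):
    """Closed-form KL(N(mu1, sigma1^2) || N(mu2, sigma2^2)) for univariate Gaussians."""
    if sigma1 <= 0 or sigma2 <= 0:
        raise ValueError("standard deviations must be positive")
    return (
        math.log(sigma2 / sigma1)
        + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2 * sigma2 ** 2)
        - 0.5
    )


# Usage: identical components have zero divergence; shifting the mean raises it.
print(kl_gaussian(0.0, 1.0, 0.0, 1.0))  # 0.0
print(kl_gaussian(0.0, 1.0, 1.0, 1.0))  # 0.5
```

A small divergence between a reference component and a probe component would indicate similar color statistics in that slice region.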

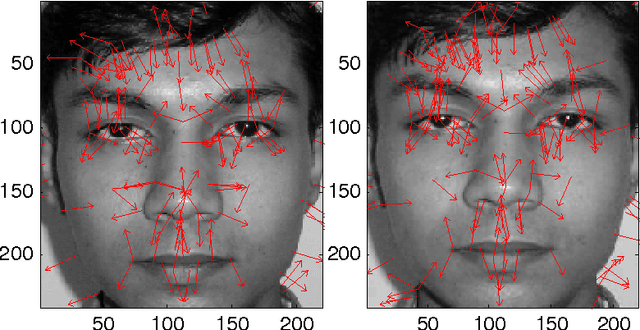

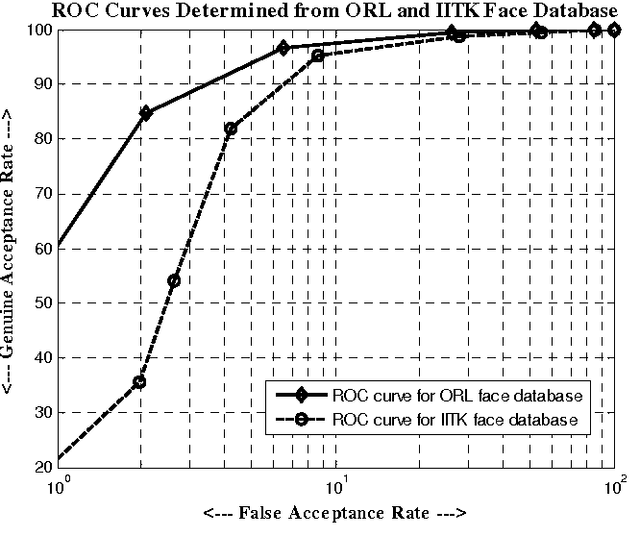

Maximized Posteriori Attributes Selection from Facial Salient Landmarks for Face Recognition

Apr 12, 2010

Abstract: This paper presents a robust and dynamic face recognition technique based on the extraction and matching of probabilistic graphs drawn on SIFT features related to independent face areas. The face matching strategy is based on matching individual salient facial graphs characterized by SIFT features connected to facial landmarks such as the eyes and the mouth. To reduce face matching errors, the Dempster-Shafer decision theory is applied to fuse the individual matching scores obtained from each pair of salient facial features. The proposed algorithm is evaluated on the ORL and the IITK face databases. The experimental results demonstrate the effectiveness and potential of the proposed face recognition technique, including in the case of partially occluded faces.

* 8 pages, 2 figures
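The Dempster-Shafer fusion of matching scores mentioned in the abstract above can be illustrated with Dempster's rule of combination. The sketch below is a generic implementation of that rule; the two-hypothesis frame (`genuine`, `impostor`) and the mass values are hypothetical, and how the paper maps matching scores to masses is not stated in the abstract.

```python
def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to its mass;
    the masses in each function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for set_b, mass_b in m1.items():
        for set_c, mass_c in m2.items():
            intersection = set_b & set_c
            if intersection:
                combined[intersection] = combined.get(intersection, 0.0) + mass_b * mass_c
            else:
                # Mass assigned to contradictory evidence.
                conflict += mass_b * mass_c
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; rule is undefined")
    # Renormalize by the non-conflicting mass.
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


# Usage: two matchers (e.g. eye region and mouth region) each give partial
# support to "genuine" and leave the rest uncommitted.
GENUINE = frozenset({"genuine"})
EITHER = frozenset({"genuine", "impostor"})
eyes = {GENUINE: 0.6, EITHER: 0.4}
mouth = {GENUINE: 0.7, EITHER: 0.3}
fused = dempster_combine(eyes, mouth)
print(fused[GENUINE])  # approximately 0.88
```

Combining the two sources raises the belief in `genuine` above either individual matcher, which is the intuition behind fusing per-landmark scores.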

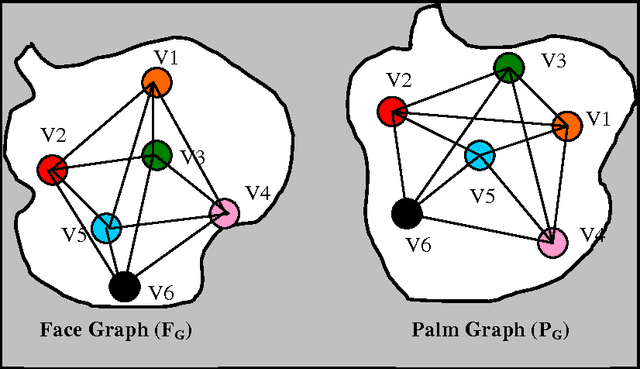

Feature Level Fusion of Face and Palmprint Biometrics by Isomorphic Graph-based Improved K-Medoids Partitioning

Apr 12, 2010

Abstract: This paper presents a feature level fusion approach for face and palmprint biometrics which uses the improved K-medoids clustering algorithm and isomorphic graphs. The partitioning around medoids (PAM) algorithm is used to partition the set of n invariant feature points of the face and palmprint images into k clusters. Partitioning the scale-invariant SIFT feature points of the face and palmprint images forms a number of clusters on both images. An isomorphic graph is then drawn on each cluster. In the next step, the most probable pair of graphs is searched for, using an iterative relaxation algorithm, among all possible isomorphic graphs for a pair of corresponding face and palmprint images. Finally, graphs are fused by pairing the isomorphic graphs into augmented groups, both by adding invariant SIFT points and by combining pairs of keypoint descriptors with the concatenation rule. Experimental results obtained from the extensive evaluation show that the proposed feature level fusion with the improved K-medoids partitioning algorithm improves the performance of the system and attains a high level of accuracy.

* 13 pages, 4 figures
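The partitioning step described in the abstract above relies on PAM. The following is a minimal sketch of a plain PAM-style swap search over 2-D points, not the paper's improved K-medoids variant, whose details the abstract does not give. The naive initialization (first k points) and the Euclidean distance are assumptions made for illustration.

```python
import math


def total_cost(points, medoids):
    """Sum of distances from every point to its nearest medoid."""
    return sum(min(math.dist(p, points[m]) for m in medoids) for p in points)


def pam(points, k):
    """Partition points into k clusters by greedy medoid swaps.

    Returns (medoid indices, cluster label per point).
    """
    medoids = list(range(k))  # naive initialization: first k points (assumption)
    best = total_cost(points, medoids)
    improved = True
    while improved:
        improved = False
        for i in range(k):
            for candidate in range(len(points)):
                if candidate in medoids:
                    continue
                # Try replacing the i-th medoid with a non-medoid point.
                trial = medoids[:i] + [candidate] + medoids[i + 1:]
                cost = total_cost(points, trial)
                if cost < best - 1e-12:
                    medoids, best = trial, cost
                    improved = True
    labels = [
        min(range(k), key=lambda j: math.dist(p, points[medoids[j]]))
        for p in points
    ]
    return medoids, labels


# Usage: two well-separated groups of keypoint coordinates (made-up values).
keypoints = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
medoids, labels = pam(keypoints, k=2)
print(sorted(medoids), labels)
```

Unlike k-means, the cluster centers here are always actual data points, which is convenient when each center must remain a real keypoint with an associated descriptor.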

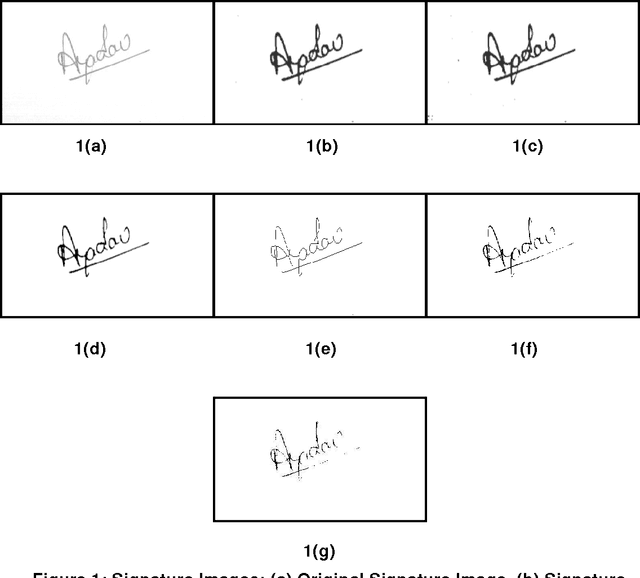

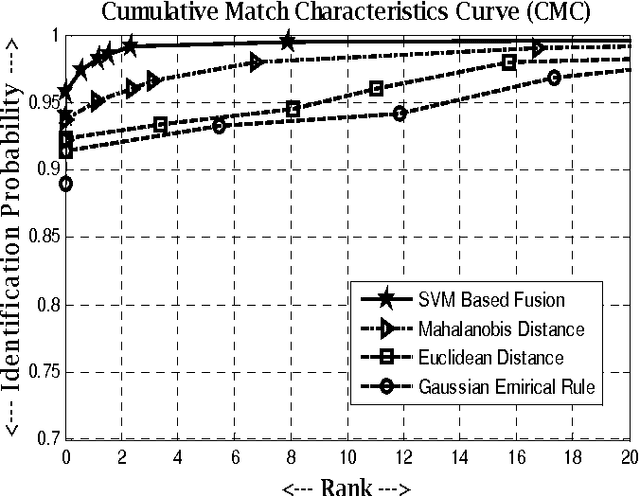

Offline Signature Identification by Fusion of Multiple Classifiers using Statistical Learning Theory

Mar 30, 2010

Abstract: This paper uses Support Vector Machines (SVM) to fuse multiple classifiers for an offline signature system. Global and local features are extracted from the signature images, and the signatures are verified with classifiers based on the Gaussian empirical rule, Euclidean distance and Mahalanobis distance. SVM is used to fuse the matching scores of these matchers. Finally, query signatures are recognized by comparing them with all signatures in the database. The proposed system is tested on a signature database containing 5400 offline signatures of 600 individuals, and the results are found to be promising.

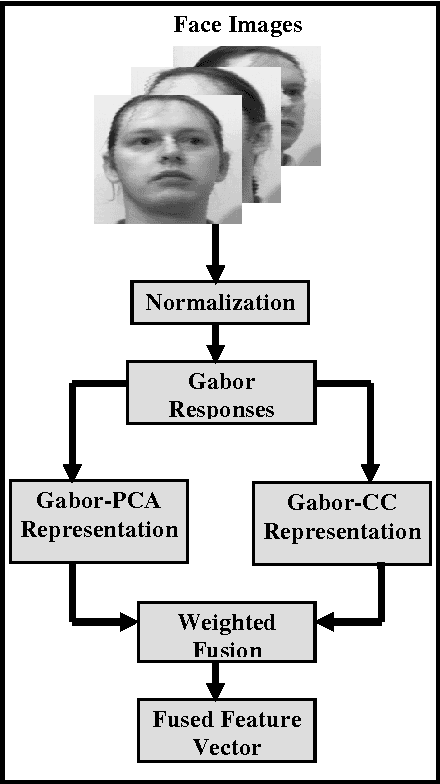

Robust multi-camera view face recognition

Mar 30, 2010

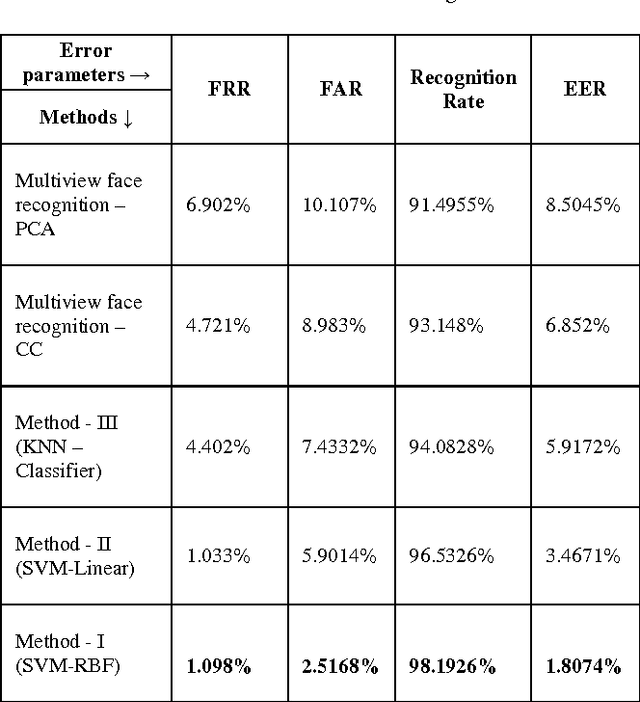

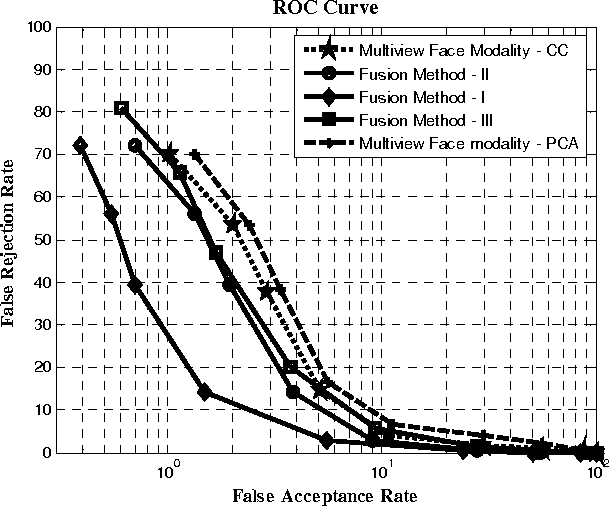

Abstract: This paper presents multi-appearance fusion of Principal Component Analysis (PCA) and a generalization of Linear Discriminant Analysis (LDA) for a multi-camera view offline face recognition (verification) system. The generalization of LDA is extended to establish correlations between the face classes in the transformed representation; this is called the canonical covariate. The proposed system uses Gabor filter banks to characterize facial features by spatial frequency, spatial locality and orientation, in order to compensate for variations in face instances caused by changes in illumination, pose and facial expression. Convolving the Gabor filter bank with face images produces Gabor face representations with high-dimensional feature vectors. PCA and the canonical covariate are then applied to the Gabor face representations to reduce the high-dimensional feature spaces to low-dimensional Gabor eigenfaces and Gabor canonical faces. The reduced eigenface vector and canonical face vector are fused using the weighted mean fusion rule. Finally, support vector machines (SVM) are trained with the augmented fused set of features and perform the recognition task. The system is evaluated on the UMIST face database, which consists of multi-view faces. The experimental results demonstrate the efficiency and robustness of the proposed system for multi-view face images, with high recognition rates. A complexity analysis of the proposed system is also presented at the end of the experimental results.
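The weighted mean fusion rule mentioned in the abstract above can be sketched as a convex combination of two feature vectors. The sketch assumes both vectors have already been reduced to the same length and uses a single hypothetical weight `w`; the weights actually used in the paper are not given in the abstract.

```python
def weighted_mean_fusion(eigen_vec, canonical_vec, w=0.5):
    """Fuse two equal-length feature vectors as w * a + (1 - w) * b."""
    if len(eigen_vec) != len(canonical_vec):
        raise ValueError("feature vectors must have the same length")
    if not 0.0 <= w <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [w * a + (1.0 - w) * b for a, b in zip(eigen_vec, canonical_vec)]


# Usage with made-up feature values.
print(weighted_mean_fusion([1.0, 2.0], [3.0, 4.0], w=0.25))  # [2.5, 3.5]
```

The fused vector would then serve as the input to a downstream classifier such as the SVM described in the abstract.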

Multibiometrics Belief Fusion

Feb 14, 2010

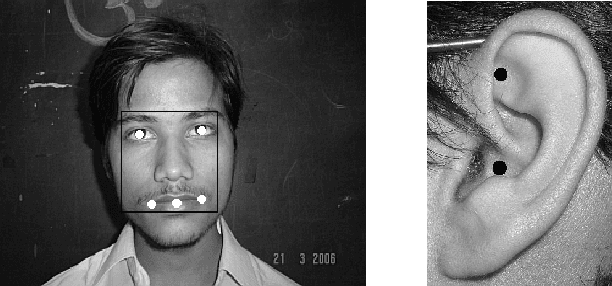

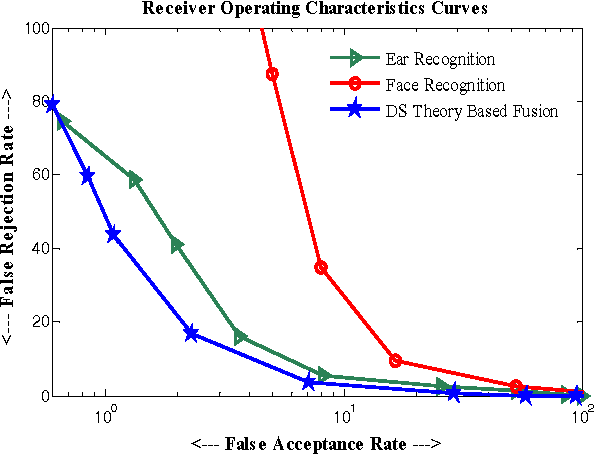

Abstract: This paper proposes a multimodal biometric system for face and ear biometrics that uses a Gaussian Mixture Model (GMM) with belief fusion of the estimated scores characterized by Gabor responses; the proposed fusion is accomplished by Dempster-Shafer (DS) decision theory. Face and ear images are convolved with Gabor wavelet filters to extract spatially enhanced Gabor facial features and Gabor ear features. GMM is then applied separately to the high-dimensional Gabor face and Gabor ear responses for quantitative measurements. The Expectation Maximization (EM) algorithm is used to estimate the density parameters in the GMM. This produces two sets of feature vectors, which are then fused using Dempster-Shafer theory. Experiments are conducted on a multimodal database containing face and ear images of 400 individuals. It is found that the use of Gabor wavelet filters along with GMM and DS theory can provide a robust and efficient multimodal fusion strategy.

* 4 pages, 3 figures
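The Gabor filtering step in the abstract above convolves images with oriented band-pass kernels. As a rough illustration, the sketch below builds the real part of a single 2-D Gabor kernel from the standard formula (a Gaussian envelope multiplied by a cosine carrier). The parameter names and default values are assumptions chosen for the example; the filter bank settings used in the paper are not given in the abstract.

```python
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=0.5, psi=0.0):
    """Real part of a 2-D Gabor kernel as a size x size list of lists.

    size       : odd kernel width/height in pixels
    sigma      : standard deviation of the Gaussian envelope
    theta      : orientation of the filter in radians
    wavelength : wavelength of the cosine carrier in pixels
    gamma      : spatial aspect ratio of the envelope
    psi        : phase offset of the carrier
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates into the filter's orientation.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# Usage: a small bank of four orientations at one scale.
bank = [gabor_kernel(5, sigma=2.0, theta=k * math.pi / 4, wavelength=4.0) for k in range(4)]
print(len(bank), len(bank[0]), len(bank[0][0]))  # 4 5 5
```

Convolving an image with each kernel in such a bank yields one response map per orientation and scale, which is why the resulting Gabor representations are high-dimensional.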

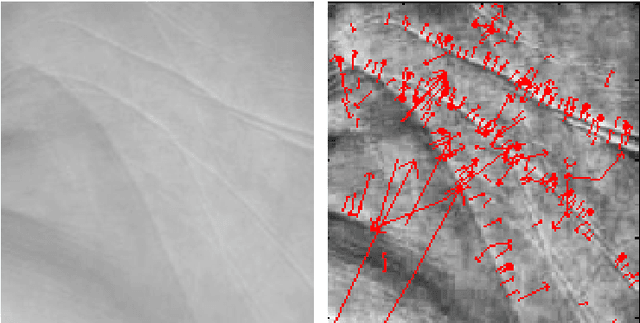

Feature Level Fusion of Face and Fingerprint Biometrics

Feb 12, 2010

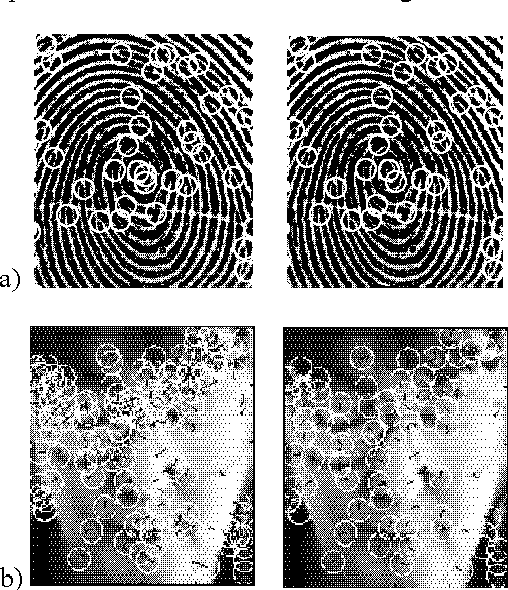

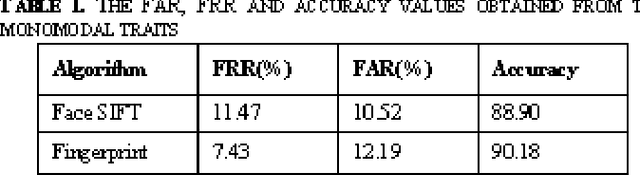

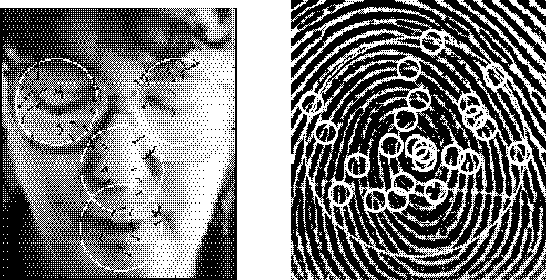

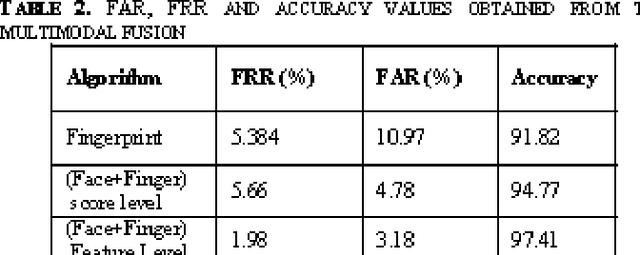

Abstract: The aim of this paper is to study fusion at the feature extraction level for face and fingerprint biometrics. The proposed approach fuses the two traits by extracting independent feature pointsets from the two modalities and making the two pointsets compatible for concatenation. Moreover, to handle the curse of dimensionality, the feature pointsets are reduced in dimension. Different feature reduction techniques are implemented, both before and after fusion of the feature pointsets, and the results are recorded. The fused feature pointsets for the database and the query face and fingerprint images are matched using techniques based on either point pattern matching or Delaunay triangulation. Comparative experiments are conducted on chimeric and real databases to assess the actual advantage of fusion performed at the feature extraction level in comparison to fusion at the matching score level.

* 6 pages, 7 figures, conference
