Jamuna Kanta Sing

Ear Identification by Fusion of Segmented Slice Regions using Invariant Features: An Experimental Manifold with Dual Fusion Approach

Jul 21, 2010

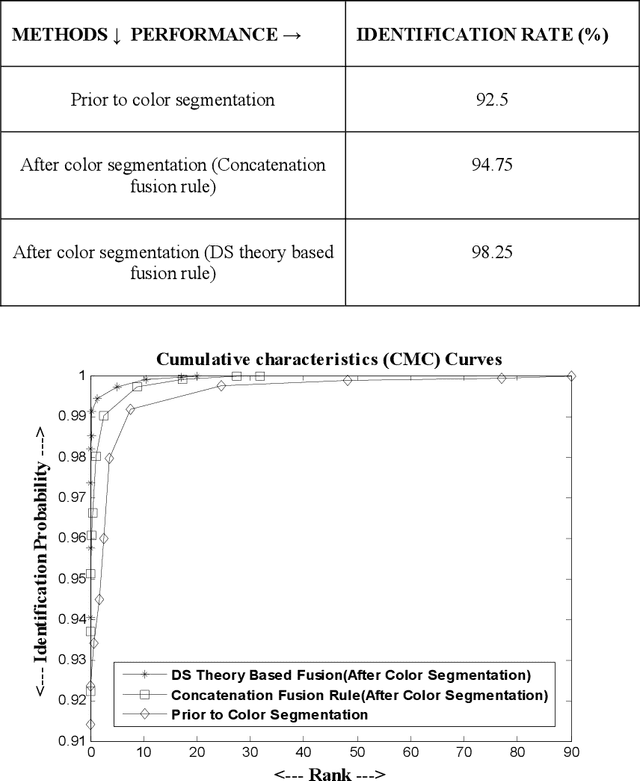

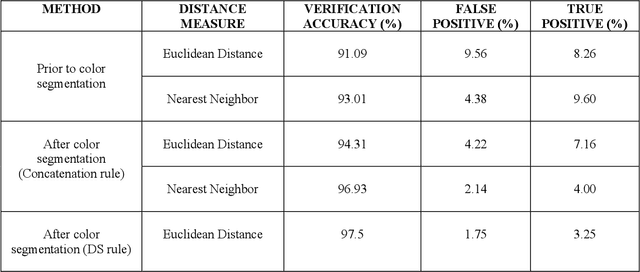

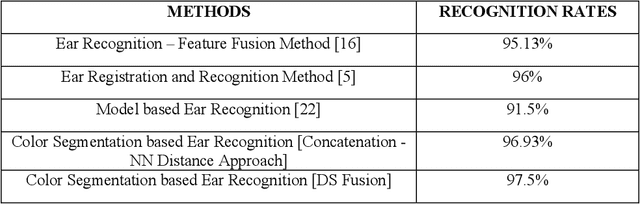

Abstract: This paper proposes a robust ear identification system developed by fusing SIFT features of color-segmented slice regions of an ear. The method builds an ear color model as a Gaussian mixture model (GMM) using a vector quantization algorithm, and applies K-L divergence within the GMM framework to record color similarity in specified ranges between a reference ear and a probe ear. SIFT features are then detected and extracted from each color slice region as the invariant feature extraction step. The extracted keypoints are fused separately by two approaches: concatenation and Dempster-Shafer theory. The two fusion approaches produce two independent augmented feature vectors, each of which is used separately to identify individuals. The technique is tested on the IIT Kanpur ear database of 400 individuals and achieves 98.25% identification accuracy when the top 5 matches are considered for each subject.

Maximized Posteriori Attributes Selection from Facial Salient Landmarks for Face Recognition

Apr 12, 2010

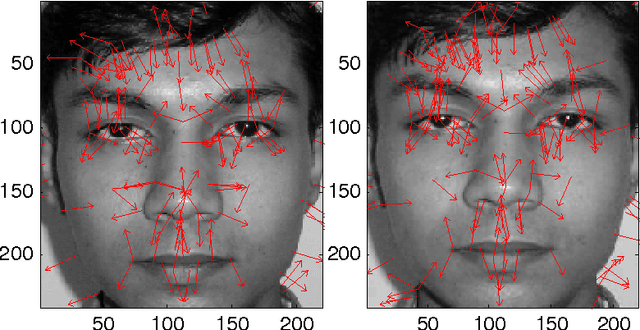

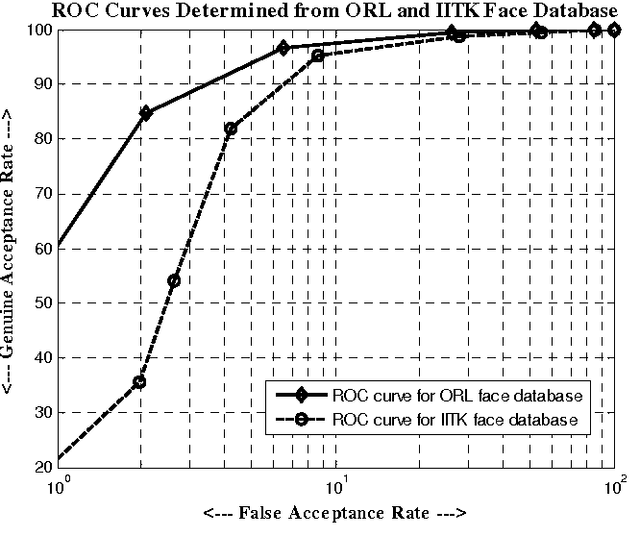

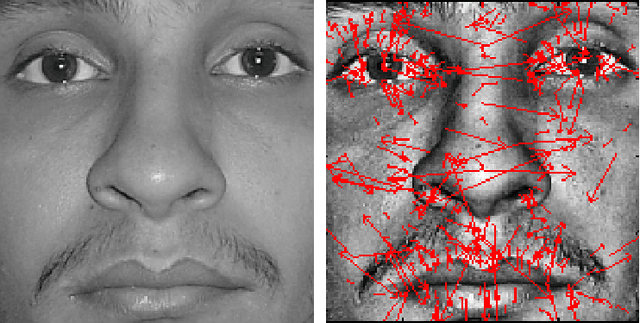

Abstract: This paper presents a robust and dynamic face recognition technique based on the extraction and matching of probabilistic graphs drawn on SIFT features related to independent face areas. The face matching strategy matches individual salient facial graphs, characterized by SIFT features connected to facial landmarks such as the eyes and the mouth. To reduce face matching errors, Dempster-Shafer decision theory is applied to fuse the individual matching scores obtained from each pair of salient facial features. The proposed algorithm is evaluated on the ORL and IITK face databases. The experimental results demonstrate the effectiveness and potential of the technique, including in the case of partially occluded faces.

* 8 pages, 2 figures
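
The score-level fusion step described in the abstract above can be illustrated with Dempster's rule of combination. The following is a minimal sketch, not the authors' implementation: the two-hypothesis frame (`genuine`, `impostor`), the `score_to_mass` mapping, and the reliability values are assumptions introduced purely for illustration.

```python
from itertools import product

GENUINE = frozenset({"genuine"})
IMPOSTOR = frozenset({"impostor"})
THETA = GENUINE | IMPOSTOR  # the whole frame, i.e. "don't know"


def score_to_mass(score, reliability):
    """Hypothetical mapping from a matching score in [0, 1] to a mass function.

    The share (1 - reliability) is left on THETA as uncommitted belief.
    """
    return {
        GENUINE: reliability * score,
        IMPOSTOR: reliability * (1.0 - score),
        THETA: 1.0 - reliability,
    }


def combine(m1, m2):
    """Dempster's rule: multiply masses, keep non-empty intersections,
    and renormalise by the non-conflicting mass (1 - K)."""
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; rule is undefined")
    return {s: w / (1.0 - conflict) for s, w in combined.items()}


# Example: fuse the scores of two facial regions (values are made up).
eyes = score_to_mass(0.8, reliability=0.9)
mouth = score_to_mass(0.6, reliability=0.8)
fused = combine(eyes, mouth)
decision = "genuine" if fused[GENUINE] > fused[IMPOSTOR] else "impostor"
```

More than two matchers can be fused by applying `combine` repeatedly, since Dempster's rule is associative and commutative.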

Feature Level Fusion of Face and Palmprint Biometrics by Isomorphic Graph-based Improved K-Medoids Partitioning

Apr 12, 2010

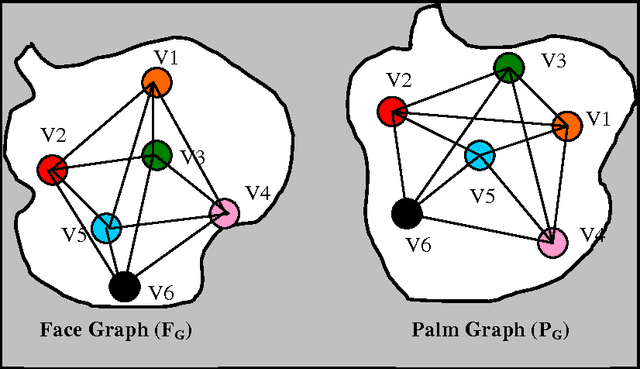

Abstract: This paper presents a feature level fusion approach for face and palmprint biometrics that uses an improved K-medoids clustering algorithm and isomorphic graphs. The partitioning around medoids (PAM) algorithm is used to partition the set of n invariant feature points of the face and palmprint images into k clusters. Partitioning the scale-invariant SIFT feature points in this way forms a number of clusters on both images, and an isomorphic graph is then drawn on each cluster. Next, the most probable pair of graphs is searched, using an iterative relaxation algorithm, among all possible isomorphic graphs for a pair of corresponding face and palmprint images. Finally, the graphs are fused by pairing the isomorphic graphs into augmented groups, both by adding the invariant SIFT points and by combining pairs of keypoint descriptors with the concatenation rule. Experimental results from an extensive evaluation show that the proposed feature level fusion with the improved K-medoids partitioning algorithm improves the accuracy of the system.

* 13 pages, 4 figures
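
The partitioning step in the abstract above relies on PAM. As a rough illustration, the sketch below implements the plain PAM swap heuristic on 2-D points; it is not the "improved" variant the paper refers to, and the naive initialisation (first `k` points), the Euclidean metric, and the toy coordinates are assumptions made for this example.

```python
import math


def total_cost(points, medoids):
    """Sum of distances from every point to its nearest medoid."""
    return sum(min(math.dist(p, points[m]) for m in medoids) for p in points)


def pam(points, k, max_iter=100):
    """Plain PAM swap phase: try replacing each medoid with each non-medoid
    and keep any swap that strictly lowers the total cost."""
    medoids = list(range(k))  # assumption: naive deterministic initialisation
    best = total_cost(points, medoids)
    for _ in range(max_iter):
        improved = False
        for i in range(k):
            for h in range(len(points)):
                if h in medoids:
                    continue
                candidate = medoids[:i] + [h] + medoids[i + 1:]
                cost = total_cost(points, candidate)
                if cost < best - 1e-12:
                    medoids, best = candidate, cost
                    improved = True
        if not improved:
            break
    # Assign each point to the index of its nearest medoid.
    labels = [
        min(range(k), key=lambda j: math.dist(p, points[medoids[j]]))
        for p in points
    ]
    return medoids, labels


# Toy keypoint locations forming two well-separated groups.
keypoints = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
medoids, labels = pam(keypoints, k=2)
```

Unlike k-means, each cluster centre here is always one of the input points, which is what allows a graph to be drawn on actual keypoints in each cluster.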

Offline Signature Identification by Fusion of Multiple Classifiers using Statistical Learning Theory

Mar 30, 2010

Abstract: This paper uses Support Vector Machines (SVM) to fuse multiple classifiers for an offline signature system. Global and local features are extracted from the signature images, and the signatures are verified using classifiers based on the Gaussian empirical rule, Euclidean distance, and Mahalanobis distance. An SVM is used to fuse the matching scores of these matchers. Finally, query signatures are recognized by comparing them with all signatures in the database. The proposed system is tested on a signature database containing 5400 offline signatures of 600 individuals, and the results are found to be promising.
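
The three matchers named in the abstract above can be sketched as simple distance computations against a per-writer reference model. This is only an illustration under stated assumptions: the feature vectors, the diagonal-covariance simplification of the Mahalanobis distance, and the three-sigma reading of the "Gaussian empirical rule" are not taken from the paper, and the SVM fusion stage is not shown.

```python
import math


def euclidean(x, mean):
    """Euclidean distance between a query feature vector and a reference mean."""
    return math.dist(x, mean)


def mahalanobis_diag(x, mean, var):
    """Mahalanobis distance assuming a diagonal covariance (per-feature variances)."""
    return math.sqrt(sum((xi - mi) ** 2 / vi for xi, mi, vi in zip(x, mean, var)))


def within_three_sigma(x, mean, var):
    """Empirical-rule check: every feature lies within three standard
    deviations of the reference mean (about 99.7% for a Gaussian)."""
    return all(abs(xi - mi) <= 3.0 * math.sqrt(vi) for xi, mi, vi in zip(x, mean, var))


# Hypothetical reference statistics for one writer and one query signature.
ref_mean = (0.0, 0.0)
ref_var = (4.0, 1.0)
query = (2.0, 0.0)

scores = (
    euclidean(query, ref_mean),
    mahalanobis_diag(query, ref_mean, ref_var),
    within_three_sigma(query, ref_mean, ref_var),
)
```

In a fusion setup like the one described, a score tuple of this kind would be the input vector handed to the SVM.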

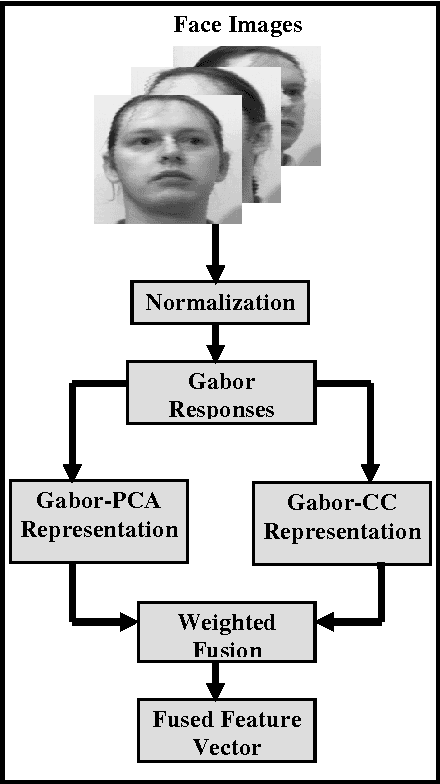

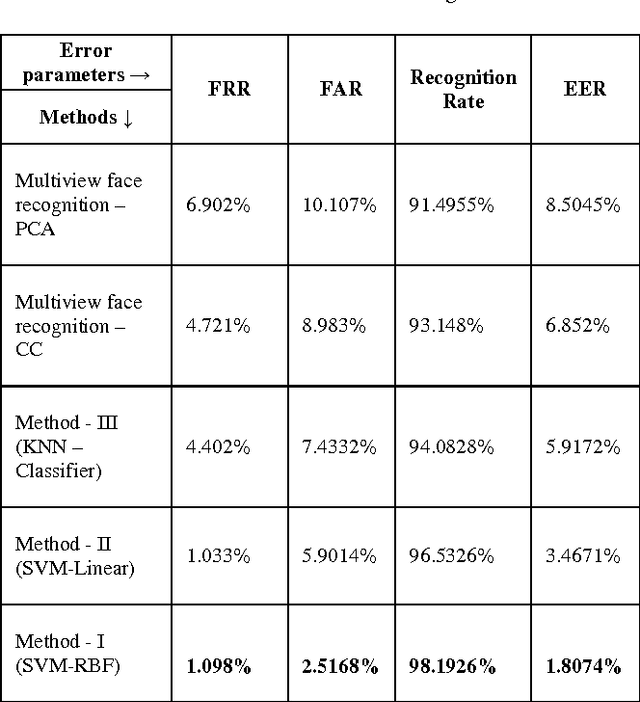

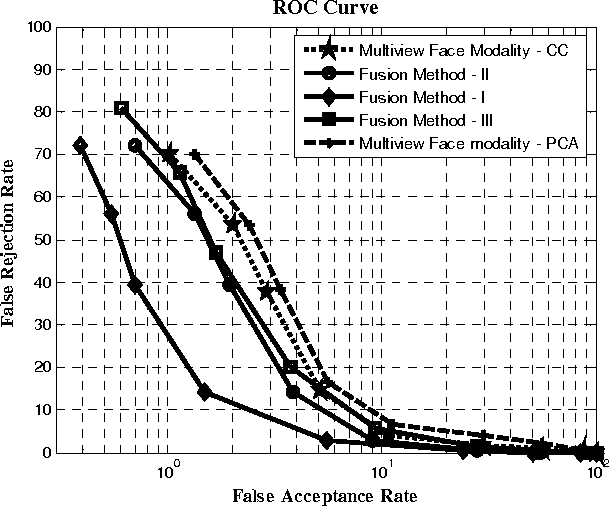

Robust multi-camera view face recognition

Mar 30, 2010

Abstract: This paper presents a multi-appearance fusion of Principal Component Analysis (PCA) and a generalization of Linear Discriminant Analysis (LDA) for a multi-camera view offline face recognition (verification) system. The generalization of LDA is extended to establish correlations between the face classes in the transformed representation; this is called the canonical covariate. The proposed system uses Gabor filter banks to characterize facial features by spatial frequency, spatial locality, and orientation, in order to compensate for variations in face instances caused by changes in illumination, pose, and facial expression. Convolving the Gabor filter bank with face images produces Gabor face representations with high-dimensional feature vectors. PCA and the canonical covariate are then applied to the Gabor face representations to reduce the high-dimensional feature spaces to low-dimensional Gabor eigenfaces and Gabor canonical faces. The reduced eigenface vector and canonical face vector are fused using a weighted mean fusion rule. Finally, support vector machines (SVM) are trained on the augmented fused feature set and perform the recognition task. The system is evaluated on the UMIST face database, which consists of multi-view faces. The experimental results demonstrate the efficiency and robustness of the proposed system for multi-view face images, with high recognition rates. A complexity analysis of the proposed system is also presented at the end of the experimental results.

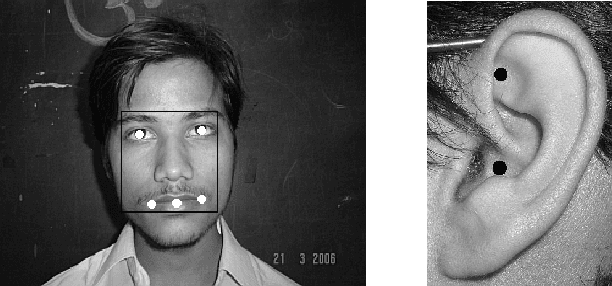

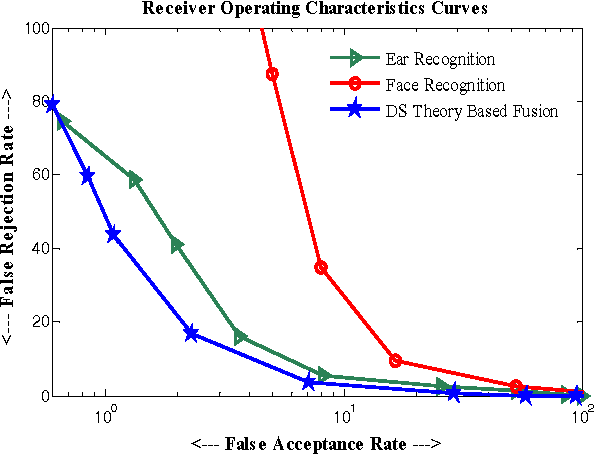

Multibiometrics Belief Fusion

Feb 14, 2010

Abstract: This paper proposes a multimodal biometric system for face and ear biometrics based on the Gaussian Mixture Model (GMM), with belief fusion of the estimated scores characterized by Gabor responses. The fusion is accomplished by Dempster-Shafer (DS) decision theory. Face and ear images are convolved with Gabor wavelet filters to extract spatially enhanced Gabor facial features and Gabor ear features. GMM is then applied separately to the high-dimensional Gabor face and Gabor ear responses for quantitative measurement. The Expectation Maximization (EM) algorithm is used to estimate the density parameters of the GMM. This produces two sets of feature vectors, which are then fused using Dempster-Shafer theory. Experiments are conducted on a multimodal database containing face and ear images of 400 individuals. The results indicate that Gabor wavelet filters combined with GMM and DS theory can provide a robust and efficient multimodal fusion strategy.

* 4 pages, 3 figures

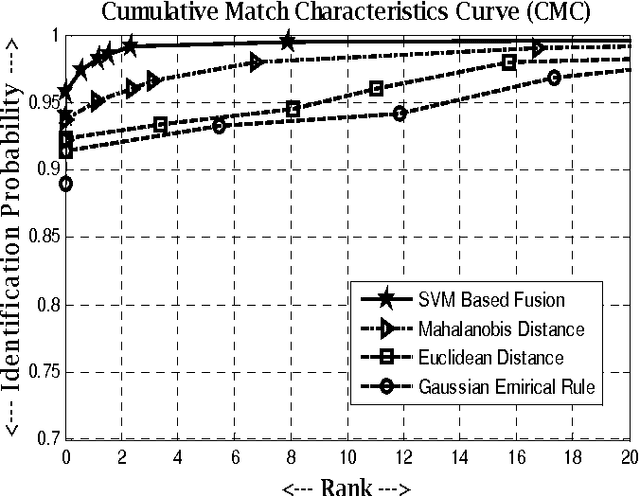

Fusion of Multiple Matchers using SVM for Offline Signature Identification

Feb 02, 2010

Abstract: This paper uses Support Vector Machines (SVM) to fuse multiple classifiers for an offline signature system. Global and local features are extracted from the signature images, and the signatures are verified using classifiers based on the Gaussian empirical rule, Euclidean distance, and Mahalanobis distance. An SVM is used to fuse the matching scores of these matchers. Finally, query signatures are recognized by comparing them with all signatures in the database. The proposed system is tested on a signature database containing 5400 offline signatures of 600 individuals, and the results are found to be promising.

Feature Level Fusion of Biometrics Cues: Human Identification with Doddingtons Caricature

Feb 02, 2010

Abstract: This paper presents a multimodal biometric system that combines fingerprint and ear biometrics. Feature sets based on Scale Invariant Feature Transform (SIFT) descriptors are extracted from the fingerprint and the ear and then fused. The fused set is encoded by a K-medoids partitioning approach, which leaves fewer feature points in the set. K-medoids partitions the whole dataset into clusters so as to minimize the error between the data points belonging to each cluster and that cluster's center. The reduced feature set is used for matching between two biometric sets. Matching scores are generated using the wolf-lamb user-dependent feature weighting scheme introduced by Doddington. The technique is tested to demonstrate its robust performance.

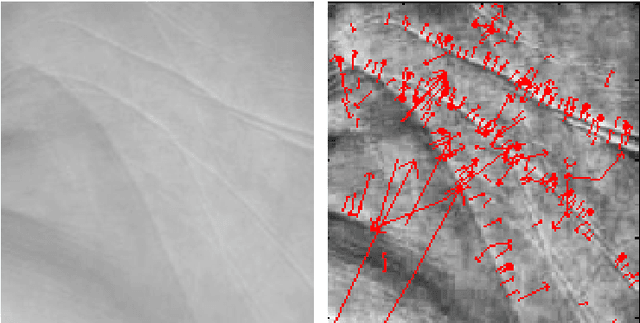

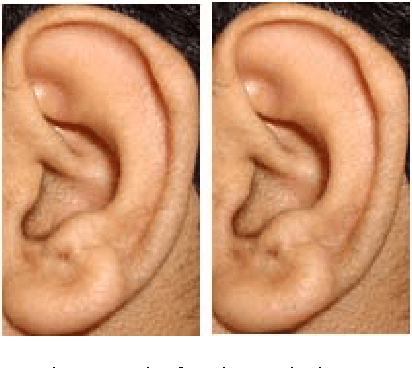

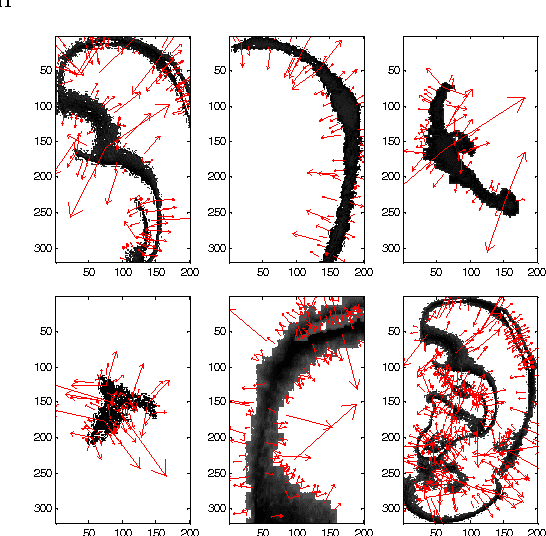

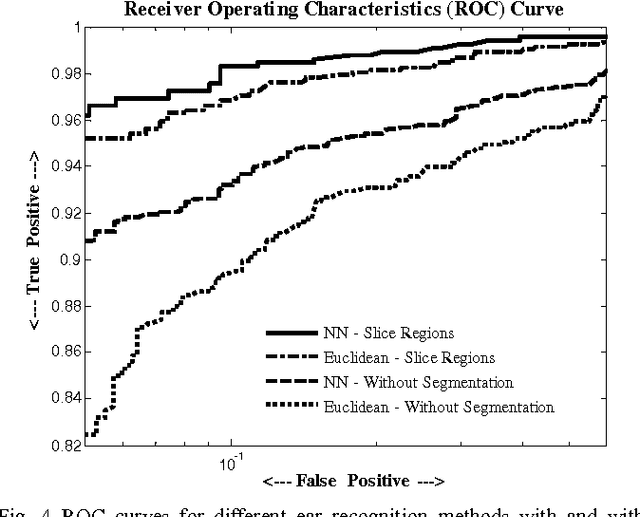

SIFT-based Ear Recognition by Fusion of Detected Keypoints from Color Similarity Slice Regions

Feb 02, 2010

Abstract: Ear biometrics is considered one of the most reliable and invariant biometric characteristics, in line with iris and fingerprint characteristics. In many cases, ear biometrics can be compared with face biometrics with respect to many physiological and texture characteristics. In this paper, a robust and efficient ear recognition system is presented that uses the Scale Invariant Feature Transform (SIFT) as the feature descriptor for structural representation of ear images. To make user authentication more robust, only regions whose color probabilities fall within certain ranges are considered for invariant SIFT feature extraction, and K-L divergence is used to maintain color consistency. The ear skin color model is formed by a Gaussian mixture model (GMM), with the ear color pattern clustered using vector quantization. K-L divergence is then applied within the GMM framework to record color similarity in the specified ranges between a reference model and a probe ear image. After the ear images are segmented into color slice regions, SIFT keypoints are extracted and an augmented vector of the extracted SIFT features is created for matching between a reference model and a probe ear image. The proposed technique has been tested on the IITK Ear database, and the experimental results show improved recognition accuracy when invariant features are extracted from color slice regions to maintain the robustness of the system.
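
To make the color-similarity step in the abstract above concrete, the sketch below computes the closed-form K-L divergence between two univariate Gaussians, as one might do for a single pair of mixture components. It is an illustration only: the divergence between full GMMs has no closed form and is usually approximated, and the `is_color_similar` helper, its threshold, and the sample intensity values are hypothetical.

```python
import math


def kl_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    """KL(p || q) for univariate Gaussians p = N(mu_p, sigma_p^2), q = N(mu_q, sigma_q^2)."""
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2.0 * sigma_q ** 2)
        - 0.5
    )


def is_color_similar(ref, probe, threshold=0.5):
    """Hypothetical check: treat two color components as similar when the
    symmetrised divergence stays below an illustrative threshold."""
    sym = kl_gaussian(*ref, *probe) + kl_gaussian(*probe, *ref)
    return sym < threshold


# (mean, standard deviation) of one color channel; values are made up.
reference_component = (120.0, 10.0)
probe_component = (123.0, 11.0)
similar = is_color_similar(reference_component, probe_component)
```

The divergence is zero only when both components coincide and is not symmetric, which is why the helper sums both directions before thresholding.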

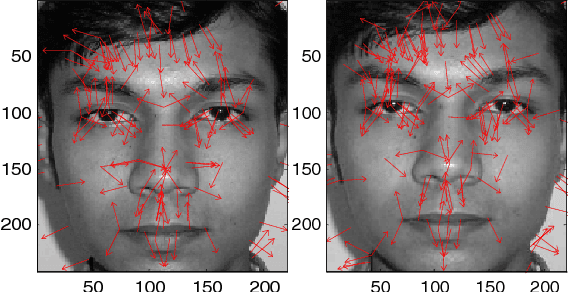

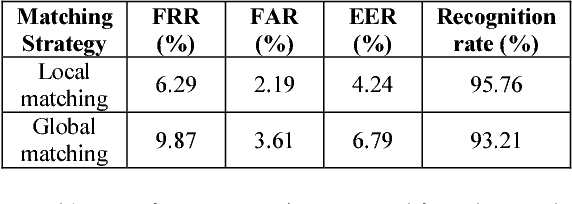

Face Recognition by Fusion of Local and Global Matching Scores using DS Theory: An Evaluation with Uni-classifier and Multi-classifier Paradigm

Feb 02, 2010

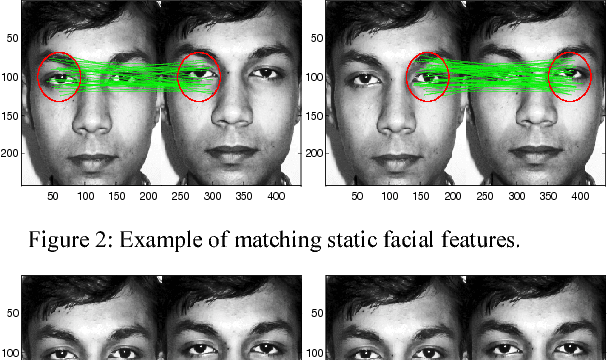

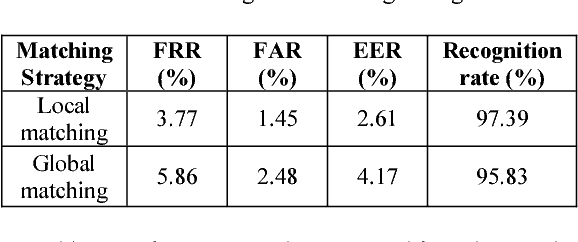

Abstract: Faces are highly deformable objects whose appearance may easily change over time. Not all face areas are subject to the same variability. Decoupling the information from independent areas of the face is therefore of paramount importance for improving the robustness of any face recognition technique. This paper presents a robust face recognition technique based on the extraction and matching of SIFT features related to independent face areas. Both global and local (recognition from parts) matching strategies are proposed. The local strategy matches individual salient facial SIFT features connected to facial landmarks such as the eyes and the mouth. In the global strategy, all SIFT features are combined to form a single feature. To reduce identification errors, Dempster-Shafer decision theory is applied to fuse the two matching techniques. The proposed algorithms are evaluated on the ORL and IITK face databases. The experimental results demonstrate the effectiveness and potential of the proposed face recognition techniques, including for partially occluded faces and faces with missing information.
