Dai Hoang Tran

BERT-CoQAC: BERT-based Conversational Question Answering in Context

Apr 23, 2021

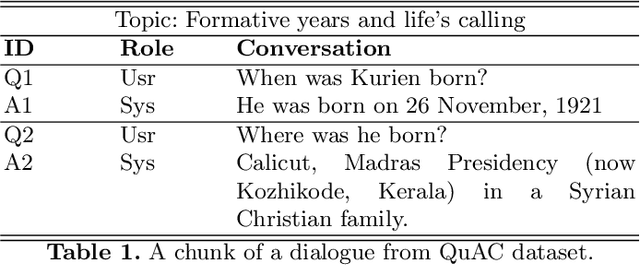

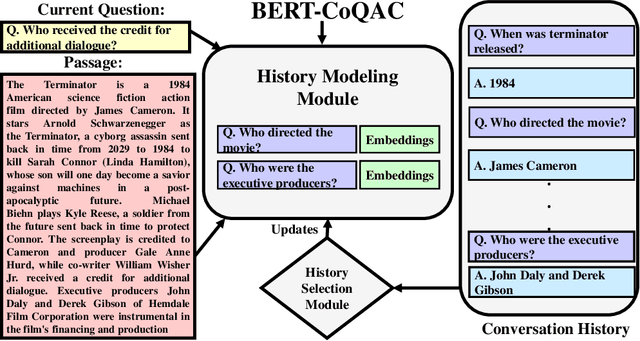

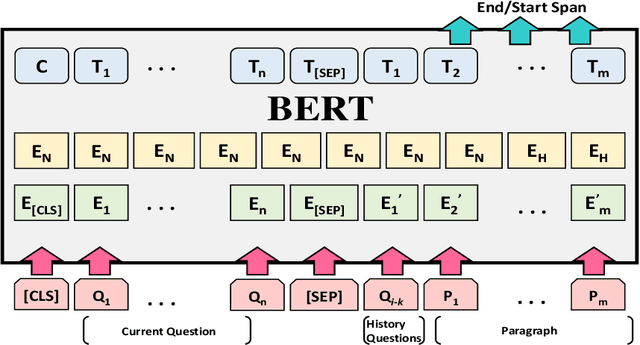

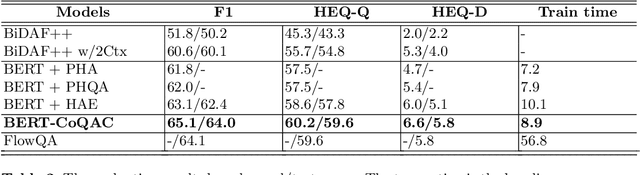

Abstract: As one promising way to inquire about particular information through a dialog with a bot, question answering dialog systems have recently gained increasing research interest. Designing interactive QA systems has always been a challenging task in natural language processing, and such systems are used as a benchmark to evaluate a machine's natural language understanding. However, these systems often struggle when users carry out question answering over multiple turns to seek more information based on what they have already learned. This gives rise to a more complicated form called Conversational Question Answering (CQA). CQA systems are often criticized for not understanding or utilizing the previous context of the conversation when answering questions. To address this research gap, in this paper we explore how to integrate conversational history into a neural machine comprehension system. First, we introduce a framework based on BERT, a publicly available pre-trained language model, for incorporating history turns into the system. Second, we propose a history selection mechanism that selects the turns that are relevant and contribute the most to answering the current question. Experimental results reveal that our framework is comparable in performance with the state-of-the-art models on the QuAC leaderboard. We also conduct a number of experiments showing the side effects of using the entire context, which brings in unnecessary information and noise signals and results in a decline in the model's performance.
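The abstract does not say how the relevance of a past turn to the current question is measured. As a rough illustration only, the sketch below scores each prior (question, answer) turn with bag-of-words cosine similarity, keeps the top-`k` turns in their original order, and prepends them to the current question in a BERT-style `[CLS] ... [SEP] ... [SEP]` layout. The scoring function, the `k` cutoff, and the helper names (`relevance`, `select_history`, `build_input`) are hypothetical assumptions, not the paper's mechanism; a real system would use BERT's own tokenizer and a learned or tuned selection criterion.

```python
from __future__ import annotations

import math
import re
from collections import Counter

# Hypothetical history-selection sketch; not the BERT-CoQAC implementation.

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for BERT's WordPiece tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())

def relevance(question: str, turn: str) -> float:
    """Cosine similarity of bag-of-words vectors (assumed relevance measure)."""
    q, t = Counter(tokenize(question)), Counter(tokenize(turn))
    dot = sum(count * t[word] for word, count in q.items())
    norm = math.sqrt(sum(c * c for c in q.values())) * math.sqrt(sum(c * c for c in t.values()))
    return dot / norm if norm else 0.0

def select_history(question: str, history: list[tuple[str, str]], k: int = 2) -> list[tuple[str, str]]:
    """Keep the k prior (question, answer) turns most relevant to the current
    question, returned in their original conversational order."""
    scored = [(relevance(question, f"{q} {a}"), i) for i, (q, a) in enumerate(history)]
    # Highest score first; ties go to the more recent turn (larger index).
    top = sorted(scored, key=lambda s: (-s[0], -s[1]))[:k]
    return [history[i] for i in sorted(i for _, i in top)]

def build_input(question: str, passage: str, history: list[tuple[str, str]], k: int = 2) -> str:
    """Prepend the selected turns to the question in a BERT-style pair layout."""
    parts = [f"{q} {a}" for q, a in select_history(question, history, k)] + [question]
    return f"[CLS] {' '.join(parts)} [SEP] {passage} [SEP]"
```

For example, given the history `("Who founded the band?", "John Smith in 1990.")`, `("What genre did they play?", "Mostly punk rock.")`, `("Did the band tour Europe?", "Yes, in 1995.")` and the question "When did the band tour Europe again?", `select_history(..., k=1)` keeps only the turn about touring Europe, dropping the turns that would add noise.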

Deep Conversational Recommender Systems: A New Frontier for Goal-Oriented Dialogue Systems

Apr 28, 2020

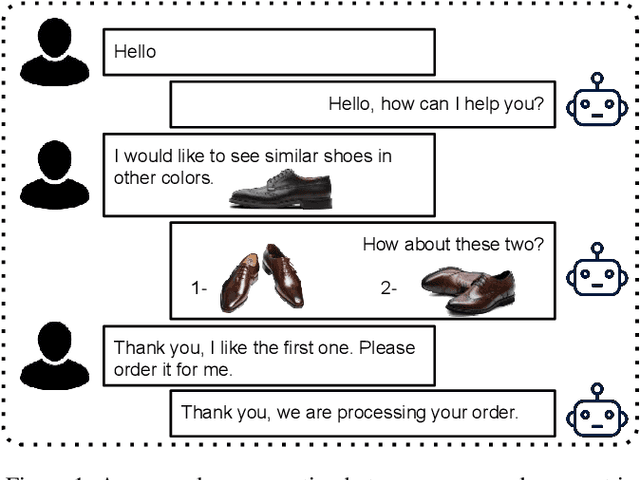

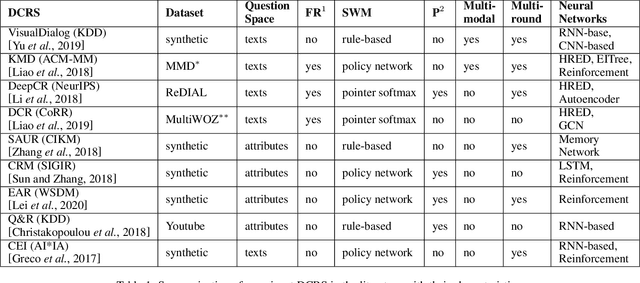

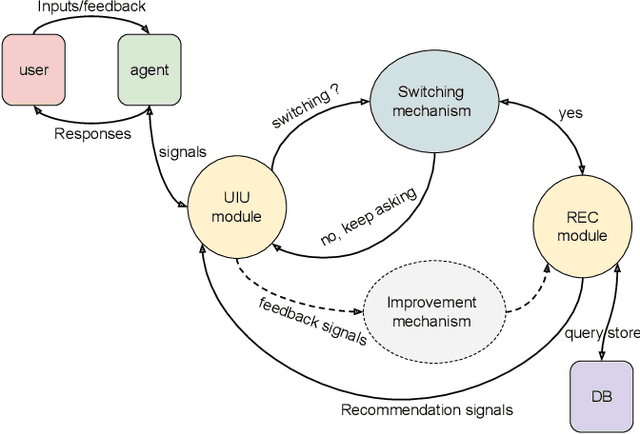

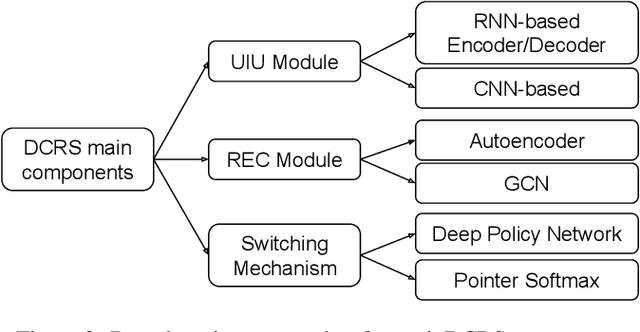

Abstract: In recent years, recommender systems that take advantage of natural language processing techniques have attracted much attention, and one of their applications is the Conversational Recommender System (CRS). Unlike traditional recommender systems that rely on content-based and collaborative filtering approaches, a CRS learns and models users' preferences through interactive dialogue. In this work, we summarize the recent evolution of CRS, where deep learning approaches have been applied and have produced fruitful results. We first analyze the research problems and present the key challenges in developing Deep Conversational Recommender Systems (DCRS), then present the current state of the field drawn from the most recent research, including the most common deep learning models that benefit DCRS. Finally, we discuss future directions for this vibrant area.

Deep Autoencoder for Recommender Systems: Parameter Influence Analysis

Dec 25, 2018

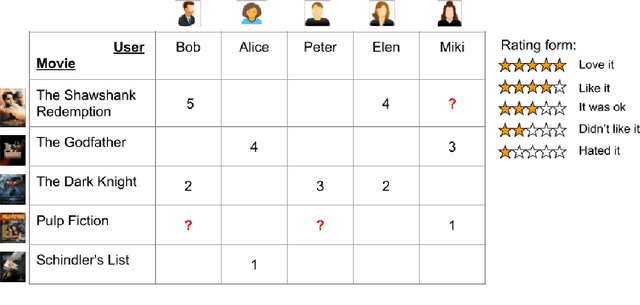

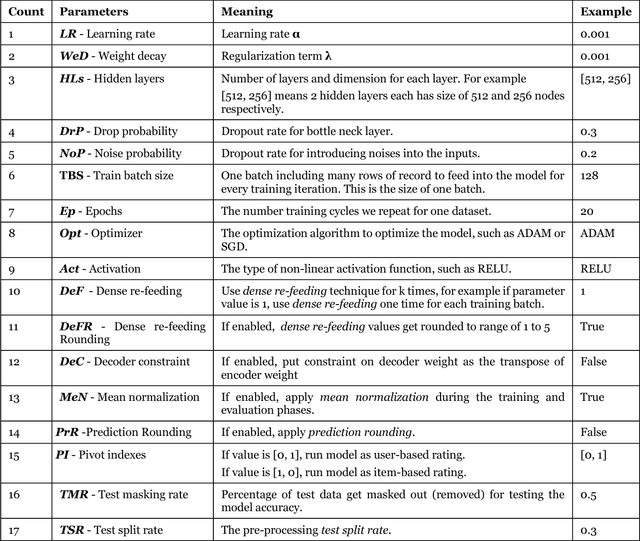

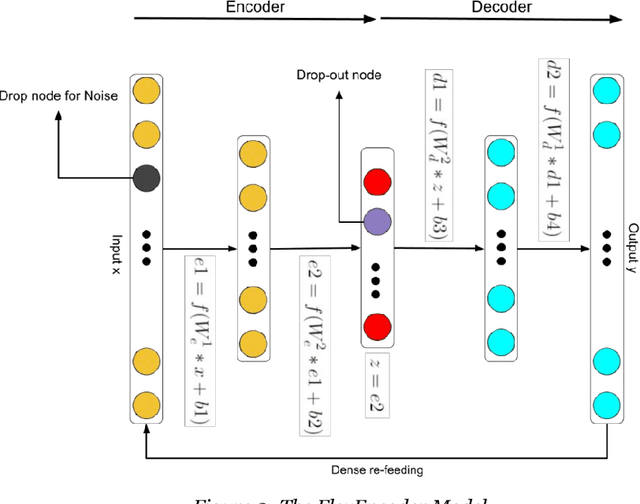

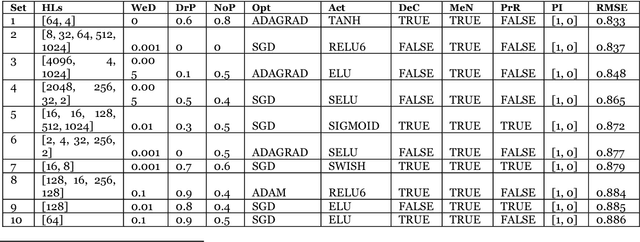

Abstract: Recommender systems have recently attracted many researchers in the deep learning community. The state-of-the-art deep neural network models used in recommender systems are typically multilayer perceptrons and deep autoencoders (DAE), among which DAE usually shows better performance due to its superior capability to reconstruct the inputs. However, we found that existing DAE recommendation systems with similar implementations on similar datasets use vastly different parameter settings. In this work, we have built a flexible DAE model, named FlexEncoder, that uses configurable parameters and unique features to analyse how parameters influence the prediction accuracy of recommender systems. This helps identify the best-performing parameters for a given dataset. Extensive evaluation is conducted on the MovieLens datasets, which drives our conclusions on the influence of DAE parameters. Specifically, we find that DAE parameters strongly affect the prediction accuracy of recommender systems, and that the effect transfers to similar, larger datasets. We release our code publicly, which could benefit both new DAE users, who can quickly understand how DAE works for recommendation systems, and experienced DAE users, for whom it becomes easier to tune the parameters on different datasets.